5_1_inozem_texts_for_tech_spec

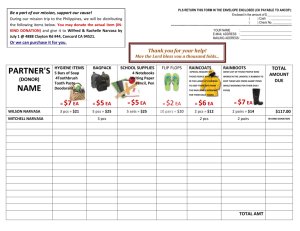
