Mixture of Gaussians

Mixture of Gaussians and RBF’s

“Computational Learning” course,

Semester II, 1998 / Dr. Nathan Intrator (email: nin@math.tau.ac.il)

Class notes from May 3rd 1998, by Chaim Linhart (email: chaim@math.tau.ac.il)

Contents:

(1) Mixture of Gaussians:

Introduction

Description of model

Main Property

Training (EM algorithm)

Application to NN:

1. Mixture of experts

2. Radial Basis Functions

(2) Radial Basis Functions:

Interpolation

NN Implementation

Representational Theorems

Training:

1. Supervised training

2. Two-stage hybrid training

Conclusion

Mixture of Gaussians

Introduction:

Our goal is to estimate the probability density of given data.

Previous solutions we saw to this problem included parametric and nonparametric methods. When using parametric estimators, we assume a certain

underlying distribution, and use the data to estimate its parameters. The

problem is that this distribution might be wrong. In non-parametric methods

there is no need to choose a model - we simply estimate the density at each

point according to the input vectors in its neighborhood. These methods

require a lot of data, and do not extrapolate well.

The mixture of Gaussians is a semi-parametric solution - we won’t give one

global model, but rather many local ones.

Description of model:

We are given N input vectors in $\mathbb{R}^d$. In order to estimate the input’s probability density, we will use a linear combination of M basis functions:

$$P(x) = \sum_{j=1}^{M} p(x/j)\, p(j)$$

P(x) is the density at point x;

p(x/j) is called the j-th component density;

p(j)’s are the mixing coefficients, i.e. the prior probability of a data vector

having been generated from component j of the mixture.

Constraints:

(1) $0 \le p(j) \le 1$

(2) $\sum_{j=1}^{M} p(j) = 1$

(3) $\int p(x/j)\, dx = 1$

Main Property:

For many choices of component density functions, the mixture model can

approximate any continuous density to arbitrary accuracy, provided that:

1) M is sufficiently large,

2) the parameters of the model are chosen correctly.

We shall discuss only the case of mixture of Gaussians:

$$p(x/j) = \frac{1}{(2\pi\sigma_j^2)^{d/2}} \exp\left\{ -\frac{\|x - \mu_j\|^2}{2\sigma_j^2} \right\}$$

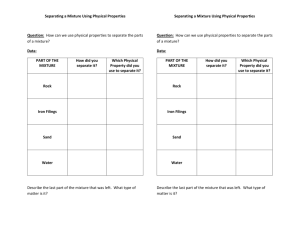
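The model above can be evaluated directly. The following is a minimal Python sketch, assuming isotropic Gaussian components with center $\mu_j$ and width $\sigma_j$; the function names `gaussian_component` and `mixture_density` are illustrative and do not come from the notes.

```python
import math

def gaussian_component(x, mu, sigma):
    """Isotropic Gaussian component density p(x/j) with center mu and width sigma."""
    d = len(x)
    sq_dist = sum((xi - mi) ** 2 for xi, mi in zip(x, mu))
    norm = (2.0 * math.pi * sigma ** 2) ** (d / 2.0)
    return math.exp(-sq_dist / (2.0 * sigma ** 2)) / norm

def mixture_density(x, priors, centers, sigmas):
    """P(x) = sum_j p(x/j) p(j); priors are assumed to be non-negative and sum to 1."""
    return sum(p * gaussian_component(x, mu, s)
               for p, mu, s in zip(priors, centers, sigmas))
```

For instance, `mixture_density([0.0], [0.3, 0.7], [[-1.0], [2.0]], [0.5, 1.5])` evaluates a two-component mixture on the real line at x = 0.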

Training:

In order to determine the parameters of the mixture model, p(j), $\mu_j$ and $\sigma_j$, we will use the maximum likelihood technique.

$$E = -\ln L = -\sum_{n=1}^{N} \ln P(x^n) = -\sum_{n=1}^{N} \ln\left\{ \sum_{j=1}^{M} p(x^n/j)\, p(j) \right\}$$

The maximum likelihood L is achieved when E is minimal.

Analytical solution (using Gaussian definition and Bayes theorem):

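(I) $$\frac{\partial E}{\partial \mu_j} = \sum_{n=1}^{N} p(j/x^n)\, \frac{\mu_j - x^n}{\sigma_j^2} = 0$$

(II) $$\frac{\partial E}{\partial \sigma_j} = \sum_{n=1}^{N} p(j/x^n) \left\{ \frac{d}{\sigma_j} - \frac{\|x^n - \mu_j\|^2}{\sigma_j^3} \right\} = 0$$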

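(III) Define the mixing coefficients as the “softmax function” (also called “normalized exponential”, or Gibbs distribution):

$$p(j) = \frac{\exp(\gamma_j)}{\sum_{k=1}^{M} \exp(\gamma_k)} \qquad (\forall j:\ \gamma_j \ge 0)$$

$$\frac{\partial E}{\partial \gamma_j} = -\sum_{n=1}^{N} \left\{ p(j/x^n) - p(j) \right\} = 0$$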

This system of equations (I-III) leads to the following solution:

(1) $$\mu_j = \frac{\sum_n p(j/x^n)\, x^n}{\sum_n p(j/x^n)}$$

(2) $$\sigma_j^2 = \frac{1}{d}\, \frac{\sum_n p(j/x^n)\, \|x^n - \mu_j\|^2}{\sum_n p(j/x^n)}$$

(3) $$p(j) = \frac{1}{N} \sum_n p(j/x^n)$$

How can we find the values of these inter-dependent parameters?

One way is using standard non-linear optimizations;

Another practical solution is the EM (Expectation Maximization) algorithm:

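1. Guess initial values for the parameters: $\mu_j^{old}$, $\sigma_j^{old}$, $p(j)^{old}$;

2. Calculate “new” values $\mu_j^{new}$, $\sigma_j^{new}$, $p(j)^{new}$ by evaluating (1)-(3) using the “old” values (the posteriors $p(j/x^n)$ are obtained from the “old” parameters via Bayes’ theorem);

3. $\mu_j^{old} \leftarrow \mu_j^{new}$, $\sigma_j^{old} \leftarrow \sigma_j^{new}$, $p(j)^{old} \leftarrow p(j)^{new}$;

4. Back to step 2, until convergence.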
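The iteration above can be sketched in Python as follows. This is only an illustration under stated assumptions: the components are isotropic Gaussians, the names `em_step`, `fit_em` and `neg_log_likelihood` are hypothetical, the stopping rule (change in E below `tol`) is one possible convergence test, and the width update uses the newly computed center, as is commonly done.

```python
import math

def gaussian(x, mu, sigma):
    """Isotropic Gaussian component density p(x/j)."""
    d = len(x)
    sq = sum((a - b) ** 2 for a, b in zip(x, mu))
    return math.exp(-sq / (2 * sigma ** 2)) / (2 * math.pi * sigma ** 2) ** (d / 2)

def neg_log_likelihood(data, priors, centers, sigmas):
    """E = -sum_n ln sum_j p(x^n/j) p(j)."""
    return -sum(math.log(sum(p * gaussian(x, mu, s)
                             for p, mu, s in zip(priors, centers, sigmas)))
                for x in data)

def em_step(data, priors, centers, sigmas):
    """One EM iteration: evaluate updates (1)-(3) from the old parameters."""
    M, N, d = len(priors), len(data), len(data[0])
    # Posteriors p(j/x^n) via Bayes' theorem, using the old parameters.
    post = []
    for x in data:
        joint = [priors[j] * gaussian(x, centers[j], sigmas[j]) for j in range(M)]
        total = sum(joint)
        post.append([v / total for v in joint])
    new_priors, new_centers, new_sigmas = [], [], []
    for j in range(M):
        resp = sum(post[n][j] for n in range(N))
        mu = [sum(post[n][j] * data[n][i] for n in range(N)) / resp for i in range(d)]
        # Assumption: the width update uses the newly computed center mu.
        var = sum(post[n][j] * sum((a - b) ** 2 for a, b in zip(data[n], mu))
                  for n in range(N)) / (d * resp)
        new_centers.append(mu)
        new_sigmas.append(math.sqrt(var))
        new_priors.append(resp / N)
    return new_priors, new_centers, new_sigmas

def fit_em(data, priors, centers, sigmas, max_iter=200, tol=1e-9):
    """Repeat EM steps until E stops decreasing by more than tol."""
    error = neg_log_likelihood(data, priors, centers, sigmas)
    for _ in range(max_iter):
        priors, centers, sigmas = em_step(data, priors, centers, sigmas)
        new_error = neg_log_likelihood(data, priors, centers, sigmas)
        if error - new_error < tol:
            break
        error = new_error
    return priors, centers, sigmas
```

The sketch does not guard against a component collapsing onto a single data point (its width shrinking to zero), which a practical implementation would need to handle.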

The EM algorithm converges to a local minimum of E, not necessarily the global one. It is useful in many

situations, not only the one described above.

For example, suppose L($\mu$,$\sigma$) is the likelihood, as a function of the expectation $\mu$ and the standard deviation $\sigma$. Under some convexity assumptions, we can maximize L by guessing $\mu$, then finding the $\sigma$ that maximizes L for this $\mu$, then freezing the $\sigma$ we found and finding the $\mu$ that maximizes L, and so on.
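As a small illustration of this alternating scheme, the sketch below assumes a one-dimensional Gaussian likelihood and starts from a guess for the mean. For this simple model each partial maximization has a closed form, so the procedure settles after two rounds. The function names are hypothetical.

```python
import math

def log_likelihood(data, mu, sigma):
    """ln L(mu, sigma) for i.i.d. samples from a one-dimensional Gaussian."""
    n = len(data)
    return (-n * math.log(sigma) - 0.5 * n * math.log(2 * math.pi)
            - sum((x - mu) ** 2 for x in data) / (2 * sigma ** 2))

def alternate_maximization(data, mu_guess, rounds=5):
    """Alternately maximize L over sigma (mu frozen) and over mu (sigma frozen)."""
    n = len(data)
    mu, history = mu_guess, []
    for _ in range(rounds):
        # Best sigma for the frozen mu.
        sigma = math.sqrt(sum((x - mu) ** 2 for x in data) / n)
        # Best mu for the frozen sigma (the sample mean, whatever sigma is).
        mu = sum(data) / n
        history.append(log_likelihood(data, mu, sigma))
    return mu, sigma, history
```

The recorded `history` of log-likelihood values never decreases from one round to the next, which is the property the alternating scheme relies on.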

Application to NN:

(1) Mixture of Experts:

(Jacobs & Jordan; Hinton & Nowlan ’91)

Consider the classification problem, when the data is distributed in

different areas of the space (see figure below).

We’ll construct an “expert” network for each region, and another

“gating” network that will decide which expert to use on a given input

(i.e., how much weight to give to each of the other networks).

A possible error function for this network is:

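$$E = -\sum_{n=1}^{N} \ln\left\{ \sum_{i=1}^{M} \alpha_i(x^n)\, \phi_i(t^n/x^n) \right\}$$

where:

$$\phi_i(t/x) = \frac{1}{(2\pi)^{c/2}} \exp\left\{ -\frac{\|t - \mu_i(x)\|^2}{2} \right\}$$

is a Gaussian function of the Mahalanobis distance of the target value t from the i-th Gaussian ($\sigma_i = 1$), $\alpha_i(x^n)$ is the output of the gating network for the n-th input vector $x^n$, and c is the number of classes.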
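The error function can be computed from the network outputs alone. The sketch below assumes that the gating outputs and the expert outputs have already been evaluated for every input vector, and that the center of the i-th Gaussian is the output of the i-th expert. The function names and the argument layout are illustrative.

```python
import math

def expert_density(t, mu):
    """Unit-variance Gaussian of the target t around an expert's output mu."""
    c = len(t)
    sq = sum((a - b) ** 2 for a, b in zip(t, mu))
    return math.exp(-sq / 2.0) / (2.0 * math.pi) ** (c / 2.0)

def mixture_of_experts_error(gate_outputs, expert_outputs, targets):
    """E = -sum_n ln sum_i (gate output i) * (density of t^n under expert i).

    gate_outputs[n][i]   : gating weight of expert i for input n
    expert_outputs[n][i] : output vector of expert i for input n
    targets[n]           : target vector for input n
    """
    error = 0.0
    for alphas, mus, t in zip(gate_outputs, expert_outputs, targets):
        error -= math.log(sum(a * expert_density(t, mu) for a, mu in zip(alphas, mus)))
    return error
```

The error is lower when the gating network gives more weight to the expert whose output is closest to the target, which is what drives the experts to specialize.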

In this architecture, all the networks are trained simultaneously, and the

error is propagated according to the output of the gating net.

It is possible to replace the simple network “Net i” by a mixture of

experts, and so on, recursively. The result is a complex network, which is

a hierarchy of mixture-of-experts networks. The EM algorithm can be

expanded to deal with this hierarchical structure.

(2) Radial Basis Functions

Radial Basis Functions

Interpolation:

(Powell ’87)

Our goal is to perform an exact interpolation.

Formally, given N vectors in $\mathbb{R}^d$: $x_1, ..., x_N$, and their corresponding target values: $t_1, ..., t_N$, we seek a projection $h: \mathbb{R}^d \to \mathbb{R}$, so that: $\forall\, 1 \le i \le N:\ h(x_i) = t_i$.

We can use N basis functions (one for each vector), and combine them

linearly. The basis functions used typically are Gaussians (so the solution is

similar to what we have seen earlier, in “Mixture of Gaussians”):

$$\phi_i(x) = \exp\left\{ -\frac{\|x - x_i\|^2}{2\sigma^2} \right\}$$

(Notice that the center of the i-th Gaussian is exactly at $x_i$)
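With one Gaussian per data point, the interpolation conditions form an N×N linear system in the combination weights. A minimal sketch, assuming distinct input vectors and a common width `sigma` chosen by the user; the small Gaussian-elimination solver and all function names are illustrative, not part of the notes.

```python
import math

def gaussian_basis(x, center, sigma):
    """Gaussian basis function centered at one of the data points."""
    sq = sum((a - b) ** 2 for a, b in zip(x, center))
    return math.exp(-sq / (2.0 * sigma ** 2))

def solve_linear_system(matrix, rhs):
    """Gaussian elimination with partial pivoting, on a copy of the inputs."""
    n = len(rhs)
    a = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(a[r][col]))
        a[col], a[pivot] = a[pivot], a[col]
        for r in range(col + 1, n):
            factor = a[r][col] / a[col][col]
            for c in range(col, n + 1):
                a[r][c] -= factor * a[col][c]
    w = [0.0] * n
    for r in range(n - 1, -1, -1):
        s = sum(a[r][c] * w[c] for c in range(r + 1, n))
        w[r] = (a[r][n] - s) / a[r][r]
    return w

def fit_exact_interpolation(points, targets, sigma):
    """Solve sum_j w_j phi_j(x_i) = t_i for the weights w."""
    phi = [[gaussian_basis(xi, xj, sigma) for xj in points] for xi in points]
    return solve_linear_system(phi, targets)

def interpolate(x, points, weights, sigma):
    """h(x) = sum_i w_i phi_i(x)."""
    return sum(w * gaussian_basis(x, c, sigma) for w, c in zip(weights, points))
```

For example, `fit_exact_interpolation([[0.0], [1.0], [2.0]], [1.0, -1.0, 0.5], sigma=0.7)` returns weights for which `interpolate` reproduces the three targets at the three input points.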

NN Implementation:

The interpolation described in the previous section uses a basis function for

each interpolation point. Obviously, this might lead to poor results for new

vectors, since the solution “overfits” the data. Furthermore, this solution is

rather complex, since N could be very large. Therefore, in order to get a

compact solution with good generalization, we shall reduce the number of

basis functions from N to M (M << N). The price we’ll pay is that at the N

given vectors, the interpolation will not necessarily be exact.

The general network, that can be applied to other problems as well (e.g.

classification), looks like this:

Each hidden unit computes a Gaussian function of the Mahalanobis distance of the input vector from the center of the corresponding Gaussian:

$$\phi_j(x) = \exp\left\{ -\frac{\|x - \mu_j\|^2}{2\sigma_j^2} \right\}$$

This implies that the weights between the input layer and the hidden units actually perform a subtraction - the (j,i) weight subtracts the i-th coordinate of $\mu_j$ (the center of the j-th Gaussian) from the i-th coordinate of the input vector.

The weights between the hidden units and the output layer, denoted by $w_{kj}$, are regular multiplication weights (as in a MLP). These weights are the Gaussians’ mixing coefficients. Each output unit also has a bias - $w_{k0}$:

$$y_k(x) = \sum_{j=1}^{M} w_{kj}\, \phi_j(x) + w_{k0}$$
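The forward pass of such a network is short enough to write out. This is a sketch in Python, assuming isotropic Gaussian hidden units; the name `rbf_forward` and the argument layout (`weights[k][j]` for the hidden-to-output weights, `biases[k]` for the output biases) are illustrative.

```python
import math

def rbf_forward(x, centers, sigmas, weights, biases):
    """Return the outputs y_k(x) of an RBF network with Gaussian hidden units."""
    # Hidden layer: one Gaussian activation per basis function.
    hidden = [math.exp(-sum((a - b) ** 2 for a, b in zip(x, mu)) / (2.0 * s ** 2))
              for mu, s in zip(centers, sigmas)]
    # Output layer: weighted sum of the hidden activations plus a bias.
    return [sum(w * h for w, h in zip(w_k, hidden)) + b_k
            for w_k, b_k in zip(weights, biases)]
```

An input that sits exactly on the center of hidden unit j and far from all other centers produces an output close to `weights[k][j] + biases[k]`, which illustrates the local nature of the representation.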

Representational Theorems:

The following theorems refer to the representational properties of a Radial Basis Functions network, or RBF in short, i.e., which functions can theoretically be represented by an RBF, and how good this approximation is; they do not say how the RBF can be trained to do so.

(1) Universal Approximation Property:

RBF’s are capable of approximating any continuous function to arbitrary

accuracy (provided that M is large enough).

Simple MLP’s, with only one hidden layer, also possess this property.

(2) Best Approximation Property:

Given a set of functions $\Phi$ (e.g. C[U], $U \subseteq \mathbb{R}^d$), a subset $A \subseteq \Phi$, and a function $f \in \Phi$, we wish to find a function $g \in A$ that is closest to f.

The distance between a function f and a set A is defined as follows:

$$d(f, A) = \inf_{g \in A} d(f, g)$$

The distance between two functions f and g is ||f-g|| (in L2 norm, for instance).

The set A is said to possess the best approximation property if for any function $f \in \Phi$, the infimum in the expression above is attained for some $g \in A$, i.e. there exists $g \in A$ s.t. d(f,A)=d(f,g).

RBF’s with N basis functions (N is fixed) possess the best approximation

property (in other words, it’s a closed set of functions).

MLP’s do not possess this property.

Training:

We have studied two training approaches for MLP networks. In supervised

training, we are given labelled data (e.g., class labels, or interpolation target

values), and the network attempts to fit the target labels to the

corresponding input vectors. When labelled input is not available, we can

use unsupervised training methods (e.g., competitive learning) to cluster the

data.

In the case of an RBF network, we usually prefer the hybrid approach,

described below.

(1) Supervised training:

This is the regular training method, that we have seen for MLP’s.

The multiplicative weights wkj are trained as usual. The Gaussians’

parameters are updated according to the derivatives:

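$$\frac{\partial E}{\partial \mu_{ji}} = \sum_{n} \sum_{k} \left[ y_k(x^n) - t_k^n \right] w_{kj} \exp\left\{ -\frac{\|x^n - \mu_j\|^2}{2\sigma_j^2} \right\} \frac{x_i^n - \mu_{ji}}{\sigma_j^2}$$

$$\frac{\partial E}{\partial \sigma_j} = \sum_{n} \sum_{k} \left[ y_k(x^n) - t_k^n \right] w_{kj} \exp\left\{ -\frac{\|x^n - \mu_j\|^2}{2\sigma_j^2} \right\} \frac{\|x^n - \mu_j\|^2}{\sigma_j^3}$$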
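These derivatives correspond to a sum-of-squares error, E = ½ Σ_n Σ_k (y_k(x^n) − t_k^n)², which is assumed in the sketch below (the notes do not spell out E at this point). The function names are illustrative. The gradients can be checked against finite differences of `sum_of_squares_error`.

```python
import math

def hidden(x, mu, sigma):
    """Gaussian activation of one hidden unit."""
    return math.exp(-sum((a - b) ** 2 for a, b in zip(x, mu)) / (2.0 * sigma ** 2))

def outputs(x, centers, sigmas, weights, biases):
    """Network outputs y_k(x); weights[k][j] connects hidden unit j to output k."""
    phi = [hidden(x, mu, s) for mu, s in zip(centers, sigmas)]
    return [sum(w * p for w, p in zip(wk, phi)) + b for wk, b in zip(weights, biases)]

def sum_of_squares_error(data, targets, centers, sigmas, weights, biases):
    """Assumed error: E = 1/2 sum_n sum_k (y_k(x^n) - t_k^n)^2."""
    return 0.5 * sum((y - t) ** 2
                     for x, tn in zip(data, targets)
                     for y, t in zip(outputs(x, centers, sigmas, weights, biases), tn))

def gaussian_gradients(data, targets, centers, sigmas, weights, biases):
    """Return (dE/dmu[j][i], dE/dsigma[j]) for the Gaussians' parameters."""
    M, d = len(centers), len(centers[0])
    grad_mu = [[0.0] * d for _ in range(M)]
    grad_sigma = [0.0] * M
    for x, tn in zip(data, targets):
        y = outputs(x, centers, sigmas, weights, biases)
        for j in range(M):
            phi = hidden(x, centers[j], sigmas[j])
            # sum_k [y_k(x^n) - t_k^n] w_kj, times the basis function value
            common = sum((y[k] - tn[k]) * weights[k][j] for k in range(len(y))) * phi
            sq = sum((a - b) ** 2 for a, b in zip(x, centers[j]))
            grad_sigma[j] += common * sq / sigmas[j] ** 3
            for i in range(d):
                grad_mu[j][i] += common * (x[i] - centers[j][i]) / sigmas[j] ** 2
    return grad_mu, grad_sigma
```

A gradient-descent step would then subtract a small multiple of these gradients from the current centers and widths.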

The shortcomings of this approach are that it is computationally intensive,

and it does not guarantee that the basis functions will remain localized.

(2) Hybrid training:

The idea is to train the network in two separate stages - first, we perform an unsupervised training to determine the Gaussians’ parameters ($\mu_j$, $\sigma_j$). In the second stage, the multiplicative weights $w_{kj}$ are trained using the regular supervised approach.

The advantages of the two-stage training are that it is fast and very easy to

interpret. It is especially useful when labelled data is in short supply,

since the first stage can be performed on all the data (not only the

labelled part).
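One possible realization of the two stages is sketched below for a single output unit. Several choices here are assumptions rather than the method of the notes: k-means is used as the unsupervised stage, each width is set to the RMS distance of a cluster's points from its center, and the output weights are trained with simple LMS updates. All function names are hypothetical.

```python
import math

def sq_dist(a, b):
    return sum((u - v) ** 2 for u, v in zip(a, b))

def kmeans(data, centers, iterations=20):
    """Stage 1 (unsupervised): move each center to the mean of its nearest points."""
    for _ in range(iterations):
        clusters = [[] for _ in centers]
        for x in data:
            nearest = min(range(len(centers)), key=lambda j: sq_dist(x, centers[j]))
            clusters[nearest].append(x)
        centers = [[sum(col) / len(pts) for col in zip(*pts)] if pts else mu
                   for pts, mu in zip(clusters, centers)]
    return centers, clusters

def cluster_widths(centers, clusters, floor=1e-3):
    """Assumed heuristic: sigma_j = RMS distance of a cluster's points from its center."""
    return [max(floor, math.sqrt(sum(sq_dist(x, mu) for x in pts) / max(1, len(pts))))
            for mu, pts in zip(centers, clusters)]

def hidden_layer(x, centers, sigmas):
    return [math.exp(-sq_dist(x, mu) / (2.0 * s ** 2)) for mu, s in zip(centers, sigmas)]

def train_output_weights(data, targets, centers, sigmas, rate=0.1, epochs=500):
    """Stage 2 (supervised): LMS updates of the bias w[0] and the weights w[1:]."""
    w = [0.0] * (len(centers) + 1)
    for _ in range(epochs):
        for x, t in zip(data, targets):
            phi = [1.0] + hidden_layer(x, centers, sigmas)
            err = sum(wi * p for wi, p in zip(w, phi)) - t
            w = [wi - rate * err * p for wi, p in zip(w, phi)]
    return w

def predict(x, centers, sigmas, w):
    phi = [1.0] + hidden_layer(x, centers, sigmas)
    return sum(wi * p for wi, p in zip(w, phi))
```

Note that `kmeans` and `cluster_widths` never look at the targets, so the first stage can be run on unlabelled vectors as well; only `train_output_weights` needs the labelled part of the data.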

Conclusion:

We have introduced a new network architecture, which is a linear mixture of

basis functions. The RBF network gives local representation of the data

(elliptic zones in the case of Gaussian kernels), unlike the distributed

representation of the regular MLP’s (in which the zones are half planes).

While both architectures can approximate continuous functions to arbitrary

accuracy, RBF’s are faster to train, using the hybrid two-stage training

method.