DATA MINING

advertisement

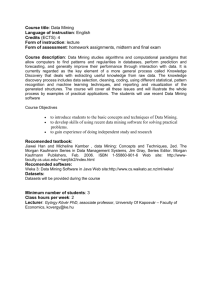

CSCI-453
Dr. Khalil
Research Paper

Presented by
Tarek El-Gaaly, Ahmed Gamal el-Din
900-00-2342, 900-00-0901

Outline
1. Introduction
2. Keywords
3. The Architecture of Data Mining
4. How does Data Mining Actually Work?
5. The Foundations of Data Mining
6. The Scope of Data Mining
7. Data Mining Techniques
8. Applications for Data Mining
9. Data Mining Case Studies
10. Conclusion of this Research

Abstract: This research paper outlines and describes data mining as a concept and as an implementation. It introduces the basic concepts and techniques of data mining, and gives real-life examples of implemented data mining projects to illustrate the nature of this complicated and extensive field. It shows the relevance of data mining to today’s business and scientific environments, and gives a basic description of how data mining architecture can evolve to deliver the value of data mining to end users. A large portion of the document is dedicated to the techniques used in data mining.

1. Introduction: What is Data Mining?

Data mining is the process of extracting implicit knowledge hidden in large volumes of raw data. It is an information extraction activity whose goal is to discover hidden information held within a database. It uses a combination of machine learning, statistical analysis, modeling techniques and database technology. Data mining finds patterns and relationships in data and infers rules that allow the prediction of future results. In layman's terms it is a technique which, as its name indicates, involves mining or analyzing the data in databases in order to extract and establish relationships and patterns from it. Data mining is done by database applications.
These applications probe for hidden or undiscovered patterns in given collections of data. They use pattern recognition technologies as well as statistical and mathematical techniques, and can have a unique impact on businesses and scientific research. Data mining is not simple, and most companies have not yet extensively mined their data, though many plan to do so in the future.

Data mining tools predict future trends and behaviors, which allows businesses to make educated, knowledge-based decisions. They can answer business questions that traditionally were too time-consuming to resolve.

The importance of collecting data that reflect business or scientific activities in order to achieve competitive advantage is now widely recognized. Powerful systems for collecting data and managing it in large databases are in place today; the difficulty lies in turning this data into a market advantage. Human analysts without special tools can no longer make sense of the enormous volumes of data that must be processed in order to make educated business decisions. Data mining automates the process of finding relationships and patterns in raw data and delivers results that can be either used in an automated decision support system or assessed by a human analyst.

2. Keywords:

The following keywords must be understood before proceeding to the detailed description of data mining:

- Data Warehousing: A collection of data designed to support management decision making. Data warehouses contain a wide variety of data that present a coherent picture of business conditions at a single point in time. Development of a data warehouse includes development of systems to extract data from operational systems, plus installation of a warehouse database system that provides managers flexible access to the data. The term data warehousing generally refers to the combination of many different databases across an entire enterprise.
- Predictors: A predictor is information that supports a probabilistic estimate of future events.

3. The Architecture of Data Mining:

To best apply these advanced data mining techniques, they must be fully integrated with a data warehouse as well as with flexible, interactive business analysis tools. Many data mining tools currently operate outside of the warehouse, requiring extra steps for extracting, importing, and analyzing the data. Furthermore, when new insights require operational implementation, integration with the warehouse simplifies the application of results from data mining. The resulting analytic data warehouse can be applied to improve business processes throughout the organization, in areas like promotional campaign management and new product rollout. The diagram below illustrates an architecture for advanced analysis in a large data warehouse.

[Figure: Integrated Data Mining Architecture]

The ideal starting point is a data warehouse containing a combination of internal data tracking all customer contact, coupled with external market data about competitor activity. This data warehouse can be implemented using many relational database systems, such as Sybase, Oracle and Redbrick, and should be optimized for flexible and fast data access.

An OLAP (On-Line Analytical Processing) server enables a more sophisticated end-user business model to be applied when navigating the data warehouse. The multidimensional structures allow users to analyze the data in the way they want to view their business. The Data Mining Server must be integrated with the data warehouse and the OLAP server to embed ROI-focused business analysis directly into this infrastructure (ROI, return on investment, is a measure of a company’s profitability). An advanced, process-centric metadata template defines the data mining objectives for specific business issues. Integration with the data warehouse enables operational decisions to be directly implemented and tracked.
As the warehouse grows with new decisions and results, the company can continually mine the best practices and apply them to future decisions.

This design represents a fundamental shift from conventional decision support systems. Instead of simply delivering data to the end user through query and reporting software, the Advanced Analysis Server applies business models directly to the warehouse and returns a proactive analysis of the most relevant information. These results enhance the metadata in the OLAP Server by providing a dynamic metadata layer that represents a refined view of the data. Reporting, visualization, and other analysis tools can then be applied to plan future actions and confirm the impact of those plans.

4. How does Data Mining Actually Work?

How exactly is data mining able to tell you important things that you didn't know, or what is going to happen in the future? The technique used to perform these feats is called modeling. Modeling is simply the act of building a model in one situation where you know the answer and then applying it to another situation where you don't. People have been building models in this way for a long time, certainly since before the advent of computers or data mining technology. What happens on computers, however, is not much different from the way people build models. Computers are loaded with lots of data about a variety of situations where the answer is known, and the data mining software then runs through that data and distills the characteristics of the data that should go into the model. Once the model is built, it can be used in similar situations where you don't know the answer.

5. The Foundations of Data Mining

Data mining techniques are the result of a long process of research and product development.
This evolution began when business data was first stored on computers, continued with improvements in data access, and more recently generated technologies that allow users to navigate through their data in real time. Data mining takes this evolutionary process beyond data access and navigation to practical information delivery. Data mining is ready for application in the business community because it is supported by three technologies that are now sufficiently mature:

- Massive data collection
- Powerful multiprocessor computers
- Data mining algorithms

The need for improved computational engines that accompanies massive data collection can now be met in a cost-effective manner with parallel multiprocessor computer technology. Data mining algorithms embody techniques that have existed for at least 10 years, but have only recently been implemented as mature, reliable, understandable tools that consistently outperform older statistical methods. Today, the maturity of these techniques, coupled with high-performance relational database engines, makes these technologies practical for current data warehouse environments.

6. The Scope of Data Mining

Given databases of sufficient size and quality, data mining technology can generate new commercial and scientific opportunities by providing these capabilities:

- Automated prediction of trends and behaviors: Data mining automates the process of finding predictive information in large databases. Questions that traditionally required extensive hands-on analysis can now be answered directly from the data.
- Automated discovery of previously unknown patterns: Data mining tools sweep through databases and identify previously hidden patterns in one step. The history of the data stored in the database is analyzed to extract hidden patterns.

When data mining tools are implemented on high performance parallel processing systems, they can analyze massive databases in minutes.
Faster processing means that users can automatically experiment with more models to understand complex data. High speed makes it practical for users to analyze huge quantities of data. Larger databases, in turn, yield improved predictions because there is more historical data from which to construct predictive models.

7. Data Mining Techniques:

In this section the most common data mining algorithms in use today are briefly outlined. The most commonly used techniques in data mining are:

- Artificial neural networks: Non-linear predictive models that learn through training and resemble biological neural networks in structure.
- Decision trees: Tree-shaped structures that represent sets of decisions. These decisions generate rules for the classification of a dataset.
- Genetic algorithms: Optimization techniques that use processes such as genetic combination, mutation, and natural selection in a design based on the concepts of evolution.
- Nearest neighbor method: A technique that classifies each record in a dataset based on a combination of the classes of the k record(s) most similar to it in a historical dataset (where k ≥ 1). Sometimes called the k-nearest neighbor technique.
- Rule induction: The extraction of useful if-then rules from data based on statistical significance.
- Clustering: As the name suggests, this technique groups similar data components together.

These techniques have been sub-divided into two main categories:

- Classical Techniques: Statistics, Neighborhoods and Clustering
- Next Generation Techniques: Trees, Networks and Rules

7.1 The Classic techniques:

The main techniques discussed here are the ones used in the overwhelming majority of existing business problems. There are certainly many others as well, but in general the industry is converging on these techniques, which work consistently and are understandable and explainable.
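To make the nearest neighbor method listed among the techniques above more concrete, the following is a minimal sketch rather than a production implementation. It assumes numeric predictors, Euclidean distance as the similarity measure, and a simple majority vote as the way of combining the classes of the k most similar records. The function name, the predictor names and the small historical dataset are hypothetical and serve only as illustration. It uses math.dist, which requires Python 3.8 or later.

```python
import math
from collections import Counter


def knn_classify(history, query, k=3):
    """Classify `query` by majority vote among its k nearest historical records.

    `history` is a list of (features, label) pairs, where features is a
    tuple of numbers with the same length as `query`.
    """
    if k < 1:
        raise ValueError("k must be at least 1")
    # Order the historical records by Euclidean distance to the query record.
    by_distance = sorted(history, key=lambda rec: math.dist(rec[0], query))
    # Combine the classes of the k most similar records by majority vote.
    votes = Counter(label for _, label in by_distance[:k])
    return votes.most_common(1)[0][0]


# Hypothetical historical dataset:
# (monthly_minutes, months_as_customer) -> what the customer did
history = [
    ((100, 2), "no_renew"),
    ((120, 3), "no_renew"),
    ((110, 1), "no_renew"),
    ((400, 24), "renew"),
    ((420, 30), "renew"),
    ((380, 26), "renew"),
]

print(knn_classify(history, (390, 25), k=3))  # prints "renew"
print(knn_classify(history, (105, 2), k=1))   # prints "no_renew"
```

In practice the predictors would normally be rescaled first, since a predictor with a large numeric range (here, minutes) otherwise dominates the distance.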
Statistics:

By strict definition, "statistics" or statistical techniques are not data mining; they were in use long before the term data mining was coined. Nevertheless, statistical techniques are driven by the data and are used to discover patterns and build predictive models. From the user's perspective, you will be faced with a choice when solving a "data mining" problem: whether to attack it with statistical methods or with other data mining techniques. For this reason it is important to have some idea of how statistical techniques work and how they can be applied, because data mining utilizes statistical techniques to a great extent.

Data, counting and probability:

One thing that is always true about statistics is that there is always data involved, and usually more data than the average person can keep track of in their head. Today people have to deal with up to terabytes of data, make sense of it, and pick out the important patterns in it. Statistics can help greatly in this process by helping to answer several important questions about your data:

- What patterns are there in my database?
- What is the chance that an event will occur?
- Which patterns are significant?
- What is a high level summary of the data that gives me some idea of what is contained in my database?

Certainly statistics can do more than answer these questions, but for most people today these are the questions that statistics can help answer. Consider, for example, that a large part of statistics is concerned with summarizing data, and that this summarization has to do with counting. One of the great values of statistics is in presenting a high level abstract view of the database that provides useful information without requiring every record to be understood in detail. Statistics at this level is used in reporting important information from which people may be able to make useful decisions.
There are many different parts of statistics, but the idea of collecting data and counting it is often at the base of even the more sophisticated techniques. The first step in understanding statistics is therefore to understand how the data is collected into a higher level form. One of the most famous ways of doing this is with the histogram.

Linear regression

In statistics, prediction is usually synonymous with regression of some form. There are a variety of different types of regression, but the basic idea is that a model is created that maps values from predictors to a prediction in such a way that the lowest error occurs. The simplest form is simple linear regression, which contains just one predictor and a prediction. The relationship between the two can be mapped in a two dimensional space, with the records plotted with the prediction values along the Y axis and the predictor values along the X axis. The simple linear regression model can then be viewed as the line that minimizes the error between the actual prediction value and the point on the line (the prediction from the model). Of the many possible lines that could be drawn through the data, the one that minimizes the distance between the line and the data points is chosen as the predictive model.

Nearest Neighbor and Clustering

Clustering and the nearest neighbor prediction technique are among the oldest techniques used in data mining. Most people have an intuitive understanding of what clustering is, namely that similar records are grouped or clustered together. Nearest neighbor is a prediction technique that is quite similar to clustering.
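Returning briefly to the simple linear regression described above, the line-fitting idea can be sketched as follows. This is an illustrative sketch under the assumption that "error" means the sum of squared vertical distances between the data points and the line (ordinary least squares); the function name and the sample values are hypothetical.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares.

    xs holds the predictor values (X axis) and ys the known prediction
    values (Y axis) from historical records.
    """
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two (x, y) pairs of equal length")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Spread of the predictor around its mean.
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("all predictor values are identical")
    # Co-variation of the predictor and the prediction.
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical historical records: one predictor, one known prediction value.
xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]

slope, intercept = fit_line(xs, ys)
print(slope, intercept)       # prints 2.0 1.0
print(slope * 6 + intercept)  # prediction for an unseen predictor value: 13.0
```

The fitted slope and intercept are the model: once they are known, a prediction for a new record needs only its predictor value.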
Its essence is that, in order to predict the prediction value for one record, you look for records with similar predictor values in the historical database and use the prediction value from the record that is “nearest” to the unclassified record.

Clustering

Clustering is the method by which like records are grouped together. Usually this is done to give the end user a high level view of what is going on in the database. The term clustering is sometimes used to mean segmentation, which most marketing people will say is useful for coming up with a bird's-eye view of a company's business. Clustering information is then used by the end user to tag the customers in their database. Once this is done, business users can get a quick high level view of what is happening within each cluster. Once business users have worked with these tags for some time, they also begin to build intuitions about how the different customer clusters will react to the marketing offers particular to their business. Sometimes clustering is performed not so much to keep records together as to make it easier to see when one record sticks out from the rest.

[Figure: an example of elongated clusters created from data in a database]

7.2 Next Generation Techniques: Trees, Networks and Rules

The data mining techniques in this section are the ones most often used. They can be used either for discovering new information within large databases or for building predictive models. Though older decision tree techniques such as CHAID are currently widely used, newer techniques such as CART are gaining wider acceptance.

Decision Trees

A decision tree is a predictive model that, as its name implies, can be viewed as a tree. Specifically, each branch of the tree is a classification question and the leaves of the tree are partitions of the classified data in the database.
For instance, if we were going to classify customers who don’t renew their phone contracts in the cellular telephone industry, a decision tree might look like this:

[Figure: example decision tree for classifying customers by contract renewal]

Interesting facts about the tree:

- It divides up the data at each branch point without losing any of the data. The number of renewers and non-renewers is conserved as you move up or down the tree.
- It is fairly easy to understand how the model is being built.
- It would also be easy to use this model if you actually had to target those customers that are likely to renew with a targeted marketing offer.

Another way that decision tree technology has been used is for preprocessing data for other prediction algorithms. Because the algorithm is fairly robust with respect to a variety of predictor types, and because it can be run relatively quickly, decision trees can be used on the first pass of a data mining run to create a subset of possibly useful predictors.

CART

CART stands for Classification and Regression Trees and is a data exploration and prediction algorithm. Researchers from Stanford University and the University of California at Berkeley showed how this new algorithm could be used on a variety of different problems. In building the CART tree, each predictor is picked based on how well it separates the records with different predictions. For instance, one measure that is used to determine whether a given split point for a given predictor is better than another is the entropy metric.

CHAID

Another decision tree technology, equally popular as CART, is CHAID, or Chi-Square Automatic Interaction Detector. CHAID is similar to CART in that it builds a decision tree, but it differs in the way that it chooses its splits. Instead of the entropy or “gini” metrics for choosing optimal splits, the technique relies on the chi-square test used in contingency tables to determine which categorical predictor is furthest from independence with the prediction values.
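The chi-square comparison mentioned above can be sketched as follows. This is a simplified illustration, not the full CHAID procedure: it computes only the raw Pearson chi-square statistic of each predictor's contingency table against the prediction values and picks the largest, whereas real CHAID implementations typically compare significance levels and also merge categories. The raw statistic is only directly comparable between predictors with the same number of categories, as is the case here. All names and data values are hypothetical.

```python
from collections import Counter


def chi_square(predictor_values, outcomes):
    """Pearson chi-square statistic of the predictor-by-outcome contingency table."""
    n = len(predictor_values)
    if n == 0 or n != len(outcomes):
        raise ValueError("need two non-empty sequences of equal length")
    observed = Counter(zip(predictor_values, outcomes))  # cell counts
    row_totals = Counter(predictor_values)
    col_totals = Counter(outcomes)
    stat = 0.0
    for row, row_total in row_totals.items():
        for col, col_total in col_totals.items():
            # Count expected in this cell if predictor and outcome were independent.
            expected = row_total * col_total / n
            stat += (observed[(row, col)] - expected) ** 2 / expected
    return stat


# Hypothetical records: two categorical predictors and the known outcome.
outcomes = ["renew"] * 4 + ["leave"] * 4
predictors = {
    "contract_length": ["long"] * 4 + ["short"] * 4,
    "region": ["north", "south"] * 4,
}

for name, values in predictors.items():
    print(name, chi_square(values, outcomes))
# prints: contract_length 8.0
#         region 0.0

# The predictor furthest from independence is the candidate for the split.
best = max(predictors, key=lambda name: chi_square(predictors[name], outcomes))
print(best)  # prints "contract_length"
```

A statistic of zero, as for the hypothetical region predictor, means the observed counts match exactly what independence would produce, so splitting on that predictor would tell us nothing about renewal.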
Because CHAID relies on contingency tables to form its test of significance for each predictor, all predictors must either be categorical or be forced into a categorical form.

Neural Networks

Neural networks do have disadvantages that can limit their ease of use and ease of deployment, but they also have some significant advantages. Foremost among these advantages is their highly accurate predictive models, which can be applied across a large number of different types of problems.

To be more precise with the term “neural network”, one might better speak of an “artificial neural network”. True neural networks are biological systems (brains) that detect patterns, make predictions and learn. The artificial ones are computer programs implementing sophisticated pattern detection and machine learning algorithms to build predictive models from large historical databases. Artificial neural networks derive their name from their historical development, which started off with the premise that machines could be made to “think” if scientists found ways to mimic the structure and functioning of the human brain on the computer.

To explain how neural networks can detect patterns in a database, an analogy is often made that they “learn” to detect these patterns and make better predictions in a way similar to the way human beings do. This view is encouraged by the way the historical training data is often supplied to the network, for example one record at a time. Neural networks do “learn” in a very real sense, but under the hood the algorithms and techniques being deployed are not truly different from the techniques found in statistics or other data mining algorithms. It is, for instance, unfair to assume that neural networks could outperform other techniques because they “learn” and improve over time while the other techniques are static.
The other techniques in fact “learn” from historical examples in exactly the same way, but often the examples (historical records) to learn from are processed all at once, in a more efficient manner than in neural networks, which often modify their model one record at a time.

Neural Networks for feature extraction

One of the important problems in all of data mining is that of determining which predictors are the most relevant and the most important in building models that are most accurate at prediction. A simple example of a feature in problems that neural networks work on is a vertical line in a computer image. The predictors, or raw input data, are just the colored pixels that make up the picture. Recognizing that the predictors (pixels) can be organized in such a way as to create lines, and then using the line as the input predictor, can dramatically improve the accuracy of the model and decrease the time needed to create it. Some features, like lines in computer images, are things that humans are already quite good at detecting; in other problem domains it is more difficult to recognize the features. One novel way that neural networks have been used to detect features rests on the idea that features are a kind of compression of the training database.

Rule Induction

Rule induction is one of the major forms of data mining and is perhaps the most common form of knowledge discovery in unsupervised learning systems. It is also perhaps the form of data mining that most closely resembles the process most people think about when they think about data mining, namely “mining” for gold through a vast database. The gold in this case would be a rule that is interesting (that tells you something about your database that you didn’t already know and probably weren’t able to explicitly articulate).
Rule induction on a database can be a massive undertaking in which all possible patterns are systematically pulled out of the data, and an accuracy and a significance are then attached to each of them to tell the user how strong the pattern is and how likely it is to occur again.

The drawback of rule induction systems is also their strength: they retrieve all possible interesting patterns in the database. This is a strength in the sense that it leaves no stone unturned, but it can also be viewed as a weakness because the user can easily become overwhelmed by such a large number of rules that it is difficult to look through all of them. This overabundance of patterns can also be problematic for the simple task of prediction: because all possible patterns are gathered from the database, there may be conflicting predictions made by equally interesting rules. Automating the process of collecting the most interesting rules, and of combining the recommendations of a variety of rules, is handled well by many of the commercially available rule induction systems on the market today and is also an area of active research.

Discovery

The claim to fame of rule induction systems lies much more in knowledge discovery in unsupervised learning systems than in prediction. These systems provide both a very detailed view of the data, in which significant patterns that occur only a small portion of the time can be found by looking at the detailed data, and a broad overview of the data, in which some systems seek to deliver to the user an overall view of the patterns contained in the database. These systems thus display a nice combination of micro and macro views.

Prediction

After the rules are created and their value is measured, there is also a call for performing prediction with the rules. The rules must be used to predict or else they will prove almost useless. Each rule by itself can perform prediction.
The consequent of the rule is the target, and the accuracy of the rule is the accuracy of the prediction. But because rule induction systems produce many rules for a given antecedent or consequent, there can be conflicting predictions with different accuracies. This is an opportunity for improving the overall performance of the system by combining the rules. This can be done in a variety of ways, for example by summing the accuracies as if they were weights, or just by taking the prediction of the rule with the maximum accuracy.

8. Applications for Data Mining:

A wide range of companies have deployed successful applications of data mining. Early adopters of this technology have tended to be in information-intensive industries such as financial services and direct mail marketing, but the technology is applicable to any company looking to leverage a large data warehouse to better manage its customer relationships. Two critical factors for success with data mining are a large, well-integrated data warehouse and a well-defined understanding of the business process within which data mining is to be applied.

Some successful application areas include:

- A pharmaceutical company can analyze its recent sales force activity and the results to improve targeting of high-value physicians and determine which marketing activities will have the greatest impact in the next few months. The data needs to include competitor market activity as well as information about the local health care systems. The results can be distributed to the sales force via a wide-area network that enables the representatives to review the recommendations from the perspective of the key attributes in the decision process. The ongoing, dynamic analysis of the data warehouse allows best practices from throughout the organization to be applied in specific sales situations.
- A credit card company can leverage its vast warehouse of customer transaction data to identify customers most likely to be interested in a new credit product.
Using a small test mailing, the attributes of customers with an affinity for the product can be identified. Recent projects have indicated more than a 20-fold decrease in costs for targeted mailing campaigns over conventional approaches.
- A diversified transportation company with a large direct sales force can apply data mining to identify the best prospects for its services. Using data mining to analyze its own customer experience, this company can build a unique segmentation identifying the attributes of high-value prospects. Applying this segmentation to a general business database such as those provided by Dun & Bradstreet can yield a prioritized list of prospects by region.
- A large consumer package goods company can apply data mining to improve its sales process to retailers. Data from consumer panels, shipments, and competitor activity can be applied to understand the reasons for brand and store switching. Through this analysis, the manufacturer can select promotional strategies that best reach its target customer segments.

These examples share clear common ground. They leverage the knowledge about customers held in a data warehouse to reduce costs and improve the value of customer relationships. These organizations can now focus their efforts on the most important (profitable) customers and prospects, and design targeted marketing strategies to best reach them.

9. Data Mining Case Studies:

The following case studies give the reader an in-depth, below-the-surface description of data mining in the real world. These real-life situations allow the reader to relate to actual data mining as practiced today and to understand more about the concepts of data mining.
The following are three examples of data mining in the real world:

9.1 Energy Usage in a Power Plant:

ICI Thornton Power Station in the United Kingdom produces steam for a range of processes on the site, and generates electricity in a mix of primary pass out and secondary condensing turbines. Total power output is approximately 50 MW. Fuel and water costs amount to about £5 million a year, depending on site steam demands.

The objective of the data mining project was to identify opportunities to reduce power station operating costs. Costs include the cost of fuel (gas and oil to fire the boilers) and water (to make up for losses). Electricity and steam are sold and represent revenue. Rule induction data mining was used for the project, with the outcome of the analysis being the net cost of steam per unit of steam supplied to the site (the cost of the product). The attributes fall into two categories: disturbances, such as ambient temperature and the site steam demand, over which the operators have no control; and control settings, such as pressure and steam rates.

The benefits derived from the generated patterns include the identification of opportunities to improve process revenue considerably (by up to 5%). Most of these involve altering control set points, which can be implemented without any further work and at no extra cost. Implementing some of the opportunities identified required additional controls and instrumentation. The payback period for the additional controls would be a few months.

9.2 Gas Processing Plant:

This project was carried out for an oil company and was based in a remote US oil field location. The process investigated was a very large gas processing plant which produces two useful products from the gas from the wells, natural gas liquids. The aim of the study was to use data mining techniques to analyze historical process data to find opportunities to increase the production rates, and hence increase the revenue generated by the process.
Approximately 2000 data measurements for the process were captured every minute. Rule induction data mining was used to discover patterns in the data. The business goal for data mining was the revenue from the Gas Process Plant, while the attributes of the analysis fell into two categories:

- Disturbances, such as wind speed and ambient temperature, which have an impact on the way the process is operated and performs, but which have to be accepted by the operators.
- Control set points, which can be altered by the process operators or automatically by the control systems, and which include temperature and pressure set points, flow ratios, control valve positions, etc.

The tree generated by rule induction reveals patterns relating the revenue from the process to the disturbances and control settings of the process. The benefits derived from the generated patterns include the identification of opportunities to improve process revenue considerably (by up to 4%).

9.3 Biological Applications of Multi-Relational Data Mining:

Biological databases contain a wide variety of data types, often with rich relational structure. Consequently, multi-relational data mining techniques are frequently applied to biological data. This section presents an application of multi-relational data mining to biological data.

Consider storing in a database the operational details of a single cell organism. At minimum one would need to encode the following into the data warehouse or database:

- Genome: DNA sequence and gene locations.
- Proteome: the organism's full complement of proteins, not necessarily a direct mapping from its genes.
- Metabolic pathways: linked biochemical reactions involving multiple proteins.
- Regulatory pathways: the mechanisms by which the expression of some genes into proteins influences the expression of other genes.

The database contains other information besides the items listed above, such as operons.
The data warehouse used in the data mining project stores data about genomes (DNA sequences and gene locations). From the stored data, patterns and relationships between the genes and genomes are fed into a neural network along with certain genetic laws, and the data mining tool slowly learns the framework and network of genes and genomes. The data mining tool extracts patterns and correlations hidden in the stored data and displays to the human analysts the dependencies and relationships in the genetic material. These hidden patterns could never have been discovered by humans unaided because of the sheer volume of data stored.

This genetic application is a perfect application for data mining. It is an excellent example of a huge amount of data that is exceedingly hard to analyze using techniques other than data mining. Data mining has proved exceedingly useful in fields like this, where the amount of data is far beyond the scope of human comprehension.

10. Conclusion of this Research:

The basic conclusion that can be drawn from this research into the field of data mining, with all its concepts, techniques and implementations, is that it is a complex and growing field. It is increasingly used in the real world to gain economic value, knowledge and learning from the data stores we already have, which sometimes sit idle. The details of some of the techniques have been omitted from this paper because they reach a level of detail that is unnecessary for describing the overall concept of data mining.

References
1. “An Introduction to Data Mining”, Kurt Thearling, www.thearling.com
2. “An Overview of Data Mining Techniques”, Alex Berson, Stephen Smith and Kurt Thearling
3. “An Overview of Data Mining at Dun & Bradstreet”, Kurt Thearling
4. “CHAID”, G. J. Huba, http://www.themeasurementgroup.com/Definitions/chaid.htm
5. “Understanding Data Mining: It's All in the Interaction”, Kurt Thearling
6. “Data Mining Case Studies”, Dr Akeel Al-Attar, http://www.attar.com/tutor/mining.htm
7. “GDM – Genome Data Mining Tools”, http://gdm.fmrp.usp.br/projects.php