Full text

advertisement

The effect of sand nourishments on foredune sediment volume

Bachelor Thesis

N. van der Zwaag

5887712

Supervised by:

John van Boxel

&

Bas Arens

Date: 18-7-2011

Keywords: Nourishment, foredunes, sediment, volume, statistics

Table of Contents

1.

2.

3.

4.

5.

6.

7.

8.

9.

Summary ........................................................................................................................................ 2

Introduction .................................................................................................................................... 3

Goal ................................................................................................................................................ 3

Methods .......................................................................................................................................... 4

4.1. Data import profile ............................................................................................................. 6

4.2. Data import kilometer ........................................................................................................ 6

4.3. Data check .......................................................................................................................... 6

4.4. Outlier Removal ................................................................................................................. 7

4.5. Interpolation ....................................................................................................................... 7

4.6. Extrapolation ...................................................................................................................... 8

4.7. Landward Boundary ........................................................................................................... 8

4.8. Calculating total volume .................................................................................................... 9

4.9. Fitting Trend lines .............................................................................................................. 9

Results ...........................................................................................................................................11

5.1. Organize and repair Jar-Kus data ..................................................................................... 11

5.2. Finding landward boundary and creating volume data .................................................... 11

5.3. Fit trend lines to volume developments ........................................................................... 11

5.4. Determine spatial effects of a nourishment at a location downstream ............................. 13

Discussion .................................................................................................................................... 15

6.1. Organize and repair Jar-Kus data ..................................................................................... 15

6.2. Finding landward boundary and creating volume data .................................................... 15

6.3. Fit trend lines to volume developments ........................................................................... 16

6.4. Determine spatial effects of a nourishment at a location downstream ............................. 18

Conclusions .................................................................................................................................. 19

Literature ...................................................................................................................................... 20

Appendix (code) ........................................................................................................................... 21

9.1. Data import profile ........................................................................................................... 21

9.2. Data import kilometer ...................................................................................................... 25

9.3. Data check ........................................................................................................................ 29

9.4. Outlier Removal ............................................................................................................... 32

9.5. Interpolation ..................................................................................................................... 33

9.6. Extrapolation .................................................................................................................... 37

9.7. Landward boundary.......................................................................................................... 39

9.8. Calculating total volume .................................................................................................. 41

9.9. Fitting trend lines ............................................................................................................. 42

1. Summary

The Dutch dune coast has to be maintained in order to keep the inland safe. This is done by

adding sand to the system and increasing foredune sediment volume. These nourishments have

significant effects on natural values in the dunes. This report further investigates the effect of

nourishments on foredune sediment volume. New methods are developed to automate the use of

coastal height data to calculate volume developments. The best number of trend lines is added to

the developments, showing breaks in trend. These breaks in trend visualize the year of the

nourishment. This research concludes with a case study to see whether nourishments upstream

have effect on the volume development of the Groote Keeten location. There is no effect.

This thesis concludes the Earth Sciences Bachelor’s thesis course at the University of Amsterdam.

2. Introduction

Dunes are the Dutch' primary defense against water and storms from the North Sea (De Staat,

2009). A new vision on coastal appearance and natural values shows the importance of a dynamic

coastal line (Arens & Mulder, 2008). Measures to maintain the position of this coastal line should

be seen in perspective: The coastline is a part of a coastal zone: dunes, beaches, shores and

offshore areas (De Ruig, 1998). Some dunes grow naturally, others break down. Some locations

still have to be strengthened for safety of the inland. This is done by monitoring the BCL (Basic

Coastal Line) actually a coastal zone of approximately between +3m and +5m NAP (Normaal

Amsterdams Peil = Sea level reference). When the amount of sand in this zone drops below a

certain limit, the coast has to be replenished with sand nourishments.

Nourishments are an essential part of maintenance of the Dutch coastal defense system. They

strengthen the system by replenishing sand losses due to erosion. They can be applied in the

dunes, on the beach or off shore. This sand widens the beaches, increases foredune volume and,

in case of underwater nourishments, is able to break waves. The adding of sand to the coastal

system could have extensive consequences for natural habitats. A lot of these habitats are also

part of Natura 2000, a network of natural areas in the European Union. The goal of Natura 2000 is

the prevention of degradation of biodiversity (European Commission Publication Office, 2009). In

order to keep an eye on biodiversity in dune habitats, it is imperative to know what happens with

the sand that is being added.

The transportation of sand in the coastal zone has been researched. Coastal dynamics studies

(De Swart, 2006) are done and coastal sediment models (Van der Burgh et al., 2007. Van der Wal,

1999) have been developed to describe the effect of nourishments. Sand budget trends have been

calculated along the length of the Dutch coast from the foredunes to a point about 3000 m in sea

(De Ruig, Louisse, 1991). The aeolian transportation of the sediment is researched and it is shown

that grain size and composition of the sand have an effect on the dispersion (Van der Wal, 1998).

Furthermore the effect of nourishments on the sand budget is researched showing that

nourishments temporarily protect the foredunes from being eroded by waves and increase the

aeolian transport to the dunes (Van der Wal, 2004). The effect of nourishments on natural values

has also been researched. Sizeable impacts are described on several beach ecosystem

components (Speybroek et al., 2006). Arens and Wiersma (1994) classified types of foredunes to

gain further insight into the occurrence and extent of natural processes in the foredunes.

The Dutch coastal infrastructure and environment ministry are having the effects of nourishments

on dune development researched (Arens et al., 2010). The report states that there is indeed an

effect of nourishments on foredune sediment volume. However the report lacks the statistical

significance to proof this theory: The foredune sediment volumes are investigated and the dunes

are classified, volume developments for 18 locations are analyzed and trend lines are fitted and

the breakpoints of these lines are found visually and do not have statistical significance.

3. Goal

The primary goal of this research is to evaluate the (positive) effect of nourishments on foredune

sediment volume. Temporal trends in foredune volume are examined and their statistic

significance is determined. These calculations are completely automated. Methods are developed

to fit trend lines and determine their significance. Then these methods will be applied to evaluate

the spatial effect of sand nourishments at a location downstream of the nourishment.

The following research questions are formulated.

What is the best way to organize and repair the dataset for statistical analysis?

What is the best way to significantly proof the existence of a break in trend line?

What is the effect of sand nourishments on foredune sediment volume?

Is it possible to determine spatial effects of a nourishment at a location downstream?

3

4. Methods

To know how the foredunes respond to the added sand, the foredune sand volume is calculated

for every year using coastal height data. Jar-Kus data (Minneboo, 1995). Since 1997 Jar-Kus data

is measured annually by laser altimetry from a plane. Before 1997 this was done by means of

photogrammetric interpretation from air photography. A database is available which contains data

since 1964, it consists of cross profiles (height profiles from sea to land) with a mutual distance of

200-250 meters over the length of the beach. The foredune volume is calculated from the point

where the elevation first reaches +3 m NAP to a stable point landwards.The landward boundary is

calculated automatically at a location where height is stable through the years. Which means that

no sand is transported beyond this point. All the changes in foredune volume should be between

this point and the beach.

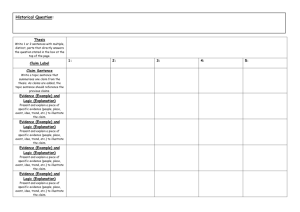

In the following paragraphs, the script used for this research is described. It consists of several

subscripts that are all put in series. Figure 1 shows a flowchart with the basic operations of the

script. A green box represents data in different states. The first state is the raw Jar-Kus dataset.

This dataset contains nearly 2000 profiles. A lot of them are too incomplete to be used and others

need to be repaired before they can be useful. In the first section of the script the dataset is

cleaned up and repaired. Incomplete profiles and outliers are deleted. The remaining profiles are

interpolated, both chronically and spatially, and extrapolated, so the data can be used effectively.

In the second section the volume is calculated. The landward boundary is found and used to

calculate the volume development. The profile data can than be averaged over a kilometer. This is

done to filter possible measurement errors. The last subscript fits the trend lines. One, two or three

lines are fitted automatically to the data, based on their Root Mean Square Error (RMSE), the

standard deviation of the residuals, a measurement of fit (Willmot et al., 1985).

A blue box represents a subscript that will be described in this chapter.

In the sections below, the script is explained section by section. For more details see the actual

commented script at the appendix. The main script is divided in two: One can be used to plot

multiple kilometers and the other can be used to plot single profiles and all the profiles from one

kilometer.

Scripts:

Data import profile

Data import kilometer

Data check

Outlier removal

Interpolation

Extrapolation

Finding landward boundary

Calculating total volume

Fitting Trend lines

4

Figure 1: Flowchart of the functionality of the script.

5

4.1. Data import profile

This main script is used to analyze separate profiles or all profiles in one kilometer.

The script first loads the open source file 'transect.nc': the raw Jar-Kus data. Then the user is

prompted to choose between one separate profile or all profiles of one kilometer and for a grid

number and a profile or kilometer- number. The number of profiles per kilometer differs: the

mutual distance between profiles varies from 100 meters to 250 meters. One profile per kilometer

is added to correspond to the way Arens et al. (2010) averaged kilometer profiles. For example if

the profiles are 250 meters apart the kilometer will consist of (1000/250)+ 1 = 5 profiles. The

profiles are then checked to see whether they are consistent enough to use with the subscript

'data check'. Incomplete profiles are discarded. Then the data is clipped: 1965-1974 and 2010 are

removed, because those years have incomplete data. Also all cross profile coordinates that

contain height data that is lower than 0 m NAP, or do not contain any data at all, are removed. A

profile is created with on the x-axis the years and on the y-axis the cross profile coordinates. The

profile is filled with the corresponding height data. Then the script continues with all the subscripts:

Interpolation and extrapolation, calculating the landward boundary, the foredune volume and finally

fitting the trend lines.

All data is in cm.

This script is produced in 2011. Data of 2010 was not yet added to the calculations because it was

incomplete. If data for 2011 is added, 2011 is automatically discarded and 2010 is added. If data

from 2011 is good enough to use it could be added by deleting line 168. Only six profiles per

kilometer can be visualized, because of the limiting color scheme (See 'fitting trend lines' for more

information) .

This version of the script does not use the outlier script to delete outliers (see 'Outliers Removal'

for more information). Note that if only a single incomplete profile is put in, the script will fail,

because it will be removed by data check and no data are able to be used.

4.2. Data import kilometer

This script is used to analyze multiple kilometers of profiles. It develops practically the same as the

data import per profile. The separate profiles are averaged over one kilometer. Up to a maximum

of 6 kilometer profiles can be analyzed. Input is the Jar-Kus database. This script loads the data,

prompts the user for input on location, removes incomplete years and contains all the subscripts

necessary for output: A figure showing the volume development of the separate kilometer profiles,

the fitted trend lines, and the statistical significance of these lines.

Note that no outlier removal script is present (see 'Outliers Removal' for more information).

4.3. Data check

In this script the data is checked in 4 different ways to delete profiles that are too incomplete to

use. The script's input is location data of the profiles kilometers that need to be checked. The

dataset that is to be checked is loaded in the same way as the data import scripts.

First the data is checked to see if it contains 5 or more rows (Height data per coordinate) of data

that meet criteria. The first criterium is that the minimum height per row is above 200 cm NAP.

This because volume data is only calculated above 300 cm NAP. The extra meter is added

because of height jumps: extra data are needed to successfully calculate volume. The second

criterium is that every coordinate should have at least more than 5 years of height data. If those

criteria are not met, the profile is discarded.

After this a full profile is created using interpolation and extrapolation.

Then the profiles are deleted that contain more than 7 years that still consist of only NaN (Not a

6

Number = empty data cell) data. The profiles in which last year contains more than ~40 NaNs

(length of profile - 10) are also deleted. This because this would mean that the last few years are

not interpolated correctly.

Finally the first year (1975) is checked. If it contains more than ~35 (no. rows – 15) NaNs it is

deleted. The following years are also checked and deleted until a year meets the criterium. This

creates a problem, because the volume developments need to exist of the same number of years.

So a variable is created stating how many years are deleted, so the next profile (that may or may

not have incomplete first few years) is clipped the same length. This clipping happens in the import

script. Furthermore the script puts out an array of names of the profiles that are and are not

discarded.

4.4. Outlier Removal

The outlier script detects outliers and replaces them with NaNs. It calculates mean and standard

deviation of the array and compares those with the height data in the array. If the height data

minus the mean is bigger than 'x' times the standard deviation, the height data at that location is

replaced by a NaN. The 'x'-value can be changed by user. The output returns the data with all

outliers replaced as NaNs. They should be interpolated. Furthermore it shows the number of

outliers removed.

If this number is too large, too much outliers were deleted. This might happen because the outliers

deleted lower mean, creating more outliers, etc. If that is the case, it is best to raise the standard

deviation x-value or to remove the outlier script.

The outlier script is not used because in large profiles it deletes highest numbers that were still

correct.

4.5. Interpolation

The interpolation script interpolates data both chronically (during years) and spatially (at the same

x-coordinate). The interpolation is done linearly. The chronical interpolation is done first. It

interpolates over a maximum of 15 meters. The spatial interpolation is done over a maximum of

10 years. In the below figures examples of interpolation are given. The cross coordinates are

shown (x) with their corresponding heights (y). The main part of this script is from Van Puijvelde's

(2010) internship report.

Table 1: An example of chronical interpolation

7

Table 2: An example of spatial interpolation

The first columns and rows are ignored, they can't be interpolated. So the quality of the

interpolation depends on the quality of the first and last columns. In the whole year of 2002 no

measurements were done. All those data are now interpolated. It would be better if no data were

interpolated, no volume was calculated and that year was not visualized in the graph.

4.6. Extrapolation

In the extrapolation subscript height data is calculated further landward as to further fill up the

dataset. This makes it possible to find landward boundaries more landward, so the possibility of it

being more stable is bigger. The extrapolation only takes place at the most seaward ending data

columns (a column is a year) and is designed to stop when the extrapolated heights drop below

300 cm. It will extrapolate a maximum of 7 rows.

The user can choose between spline extrapolation or linear extrapolation. It will output the

enhanced profile and the number of extrapolated rows.

X

Y

X

Y

-23000 NaN

-23000 NaN

-22500 NaN

-22500 559

-22000 NaN

-22000 551

-21500 NaN

-21500 558

-21000 NaN

-->

-21000 563

-20500 NaN Extrapolation

-20500 564

-20000 NaN

-20000 556

-19500 NaN

-19500 540

-19000 611

-19000 611

-18500 553

-18500 553

-18000 542

-18000 542

-17500 547

-17500 547

-17000 608

-17000 608

Table 3: An example of extrapolation

4.7. Landward Boundary

To calculate the total foredune volume a landward boundary has to be established. The landward

boundary is a coordinate from where the dune height does not change (as much). The dunes

behind this boundary do not change in volume and are therefore not a part of the foredunes. First

8

all height data that are +300 cm NAP and do not contain any NaNs are selected. Then a trend line

is fitted through all the heights on one location through all years. This is done to find the coordinate

where the heights change the least through the years: there where the slope of the fitted trend line

is smallest. Also the RMSE of the trend line is checked. The process starts with the landward most

coordinate. If that point does not meet the criteria, say, a slope of 1 cm per year and a RMSE of 10

cm, the next coordinate seaward is fitted a trend line and checked.

If none of the points of the cross profile meets criteria the criteria are raised and the process starts

again. If the criteria are raised too high, the furthest point landward that is +300 cm NAP and does

not contain any NaNs is chosen.

Figure 2: An example of a profile through the years. The x-axis shows the distance to the sea in cm.

The y-axis shows the height in cm. The landward boundary would be found somewhere between 15000 cm and -20000 cm.

4.8. Calculating total volume

This subscript calculates the total volume per year per profile. From the dune toe to the landward

boundary, the height is multiplied with the length (500 cm). The dune toe is the maximum seaward

coordinate with heights of over 300 cm and is in this method a single cross profile location. The

height multiplied with the length is then summed and this calculation is then repeated for all years

of the profile. Because heights at the dune toe are irregular (the height drops from over 300cm to

under 300 cm) an extra 500 cm in length is added to the volume. Which might not be enough: In

some years the height might not drop under 300 cm, 1000 cm seaward from the stated dune toe.

This method differs from the way Arens et al. (2010) calculate volume. Arens et al.(2010)

interpolate the data at the 300 cm height border. This means coordinate of the dune toe differs over

the years. The coordinate of the dune toe is a static one in this report's script.

9

4.9. Fitting Trend lines

In this script the breakpoints are calculated, the trend lines are fitted and the data is visualized.

First the breakpoints are calculated. The script uses a subfunction that uses the least squares

approach to find 1, 2, or no breakpoints in the data. These breakpoints are then used to fit trend

lines (1, 2 or 3 lines, depending on the number of breakpoints). The RMSE is calculated of these

lines. The RMSE of multiple trend lines are averaged. More trend lines plotted to the data, always

means a lower statistical error, but does not always provide a logical fit.

It is imperative the correct number of trend lines is fitted to the data. To make sure of this, criteria

are formed to fit the correct number of lines. To find these criteria volume developments with clear

breakpoints are looked at. When on visual inspection of clear developments two trend lines need

to be plotted, the criteria are adapted. An example of a criteria could be that the RMSE of 2 trend

lines has to be lower than two times the RMSE of 1 trend line. For example, the RMSE of one

trend line is 30, the averaged RMSE of two trend lines is 20. if a criterium is 2 for this comparison,

then 2 * 20 is higher than 30 and a single trend line is plotted.

Finally the script puts out a figure showing the volume development, the fitted trend lines, the year

of the breakpoints and the (averaged) RMSE of the fitted lines. The second output is a table that

shows the RMSE if a different number of trend lines was plotted.

A maximum of 6 lines can be plotted to keep the figure clear.

10

5. Results

5.1. Organize and repair Jar-Kus data

As described in methods, the best way to organize the data is by deleting incomplete or false

values and adding data. The deleting is done by the script data check: It deletes incomplete

profiles and removes incomplete years. Then data is added by interpolation and extrapolation. The

complete profiles are then ready to use for calculating volume development and fitting trend lines.

5.2. Finding landward boundary and creating volume data

Landward boundaries are found as described in section 4.7. Table 4 shows the landward

boundaries found at 10 profiles at Heemskerk and Texel. The landward boundary is measured in

cm from the RSP( RijksStrandPaal = Reference line for the Dutch coast).

RSP 50: Heemskerk

RSP 50: Heemskerk

Profile

number

Profile number (km)

(km) Landward

Landward boundary

boundary (m)

(m)

50.000

-160

50.000

-160

50.250

-180

50.250

-180

50.500

-190

50.500

-190

50.750

-135

50.750

-135

51.000

-185

51.000

-185

RSP 13: Texel

Profile number (km) Landward boundary (m)

13.120

-180

13.320

-180

13.520

-180

13.720

-210

13.920

-170

13.920

-170

Table 4: Examples of landward boundaries

found.

5.3. Fit trend lines to volume developments

Figure 3 and 4 show the volume developments of multiple kilometers, with trend lines fitted. The

breakpoints and RMSE of all lines are annotated in the upper left corner. These figures are used to

calibrate the criteria, starting with Heemskerk (figure 3), kilometers 48, 49 and 50. Heemskerk was

used because of the three clearly different types of developments, all requiring a different number

of trend lines. Three numbers of trend lines are indeed fitted to the data.

Figure 4 shows the volume development of the foredunes of Texel, kilometer 11, 12, 13 and 14.

Texel was used for further calibration of the criteria because of the breakpoint around 1995 that is

clearly visible in all figures. The trend lines fitted al show a breakpoint around 1995.

After calibration the criteria found for these comparisons are:

The RMSE of three lines has to be 1.46 times smaller than that of two trend lines.

The RMSE of two lines has to be 1.50 times smaller than that of one trend line.

The RMSE of three trend lines has to be 1.67 times smaller than that of one trend lines.

These criteria are put in series. So first the RMSE of three trend lines is compared with that of the

two trend lines. If the RMSE is not smaller the second criteria is compared. Etcetera.

11

Figure 3: Volume development and trend lines of Heemskerk (RSP 48-50).

12

Figure 4: Volume development and trend lines of Texel (RSP 11-14)

13

5.4. Determine spatial effects of a nourishment at a location downstream

The volume development of Groote Keeten (RSP 8-9) is looked at. Groote Keeten is an area

downstream from large nourishments and only had a small nourishment done in 2003. The

nourishment data of the upstream location is compared with the volume development and trend

lines of Groote Keeten.

The nourishment data shows us three types of nourishments: Underwater, beach and dune

nourishments. The underwater nourishments are done in front of the beach. The effect of these

nourishments is not drastic, it takes a while before the sand gets transported into the dunes. And

they might not even be visible in the volume development (Van de Graaff et al., 1991). The beach

and dune nourishments should have a larger impact on the foredune volume development,

because they only depend on aeolian transport for dispersal.

Nourishments Upstream

Year Start profile(km) End profile (km) Nourishment size (m3/m)

1979

11.150

12.800

285

1986

10.825

13.725

428

1986

11.750

12.050

260

1991

11.000

14.000

179

1996

12.200

14.100

242

1996

10.010

12.130

217

2001

11.080

14.010

512

2003

11.100

13.750

165

2003

10.000

16.000

429

2004

11.100

13.740

82

2006

10.000

15.200

308

Nourishment at Groote Keeten

Year Start profile(km) End profile (km) Nourishment size (m3/m)

2003

9.130

9.430

41

Type of nourishment

Underwater nourishment

Beach nourishment

Beach nourishment

Underwater nourishment

Underwater nourishment

Beach nourishment

Beach nourishment

Beach nourishment

Beach nourishment

Dune nourishment

Dune nourishment

Type of nourishment

Underwater nourishment

Table 5: Nourishment data of upstream of and at the Groote Keeten location

Figure 5 shows the volume development of the dunes near Groote Keeten. When compared with

the above nourishment data, it firstly shows that the small nourishment done in 2003 in the area

itself is not visible in the volume data. But it is an underwater nourishment, so it would not make a

clear change in volume. In the kilometer figure, figure 5, no clear changes that could be correlated

to the nourishment are visible. There are some jumps in volume, but those are not in the same

year as a nourishment.

In Figure 6, kilometer 9 is split into the development data of the separate profiles. Kilometer 9 is

the kilometer of the Groote Keeten area closest to the upstream nourishments. So profile 10.000 is

the closest to the upstream nourishments and should be most influenced by them. Note that three

of the profiles show a breakpoint around 1990, although there has not been a significant

nourishment at that year, except for maybe 2 large beach nourishments in 1986. Also the large

beach nourishments of 2003 and the dune nourishment of 2006 does not seem to have a radical

influence on the volume development.

The volume developments do not on visual inspection show clear influence of the nourishments.

14

Figure 5: Volume development and trend lines of Groote Keeten (RSP 8-9).

15

Figure 6: Volume development and trend lines of all the profiles in RSP 9 of Groote Keeten.

6. Discussion

6.1. Organize and repair Jar-Kus data

The deleting of incomplete data and supplementing by means of interpolation and extrapolation

proofs to be an excellent method to get complete profiles for calculation of landward boundaries

and volume development.

The outlier script is not used in the organization of the Jar-Kus data. The script uses mean and

standard deviation values to find outliers. In big arrays, with long profiles, the mean drops so low,

that normal high height valued are seen as outliers. On visual inspection no outliers were detected

in the dataset. It is unsure whether or not an outlier removal script is required.

The database's last year, 2010, contains data that is too incomplete to use. Maybe this is because

the altitude measurements were done badly or maybe the data are not uploaded completely. Also

there is not data available for 2002. 2002 is interpolated completely. A better alternative would be

to leave 2002 empty in the volume development data and graph.

Data check deletes the first few years of a profile if they are too incomplete to work with. To make

sure the kilometer summing does not give a lower volume at those years, those years are not

added at all. This is a good thing, however, the script also deletes those first years in other

kilometer developments that are put in the same graph. In stead, these developments should show

their full data and only the incomplete one should be clipped.

6.2. Finding landward boundary and creating volume data

Tables 6 and 7 show landward boundaries found by this research and by Arens et al. (2010). It

shows us the results of the two calibration areas Heemskerk and Texel midden. The landward

boundaries do not differ very much except for Heemskerk profile 50.750 and Texel profile 13.720.

16

Closer examination of these locations shows the script finding a location that might be different

but is certainly stable. At the Heemskerk location the boundary found has a slope of 1.4 cm with a

RMSE of 13.35 cm.

The Texel boundary is further than found by Arens et al. (2010). This is probably due to excellent

extrapolation. The boundary has a slope of 0.07 cm and a RMSE of 9.08.

RSP 50: Heemskerk

Profile number (km) Landward boundary (m)

50.000

-160

50.250

-180

50.500

-190

50.750

-135

51.000

-185

RSP 13: Texel

Profile number (km) Landward boundary (m)

13.120

-180

13.320

-180

13.520

-180

13.720

-210

13.920

-170

Table 6: The landward boundaries found by

this research.

RSP 50: Heemskerk

Profile number (km) Landward boundary (m)

50.000

-180

50.250

-175

50.500

-175

50.750

-170

51.000

-165

RSP 13: Texel

Profile number (km) Landward boundary (m)

13.120

-180

13.320

-175

13.520

-175

13.720

-175

13.920

-165

Table 7: The landward boundaries found

by Arens et al. (2010).

Although the results seem to be good, a point of discussion is the fact that a point can be super

stable in height, but more landward points are not. So instead of checking only how stable one

point is, that point plus the more landward points need to be checked. In the routing of a more

seaward point is to be checked, the slope and RMSE are added to the more landward points

already checked and it is seen whether or not the slope and RMSE values improve. If these values

improve or stay the same (thus being a stable point), another point more seaward can be checked.

If the slope and RMSE values get worse significantly, that point is less stable and should be

considered as landward boundary.

6.3. Fit trend lines to volume developments

The resulting figures are compared with those of Arens et al. (2010). First the Heemskerk results

are checked (figure 7). The volumes calculated by Arens et al. (2010, figure 8) seem a bit higher

overall, but the shape of the development matches. This might be the result of different landward

boundaries: The boundaries found by this thesis are mostly lower than those found by Arens et al.

(2010), this lowers volume.

The breakpoints differ quite a lot. Arens et al. (2010) breakpoints are found by visual inspection

and probably influenced by the knowledge that a big nourishment has been done at kilometers 49

and 50 at 1996 and 1997. Note that this thesis' results don't show a breakpoint in kilometer 48,

where no nourishment has been done ever. Kilometers 49 and 50 show breakpoints before or after

1997.

While the trend lines of this research might fit better, they don't always have to make things

clearer. For example, the second breakpoint at km 50 in 1994 could be just as well moved to 1996:

1994-1996 don't show any major decline or incline. This would show a clearer effect of the

nourishment.

17

Figure 7: Volume development and trend lines of Heemskerk (RSP 48-50).

Figure 8: Volume development and trend lines of Heemskerk (RSP 48-50) by Arens et al. (2010).

18

The volume development of Texel midden (figure 9) does not seem to differ much from that of

Arens et al. (2010, figure 10). The breakpoints all are around 1994, the same year Arens et al.

(2010) found their breakpoints. However the main difference here is the major decline visible in the

Arens et al. (2010) figure of kilometer 14, the first few years. Further inspection reveals a flaw in

the results. Because this script chooses a steady seaward boundary (the point where the height

drops below 300 cm) for all years, it does not take into account that the seaward boundary could

also change per year. In this case, the seaward boundary for these results is way too far landward

for the first few years, thus showing a lower volume at those years. Seaward boundaries also need

Figure 9: Volume development and trend lines of Texel midden (RSP 11-14).

to be calculated annually.

19

Figure 10: Volume development and trend lines of Texel midden (RSP 11-14) by Arens et al.

(2010).

The criteria are found and calibrated for 7 trend lines. This might not be enough The calibration

should be done more extensively. Although the script works well on other location, more

calibration could improve the applicability of the script.

The trend line criteria should not be in series. Instead they should all be compared to one another.

This would insure an optimal choice of number of trend lines and not a by accident chosen

number, because it was first in line. Also the trend line criteria could be made user adjustable, if for

example more single trend lines have to be fitted.

Visualization could be improved: Separate tables with data, out of the graphs and names of

kilometers that are a bit more than numbers.

6.4. Determine spatial effects of a nourishment at a location downstream

There does not seem to be a lot of effect of nourishments upstream at the Groote Keeten location,

however the visual inspection done is flawed and biased. Statistical tests should be performed to

find correlation between the nourishment data and the volume developments.

20

7. Conclusions

The Jar-Kus dataset contains a lot of faulty, incomplete profiles that have to be repaired or

removed.

A script is developed that will remove the most badly damaged profiles and repair the

others.

The correct coordinates of the landward boundary is found automatically by this script.

It fits 1, 2 or 3 trend lines depending on certain criteria which are developed to make sure

the correct number of lines are fitted.

The script has been tested on some profiles and is shown to work.

There is no influence noticeable of upstream nourishments at a downstream location.

Recommendations:

Landward boundaries should be calculated differently: Not only a single point should be tested for

stability, but all the points landward. The test should start at the most landward point. Then the

second point, more seaward, should be tested. If stability increases or stays more or less the

same, that point is stable too. If stability decreases, that point should be seen as landward

boundary.

The error in volume calculation should be repaired: Seaward boundaries should be calculated per

year and not be a single coordinate for all years.

Input user interface could be improved. The user should be able to choose from a list of available

grid numbers, RSP and profile numbers. Also the user should be able to choose between two

options and not having to type 0 or 1. The input could furthermore be improved by giving it the

option of changing (subscript) parameters, such as the amount of data extrapolated or the criteria

for the number of trend lines fitted.

Output should be visualized better and the output tables should be added to the graph. Local

nourishment data could be added to the graph. The name of the location should be the title of the

graph.

21

8. Literature

Arens, S.M., Mulder, J.P.M., 2008. Dynamisch kustbeheer goed voor veiligheid en natuur. Land +

Water no. 9, 33-35.

Arens, S.M., Puijvelde, van, S.P., Brière, C., 2010. Effecten van suppleties op duinontwikkeling.

Bosschap, bedrijfschap voor bos en natuur, 1-98

Arens, S.M., Wiersma, J., 1994. The Dutch foredunes: Inventory and classification. Journal of

Coastal Research 10(1), 189-202.

De Staat, 2009, Samenwerkingsovereenkomst zandsuppleties natuurbeschermingsorganisaties en

Rijkswaterstaat.

De Ruig, J.H.M., Louisse, C.J., 1991. Sand budget trends and changes along the Holland coast.

Journal of Coastal Research 7(4), 1013-1026.

De Ruig, J.H.M., 1998. Coastline management of the Netherlands: Human use versus natural

dynamics. Journal of Coastal Conservation 4, 127-134.

De Swart, H., 2006. Kustdynamica, Weer, Klimaat en Kust. Meteorologica 15(4): 10-14.

European Commission Publication Office, Natura 2000, 2009, Natura 2000 factsheet.

http://ec.europa.eu/environment/nature/index_en.htm .

McLaughlan, P.F., 2001. Levenberg-Marquardt minimisation. University of Surrey.

Minneboo, F.A.J., 1995. Jaarlijkse Kustmetingen, richtlijnen voor de inwinning, bewerking en

opslag van gegevens van jaarlijkse kustmetingen. Rapport RIKZ – 95.022.

Van de Graaff, J., Niemeyer, H.D., Van Overeem, J., 1991. Beach nourishment, philosophy and

coastal protection policy. Coastal Engineering 16, 3-22.

Van der Burgh, L.M., Wijnberg, K.M., Hulsher, S.J.M.H., Mulder, J.P.M., Koningsveld, van, M,

2007. Linking coastal evolution and super storm dune erosion forecasts. Coastal

Sediments ASCE, 1-14.

Van der Wal, D., 1998. The impact of the grain-size distribution of nourishment sand on aeolian

sand transport. Journal of Coastal Research 14(2), 620-631.

Van der Wal, D., 1999. Modelling aeolian sand transport and morphological development in two

beach nourishment areas. Earth Surface Processes and Landforms 25, 77-92.

Van Puijvelde, S.P., 2010. Classification of Dutch foredunes, Internship report Deltares / Arens

bureau voor Strand en Duinonderzoek / University of Utrecht.

Speybroeck, J., Bonte, D., Courtens, W., Gheskiere, T., Grootaert, P., Maelfait, J.P., Mathys, M.,

Provoost, S., Sabbe, K., Stienen, E.W.M., Van Lancker, V., Vincx, M., Degraer, S.,

2006. Beach nourishment: an ecologically sound coastal defence alternative? A

review. Aquatic Conservation: Marine and Freshwater Ecosystems 16, 419-435

Wilmott, C.J., Ackleson, S.G., Davis, R.E., Feddema, J.J., Klink, K.M. Legates, D.R., O'Donell, J.,

Rowe, C.M., 1985. Statistics for the evaluation and comparison of models. Journal

of Geophysical Research Volume 90, No. C5, 8995-9005.

22

9. Appendix (code)

9.1. Data import profile

%

%

%

%

Main loading script to analyze loose profiles or all profiles in one

kilometer.

N.B. This Script not only gives a figure with significance, it also shows

some extra important data per profile! See Output - Data in Arrays (Below).

%

%

%

%

%

%

%

Created by: Bob van der Zwaag

Part of the BSc thesis: 'The effect of sand nourishments on foredune

sediment volume'

University of Amsterdam

Date: June 2011 ( the database was till 2010)

Contact: bobzwaag@gmail.com

Matlab Version: R2010b

% Input:

% - transect.nc:

% This file contains the raw JarKus-data. It's open source

% data from Rijkswaterstaat (Dutch Coastal maintainance office) and

% it's available at Deltares Open Earth:

http://opendap.deltares.nl:8080/thredds/catalog/opendap/rijkswaterstaat/jarkus/

catalog.html

% Files needed for operation:

% - MexCDF

% transect.nc is a database generated by MexCDf, to open it, MexCDF

% commands have to be added to Matlab system. For more information see:

% http://mexcdf.sourceforge.net/

% - Stixbox

% Stixbox functions are needed. I believe only linreg.m and llsfit.m.

% - Subscripts not native to Matlab

% These are the scripts that where created especially for this thesis.

% - Datacheck.m

% - Outliers.m

% - interpolatie.m

% - extrap.m

% - Landward_Boundary2.m

% - caltotvol.m

% - CalcTrend.m

% - User Input

% Consists of correct grid no. and RSP or Profile No. For more information

% see:

%

http://www.rijkswaterstaat.nl/geotool/geotool_kustlijnkaart.aspx?cookieload=tru

e

% and Arens et al. (2010)

%

%

%

%

%

%

%

%

%

%

%

Output

Output is decribed briefly in this section. The individual outputs

are described more thouroughly in the output sections of the subscripts.

- Figure

This script generates a figure with on the x-axis the years and on

the y-axis the volume. It is filled with the volume data and trend

lines are fitted. The Breakpoints and the siginificance of the lines

are annotated.

- Data in arrays

- idprofiles: the profiles that were used.

- idkicked: The profiles that were removed because they were

23

%

%

%

%

%

%

%

%

%

%

%

%%

incomplete.

- sumnoutliers: The number of outliers removed per run.

- sumnrextrap: the number of rows extrapolated landward

- sumboundary: All landward boundaries

- summinmax: All min & max coordinates landward boundaries

were found between

- sumslope: the slopes of landwardboundaries

- sumRMSE: the RMSEs of boundaries

- RMSE2: The RMSE of all lines(1 line per column). first row

shows the RMSE if one line was fitted, second row if two

lines where fitted and third if three lines were fitted

INITIALIZATION

clc

clear all

close all

% Creating empty arrays for concatenation

totvolsum = [] ;

sumnoutliers = [] ;

sumslope = [] ;

sumRMSE = [] ;

sumnrextrap = [] ;

summinmax = [] ;

sumboundary = [] ;

% Adding paths with neccesary files

addpath ([pwd '/mexcdf/mexnc']);

addpath ([pwd '/mexcdf/snctools']);

javaaddpath([pwd '/netcdfAll-4.2.jar']);

setpref('SNCTOOLS','USE_JAVA',true);

setpref('SNCTOOLS','USE_MEXNC',false);

addpath stixbox

% Loading RWS Data

url = 'transect.nc';

id = nc_varget(url,'id'); % Loading Profile IDs

gridlist = round(id/1000000) ; % Creating list of Grid (2-17)

Raailist = id - (gridlist*1000000) ; % Creating list of profiles

RSPlist = floor(double(Raailist)/100) ; % Creating list of kilometers

%% PROMPT USER FOR LOCATION

% Choose between 1 loose profile or all profiles in one kilometer

prompt = {'Choose profiles per km(enter 1) or loose profiles( enter 0)'} ;

dlg_title = 'Input';

num_lines = 1;

def = {'0'};

answer = inputdlg(prompt,dlg_title,num_lines,def);

Choose = str2double(answer{1}) ; % Answer

if Choose == 1 % If Answer was'1' whole kilometer is examined.

% Enter Grid And RSP(kilometer)

prompt = {'Enter GridNo. (2-13, 15-17):','Enter RSP'};

dlg_title = 'Input';

num_lines = 1;

def = {'6','11'};

answer = inputdlg(prompt,dlg_title,num_lines,def);

grid = str2double(answer{1}) ; % Answer grid

RSP = str2double(answer{2}) ; % Answer RSP

24

loc = find(gridlist == grid & RSPlist == RSP) ; % find location of id in

lists

loc(length(loc)+1) = loc(length(loc))+1 ; % Taking first of next km as well

[loc, i2, lockicked] = Datacheck(loc) ; % checking data for incomplete

profiles

else % If answer was 0, only one raai is examed

% Enter grid and profile no. (see raailist)

prompt = {'Enter GridNo. (2-13, 15-17):','Enter profile 1'};

dlg_title = 'Input';

num_lines = 1;

def = {'7','4925'};

answer = inputdlg(prompt,dlg_title,num_lines,def);

grid = str2double(answer{1}) ; % Answer grid

Raai = str2double(answer{2}) ;% Answer Profile

loc = find(gridlist == grid & Raailist == Raai) ; % find location of id in

lists

[loc, i2, lockicked] = Datacheck(loc) ; % checking data for incomplete

profiles

end

idkicked = id(lockicked(:))' ; % Summing al Profiles that were discarded

idprofiles = id(loc(:)) ;

%% CREATING PROFILE

for ID = id(loc(:))'

% Loading years

profile = [] ; % empty array to make shure everything fits :)

year = nc_varget(url,'time'); % Loading Years

year = round(year/365+1970); % Creating Years

[u,~] = size( year) ; % quantity of years

year = year(1:u-1) ; % Deleting 2010(Incomplete)

[u,~] = size( year) ; % new quantity

% Load Cross Shore Coordinates

XRSP = nc_varget(url, 'cross_shore') ;

% Create Year Numbers

for k=1:u

year_nr(1,k)= find(year == 1964+k);

end

clear k;

% Create transect number of ID

transect_nr = find(id==ID)-1;

% Load Height Data of corresponding profile

for l=1:u

z(:,l)= nc_varget(url,'altitude',[year_nr(l),transect_nr,0],[1,1,-1]);

end

clear l;

% Clear first 10 years because they're incomplete (1965-1975)

z = z(:,10:u-1) ;

year = year(11:u-1) ;

[u,~] = size( year) ;

25

year(u+1) = year(u) +1 ;

[u,~] = size( year) ;

% Multiplying heights and coordinates by 100, so they're in cm.

z= z.*100 ;

XRSP = XRSP .* 100 ;

%Heights that are -0m NAP and inComplete Data(all NaNs through all years

%on one location) are deleted

datamin = min(z,[],2) ; % Finding minimum of height on cross profile

for k = 1:u

nan(:,k) = isnan(z(:,k)) ;

nansum = sum(nan,2) ; % finding quantity of NaNs cross profile

end

com3rows = find(datamin >150 & nansum < u-5 ) ; % find rows that meet

criteria

z = z(min(com3rows):max(com3rows),:); % clip height data

XRSP = XRSP(min(com3rows):max(com3rows),:) ; % clip coordinates

%

%

[z,noutliers] = Outliers(z, 3) ; % remove outliers

sumnoutliers = [sumnoutliers,noutliers] ; % concatenate outliers

% Creating profile array

k=1;

for g=1:2:u*2

profile(:,g)=XRSP(:,1); % coordinates every uneven number

profile(:,g+1)=z(:,k); % height data every even number

k=k+1;

end

clear k g

% Clipping the profile to the smallest one(see Datacheck)

profile2(:,1:i2) = profile(:,(u*2-i2+1):(u*2)) ; % clip

profile = profile2 ;

year2 = year((length(year) - (i2/2-1)):length(year),1) ; % clipperdeclip

year = year2 ;

clear profile2 year2

%% REPAIRING PROFILE

profile = interpolatie(profile) ; %interpolation

[profile, nrextrap] = extrap(profile,1) ; %extrapolation

sumnrextrap = [sumnrextrap,nrextrap] ; %concatenating no. extrapolation

%% CALCULATING VOLUME

[BOUNDARY, slope, RMSE, minmax] = Landward_Boundary2(profile,year) ; %

finding landward boundary

sumslope = [sumslope,slope] ; % concatenate all slopes

sumRMSE = [sumRMSE,RMSE] ; % concatenate all RMSEs

summinmax = [summinmax, minmax'] ; % concatenate all min max boundaries

sumboundary = [sumboundary,BOUNDARY] ; % concatenate all found boundaries

clear z year_nr u i

totvol = caltotvol(profile, BOUNDARY) ; % calculating volume

totvolsum = [totvolsum;totvol] ; % concatenating volume developments

end

%% FITTING TRENDLINES, CALCULATING SIGNIFICANCE & VISUALIZATION

26

% Creating profilenames for visualization.

for u = 1:length(loc) % all locations

name(u) = {num2str(Raailist(loc(u)))} ; % names are number

end

% Fitting trendlines and stuff

RMSE2 = CalcTrend(totvolsum, year', name)

% Cleaning up( keeping only important data for review)

clear Choose Q RSPlist ID Raailist XRSP answer com3rows datamin def dlg_title

grid gridlist i2 i3 loc name nan nansum num_lines prompt totvol totvolsum

transect_nr u lockicked slope minmax noutliers nrextrap RMSE BOUNDARY

27

9.2. Data import kilometer

% Main loading script to analyze kilometer(s) of profiles.

% N.B. This Script does not output a lot of tables with extra information.

% For more detailed information (on RMSE of chosen boundary, the corresponding

slope, the

% minmax boundary tested, the no. outliers the no. extrapolation: please

% use dataimportraai.) please use 'dataimportraai.m'.

%

%

%

%

%

%

%

Created by: Bob van der Zwaag

Part of the BSc thesis: 'The effect of sand nourishments on foredune

sediment volume'

University of Amsterdam

Date: June 2011 ( the database was till 2010)

Contact: bobzwaag@gmail.com

Matlab Version: R2010b

% Input:

% - transect.nc:

% This file contains the raw JarKus-data. It's open source

% data from Rijkswaterstaat (Dutch Coastal maintainance office) and

% it's available at Deltares Open Earth:

http://opendap.deltares.nl:8080/thredds/catalog/opendap/rijkswaterstaat/jarkus/

catalog.html

% Files needed for operation:

% - MexCDF

% transect.nc is a database generated by MexCDf, to open it, MexCDF

% commands have to be added to Matlab system. For more information see:

% http://mexcdf.sourceforge.net/

% - Stixbox

% Stixbox functions are needed. I believe only linreg.m and llsfit.m.

% - Subscripts not native to Matlab

% These are the scripts that where created especially for this thesis.

% - Datacheck.m

% - Outliers.m

% - interpolatie.m

% - extrap.m

% - Landward_Boundary2.m

% - caltotvol.m

% - CalcTrend.m

% - User Input

% Consists of correct grid no. and RSP or Profile No. For more information

% see:

%

http://www.rijkswaterstaat.nl/geotool/geotool_kustlijnkaart.aspx?cookieload=tru

e

% and Arens et al. (2010)

%Output

% Output is decribed briefly in this section. The individual outputs

% are described more thouroughly in the output sections of the subscripts.

% - Figure

% This script generates a figure with on the x-axis the years and on

% the y-axis the volume. It is filled with the volume data and trend

% lines are fitted. The Breakpoints and the siginificance of the lines

% are annotated.

% - Data in arrays

% - idprofiles: the profiles that were used.

% - idkicked: The profiles that were removed because they were

% incomplete.

28

% - RMSE2: The RMSE of all lines(1 line per column). first row

% shows the RMSE if one line was fitted, second row if two

% lines where fitted and third if three lines were fitted

%% INITIALIZATION

clc

clear all

close all

% Creating empty arrays for concatenation

i3 = 10000000000 ; %Datacheck necessary constant

idkicked = [] ;

% Adding Paths with necessary files

addpath ([pwd '/mexcdf/mexnc']);

addpath ([pwd '/mexcdf/snctools']);

javaaddpath([pwd '/netcdfAll-4.2.jar']);

setpref('SNCTOOLS','USE_JAVA',true);

setpref('SNCTOOLS','USE_MEXNC',false);

addpath stixbox

% Loading RWS Data

url = 'transect.nc';

id = nc_varget(url,'id');

gridlist = round(id/1000000) ;

Raailist = id - (gridlist*1000000) ;

RSPlist = floor(double(Raailist)/100) ;

%% PROMPT USER FOR LOCATION OF CALCULATION

% Choose Grid no. and RSP nos.

prompt = {'Enter GridNo. (2-13, 15-17):','Enter RSP 1','Enter RSP 2','Enter RSP

3','Enter RSP 4','Enter RSP 5','Enter RSP 6'};

dlg_title = 'Input';

num_lines = 1;

def = {'6','11','12','','','',''};

answer = inputdlg(prompt,dlg_title,num_lines,def);

grid = str2num(answer{1}) ; % Answer Gridno.

% Answer RSP

for k = 2:length(answer)

if ~isempty(answer{k}) % Ignoring empty input

RSP(k-1) = str2num(answer{k}) ;

end

end

%% CREATING PROFILE

% Kilometer Loop

for a = 1: length(RSP)

nrRSP = RSP(a)

loc = find(gridlist == grid & RSPlist == nrRSP) ; % find location of id in

lists

loc(length(loc)+1) = loc(length(loc))+1 ; % Taking first of next km too

[loc, i2, lockicked] = Datacheck(loc) ; % Checking Data for incomplete

profiles

totvolsum = [] ; % (re) emptying the voulume data

idkicked = [idkicked, id(lockicked(:))'] ; % Summing all discarded profiles

29

idprofiles = id(loc(:)) ;

% Profiles per km loop

for ID = id(loc(:))'

% Loading Years

profile = [] ; % Empty array to make shure everything fits

year = nc_varget(url,'time'); % Loading years

year = round(year/365+1970); % Creating Years

[u,~] = size( year) ; % No of years

year = year(1:u-1) ; % Deleting 2010(incompete)

[u,~] = size( year) ; % New count

% Load Cross Shore Coordinates

XRSP = nc_varget(url, 'cross_shore') ;

% Create Year Numbers

for k=1:u

year_nr(1,k)= find(year == 1964+k);

end

clear k;

% Create transect number of ID

transect_nr = find(id==ID)-1;

% Load Height Data of corresponding profile

for l=1:u

z(:,l)= nc_varget(url,'altitude',[year_nr(l),transect_nr,0],[1,1,1]);

end

clear l;

% Clear first 10 years because they're incomplete

z = z(:,10:u-1) ;

year = year(11:u-1) ;

[u,~] = size( year) ;

year(u+1) = year(u) +1 ;

[u,~] = size( year) ;

% Multiplying by 100 so everything is in cm.

z= z.*100 ;

XRSP = XRSP .* 100 ;

%Heights that are -0m NAP and inComplete Data(all NaNs through all

years

%on one location) are deleted

datamin = nanmin(z,[],2) ; % Finding minimum of heght per cross profile

nan = [] ;

for k = 1:u

nan(:,k) = isnan(z(:,k)) ;

nansum = sum(nan,2) ; % Finding Quantity of NaNs per cross profile

end

com3rows = find(datamin >150 & nansum < u - 5 ) ; % Find rows that meet

criteria

z = z(min(com3rows):max(com3rows),:); % clip height data

XRSP = XRSP(min(com3rows):max(com3rows),:) ; % clip coordinates

% [z,noutliers] = Outliers(z, 3) ;

% Creating profile array

k=1;

30

for g=1:2:u*2

profile(:,g)=XRSP(:,1);

profile(:,g+1)=z(:,k);

k=k+1;

end

clear k g

% Clipping profile to the smallest dataset of all profiles

if i3 < i2 % Clipping per km

i2 = i3 ;

end

profile2(:,1:i2) = profile(:,(u*2-i2+1):(u*2)) ;

profile = profile2 ;

year2 = year((length(year) - (i2/2-1)):length(year),1) ;

year = year2 ;

clear profile2 year2

%% REPAIRING PROFILE

profile = interpolatie(profile) ; % Interpolation

[profile, nrextrap] = extrap(profile,1) ; % Extrapolation

%% CALCULATING VOLUME

[BOUNDARY, slope, RMSE, minmax] = Landward_Boundary2(profile,year) ; %

Finding Landward Boundary

clear z year_nr u i

totvol = caltotvol(profile, BOUNDARY) ; % Calculating Volume

totvolsum = [totvolsum;totvol] ; % Concatenating Volume Development

end

i3 = i2 ;

km(a,:) = nanmean(totvolsum(1:length(loc),:)) ; % Concatenaing volume data

per km

totvolsum = [] ;

name(a) = {num2str(nrRSP)} ; % concatenating names

end

%% FITTING TRENDLINES

RMSE2 = CalcTrend(km, year', name)

clear Choose Q RSPlist ID Raailist XRSP answer com3rows datamin def dlg_title

grid gridlist i2 i3 name nan nansum num_lines prompt totvol totvolsum

transect_nr u lockicked a minmax noutliers nrRSP nrextrap slope

31

9.3. Data check

function [loc2,i2, lockicked] = Datacheck(loc)

% This script check data for completeness and displays which profiles have

% to be removed

%

%

%

%

%

%

%

Created by: Bob van der Zwaag

Part of the BSc thesis: 'The effect of sand nourishments on foredune

sediment volume'

University of Amsterdam

Date: June 2011 ( the database was till 2010)

Contact: bobzwaag@gmail.com

Matlab Version: R2010b

% Input

% - Location Data (user) input from import script

% - transect.nc:

% This file contains the raw JarKus-data. It's open source

% data from Rijkswaterstaat (Dutch Coastal maintainance office) and

% it's available at Deltares Open Earth:

http://opendap.deltares.nl:8080/thredds/catalog/opendap/rijkswaterstaat/jarkus/

catalog.html

% Files needed for operation:

% - MexCDF

% transect.nc is a database generated by MexCDf, to open it, MexCDF

% commands have to be added to Matlab system. For more information see:

% http://mexcdf.sourceforge.net/

% - Stixbox

% Stixbox functions are needed. I believe only linreg.m and llsfit.m.

% - Subscripts not native to Matlab

% These are the scripts that where created especially for this thesis.

% - Outliers.m

% - interpolatie.m

% - extrap.m

%

%

%

%

%

%

Output

- loc2: the profiles that were used.

- lockicked: The profiles that were removed because they were

incomplete.

- i2: The number of remaining years after the first rows are

checked: The Years that are too incomplete are deleted.

%% INIT

% Creating Empty arrays for concatenation

a2 = [] ;

i2 = [] ;

lockicked = [] ;

% Loading RWS data

url = 'transect.nc';

id = nc_varget(url,'id');

%% CREATING PROFILE

for a = 1: length(loc) % loop all profiles

ID = id(loc(a)) ; % profile number

32

% Loading Years

profile = [] ; % Empty Array to make sure everything fits

year = nc_varget(url,'time'); % Loading years

year = round(year/365+1970); % Creating years

[u,~] = size( year) ; % Number of years

year = year(1:u-1) ; % Deleting 2010

[u,~] = size( year) ; % New Quantity

% Load Cross Shore Coordinates

XRSP = nc_varget(url, 'cross_shore') ;

% Create Year Numbers

for k=1:u

year_nr(1,k)= find(year == 1964+k);

end

clear k;

% Create transect number of ID

transect_nr = find(id==ID)-1;

clear z

% Load Height Data of corresponding profile

for l=1:u

z(:,l)= nc_varget(url,'altitude',[year_nr(l),transect_nr,0],[1,1,-1]);

end

clear l;

%Clear first 10 years because they're incomplete

z = z(:,10:u-1) ;

year = year(11:u-1) ;

[u,~] = size( year) ;

year(u+1) = year(u) +1 ;

[u,~] = size( year) ;

% Multiplying heights and coords by 100, so the data is in cm

z= z.*100 ;

XRSP = XRSP .* 100 ;

%Heights that are -0m NAP and inComplete Data(all NaNs through all years

%on one location) are deleted

datamin = min(z,[],2) ; % Finding minimum height on cross profiles

nan = [] ;

for k = 1:u

nan(:,k) = isnan(z(:,k)) ;

nansum = sum(nan,2) ; % Finding Quantity of NaNs on cross profiles

end

com3rows = find(datamin >200 & nansum < length (year)-5 ) ;% find Rows that

meet criteria

if length(com3rows) < 5 % If not enough rows (5) meet criteria, delete that

profile

a2 = [a2,a ] ;

'kicked out Raai no.:' ;

loc(a) ;

lockicked = [lockicked, loc(a)] ;

i2 = [i2,0]

;

else

z = z(min(com3rows):max(com3rows),:); % Clip height data

XRSP = XRSP(min(com3rows):max(com3rows),:) ; % Clip coordinates

33

%

[z,noutliers] = outliers(z, 3) ;

% Creating Profile Array

k=1;

for g=1:2:u*2

profile(:,g)=XRSP(:,1); % Coordinates every uneven number

profile(:,g+1)=z(:,k); % Height data every even number

k=k+1;

end

%% REPAIRING PROFILE

profile = interpolatie(profile) ; % INterpolation

[profile, nrextrap] = extrap(profile,1) ; % Extrapolation

%% MORE CHECKING

% Kick whole raai

nan = [] ;

for k = 1:u

nan(:,k) = isnan(profile(:,(k*2))) ;

nansum = sum(nan,1) ; % Find NaNs per column

end

clear i

[u,i] = size(profile) ;

% If number of columns with only NaNs is bigger than number of years /5

% or if the number of NANs in last column is bigger than columnsize -10

if (numel(find(nansum == u))) > (i/10) | nansum(i/2) > u-10

a2 = [a2,a ] ; % Profile number from list of locs

'kicked out Raai no.:' ;

loc(a) ; % profile

lockicked = [lockicked, loc(a)] ; % Add to lockicked

i2 = [i2,i]

; % add profile size to list

% kick first years

else

clear i

[u,i] = size(profile) ;

b=find(isnan(profile(:,2))) ;% calculating no. NaNs in first column

while numel(b)>u-15 % checking if no NaNs is less than size - 15

for a = 2 % if it is: check no NaNs

b = find(isnan(profile(:,a))) ;

if numel(b)>u-15

profile = profile(:,3:i) ; % CLip profile (Making

column 4 the new column 2)

year = year(2:length(year),1) ; % CLip years

end

end

clear i

[u,i] = size(profile) ; % Checking new size

end

i2 = [i2,i]

;% add i to list(clipped profile size)

end

end

end

%% FINALIZING

i2(a2) = [] ; % Delete all size data of deleted profiles

i2 = min(i2) ; % Finding min size

clear k

loc(a2) = [] ;

34

loc2 = loc ;

35

9.4. Outlier Removal

function [data, noutsum3, tel] = Outliers(data,s)

%% REMOVING OUTLIERS, s TIMES STANDARDDEVIATION OF MEAN REPLACING THEM WITH

NANS

% data is an array of data

%s = standarddeviation used to detect outliers. (if left empty it is 3).

%Nout is the number of outliers removed

%tel is the number of runs it does, in case of outlier lowers mean too much

%it finds new outliers, lowering mean etc etc.

%Data is the dataset you put in, with NaNs instead of outliers.

%The NaNs should be interpolated.

%% INIT

if isempty(s), s = 3; end

noutsum = 0 ;

noutsum2 = 2 ;

noutsum3 = 0 ;

[~,i] = size(data) ;

tel = 0 ;

%% LOOP

while noutsum2 > 0 % While there are still outliers in de data

% Calculate the mean and the standard deviation

% of each data column in the matrix

for k = 2: i

year = data(:,k) ;

%Delete first of column(year)

year(1) = [] ;

%Calculate mu and s

mu = nanmean(year);

sigma = nanstd(year) ;

[n,~] = size(year);

% Create a matrix of mean values by

% replicating the mu vector for n rows

MeanMat = repmat(mu,n,1);

% Create a matrix of standard deviation values by

% replicating the sigma vector for n rows

SigmaMat = repmat(sigma,n,1);

% Create a matrix of zeros and ones, where ones indicate

% the location of outliers

outliers = abs(year - MeanMat) > s*SigmaMat;

% Calculate the number of outliers in each column

nout = sum(outliers) ;

%Total number of outliers in data

noutsum = [noutsum, nout] ;

noutsum2 = sum(noutsum) ;

%Find outliers location

a = find(outliers == 1) ;

%Replace by NaNs(a+1 because of the deletion of year(row 1)

data(a+1,k) = NaN ;

end

tel = tel+1 ;

% Total no outliers removed

noutsum3 = noutsum3+noutsum2 ;

noutsum = [] ;

end

36

%Cleaning up

clear MeanMat SigmaMat a k mu n nout noutsum noutsum2 outliers p sigma year

37

9.5. Interpolation

function [profile] = interpolatie(profile)

% Interpolate to get rid of NaN's during years:

% Thanks to S. Van Puijvelde!

nanny=isnan(profile);

for k=2:length(nanny(1,:))

for m=2:length(nanny(:,1))-3

if nanny(m,k) == 1 && nanny(m+1,k) == 0

profile(m,k)=(profile(m-1,k)+profile(m+1,k))/2;

elseif nanny(m,k) == 1 && nanny(m+1,k) == 1 && nanny(m+2,k) == 0

profile(m,k)=(profile(m-1,k)+profile(m+2,k)-profile(m-1,k))/3;

profile(m+1,k)=profile(m,k)+nanny(m+2,k)/2;

elseif nanny(m,k) == 1 && nanny(m+1,k) == 1 && nanny(m+2,k) == 1 &&

nanny(m+3,k) == 0

profile(m,k)=(profile(m-1,k)+profile(m+3,k)-profile(m-1,k))/4;

profile(m+1,k)=(profile(m,k)+profile(m+3,k)-profile(m,k))/3;

profile(m+2,k)=profile(m+1,k)+nanny(m+3,k)/2;

else profile(m,k)=profile(m,k);

end

end

end

clear nanny k m;

% moving across length

for p=4:2:length(profile(1,:)) %Moving columns

first=isnan(profile(:,p)); %establishing NaN's of first column(column p)

if sum(first) ~=0 %If sum of NaNs first column is not 0

cellstrt=find(first==1); % Finding NaNs in column

nro=cellstrt(1);nrl=cellstrt(end); % Starting at first NaN & ending at last

findnan=isnan(profile); % Find NaNs in whole profile

for k=p:2:length(profile(1,:))-2 %moving across columns

for m=nro:nrl; %moving across rows

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==0

profile(m,k)=(profile(m,k-2)+profile(m,k+2))/2;

end

end

end

for k=p:2:length(profile(1,:))-4

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+4)-profile(m,k2))/3);

profile(m,k+2)=(profile(m,k)+profile(m,k+4))/2;

end

end

end

for k=p:2:length(profile(1,:))-6

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+6)-profile(m,k2))/4);

profile(m,k+2)=(profile(m,k))+((profile(m,k+6)profile(m,k))/3);

38

profile(m,k+4)=(profile(m,k+2)+profile(m,k+6))/2;

end

end

end

for k=p:2:length(profile(1,:))-8

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==1 && findnan(m,k+8)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+8)-profile(m,k2))/5);

profile(m,k+2)=(profile(m,k))+((profile(m,k+8)profile(m,k))/4);

profile(m,k+4)=(profile(m,k+2))+((profile(m,k+8)profile(m,k+2))/3);

profile(m,k+6)=(profile(m,k+4)+profile(m,k+8))/2;

end

end

end

for k=p:2:length(profile(1,:))-10

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==1 && findnan(m,k+8)==1 &&

findnan(m,k+10)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+10)-profile(m,k2))/6);

profile(m,k+2)=(profile(m,k))+((profile(m,k+10)profile(m,k))/5);

profile(m,k+4)=(profile(m,k+2))+((profile(m,k+10)profile(m,k+2))/4);

profile(m,k+6)=(profile(m,k+4))+((profile(m,k+10)profile(m,k+4))/3);

profile(m,k+8)=(profile(m,k+6)+profile(m,k+10))/2;

end

end

end

for k=p:2:length(profile(1,:))-12

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==1 && findnan(m,k+8)==1 &&

findnan(m,k+10)==1 && findnan(m,k+12)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+12)profile(m,k-2))/7);

profile(m,k+2)=(profile(m,k))+((profile(m,k+12)profile(m,k))/6);

profile(m,k+4)=(profile(m,k+2))+((profile(m,k+12)profile(m,k+2))/5);

profile(m,k+6)=(profile(m,k+4))+((profile(m,k+12)profile(m,k+4))/4);

profile(m,k+8)=(profile(m,k+6))+((profile(m,k+12)profile(m,k+6))/3);

profile(m,k+10)=(profile(m,k+8)+profile(m,k+12))/2;

end

end

end

for k=p:2:length(profile(1,:))-14

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==1 && findnan(m,k+8)==1 &&

findnan(m,k+10)==1 && findnan(m,k+12)==1 && findnan(m,k+14)==0

39

profile(m,k)=(profile(m,k-2))+((profile(m,k+14)profile(m,k-2))/8);

profile(m,k+2)=(profile(m,k))+((profile(m,k+14)profile(m,k))/7);

profile(m,k+4)=(profile(m,k+2))+((profile(m,k+14)profile(m,k+2))/6);

profile(m,k+6)=(profile(m,k+4))+((profile(m,k+14)profile(m,k+4))/5);

profile(m,k+8)=(profile(m,k+6))+((profile(m,k+14)profile(m,k+6))/4);

profile(m,k+10)=(profile(m,k+8))+((profile(m,k+14)profile(m,k+8))/3);

profile(m,k+12)=(profile(m,k+10)+profile(m,k+14))/2;

end

end

end

for k=p:2:length(profile(1,:))-16

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==1 && findnan(m,k+8)==1 &&

findnan(m,k+10)==1 && findnan(m,k+12)==1 && findnan(m,k+14)==1 &&

findnan(m,k+16)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+16)profile(m,k-2))/9);

profile(m,k+2)=(profile(m,k))+((profile(m,k+16)profile(m,k))/8);

profile(m,k+4)=(profile(m,k+2))+((profile(m,k+16)profile(m,k+2))/7);

profile(m,k+6)=(profile(m,k+4))+((profile(m,k+16)profile(m,k+4))/6);

profile(m,k+8)=(profile(m,k+6))+((profile(m,k+16)profile(m,k+6))/5);

profile(m,k+10)=(profile(m,k+8))+((profile(m,k+16)profile(m,k+8))/4);

profile(m,k+12)=(profile(m,k+10))+((profile(m,k+16)profile(m,k+10))/3);

profile(m,k+14)=(profile(m,k+12)+profile(m,k+16))/2;

end

end

end

for k=p:2:length(profile(1,:))-18

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==1 && findnan(m,k+8)==1 &&

findnan(m,k+10)==1 && findnan(m,k+12)==1 && findnan(m,k+14)==1 &&

findnan(m,k+16)==1 && findnan(m,k+18)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+18)profile(m,k-2))/10);

profile(m,k+2)=(profile(m,k))+((profile(m,k+18)profile(m,k))/9);

profile(m,k+4)=(profile(m,k+2))+((profile(m,k+18)profile(m,k+2))/8);

profile(m,k+6)=(profile(m,k+4))+((profile(m,k+18)profile(m,k+4))/7);

profile(m,k+8)=(profile(m,k+6))+((profile(m,k+18)profile(m,k+6))/6);

profile(m,k+10)=(profile(m,k+8))+((profile(m,k+18)profile(m,k+8))/5);

profile(m,k+12)=(profile(m,k+10))+((profile(m,k+18)profile(m,k+10))/4);

profile(m,k+14)=(profile(m,k+12))+((profile(m,k+18)-

40

profile(m,k+12))/3);

profile(m,k+16)=(profile(m,k+14)+profile(m,k+18))/2;

end

end

end

for k=p:2:length(profile(1,:))-20

for m=nro:nrl;

if findnan(m,k-2)==0 && findnan(m,k)==1 && findnan(m,k+2)==1 &&

findnan(m,k+4)==1 && findnan(m,k+6)==1 && findnan(m,k+8)==1 &&

findnan(m,k+10)==1 && findnan(m,k+12)==1 && findnan(m,k+14)==1 &&

findnan(m,k+16)==1 && findnan(m,k+18)==1 && findnan(m,k+20)==0

profile(m,k)=(profile(m,k-2))+((profile(m,k+20)profile(m,k-2))/11);

profile(m,k+2)=(profile(m,k))+((profile(m,k+20)profile(m,k))/10);

profile(m,k+4)=(profile(m,k+2))+((profile(m,k+20)profile(m,k+2))/9);

profile(m,k+6)=(profile(m,k+4))+((profile(m,k+20)profile(m,k+4))/8);

profile(m,k+8)=(profile(m,k+6))+((profile(m,k+20)profile(m,k+6))/7);

profile(m,k+10)=(profile(m,k+8))+((profile(m,k+20)profile(m,k+8))/6);

profile(m,k+12)=(profile(m,k+10))+((profile(m,k+20)profile(m,k+10))/5);

profile(m,k+14)=(profile(m,k+12))+((profile(m,k+20)profile(m,k+12))/4);

profile(m,k+16)=(profile(m,k+14))+((profile(m,k+20)profile(m,k+14))/3);

profile(m,k+18)=(profile(m,k+16)+profile(m,k+20))/2;

end

end

end

clear findnan nxt ngnxt first

end

clear nro m p k cellstrt nrl

end

41

9.6. Extrapolation

function [profile, t] = extrap(profile, z)

%profile is the profile in which extrapolation takes place. Note that the

%extrapolation only takes place at the lowest rows, landinward. it is

%extrapolated to increase the number of data points that are above 3 meters

%and doesn't contain Nan's

%z can be 1 or left empty. 1 means the extrapolation is done linearly,

%everything else meanss it is done by cubic spline method.

dataclip = profile ;

[u,i] = size(profile) ;

t = 0 ;

if isempty(z) == 1

z = 0 ;

end

for k = 1:u

nan(k,:) = isnan(dataclip(k,:)) ;

nansum = sum(nan,2) ;

end

datamin = min(dataclip(:,2:2:i),[],2) ;

com3rows = find(datamin >300 & nansum ==0 ) ;

%Furthest Coordinate Landward

minrow = min(com3rows) ;