Efficient protocols in Complex networks

Danny Dolev (Hebrew University)

Shlomo Havlin (Bar Ilan University)

(Supported by ISOC)

The Internet is by far the largest distributed system mankind has ever built. The number of nodes and

links is constantly increasing at an amazing speed. The future Internet raises new challenges due to the

scale and seemingly unstructured nature of the network. A fundamental question in Internet research

is which algorithms and protocols are suitable for such a system. What theoretical ideas

can be used to help improve the Internet?

The project has led to many new observations, algorithms and theoretical studies that have made

a lasting impact on the research community. Many research papers were written, and the theses

of several PhD and MSc students focused on topics covered by this research.

Among other things, we investigated new protocols for search in complex networks. The ability to

perform an efficient search is of great importance in many real systems (e.g. social networks), and in

particular in communication networks. Naive methods which store the global network connectivity at

each node are not scalable, and therefore new schemes have to be considered, in which the memory

requirement at the nodes (routing table) is bounded at the cost of slightly decreasing the quality of

routing. We suggested a new method for searching when such a constraint exists. We assign new

names to nodes ('labelling') based on the path from each node to its closest hub (a node with high

connectivity). The new names are expected to be short since in real networks each node is within a

very short distance from one of the hubs. We were able to significantly reduce the amount of

information stored at the nodes, such that the required memory scales only logarithmically

with the network size. Due to the special properties of the hub-containing network we analyzed, the

actual paths used in the scheme are very close in distance to the shortest ones. We gave theoretical

arguments why the method is expected to be efficient, and bounded the average length of the paths

taken. We also considered a distributed version of the algorithm. This should make our method

extremely useful for realistic systems such as the Internet.
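
The labelling idea can be illustrated with a minimal Python sketch. It is not the project's actual scheme: it assumes a single hub (the highest-degree node) instead of several, a small hypothetical graph `adj`, and hypothetical helper names `hub_labels` and `route`. Each node's new name is the path from the hub to that node, and a message is routed using only the source and destination names.

```python
from collections import deque

def hub_labels(adj, hub):
    """BFS from the hub; each node's label is the path hub -> node."""
    labels = {hub: (hub,)}
    queue = deque([hub])
    while queue:
        u = queue.popleft()
        for v in adj[u]:
            if v not in labels:
                labels[v] = labels[u] + (v,)
                queue.append(v)
    return labels

def route(labels, src, dst):
    """Route using only the two labels: climb from src toward the hub
    until reaching a node that also lies on dst's label, then descend."""
    up, down = labels[src], labels[dst]
    # Length of the common prefix of the two labels.
    k = 0
    while k < min(len(up), len(down)) and up[k] == down[k]:
        k += 1
    # Climb src -> last common node, then descend to dst.
    return list(reversed(up[k - 1:])) + list(down[k:])

# Hypothetical toy network: node 0 is the hub (highest degree).
adj = {0: [1, 2, 3], 1: [0, 4], 2: [0, 5], 3: [0], 4: [1], 5: [2]}
hub = max(adj, key=lambda n: len(adj[n]))
labels = hub_labels(adj, hub)
print(labels[4])            # (0, 1, 4)
print(route(labels, 4, 5))  # [4, 1, 0, 2, 5]
```

In this sketch a node stores only its own label, whose length is its distance to the hub, rather than a table of the whole network. The routed path may be longer than the shortest one when two nodes are close to each other but far from the hub.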

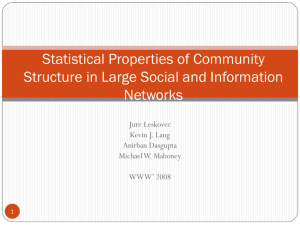

To deepen our understanding of the characteristics of real communication networks, we studied a

new map of the Internet (at the autonomous systems level) that was obtained with exclusive data

from the DIMES project. In this project, the Internet was measured through the collaboration of

many volunteers distributed worldwide. We introduced and used the method of "k-shell

decomposition", together with methods of percolation theory and fractal geometry, to construct a model for the

structure of the Internet. In the k-shell method, the network nodes are peeled layer by layer in

ascending order of their number of connections into deeper shells. We used results on the statistics

of the network shells to separate, in a unique and fast way, the Internet into three subcomponents: (i)

a nucleus that is a small (~100 nodes), very well connected, globally distributed sub-network; (ii) a

fractal subcomponent that is able to connect the bulk of the Internet without congesting the nucleus,

with self-similar properties and critical exponents predicted from percolation theory; and (iii)

dendrite-like structures, usually isolated nodes that are connected to the rest of the network through

the nucleus only. The resulting structure is illustrated schematically as a jellyfish with the nucleus at

the center. We showed that our method of decomposition is robust and provides insight into the

underlying structure of the Internet and its functional consequences. Our approach of decomposing

the network is general and is also useful when studying other complex networks.
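
The peeling step of the k-shell method can be sketched as follows. This is the standard pruning procedure written in plain Python under stated assumptions: the function name `k_shells` and the toy graph are hypothetical, and the sketch only assigns shell indices; it does not reproduce the further separation into nucleus, fractal component and dendrites.

```python
def k_shells(adj):
    """Return {node: shell index} by iteratively pruning low-degree nodes."""
    degree = {u: len(vs) for u, vs in adj.items()}
    remaining = set(adj)
    shell = {}
    k = 0
    while remaining:
        # Nodes whose current degree is <= k are peeled into shell k.
        peel = [u for u in remaining if degree[u] <= k]
        if not peel:
            k += 1
            continue
        while peel:
            u = peel.pop()
            if u not in remaining:
                continue
            remaining.discard(u)
            shell[u] = k
            # Removing u lowers its neighbours' degrees; they may now
            # fall into the same shell.
            for v in adj[u]:
                if v in remaining:
                    degree[v] -= 1
                    if degree[v] <= k:
                        peel.append(v)
    return shell

# Hypothetical toy graph: a triangle (0, 1, 2) with a chain 0 - 3 - 4 attached.
adj = {0: [1, 2, 3], 1: [0, 2], 2: [0, 1], 3: [0, 4], 4: [3]}
shells = k_shells(adj)
print(sorted(shells.items()))  # [(0, 2), (1, 2), (2, 2), (3, 1), (4, 1)]
```

In this toy example the triangle forms the deepest shell, which plays the role of the nucleus candidate, while the attached chain is peeled first.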

In an effort to model the transport properties of real and random networks, we studied the

distribution of flow and electrical conductance in these networks. Our model networks are scale-free

networks (random networks with a broad distribution of degrees) and Erdős-Rényi networks (random

networks where each link exists independently with small probability, leading to a narrow, Poisson

distribution of degrees). Our theoretical analysis for scale-free networks predicts a power-law tail

distribution for the conductance and flow in the network. We also found an expression for the decay

exponent. The power-law tail of the conductance distribution leads to large values of the conductance G, thereby significantly improving the

transport in scale-free networks, compared to Erdős-Rényi networks where the tail of the

conductance distribution decays exponentially. We then studied the case of many sources and sinks,

where the transport is defined between two groups of nodes. This corresponds to exchange of files

between many users in parallel. We found a fundamental difference between the conductance and

flow when considering the quality of the transport with respect to the number of sources, due to the

different dependence on the distance travelled. We also found an optimal number of sources, or

users, for the flow case, which is supported by theoretical arguments.
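
As a rough illustration of the flow quantity, the sketch below computes the maximum flow between one source and one sink. It rests on assumptions not stated in the text: every link has unit capacity, so the flow equals the number of link-disjoint paths, and the flow is taken to be the maximum flow. The function name `max_flow_unit` and the toy graph are hypothetical, and the conductance case (which would require solving Kirchhoff's equations) is not covered.

```python
from collections import deque

def max_flow_unit(adj, source, sink):
    """Maximum flow between two distinct nodes of an undirected graph
    with unit-capacity links (augmenting paths found by BFS)."""
    # Each undirected link contributes capacity 1 in both directions.
    cap = {(u, v): 1 for u in adj for v in adj[u]}
    flow = 0
    while True:
        # BFS for a path with remaining capacity.
        parent = {source: None}
        queue = deque([source])
        while queue and sink not in parent:
            u = queue.popleft()
            for v in adj[u]:
                if v not in parent and cap[(u, v)] > 0:
                    parent[v] = u
                    queue.append(v)
        if sink not in parent:
            return flow
        # Push one unit of flow along the path found.
        v = sink
        while parent[v] is not None:
            u = parent[v]
            cap[(u, v)] -= 1
            cap[(v, u)] += 1
            v = u
        flow += 1

# Hypothetical toy graph: two disjoint routes from 0 to 3, plus a leaf 4.
adj = {0: [1, 2], 1: [0, 3], 2: [0, 3], 3: [1, 2, 4], 4: [3]}
print(max_flow_unit(adj, 0, 3))  # 2
print(max_flow_unit(adj, 0, 4))  # 1
```

The second call shows how a single low-degree endpoint limits the flow regardless of how well connected the rest of the network is.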

A complementary topic we studied is the use of belief propagation in a large distributed network. Belief

propagation (BP, also known as the sum-product algorithm) is a powerful and efficient message-passing tool for

solving, exactly or approximately, inference problems in probabilistic graphical models. The

underlying essence of estimation theory is to detect a hidden input to a channel from its observed

output. The channel can be represented as a certain graphical model, while the detection of the

channel input is equivalent to performing inference in the corresponding graph. In one part of our

research, the analogy between message-passing inference and estimation is further strengthened,

unveiling a surprising link among disciplines. In spite of its significant role in estimation theory, linear

detection has never been explicitly linked to BP, in contrast to optimal MAP detection and several

sub-optimal nonlinear detection techniques. In this work, we reformulated the general linear detector

as a Gaussian belief propagation (GaBP) algorithm. This message-passing framework is not limited to

the large-system limit and is suitable for channels with arbitrary prior input distribution. Revealing

this missing link allows for a distributed implementation of the linear detector, circumventing the

need for potentially cumbersome direct matrix inversion (via, e.g., Gaussian elimination).
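
To make the connection concrete, the following sketch applies Gaussian belief propagation to a linear system A x = b, which is the computation a linear detector would otherwise perform by matrix inversion. It is a simplified illustration, not the algorithm as published: the function name `gabp_solve`, the synchronous update schedule, the fixed iteration count and the example matrix are assumptions, and A is assumed symmetric and strictly diagonally dominant so that the iteration converges.

```python
def gabp_solve(A, b, iterations=100):
    """Approximate the solution of A x = b with Gaussian belief propagation.

    A is a symmetric matrix (list of lists) and b a list. Convergence is
    assumed, e.g. because A is strictly diagonally dominant.
    """
    n = len(b)
    # P[i][j] and h[i][j] hold the precision and the precision-weighted
    # mean of the message sent from node i to node j (zero = no message).
    P = [[0.0] * n for _ in range(n)]
    h = [[0.0] * n for _ in range(n)]
    for _ in range(iterations):
        new_P = [[0.0] * n for _ in range(n)]
        new_h = [[0.0] * n for _ in range(n)]
        for i in range(n):
            for j in range(n):
                if i == j or A[i][j] == 0:
                    continue  # messages travel only along nonzero entries
                # Combine node i's own term with all incoming messages
                # except the one that came from j.
                prec = A[i][i] + sum(P[k][i] for k in range(n) if k not in (i, j))
                mean_w = b[i] + sum(h[k][i] for k in range(n) if k not in (i, j))
                new_P[i][j] = -A[i][j] * A[j][i] / prec
                new_h[i][j] = -A[j][i] * mean_w / prec
        P, h = new_P, new_h
    # The marginal mean at node i is the i-th entry of the solution.
    x = []
    for i in range(n):
        prec = A[i][i] + sum(P[k][i] for k in range(n) if k != i)
        mean_w = b[i] + sum(h[k][i] for k in range(n) if k != i)
        x.append(mean_w / prec)
    return x

# Hypothetical example: a small diagonally dominant system.
A = [[4.0, 1.0, 1.0], [1.0, 5.0, 2.0], [1.0, 2.0, 6.0]]
b = [6.0, 8.0, 9.0]
x = gabp_solve(A, b)
residual = [sum(A[i][j] * x[j] for j in range(3)) - b[i] for i in range(3)]
print(x, residual)
```

Each message depends only on quantities held by one node and its neighbours, which is what makes a distributed implementation possible; in this sketch the nodes are simply simulated in one loop.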

As a final note, we also studied the problem of distributing streaming media to a large and dynamic

network of nodes. We developed an unstructured solution where stream segments are given priority

based on their real-time scheduling constraints. We modeled the problem using a probabilistic graphical

model and ran a distributed inference protocol to solve it. The solution is used to determine the

best schedule for the transfer of data satisfying the real-time constraints. Our protocol is completely

distributed and scalable to very large networks. It doesn’t assume any predefined topology, and is

therefore fault tolerant. Unlike many of the proposed systems, which are optimized for the efficient

transfer of either bulk data or real-time streaming, we proposed a novel method for the efficient

transfer of both streaming media and bulk data. We demonstrated the applicability of our approach

using simulations over both synthetic topologies and real-life topologies.