failure - Department of Earth and Planetary Sciences

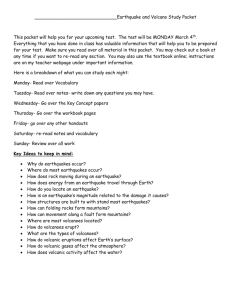
