What Is the Prisoner’s Dilemma?


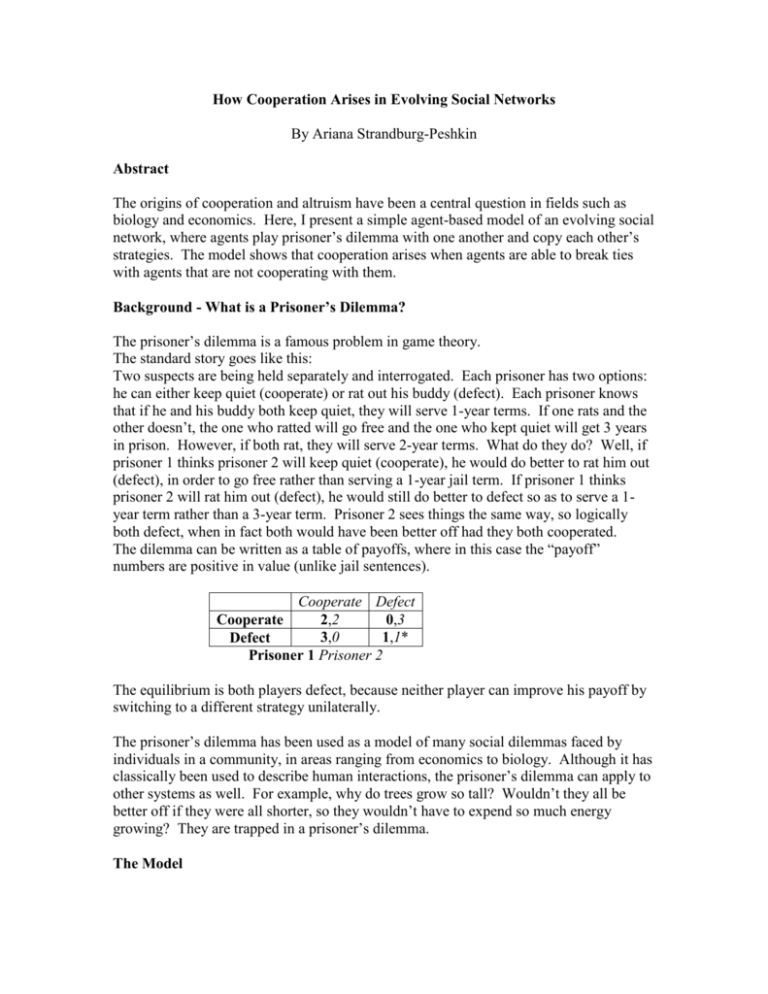

How Cooperation Arises in Evolving Social Networks

By Ariana Strandburg-Peshkin

Abstract

The origins of cooperation and altruism have been a central question in fields such as biology and economics. Here, I present a simple agent-based model of an evolving social network in which agents play prisoner’s dilemma with one another and copy each other’s strategies. The model shows that cooperation arises when agents are able to break ties with agents that are not cooperating with them.

Background - What Is a Prisoner’s Dilemma?

The prisoner’s dilemma is a famous problem in game theory. The standard story goes like this: two suspects are being held separately and interrogated. Each prisoner has two options: he can either keep quiet (cooperate) or rat out his buddy (defect). Each prisoner knows that if he and his buddy both keep quiet, each will serve a 1-year term. If one rats and the other doesn’t, the one who ratted goes free and the one who kept quiet gets 3 years in prison. If both rat, each serves a 2-year term.

What do they do? If prisoner 1 thinks prisoner 2 will keep quiet (cooperate), he does better to rat him out (defect), going free rather than serving a 1-year term. If prisoner 1 thinks prisoner 2 will rat him out (defect), he still does better to defect, serving a 2-year term rather than a 3-year term. Prisoner 2 reasons the same way, so logically both defect, even though both would have been better off had they both cooperated.

The dilemma can be written as a table of payoffs. Here the payoff numbers are rewards, so higher is better (unlike jail sentences). Each cell lists (payoff to prisoner 1, payoff to prisoner 2):

| | Prisoner 2: Cooperate | Prisoner 2: Defect |
|---|---|---|
| Prisoner 1: Cooperate | 2, 2 | 0, 3 |
| Prisoner 1: Defect | 3, 0 | 1, 1* |

The equilibrium, marked *, is for both players to defect: from that cell, neither player can improve his payoff by switching strategy unilaterally.
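As a quick check of the equilibrium claim, the minimal Python sketch below encodes the payoff table and tests every cell for whether either player could gain by switching alone. The names `PAYOFFS`, `is_nash` and `pure_nash_equilibria` are hypothetical illustrations, not part of the original model.

```python
from itertools import product

# Payoff table from the text: PAYOFFS[(move1, move2)] = (payoff to prisoner 1, payoff to prisoner 2).
# "C" = cooperate (keep quiet), "D" = defect (rat out). Higher is better.
PAYOFFS = {
    ("C", "C"): (2, 2),
    ("C", "D"): (0, 3),
    ("D", "C"): (3, 0),
    ("D", "D"): (1, 1),
}
MOVES = ("C", "D")


def is_nash(move1, move2):
    """True if neither prisoner can raise his own payoff by switching alone."""
    p1, p2 = PAYOFFS[(move1, move2)]
    best1 = max(PAYOFFS[(m, move2)][0] for m in MOVES)  # prisoner 1's best reply to move2
    best2 = max(PAYOFFS[(move1, m)][1] for m in MOVES)  # prisoner 2's best reply to move1
    return p1 == best1 and p2 == best2


def pure_nash_equilibria():
    """All cells of the table that are (pure-strategy) equilibria."""
    return [cell for cell in product(MOVES, MOVES) if is_nash(*cell)]


if __name__ == "__main__":
    print(pure_nash_equilibria())  # [('D', 'D')]
```

Only (Defect, Defect) passes the test, even though (Cooperate, Cooperate) would give both players more.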
The prisoner’s dilemma has been used to model many social dilemmas faced by individuals in a community, in areas ranging from economics to biology. Although it has classically been used to describe human interactions, it applies to other systems as well. For example, why do trees grow so tall? Wouldn’t they all be better off if they were all shorter, so that none of them had to expend so much energy growing? They are trapped in a prisoner’s dilemma: a tree that stays short while its neighbors grow tall is left in the shade, so each tree does better by growing taller whatever the others do.

The Model

The agent-based model described here is intended to reflect human interactions in a social network. Such a network could be an economic market, a community of individuals, or even a larger society; the model pertains to any network in which humans interact with one another and face social dilemmas. It does not represent “simpler” life forms, because it assumes a fair degree of intelligence on the part of the agents, as discussed in the conclusion.

The model consists of a network of agents that repeatedly play prisoner’s dilemma with one another.
- Each agent knows its strategy: a real number p between 0 and 1, which is the probability that the agent will cooperate in a given interaction.
- Each agent knows its links and their weights: each agent is connected to some number of other agents, and each link is weighted by how much payoff the agent has received from that individual in the past.
- Each agent knows the strategies and payoffs of all of its neighbors (the agents to which it is linked).

The network is initialized by creating L random (bidirectional) links among the N agents. Each iteration then proceeds as follows:
1. Agents play prisoner’s dilemma many times against each of their neighbors.
2. Agents tally up their payoffs, find the neighbor with the highest payoff, and move their own strategy towards that neighbor’s strategy.
3. With probability b, each agent breaks its tie with its lowest-weighted neighbor.
If an agent breaks a tie, it links to another agent chosen at random, so the total number of links in the network remains constant.

To speed up the code, a probability function is used to calculate the payoffs for each interaction, rather than having the agents play out each game explicitly. A small amount of local noise is also added each iteration to keep the agents from quickly converging to one value simply because they are copying one another: each iteration, one agent adds or subtracts (at random) 0.001 from its strategy.

Results

When no ties are broken (b = 0), the network generally converges to an average strategy of 0 (all agents defect). When any breaking of ties occurs (b > 0), the network converges to an average strategy of 1 (all agents cooperate). These results suggest that “punishment” by breaking ties with defectors is sufficient to encourage cooperative behavior in social networks.

Speed of Convergence

I investigated how the speed of convergence to an average strategy of 1 varies with the probability of breaking ties, the size of the network, and the density of links, measuring it as the number of iterations until the average strategy reaches 0.99. The results can be summarized as follows:
- As the probability of breaking ties increases, convergence becomes faster (see Fig. 1). In other words, harsher punishment yields faster compliance. Notably, even very small probabilities of breaking ties (very gentle punishment) eventually yield cooperative behavior.
- As the size of the network increases, convergence becomes slower (see Fig. 2): in larger networks, cooperation spreads more slowly.
- As the density of links increases, convergence becomes slower (see Fig. 3). The likely reason is that in more highly connected networks, links are less “valuable” to agents because they are so numerous. The punishment of breaking ties is therefore less effective, so the network is slower to converge to a cooperative strategy.
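The iteration described in The Model section can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the author’s code: the expected-payoff formula is one reading of the “probability function” mentioned above, link weights are taken to be the expected payoff received from each neighbor in the current round (the text says only “in the past”), and the imitation step size `rate` and all function names are hypothetical.

```python
import random

# Row player's payoffs from the Background table (higher is better).
REWARD, SUCKER, TEMPTATION, PUNISHMENT = 2, 0, 3, 1


def expected_payoff(p, q):
    """Assumed expected payoff per game for an agent cooperating with
    probability p against a neighbor cooperating with probability q."""
    return (REWARD * p * q + SUCKER * p * (1 - q)
            + TEMPTATION * (1 - p) * q + PUNISHMENT * (1 - p) * (1 - q))


def make_network(n_agents, n_links, rng):
    """N agents with random strategies p in [0, 1] and L distinct random
    bidirectional links, stored as a dict of neighbor sets."""
    if n_links > n_agents * (n_agents - 1) // 2:
        raise ValueError("too many links for this many agents")
    strategies = [rng.random() for _ in range(n_agents)]
    neighbors = {i: set() for i in range(n_agents)}
    links = 0
    while links < n_links:
        i, j = rng.sample(range(n_agents), 2)
        if j not in neighbors[i]:
            neighbors[i].add(j)
            neighbors[j].add(i)
            links += 1
    return strategies, neighbors


def step(strategies, neighbors, b, rng, rate=0.1, noise=0.001):
    """One iteration. Returns the new strategies; rewires `neighbors` in place.
    `rate` is a hypothetical imitation step size (not given in the source)."""
    n = len(strategies)
    # 1. Play: total expected payoff against all current neighbors.
    totals = [sum(expected_payoff(strategies[i], strategies[j]) for j in neighbors[i])
              for i in range(n)]
    # 2. Imitate: move part of the way toward the best-scoring neighbor's strategy.
    new = list(strategies)
    for i in range(n):
        if neighbors[i]:
            best = max(neighbors[i], key=lambda j: totals[j])
            new[i] += rate * (strategies[best] - strategies[i])
    # 3. Break ties: with probability b, drop the neighbor that paid this agent
    #    the least and link to a random non-neighbor, keeping the link count fixed.
    for i in range(n):
        if neighbors[i] and rng.random() < b:
            candidates = [k for k in range(n) if k != i and k not in neighbors[i]]
            if not candidates:
                continue
            worst = min(neighbors[i],
                        key=lambda j: expected_payoff(strategies[i], strategies[j]))
            neighbors[i].discard(worst)
            neighbors[worst].discard(i)
            partner = rng.choice(candidates)
            neighbors[i].add(partner)
            neighbors[partner].add(i)
    # 4. Noise: one random agent shifts its strategy by +/- noise.
    k = rng.randrange(n)
    new[k] += rng.choice((-noise, noise))
    # Keep every strategy a valid probability.
    return [min(1.0, max(0.0, x)) for x in new]


def run(n_agents=25, n_links=50, b=0.1, iterations=20000, seed=0):
    """Run the sketch and return the final average strategy."""
    rng = random.Random(seed)
    strategies, neighbors = make_network(n_agents, n_links, rng)
    for _ in range(iterations):
        strategies = step(strategies, neighbors, b, rng)
    return sum(strategies) / n_agents
```

Calling `run(b=0.0)` and `run(b=0.1)` compares a network without tie-breaking against one with it. Whether this sketch reproduces the trends in Figs. 1-3 depends on the assumed payoff weighting and step size, so its numbers should not be read as the paper’s results.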
Fig. 1 (a): Speed of Convergence vs. Probability of Breaking Ties (x-axis: probability of breaking ties; y-axis: iterations until average strategy = 0.99). Harsher punishment yields faster compliance.

Fig. 1 (b): Speed of Convergence vs. Probability of Breaking Ties on log-log axes, with fitted line $y = 5271.2\,x^{-0.7021}$. “And here is my contribution to the log-log-plot-draw-a-line-and-call-it-a-power-law approach…”

Fig. 2: Speed of Convergence vs. Size, network density = 2 links/agent (log-log axes; x-axis: number of agents; y-axis: iterations to average strategy = 0.99). Larger networks take longer to converge to cooperation.

Fig. 3: Convergence Speed vs. Number of Links, 25 agents (x-axis: number of links; y-axis: iterations to average strategy = 0.99). More densely connected networks take longer to converge to cooperation.

Conclusion

This simple model of social interaction showed that social punishment (agents refusing to interact with a defector) can be effective in promoting cooperation. This punishment tends to be most effective in small, loosely connected networks, and harsher punishment yields cooperation faster. The model helps explain why individuals in a society would conform to social norms, even against their individual interests.

As mentioned in the description of the model, it requires that agents be relatively intelligent. They must be able to keep track of who their “neighbors” are and how much they value them, and they must have information about each neighbor’s payoff as well as its strategy. For this reason, the model is ideally suited to human interactions but does not pertain to the interactions of “simpler” life forms.
In addition, while it requires complex social intelligence, the model fails to capture more complex strategies. Every agent’s strategy is a single cooperation probability, so the model neglects strategies that respond to a partner’s past behavior, such as tit-for-tat. Further research should explore the effects of adding such strategies. It would also be interesting to look at games other than prisoner’s dilemma, and at how the game played on the network affects the network structure and vice versa.