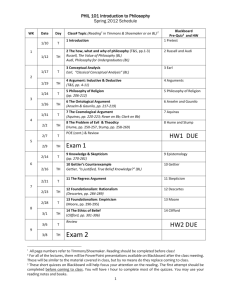



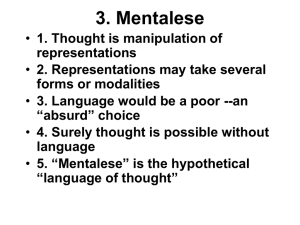

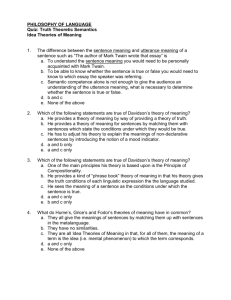

Mike Shehan

Naturalizing Mental Meaning: Week 4 Presentation (Dretske and Fodor)

Introduction

- The naturalization project
- Terms
- Causal / Internalist theory
  - Problems we’ve seen
  - Misrepresentation [articulate this]
- Dretske’s functional / diachronic theory
  - Mental content is linked, in some way, to the organism’s functioning.
    - Misrepresentation does not perform its function.
- Fodor
  - Truth-repression (i.e. Freudian defence mechanisms)

Dretske: Representational Systems

- Dretske’s context… He opens the chapter by telling us why we should care about mental representation.
  - It is required in order to explain behaviour (the title of the book in which this chapter is found).
  - He opens with the sentence, “Some behaviour is the expression of intelligent thought and purpose.”
  - Explanatory competition between folk-psychology (or everyday explanation) and neuroscience.
    - Folk-psych. explains behaviour
    - Neuro-physiology explains ‘output’
    - So no reconciliation is necessary.
  - What we’re really after is an understanding of whether or not an agent’s ‘reasons’ for action are causal… if so, how does the interaction work?
    - What makes a reason?
    - ‘Normally’, beliefs will be a constituent of a reason (unless you’re Hume).
- p. 52. What is a representational system?
  - ‘Terms’ page.
  - Functions can vary between systems and in the way the function is performed (i.e. how it indicates), e.g. calculator vs. mind.
  - We are interested in Type III systems and their ‘natural’ representations, but it is worth considering the other two types first.
  - Representation ≠ Meaning (necessarily)
    - What a system represents is not what its elements indicate.
    - Representation is a function of function (for Dretske)… representation has to do with what the elements of a system have the function of indicating.
- 3 types of representational system.
  - Type I (symbols)
    - Systems that represent what we stipulate they represent.
    - We assign function to the representational objects / determine what they indicate.
    - His example: coins and popcorn = basketball game.
    - Symbols have no intrinsic power of representation. They rely on something to assign them a function.
  - Type II (signs)
    - Things that serve as indications intrinsically / their ‘natural’ meaning is exploited.
    - Natural meaning cannot be otherwise.
      - e.g. Quail tracks cannot mean ‘quail was here’, unless ‘quail was here’ is true.
    - Not dependent on us to assign function. p. 54
    - His examples: tracks in the snow, instruments of measurement.
    - Instruments operate independently of the creator, i.e. according to physical laws.
    - Interestingly, he says that for these systems to indicate requires a knowledgeable observation (e.g. for a clock to indicate time, it must be observed by someone who knows how to read it).
    - Genuine lawful dependency between indicator and the indicated.
      - But not necessarily a natural law, e.g. doorbell = someone’s at the door.
      - Uses the word ‘genuine’ to show that there is more going on here than mere ‘persistent correlation’.
    - Representation and function. p. 59
      - Many things are correlated with indicators but usually only one is assigned the function of representing.
        - e.g. gas gauge
      - Important: we assign function.
  - Type II misrepresentations
    - It follows from the primacy Dretske assigns to function that misrepresentations are limited to the system’s function.
    - e.g. gas gauge.
      - Does not have superfluous function (indicating force on bolts) so it cannot misrepresent other correlated indices. p. 66-67
  - Type III (natural)
    - Recap:
      - Type I: we assign the system a function and assign what its elements are to indicate.
      - Type II: indication is intrinsic, we assign the system a function. p. 62
    - Intrinsic indicator functions (not assigned).
      - Foreshadowing why Dretske’s theory is ‘teleological’.
      - His externalism, p. 62
    - His examples: internal indicators… firings in the visual cortex indicate energy on the photoreceptors / cognitive maps indicate place for rats.
  - Interesting point: function of organs is independent of what we think. That is, we cannot assign a new purpose to our kidneys or heart. p. 63

Type III Representational Systems

- Intrinsic intentionality.
  - It is not a thing of our making in the way that intentionality is a reflection of our minds in Type I and Type II systems (determined by us when we assign function) [I’m confused here: how can it not be us that makes it, since we can (in part) determine what we focus on… what our thoughts or perceptions will be about… does he merely mean it’s not assigned by us, as is the case with Type I and Type II systems?]
- Misrepresentation
  - Why it’s important in our understanding…
    - It’s a fact that we do misrepresent things, at least in folk psychology.
    - Example from class: the dog is really a cat / the dog is really a rat (Mexican hairless)
    - Misrepresentation is a power that minds have. (p. 65 bottom)
    - So we must be able to get things wrong, in order to get things right.
  - Type I and Type II systems: power of misrepresentation comes from us.
  - Misrepresentation vs. misindication
    - There is no such thing as misindication.
    - Comes from the definition of the term. [not sure about this point]
    - We can think of indicating as ‘pointing to’: nothing ‘points to’ the ‘wrong’ thing, but we (the observer) can interpret it as pointing to something other than it is.
    - Not every indicator represents.
  - Type III
    - Because these systems are “sufficiently self-contained” [by this I take it a ‘source’ of intentionality]
    - It is possible that this system type has developed the function of indicating something that has never been the case. P. 67 [is this the idea of non-natural meaning?]
      - Example: Inverto the robot / the newts whose eyes were surgically rotated 180 degrees.
      - Human analogue… visual field experiments.
      - Draws on removal from natural environment (to explain the frog case) P. 69
- Misrepresentation depends on two things: (1) the condition of the world being represented (2) the way it is represented
- Problem: the indeterminacy of function
  - What is the function of our representations?
  - Is he saying that behavioural success is a way of fixing function? P. 70. also p. 63 (bottom)
  - If so, does this mean that naturalizing function will lead us to a sort of behaviourism?
    - We have internal representations
    - But we can only know if they are correct representations if our behaviours are successful
    - S → R… Does Fodor say a similar thing to get out of the Frege problem?

Dretske: Content

- 2 questions about representational content:
  - (1) what is it a representation of? (his externalism)
  - (2) how is the representation represented (his teleology?)
- Representational content contains
  - Sense and reference (i.e. meaning)
  - Same as topic and comment
- Goodman’s pictures of black horses vs. black-horse pictures.
  - Black-horse pictures represent the black horses as black horses (topic is identifiable)
- He admits non-pictorial content.
- Our ordinary descriptions draw on the distinction between topic and comment.
  - i.e. we assume people see things (e.g. horses) as we see them, if we are in similar conditions.
- de re representations
  - We can get information from something without seeing what it is.
  - We have topic without comment.
  - P. 73
  - e.g. picture of a twin
- de dicto representations
  - Content whose reference is determined by how it is represented.
- How things represent:
  - All Dretske’s systems are property specific.
  - e.g. a system can represent property F without representing G, even though every F is G
    - can represent shape without representing colour
  - p. 75 bottom
- Question from the last paragraph… how can something be about some part of the world, even those parts of the world with which the animal has never been in direct perceptual contact… does this mean, for instance, seeing a picture of Antarctica?
Discussion questions

- Would there be a way to determine function if we didn’t see behaviour? The role of folk psychology?
- Is it a dodge to say that misrepresentation cannot occur without assigning function?
- How would Dretske handle Frege cases? Perhaps he could say that we do not have the function of discerning evening star from morning star so it is not a misrepresentation?

Fodor: Life without Narrow Content

- Twin Earth:
  - Hilary Putnam’s experiment.
  - Twins… is there misrepresentation if the earthling labels XYZ water?
  - Superficial problem: We are made up of water so it is impossible that we could have an exact replica on a planet without water.
- Frege Cases:
  - The evening star / morning star.
    - Both point to the same object (the planet Venus).
    - The problem: Externalist theories say that we learn nothing new when we are told that evening star = morning star = Venus. If the identity relation changes the meaning of any of the terms, then externalism has a problem, because meaning is simply reference (recall Dretske’s indication).
  - Oedipus.
    - Didn’t want to marry his mother but married her anyway because he didn’t know about the identity condition fiancée = mother.
- Why are these cases important to Fodor?
  - He is an externalist.
  - But he believes in transformations within the subject (computational implementation) [I think this is what he means?????]
  - So we have the Eponymous Question: How can we reconcile laws that are characteristically intentional (causally related to the external world) with the idea that they are implemented computationally (i.e. transformations occur within us)?
- Fodor’s thesis: Narrow content is superfluous (p. 28)

Lessons from Twin Earth

- P. 28 What does “supervenience of broadly intentional upon the computational” mean? What is nomological supervenience?
- Fodor dismisses XYZ… this is because we can’t have a twin whose chemical structure is different.
- But he doesn’t claim twin cases don’t happen.
  - But these cases should be treated as accidents [what does he mean by accident here… does he mean aberration?]
  - His argument:
    - There are two natural kinds that fail to be distinguished by his concept.
    - But he can still distinguish these natural kinds from other things.
    - They are indistinguishable by more than just their causal properties [I’m not sure what he’s saying here, in number 3]
    - They can’t be indistinguishable in their effects.
    - So the failure to distinguish is accidental.

Experts

- Using an expert to distinguish between elms and beeches.
- For me, who can’t distinguish between the two trees (p. 33)
- Deference = yielding to another’s opinion.
  - So are deferential concepts those that are held in limbo until we get more information?
- P. 35 Fodor says semantics, for the informational view, is mainly about counterfactuals: p. 37.
- So what is an ELM? P. 37. Fodor says the question doesn’t matter because the ELM concept is connected in a certain way to instantiated elmhood [how is it connected??? Is this Fodor’s big problem] [is Fodor saying that syntax is computational, but content isn’t].

Frege Cases

- In the initial class we learned that Twin Cases posed a big problem for conceptual-role theories; we didn’t see them in the context of causal theories.
- We did see Frege Cases. Fodor spends a good deal of space in his attempt to deal with them.
- Fodor admits there are many Frege cases.
- His issue: p. 39 Are intentional laws (i.e. causal relation???) disconfirmed by Frege cases?
- Begins by saying his laws allow exceptions (as long as they aren’t systematic).
- Narrow content allows Frege cases
  - E.g. S believes Fa and a = b doesn’t mean S believes Fb
  - So he can believe that the evening star is Venus without believing the morning star is Venus.
- Fodor’s argument: predictive adequacy p. 40
  - No serious psychology can treat routine success as accidental
  - Smith can be relied upon to know that a = b if the fact that a = b is germane to the success of his behaviour.
- Principle of Informational Equilibrium (PIE): p. 42
  - Belief / desire psychology must endorse the principle because
    - (1) You cannot choose A over B unless you believe you would prefer A to B if all the facts were known.
    - (2) The success of an action is accidental unless the beliefs that the agents act on are true.
  - Fodor says that psychologies committed to PIE must treat Frege cases as aberrations.
- Oedipus… highlights the relation between content and action.
  - Fodor tells us to keep in mind that something went wrong.
  - This case is an exception to the law: people try to avoid marrying their mothers.
  - Do we need to naturalize exceptions in the project of naturalizing mental meaning, or is it enough to show that the case is not systematic?
  - P. 46 Is broad content the only kind that psychological explanations require???
- Oedipus acted out of his beliefs and desires but it doesn’t follow that he acted out of the contents of his beliefs and desires. P. 47

3 Place Relations

- Normally, we consider two-place relations: creature and proposition.
- Fodor describes the three-place relation: creature, proposition, mode of presentation.
  - Oedipus, then p. 47
  - Modes of presentation = sentences of Mentalese.
  - So the identity relation between the sentences “O wants to marry M” and “O wants to marry J” is broken, p. 48.
- Fodor admits there are problems with this effort… what are they? Do they give us something else to naturalize… i.e. the sentences of Mentalese?
- What is the mechanism that keeps Frege cases from proliferating??? Perceptual discrimination? Behaviour? Does this need to be explained?
  - Talks about the purpose of perception and cognition… is he approaching Dretske?
- The OBJECTION:
  - P. 49
  - [is Mentalese narrow content?]
  - Does this mean that Mentalese explains nothing, and only the computations are needed to explain? Especially if narrow content supervenes on computational role.
  - Fodor’s reply p. 50
    - Psychological laws apply to a mental state in virtue of its broad content.

Naturalizing Mental Meaning: Terms

| Term | Definition |
| --- | --- |
| Mental content | Content (i.e. meaning) of a mental state, e.g. thoughts, beliefs, desires, fears, intentions, or wishes. |
| Narrow mental content | Mental content that is not based on (or dependent upon) the individual’s external environment, i.e. content that is intrinsic to the individual. Because it depends only on the individual’s properties, an exact duplicate of the individual must have exactly the same content (re: twin earth problem). |
| Broad mental content | Content that depends on both features of the environment and features of the individual. |
| Eponymous question (EQ) | How can we reconcile the intentionality of psychological laws with their computational implementation? |
| Intentionality | A faculty of minds that allows them to be about or represent things (e.g. properties or propositions). |
| Computation | Transformations between representational states. |
| Supervenience | To be dependent on a set of facts or properties in such a way that change can occur only after change has occurred in those facts or properties (www.dictionary.com). |
| Natural kinds | Objects that are available for naming. A single set exists for each natural kind term, e.g. water = H2O. |
| Informational Semantics | Ascribe content to a state depending upon the causal relations obtaining between the state and the object it represents. |
| Deferential concepts | Concepts that are comprised of the concepts of other speakers. Fodor uses the example of employing a botanist for our concept of ELM. |
| Principle of Informational Equilibrium (PIE) | We would not, normally, act differently if we were privy to more relevant information. Fodor uses the principle in his argument dealing with Frege cases. |
| Modes of presentation | Fodor’s third place in his 3-place relation. These are “sentences (of Mentalese)” (p. 47). Individuated by propositional content and syntax. |
| Meaning | Dretske distinguishes between ‘natural’ and ‘non-natural’. Natural meaning is the same as ‘indicate’. Non-natural meanings have no dependency between the representational object and that which it represents. |
| Representational System (RS) | For Dretske, “…any system whose function it is to indicate how things stand with respect to some other object, condition, or magnitude” (1988, p. 52). He distinguishes 3 different systems: Type I (symbols), Type II (signs) and Type III (natural). Mental representations fall into the third category. |