Detailed Guidelines for Assessment Processes


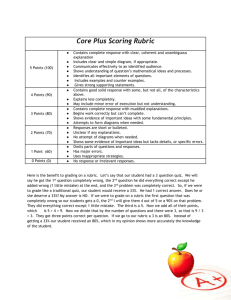

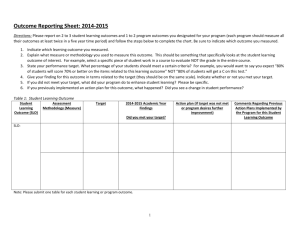

To: Program directors (except PsyDoc)
From: Matthew C. Bronson, Director of Academic Assessment
Re: Guidelines for MA Capstone Assessment and Reports from CIIS Academic Programs
Date: September 17, 2009

I. Context and Purpose
II. Designing and Conducting the Assessment
III. Writing the Report

I. Context and Purpose

As a key component of our ongoing assessment of academic achievement, CIIS has committed to putting in place a way for all programs to evaluate MA Capstone projects and “aggregate” the results in an ongoing fashion. The goal is to systematically create a profile of program-level achievement on key learning outcomes that spans the work of all graduating students. Comparing these profiles should allow programs to identify strengths and opportunities for improvement, as well as evaluate the impact of changes in curriculum over time.

In order to meet this requirement, capstone assessments must include:

1) a means for assessing individual students’ work in terms of the overall learning outcomes (e.g., a rubric),
2) the ratings of more than one reader for each artifact,
3) a means for aggregating the individual assessments into a collective picture of achievement across the cohort or class, and
4) a process of analysis and reflection resulting in suggested changes to educational practices/curriculum and the assessment process itself.

(Please see the WASC Capstone essay for background on what we have done so far.)

For programs that have already done a pilot, this will be a chance to refine and deepen the existing processes and begin to build an archive of reports against which future results can be cross-referenced. This process should occur at least once a year henceforth in order to facilitate institutional learning and to develop the mature culture of evidence expected by WASC and the higher education community at large.

Programs are encouraged to design a process that is both useful and workable, in collaboration with the Director of Academic Assessment, in Fall and Spring of 2009. Assessment of Fall and Spring capstones will be conducted over the summer of 2010, with a final report due by September 1, 2010.

II. Designing and Conducting the Assessment

1. The first step in this process is to identify what student work will be assessed, and then when and how it will be assessed. For all programs except ACS, there is a capstone seminar and an associated portfolio, generally encompassing integrative papers, presentations and/or performances. The thesis option is still available in some programs but is rarely chosen.

2. The next step will be to create a rubric or other instrument by which the student work can be evaluated as evidence of the extent to which desired outcomes have been achieved. Several CIIS programs have such rubrics already in place, and this will be a chance to review and refine them. One way to construct the rubric is to identify the key learning outcomes that will be addressed by the student work being reviewed.

3. Then, each artifact or set of artifacts can be evaluated, using the rubric, in terms of what it shows about the students’ learning in the program. Here is a sample rubric:

   Student: ___________   Date of evaluation: ______   Rater: ____________

   Rating scale: 1 = No evidence, 2 = Little evidence, 3 = Adequate evidence, 4 = Good evidence, 5 = Excellent evidence

   Student Learning Outcome 1: ___
   Comments:

   Student Learning Outcome 2: ___
   Comments:

   Student Learning Outcome 3: ___
   Comments:

4. After a sample rubric is created, several raters should evaluate the same piece of work and then discuss and amend their responses to enhance inter-rater reliability. They can also identify several “anchor papers” for each level of achievement as reference points.

5. Once a process is agreed upon, all the readings and evaluations of performances and presentations should occur.
If a rubric is not being used, some other means for aggregating the results will need to be developed at this point.

6. Once all the results are in, aggregation can be accomplished by adding up or consolidating ratings across all students and outcomes (see the chart below):

Sample Capstone Assessment Matrix

|           | SLO1 | SLO2 | SLO3 | SLO4 |
|-----------|------|------|------|------|
| Student 1 |      |      |      |      |
| Student 2 |      |      |      |      |
| Student 3 |      |      |      |      |
| Student 4 |      |      |      |      |
| Student 5 |      |      |      |      |
| ……        |      |      |      |      |
| Student N |      |      |      |      |
| Average:  | ___  | ___  | ___  | ___  |
| SD:       | ___  | ___  | ___  | ___  |

The “average” score represents the aggregated rating for the entire group of students on each outcome. Standard deviations may be useful on larger samples to determine the extent to which outliers may be skewing the results. Care should be taken to compile comments as well as scores, as these may contain useful qualitative information that does not lend itself to numerical consolidation.

Once the first cycle of review is complete, there should be a discussion among all the raters as to what was found, its significance, and next steps in rethinking the curriculum and the assessment process. It will be helpful to include advanced students and/or outside reviewers in this process to increase the validity of the ratings and the integrity of the data generated. The discussion should move toward concrete steps that will be taken to 1) improve the curriculum and education you are providing and 2) improve the review process (by adding new elements or dimensions, revising the rubric, focusing on different questions and sources, etc.).

A final report is due on September 1, 2010. Please do your best to organize your timelines now so that this will be achievable. A suggested format for the final report follows.

III. Writing the Report

Introduction

In a few paragraphs, describe the evolution of the capstone in your program and the current state of affairs. What processes and discussions have shaped the culminating experience(s)? What process was used this time to assess the capstone projects?

What You Have Been Learning

Share a few highlights of the key findings of your pilot (and subsequent iterations where available). What steps have you taken that have been informed by this process or other parallel reflection and discussion on student learning in your program? How would you like to adapt or refine the process moving forward? What support or special training do you need to achieve your goals as a program in assuring the quality of the education you are delivering?

Sample Reports

Please include the program and authors’ names on the top page. Also include here examples of rubrics or other instruments used in the review process and a description of how the ratings were aggregated. You may also include relevant internal memos or notes.

Thank you for your time and effort on this important initiative. I will be happy to support you in any way possible to get the most out of the exercise for your students and your program.
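
Illustration: aggregating ratings

For programs that keep their ratings in a spreadsheet or script, the aggregation described in step 6 of Section II can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not a required method. The student names, the scores, and the function names (`student_scores`, `aggregate`, `exact_agreement`) are all hypothetical. The sketch assumes two raters per artifact on the 1 to 5 scale, averages the raters' scores for each student before computing the group average, and uses the sample standard deviation. The agreement figure is a simple share of identical scores, offered as one rough indicator to inform the raters' discussion rather than a formal reliability statistic.

```python
from statistics import mean, stdev

# Hypothetical ratings on the 1-5 scale: one entry per student, and for each
# learning outcome (SLO) the scores given by two raters.
ratings = {
    "Student 1": {"SLO1": [4, 5], "SLO2": [3, 3], "SLO3": [2, 3]},
    "Student 2": {"SLO1": [3, 4], "SLO2": [4, 4], "SLO3": [3, 3]},
    "Student 3": {"SLO1": [5, 5], "SLO2": [2, 3], "SLO3": [4, 4]},
}


def student_scores(ratings):
    """Average the raters' scores so each student has one score per outcome."""
    return {
        student: {slo: mean(scores) for slo, scores in by_slo.items()}
        for student, by_slo in ratings.items()
    }


def aggregate(ratings):
    """Return {slo: (average, sample SD)} across all students."""
    per_student = student_scores(ratings)
    slos = sorted({slo for by_slo in per_student.values() for slo in by_slo})
    summary = {}
    for slo in slos:
        column = [by_slo[slo] for by_slo in per_student.values() if slo in by_slo]
        # A standard deviation needs at least two values.
        sd = stdev(column) if len(column) > 1 else 0.0
        summary[slo] = (mean(column), sd)
    return summary


def exact_agreement(ratings):
    """Share of (student, outcome) pairs on which all raters gave the same score."""
    pairs = [scores for by_slo in ratings.values() for scores in by_slo.values()]
    agreed = sum(1 for scores in pairs if len(set(scores)) == 1)
    return agreed / len(pairs)


if __name__ == "__main__":
    for slo, (avg, sd) in aggregate(ratings).items():
        print(f"{slo}: average={avg:.2f}  SD={sd:.2f}")
    print(f"Exact rater agreement: {exact_agreement(ratings):.0%}")
```

The per-outcome averages and SDs correspond to the bottom two rows of the Sample Capstone Assessment Matrix. Raters' comments are not captured by this kind of calculation and still need to be compiled separately.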