Assignment4

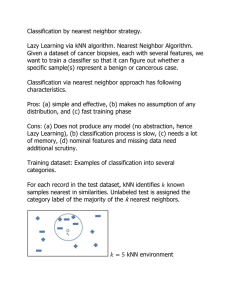

Introduction to Artificial Intelligence (236501)
Home assignment #4 – Machine learning

Due date: Monday, January 31, 2005, at 12:00 noon.
Submit your work in pairs. Only typed (i.e., not handwritten) submissions will be accepted.
Warning: Absolutely no extensions will be granted! Late submissions will not be accepted.

This assignment deals with the utility of feature selection for machine learning algorithms. You will work with two algorithms:
- ID3, which learns decision trees and inherently performs feature selection, by computing information gain at each node of the tree being built.
- K Nearest Neighbors (KNN), which classifies each instance based on the labels of its k nearest neighbors, and does not perform feature selection internally. KNN usually suffers in the presence of too many features, hence explicit feature selection is often performed prior to invoking KNN.

Your task is to compare the performance of ID3 to that of KNN, while the latter is used with and without feature selection, and to draw conclusions on their relative merits.

Algorithms to compare
1) ID3 – regular ID3 with internal feature selection
2) KNN.all – KNN that uses all the features available
3) KNN.FS – KNN with a priori feature selection

Datasets
You will use the following two datasets (obtained from the UCI repository of machine learning datasets):

1) Spambase Database
The "spam" concept is diverse: advertisements for products/web sites, make money fast schemes, chain letters, pornography... These are useful when constructing a personalized spam filter.
Number of Instances: 4601 (1813 Spam = 39.4%)
Number of Attributes: 58 (57 continuous, 1 nominal class label)

2) Multiple Features Database
This dataset consists of features of handwritten numerals (`0'--`9') extracted from a collection of Dutch utility maps. 200 patterns per class (for a total of 2,000 patterns) have been digitized in binary images.
Digits are represented in terms of Fourier coefficients, profile correlations, Karhunen-Loeve coefficients, pixel averages, Zernike moments and morphological features.
Number of Instances: 2000 (200 per class)
Number of Attributes: 649
Number of Classes: 10 (= digits)
Note: you don’t need to understand the physical meaning of the coefficients in order to use this dataset.

The Spam dataset is in the so-called C4.5 data format, which is very common in machine learning. Here is a brief description of this format (adopted from http://www.cs.washington.edu/dm/vfml/appendixes/c45.htm). You can learn more about the C4.5 data format at http://sdmc.lit.org.sg/GEDatasets/Format/UsedFormat.html and http://www2.cs.uregina.ca/~dbd/cs831/notes/ml/dtrees/c4.5/c4.5.html, or you may want to go to the source: J. Ross Quinlan, “C4.5: Programs for Machine Learning”, Morgan Kaufmann, 1993.

The dataset has two files – spambase.data and spambase.names. The .names file describes the dataset, while the .data file contains the examples which make up the dataset. The files contain series of identifiers and numbers with some surrounding syntax. A | (vertical bar) means that the remainder of the line should be ignored as a comment. Each identifier consists of a string of characters that does not include comma, question mark or colon (unless escaped by a backslash). Periods may be embedded provided they are not followed by a space. Embedded whitespace is also permitted but multiple whitespace is replaced by a single space.

The .names file contains a series of entries that describe the classes, attributes and values of the dataset. Each entry is terminated with a period, but the period can be omitted if it would have been the last thing on a line. The first entry in the file lists the names of the classes, separated by commas (and terminated by a period).
Each successive line then defines an attribute, in the order in which they will appear in the .data file, with the following format: attribute-name : attribute-type . The attribute-name is an identifier as above, followed by a colon, then the attribute-type (always “continuous” for this dataset).

The .data file contains labeled examples in the following format: one example per line, attribute values separated by commas, class label is at the last position. In your experiments, you’ll need to split the .data file into two, which will contain the training and the testing data. Conventionally, these files have extensions .train and .test, respectively.

The format of the second dataset (Multiple Features) is even simpler – the data is distributed as 6 files (named ‘mfeat-???’), each containing 2000 lines with one feature vector per line. Each file corresponds to one set of coefficients (Fourier coefficients, profile correlations, Karhunen-Loeve coefficients, pixel averages, Zernike moments, and morphological features). In each file the 2000 patterns are stored in ASCII on 2000 lines. The first 200 patterns are of class `0', followed by sets of 200 patterns for each of the classes `1' - `9'. Corresponding patterns in different feature sets (files) correspond to the same original character.

Note: the two datasets are available for download at the course Web site. Each dataset contains a file with extension “.DOCUMENTATION” that gives further information about the dataset and its format.

Your tasks
1) Get acquainted with the datasets and their format.
2) Study the code of ID3 (we also review it in the recitation).
3) Implement KNN. Note that you need to develop a metric that is appropriate for comparing feature vectors of instances in each dataset. Theoretically, you may use the same metric for each dataset, but you’d better have very good (and properly documented) reasons for doing so.
4) Write a function that computes Information Gain, in order to use it as an external module prior to the invocation of KNN. To achieve this aim, you may use the appropriate functionality of the Lisp code for ID3.
5) For each dataset D, perform the following experiments using the cross-validation scheme with N=10 folds:
   - Classify D using ID3
     * Count the number of attributes that were used for splitting instances anywhere in the tree; denote this number as M.
   - For each k = 1, 5, 10, 25, 50
     * Classify D using KNN.all
     * For each feature selection level l = 0.01, 0.05, 0.1, 0.2, …, 0.9, 1.0
       - classify D using KNN.FS with the corresponding fraction of best-scoring features (judged by their IG), i.e., 1%, 5%, etc.
       - classify D using KNN.FS with M best-scoring features (where M was determined by using ID3 above)
       - classify D using KNN.FS with exactly those M features that were used by ID3 (see above)
   For each experiment, measure the accuracy obtained in each cross-validation fold, then average the accuracy over the 10 folds. This is the accuracy value that represents the outcome of each experiment. Summarize your experimental results in tables and graphs.
6) Compare the performance of ID3, KNN.all and KNN.FS based on the accuracy they yielded in the above experiments. Draw conclusions about the utility of feature selection for different classification algorithms and with different parameter settings (values of k, levels of feature selection etc.).
7) Bonus (9¾ points): repeat the above experiments to build learning curves, i.e., perform each experiment for increasingly large fractions of the training set, and plot the accuracy as a function of the cardinality of the training set. Draw conclusions about the importance of feature selection at the various points of the learning curve.

Plotting the learning curve: suppose you’re using cross-validation with N=10 folds.
Normally, for each of the 10 folds you use 9/10 of the data for training (TRAINi, i=1..10), and 1/10 for testing (TESTi, i=1..10). To build a learning curve, you also consider subsets of the training data. That is, for each fold you repeat the experiment by using 10%, 20%, …, 100% of TRAINi for training, while still using the entire test set (TESTi) for testing.

Notes
1. The analysis of experiments constitutes the main point of this assignment. Therefore, you should discuss your experimental results in detail, explaining the nature of your results and observations.
2. Submit all your code, which should be accompanied by both internal and external documentation. The maturity level of your documentation should be appropriate for an advanced course in Computer Science. Naturally, the entire assignment should be implemented in the Lisp programming language.
3. Your entire submission should be in hard copy (no electronic submission is necessary for this assignment).

Submit your work in pairs. In order to find a partner, you may take advantage of the “Find a partner” mechanism available on the course Web page at http://webcourse.technion.ac.il/236501 (see buttons on the left).

Good luck!

Questions? Evgeniy Gabrilovich (gabr@cs.technion.ac.il)
Flames? /dev/null
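
Illustration: reading a .data file and forming the 10 folds. The sketch below shows one possible way to parse lines in the C4.5 .data format described above (comma-separated attribute values, class label last, `|` starting a comment) and to split the examples into N=10 cross-validation folds. It is written in Python purely for illustration; the assignment itself requires Lisp. The function names `parse_data_lines` and `k_fold_splits` are hypothetical, and the sketch ignores backslash escapes and optional terminating periods, which is a simplifying assumption.

```python
import random


def parse_data_lines(lines):
    """Parse C4.5-style .data lines into (feature_vector, label) pairs.

    Assumption: all attributes are continuous and no escapes are used.
    """
    examples = []
    for raw in lines:
        line = raw.split("|", 1)[0].strip()  # drop everything after a '|' comment
        if not line:
            continue  # skip blank or comment-only lines
        fields = [field.strip() for field in line.split(",")]
        *values, label = fields  # the class label is the last field
        examples.append(([float(v) for v in values], label))
    return examples


def k_fold_splits(examples, n_folds=10, seed=0):
    """Yield (train, test) pairs; each example is tested exactly once."""
    shuffled = examples[:]
    random.Random(seed).shuffle(shuffled)  # fixed seed keeps runs repeatable
    folds = [shuffled[i::n_folds] for i in range(n_folds)]
    for i in range(n_folds):
        test = folds[i]
        train = [ex for j, fold in enumerate(folds) if j != i for ex in fold]
        yield train, test
```

Shuffling before splitting matters in particular for the Multiple Features data, where the patterns are stored sorted by class.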
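
Illustration: information gain as an external feature scorer. Task 4 asks for an Information Gain function to be used before KNN. The standard definition is IG(S, A) = H(S) - sum over v of (|S_v| / |S|) * H(S_v), where H is the entropy of the class labels. Since all attributes here are continuous, the sketch below assumes a binary split "value <= t" and scores each attribute by the best gain over all thresholds between distinct sorted values; the course's Lisp ID3 code may treat continuous attributes differently. It is in Python for illustration only (the assignment requires Lisp), and the names `entropy_from_counts`, `info_gain_continuous` and `select_top_fraction` are hypothetical.

```python
import math
from collections import Counter


def entropy_from_counts(counts, total):
    """Entropy (in bits) of a label distribution given as a Counter."""
    return -sum((c / total) * math.log2(c / total)
                for c in counts.values() if c > 0)


def info_gain_continuous(values, labels):
    """Best information gain over binary splits of one continuous attribute."""
    pairs = sorted(zip(values, labels), key=lambda p: p[0])
    n = len(pairs)
    left, right = Counter(), Counter(labels)
    base = entropy_from_counts(right, n)
    best = 0.0
    for i in range(1, n):
        label = pairs[i - 1][1]
        left[label] += 1   # move one example from the right side to the left
        right[label] -= 1
        if pairs[i - 1][0] == pairs[i][0]:
            continue  # no threshold can separate equal values
        gain = (base
                - (i / n) * entropy_from_counts(left, i)
                - ((n - i) / n) * entropy_from_counts(right, n - i))
        best = max(best, gain)
    return best


def select_top_fraction(examples, fraction):
    """Indices of the best-scoring `fraction` of features (at least one)."""
    labels = [label for _, label in examples]
    n_features = len(examples[0][0])
    gains = [info_gain_continuous([x[j] for x, _ in examples], labels)
             for j in range(n_features)]
    keep = max(1, round(fraction * n_features))  # rounding rule is an assumption
    ranked = sorted(range(n_features), key=lambda j: gains[j], reverse=True)
    return sorted(ranked[:keep])
```

Computing the ranking on the training part of each fold only keeps the test labels from influencing which features are selected.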
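
Illustration: KNN restricted to a subset of features. The following is a minimal sketch of the KNN classifier from task 3, with an optional list of feature indices so that the same code serves both KNN.all and KNN.FS. It assumes plain Euclidean distance, which is only one candidate metric; the task statement asks you to choose and justify a metric per dataset, and attributes on very different scales may call for normalization first. Python is used for illustration only (the assignment requires Lisp), and the names `euclidean`, `knn_classify` and `accuracy` are hypothetical.

```python
import math
from collections import Counter


def euclidean(a, b, features):
    """Euclidean distance between vectors a and b over the given indices."""
    return math.sqrt(sum((a[j] - b[j]) ** 2 for j in features))


def knn_classify(train, query, k, features=None):
    """Majority label among the k training examples nearest to `query`.

    `train` is a list of (feature_vector, label) pairs. With features=None
    all attributes are used (KNN.all); otherwise only the listed indices
    contribute to the distance (KNN.FS). Vote ties are not handled specially.
    """
    if features is None:
        features = range(len(query))
    nearest = sorted(train, key=lambda ex: euclidean(ex[0], query, features))[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]


def accuracy(train, test, k, features=None):
    """Fraction of test examples whose predicted label matches the true one."""
    correct = sum(knn_classify(train, x, k, features) == y for x, y in test)
    return correct / len(test)
```

Averaging `accuracy` over the 10 (train, test) folds gives the single number reported per experiment.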
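
Illustration: training subsets for the learning curve. The bonus task uses 10%, 20%, …, 100% of TRAINi while keeping TESTi fixed. A minimal sketch of generating those subsets is given below, in Python for illustration only (the assignment requires Lisp). The name `learning_curve_subsets` is hypothetical, and taking nested prefixes of an already shuffled training fold is an assumption; sampling each subset independently would be an equally valid reading.

```python
def learning_curve_subsets(train, steps=10):
    """Yield (size, subset) for 1/steps, 2/steps, ..., all of the training fold.

    Assumes `train` is already in random order, so each prefix is a random
    sample and every smaller subset is contained in the larger ones.
    """
    n = len(train)
    for step in range(1, steps + 1):
        size = max(1, (n * step) // steps)  # never return an empty subset
        yield size, train[:size]
```

Each yielded subset is then used for training in place of the full TRAINi, and the resulting accuracy on TESTi is plotted against `size`.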