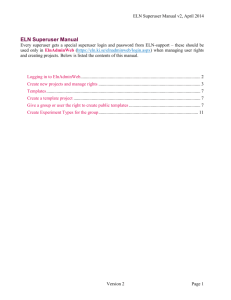

1 - Berkeley Law
