Clusters and outlier


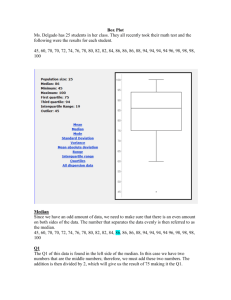

Phase (3) Clusters and Outliers

Index:
1) Introduction
2) Clusters
2.1) White Wine dataset
2.2) Breast Tissue dataset
3) Outlier
3.1) Breast Tissue dataset
3.2) White Wine dataset
4) Conclusion

1) Introduction:
In this phase we show how to apply clustering and outlier detection to two datasets, White Wine and Breast Tissue. Both techniques can be used on supervised (labeled) or unsupervised (unlabeled) data. The target class plays no part in computing the distance to the nearest center, so it is ignored automatically.

Clustering tells us how many groups the data forms and which records share similar characteristics, so these clusters can support important decisions. Outlier detection is used to find noisy data or data outside an expected range, and then to decide whether each outlier is new knowledge or an error.

Note: you can open wineclusers and brestclusterkmodule from the clusters folder, and DISTANCEWINE, LOFBREST, wineLOF and distancebrest from the outlier folder.

2) Clusters:
2.1) White Wine dataset:
1) K-means was used because all attributes are numeric.
Analysis: We first set k to four to obtain four clusters, but the plot shows that the clusters lie very close to each other. You can verify this in the plot view (Figure 2.1.3) and the centroid table (Figure 2.1.4). For example, for the alcohol attribute the centers of cluster_3, cluster_2 and cluster_1 are all approximately 10.8. Therefore, we reduced k to 2, which gives centers that are well separated from each other, so two clusters is a good choice.
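The K-means procedure used above can be sketched in plain Python. This is a minimal sketch and not the tool's implementation. It assumes numeric tuples, Euclidean distance and random initial centers. The function name `kmeans` and the toy values are hypothetical and do not come from the wine data. You can run it with different values of k and compare the returned centroids, which is the same check made with the centroid table in Figure 2.1.4.

```python
import math
import random


def kmeans(points, k, iters=100, seed=0):
    """Lloyd's k-means on equal-length numeric tuples (Euclidean distance).

    Returns (centroids, labels). Initial centers are k random data points,
    an assumption made for brevity; real tools often use smarter seeding.
    """
    rng = random.Random(seed)
    centroids = [list(p) for p in rng.sample(points, k)]
    labels = None
    for _ in range(iters):
        # Assignment step: each point joins its nearest centroid.
        new_labels = [
            min(range(k), key=lambda c: math.dist(p, centroids[c]))
            for p in points
        ]
        if new_labels == labels:
            break  # assignments stopped changing, so the run has converged
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for c in range(k):
            members = [p for p, lab in zip(points, labels) if lab == c]
            if members:  # keep the old centroid if a cluster becomes empty
                centroids[c] = [sum(col) / len(members) for col in zip(*members)]
    return centroids, labels


# Hypothetical (alcohol, volatile acidity) pairs, NOT values from the report.
toy = [(10.0, 0.30), (10.2, 0.28), (9.9, 0.32),
       (12.4, 0.20), (12.6, 0.22), (12.5, 0.18)]
centroids, labels = kmeans(toy, k=2)
for c in sorted(centroids):
    print([round(v, 3) for v in c])
```

If several centroids returned for a given k are almost equal on every attribute, as the alcohol centers near 10.8 were for k = 4, that k is probably too large.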
Figure 2.1.1: The K-means process on the wine dataset
Figure 2.1.2: The text view result of K-means with four clusters
Figure 2.1.3: The plot view of K-means with four clusters, after using SVD to project the dataset from many dimensions to two
Figure 2.1.4: The centroid table for four clusters in the wine dataset
Figure 2.1.5: The text view result of K-means with two clusters
Figure 2.1.6: The plot view of K-means with two clusters, after using SVD to project the dataset from many dimensions to two
Figure 2.1.7: The centroid table for two clusters in the wine dataset

2.2) Breast Tissue dataset:
1) K-medoids was used because the dataset has a nominal attribute.
Analysis: Whatever value we assigned to k, one cluster always contained a single record, and that record has a noisy value in the AREA attribute (see Phase 1). So we decided to remove the AREA attribute from this dataset. After that we tried to choose the best k. We first set k to six and then to two. With k = 6, many centers were close enough to be merged into one cluster (see Figure 2.2.3 and Figure 2.2.4). In Figure 2.2.4, cluster_1, cluster_4 and cluster_5 could form one cluster, and cluster_0, cluster_2 and cluster_3 could form another. Therefore, we chose k = 2 (see Figure 2.2.6 and Figure 2.2.7).
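The K-medoids choice, and the symptom of a cluster that holds a single record, can be sketched as follows. This is a minimal sketch under stated assumptions. The mixed distance (absolute difference for numbers, 0/1 mismatch for nominal values), the farthest-first initialization and all function names are hypothetical choices, not the tool's algorithm. The toy records only imitate a noisy AREA-like column. Because medoids are always real records, only a pairwise distance is needed, so nominal values cause no problem.

```python
from collections import Counter


def mixed_distance(a, b):
    """Hypothetical distance: |x - y| for numbers, 0/1 mismatch for nominal values."""
    total = 0.0
    for x, y in zip(a, b):
        if isinstance(x, (int, float)) and isinstance(y, (int, float)):
            total += abs(x - y)
        else:
            total += 0.0 if x == y else 1.0
    return total


def farthest_first(records, k, dist):
    """Deterministic seeding: start at record 0, then keep adding the farthest record."""
    medoids = [0]
    while len(medoids) < k:
        medoids.append(max(range(len(records)),
                           key=lambda i: min(dist(records[i], records[m]) for m in medoids)))
    return medoids


def kmedoids(records, k, dist=mixed_distance, iters=100):
    """Alternating k-medoids: cluster centers are actual records."""
    medoids = farthest_first(records, k, dist)
    for _ in range(iters):
        # Assign each record to its closest medoid.
        labels = [min(range(k), key=lambda c: dist(r, records[medoids[c]]))
                  for r in records]
        # New medoid = member with the smallest total distance to its cluster.
        new_medoids = []
        for c in range(k):
            members = [i for i, lab in enumerate(labels) if lab == c]
            if not members:
                new_medoids.append(medoids[c])
                continue
            new_medoids.append(min(members, key=lambda i: sum(
                dist(records[i], records[j]) for j in members)))
        if new_medoids == medoids:
            break
        medoids = new_medoids
    return medoids, labels


def singleton_clusters(labels):
    """Cluster ids that contain exactly one record (a hint of a noisy record)."""
    return [c for c, n in Counter(labels).items() if n == 1]


# Toy records (value, AREA-like value, class); the last one has a made-up noisy value.
records = [(1.0, 2.0, "adi"), (1.2, 2.1, "adi"), (0.9, 1.9, "adi"),
           (5.0, 6.0, "car"), (5.1, 6.2, "car"), (4.9, 5.8, "car"),
           (1.1, 200.0, "adi")]
_, labels = kmedoids(records, k=2)
print("with noisy column:", singleton_clusters(labels))
trimmed = [(r[0], r[2]) for r in records]  # drop the noisy column
_, labels_trimmed = kmedoids(trimmed, k=2)
print("without it:", singleton_clusters(labels_trimmed))
```

With the noisy column present, the noisy record ends up alone in its own cluster. After the column is dropped, it joins the other "adi" records.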
Figure 2.2.1: The K-medoids process on the Breast Tissue dataset
Figure 2.2.2: The text view result of K-medoids with six clusters
Figure 2.2.3: The centroid table for six clusters in the Breast Tissue dataset
Figure 2.2.4: The plot view of K-medoids, target class against cluster number (six clusters)
Figure 2.2.5: The text view result of K-medoids with two clusters
Figure 2.2.6: The centroid table for two clusters in the Breast Tissue dataset
Figure 2.2.7: The plot view of K-medoids, target class against cluster number (two clusters)

3) Outlier:
3.1) Breast Tissue dataset:
Analysis: We began with the Distance outlier method. It requires the number of neighbors k and a distance function, here Euclidean distance. We started with k = 10 and also set the number of outliers to 10. This flagged many outliers that represent neither errors nor new knowledge. From Phase 1 we know there is only one error, in the AREA attribute of instance 103. Therefore, we changed the number of outliers to one and kept k = 10 to retain a large number of neighbors (see Figure 3.1.1.2). The data view table in Figure 3.1.1.3 shows only one outlier, the first row, with the value true.

Applying the LOF outlier method confirmed that one is the correct number of outliers. LOF measures how far a point lies from its nearest neighbors relative to how densely those neighbors are packed (see Figure 3.1.2.2 and Figure 3.1.2.3). In the data view table in Figure 3.1.2.3, the highest outlier score also belongs to instance 103. Therefore, this instance is an error outlier: its AREA value is invalid, probably because of a mistake when the value was entered.
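The distance-based method can be sketched by scoring each point with the distance to its k-th nearest neighbor and reporting the n highest scores. This is one common definition of distance-based outliers. We assume, without having verified it, that the tool's Distance outlier behaves in a similar way. The function name and the toy points are hypothetical. The parameters mirror the setting above: k = 10 neighbors and one requested outlier.

```python
import math


def knn_distance_outliers(points, k=10, n_outliers=1):
    """Rank points by Euclidean distance to their k-th nearest neighbour.

    Returns (indices of the n_outliers highest-scoring points, all scores).
    """
    if not 1 <= k < len(points):
        raise ValueError("k must be between 1 and len(points) - 1")
    scores = []
    for i, p in enumerate(points):
        dists = sorted(math.dist(p, q) for j, q in enumerate(points) if j != i)
        scores.append(dists[k - 1])  # distance to the k-th nearest neighbour
    ranked = sorted(range(len(points)), key=lambda i: scores[i], reverse=True)
    return ranked[:n_outliers], scores


# Hypothetical data: a 4 x 3 grid of ordinary points plus one far-away point.
points = [(x, y) for x in range(4) for y in range(3)] + [(20, 20)]
top, scores = knn_distance_outliers(points, k=10, n_outliers=1)
print(top)  # [12], the far-away point
```

Raising `n_outliers` only adds the next-farthest points to the list. Every point still receives a score, whether or not it is a meaningful outlier.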
3.1.1) Distance outlier:
Figure 3.1.1.1: The Distance outlier process on the Breast Tissue dataset
Figure 3.1.1.2: The plot view of Distance outlier on the Breast Tissue dataset
Figure 3.1.1.3: The data view of Distance outlier on the Breast Tissue dataset

3.1.2) LOF outlier:
Figure 3.1.2.1: The LOF outlier process on the Breast Tissue dataset
Figure 3.1.2.2: The plot view of LOF outlier on the Breast Tissue dataset
Figure 3.1.2.3: The data view of LOF outlier on the Breast Tissue dataset

3.2) White Wine dataset:
Analysis: For this dataset we used a sample of 2000 instances, because there was not enough memory to process all the data. LOF then reported one outlier, and the reason was not obvious. The outlier lies in the range "quality = 7 && alcohol = 13", so we filtered the examples to show only that range and find out why this instance is an outlier (see Figure 3.2.1.1). The outlier is not clearly visible in Figure 3.2.1.2, so we zoomed in at 0.020 on its x-axis to obtain Figure 3.2.1.3. Finally, we compared the outlier instance with the other instances (see Figure 3.2.1.5). We conclude that the outlier represents new knowledge: a new type of white wine with quality 7 that has the minimum value of volatile acidity and the maximum value of residual sugar. So in this case we have a new-knowledge outlier, not an error outlier.

3.2.1) LOF outlier:
Figure 3.2.1.1: The LOF outlier process on the White Wine dataset
Figure 3.2.1.2: The plot view of LOF on the White Wine dataset after applying SVD to project the data from many dimensions to two (the outlier is present but not clearly visible)
Figure 3.2.1.3: Maximum zoom of the previous figure, showing the outlier clearly in red
Figure 3.2.1.4: The data view table of LOF outlier; the first row has the highest outlier score, for instance number 1449 with quality equal to seven
Figure 3.2.1.5: All rows with quality 7 and alcohol 13, used to compare the outlier row with the remaining rows; the last row is the outlier
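The LOF idea used in this section can be sketched directly from its usual definition: k-distance, reachability distance, local reachability density, and then the ratio of the neighbors' densities to the point's own density. This is a minimal sketch, not the tool's implementation. It assumes Euclidean distance, distinct points, and exactly k neighbors per point (ties at the k-th distance are ignored). The function name and toy data are hypothetical.

```python
import math


def lof_scores(points, k=3):
    """Local Outlier Factor for each point; scores well above 1 suggest an outlier."""
    n = len(points)
    # k nearest neighbours of every point (ties beyond the k-th are dropped).
    neighbours = []
    k_distance = []
    for i in range(n):
        order = sorted((math.dist(points[i], points[j]), j) for j in range(n) if j != i)
        neighbours.append([j for _, j in order[:k]])
        k_distance.append(order[k - 1][0])

    def reach_dist(i, o):
        # Reachability distance of point i from its neighbour o.
        return max(k_distance[o], math.dist(points[i], points[o]))

    # Local reachability density: inverse of the mean reachability distance.
    lrd = [k / sum(reach_dist(i, o) for o in neighbours[i]) for i in range(n)]
    # LOF: average ratio of the neighbours' density to the point's own density.
    return [sum(lrd[o] for o in neighbours[i]) / (k * lrd[i]) for i in range(n)]


# Hypothetical data: a 4 x 3 grid of ordinary points plus one isolated point.
points = [(x, y) for x in range(4) for y in range(3)] + [(20, 20)]
scores = lof_scores(points, k=3)
print(max(range(len(points)), key=lambda i: scores[i]))  # 12
```

Points inside the grid score close to 1 because their density matches that of their neighbors. The isolated point scores far above 1.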
3.2.2) DISTANCE outlier:
Analysis: When we applied Distance outlier to the 2000-instance sample of the White Wine dataset, the results surprised us: the outlier found by LOF does not appear here. So we increased the number of outliers to 100 and then to 1000, but this method still did not report row 1449 as an outlier. We therefore conclude that Distance outlier only finds the points that lie farthest from their neighbors in absolute distance, whereas LOF compares each point with the density of its own neighborhood (see Figure 3.2.2.4, Figure 3.2.2.5 and Figure 3.2.2.6).
Figure 3.2.2.1: The Distance outlier process on the 2000-instance sample of the White Wine dataset, where the number of outliers is 10 and the number of neighbors k is also 10
Figure 3.2.2.2: The plot view of the White Wine dataset showing the ten outliers found by Distance outlier
Figure 3.2.2.3: The data view table of the White Wine dataset showing the ten outliers (value true) found by Distance outlier
Figure 3.2.2.4: The Distance outlier process on the 2000-instance sample of the White Wine dataset, where the number of outliers is 100 and the number of neighbors k is 10
Figure 3.2.2.5: The plot view of the White Wine dataset showing the 100 outliers found by Distance outlier
Figure 3.2.2.6: The data view table of the White Wine dataset showing the 100 outliers (value true) found by Distance outlier; row 1449 does not appear

4) Conclusion:
1- From this experiment we conclude that LOF outlier is more accurate than Distance outlier.
2- LOF outlier can be used to identify genuine error or new-knowledge outliers. Distance outlier only ranks the points that lie farthest from their neighbors, so the points it reports are not necessarily meaningful outliers.
3- When you use K-means or K-medoids, you should choose a k that gives distinct centers (i.e. no two clusters that could be merged into one).
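Rule 3 can be turned into a small check. List every pair of centroids that are closer than a chosen tolerance. If the list is not empty, those clusters could be merged and a smaller k is worth trying. This is a hedged sketch: the function name, the tolerance and the centroid values are all hypothetical. The values loosely echo the alcohol centers near 10.8 in Section 2.1 but are not copied from the report's tables. In practice, normalize the attributes first so that no single attribute dominates the distance.

```python
import math
from itertools import combinations


def mergeable_pairs(centroids, tol):
    """Return index pairs of centroids whose Euclidean distance is below tol."""
    return [(i, j) for i, j in combinations(range(len(centroids)), 2)
            if math.dist(centroids[i], centroids[j]) < tol]


# Hypothetical one-attribute centroids for k = 4 and k = 2.
four = [(10.8,), (10.9,), (10.7,), (12.1,)]
two = [(10.3,), (12.0,)]
print(mergeable_pairs(four, tol=0.5))  # [(0, 1), (0, 2), (1, 2)]
print(mergeable_pairs(two, tol=0.5))   # []
```

The choice of `tol` is a judgment call, much like reading the centroid table by eye. The check only makes that judgment explicit and repeatable.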