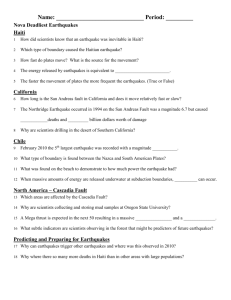

all abstracts as a Word document

advertisement