PBIS In Alternative Schools

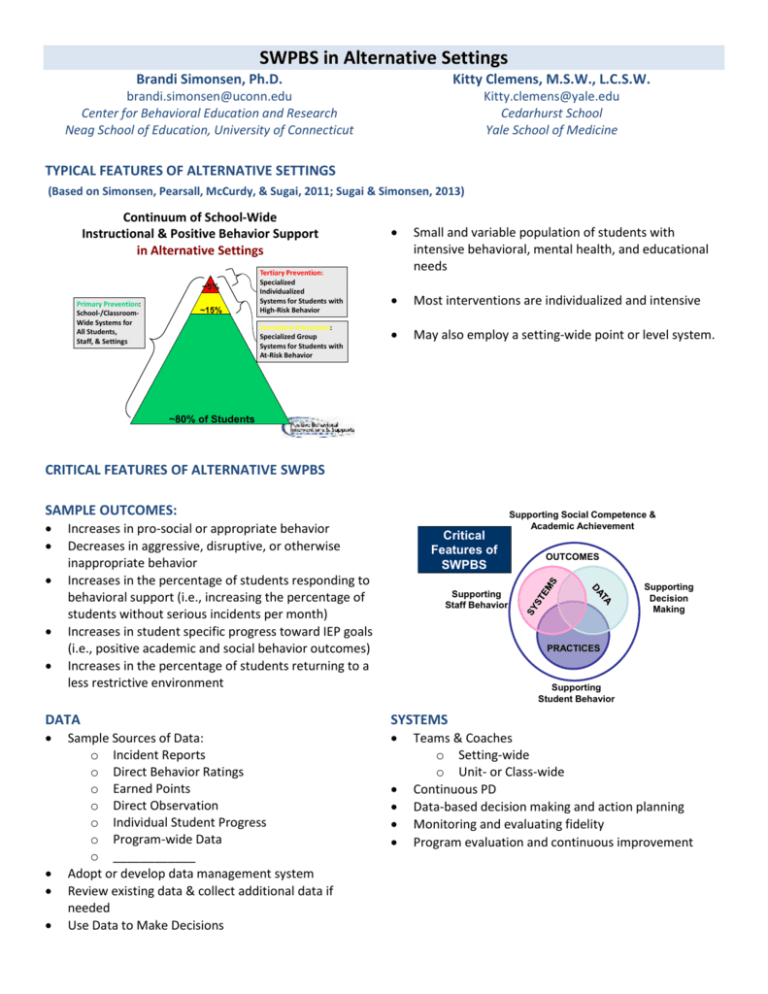

SWPBS in Alternative Settings

Brandi Simonsen, Ph.D.
brandi.simonsen@uconn.edu
Center for Behavioral Education and Research
Neag School of Education, University of Connecticut

Kitty Clemens, M.S.W., L.C.S.W.
Kitty.clemens@yale.edu
Cedarhurst School
Yale School of Medicine

TYPICAL FEATURES OF ALTERNATIVE SETTINGS
(Based on Simonsen, Pearsall, McCurdy, & Sugai, 2011; Sugai & Simonsen, 2013)

• Small and variable population of students with intensive behavioral, mental health, and educational needs
• Most interventions are individualized and intensive
• May also employ a setting-wide point or level system

Figure: Continuum of School-Wide Instructional & Positive Behavior Support in Alternative Settings (tier labels: ~80% of Students, ~15%, ~5%)
• Primary Prevention: School-/Classroom-Wide Systems for All Students, Staff, & Settings
• Secondary Prevention: Specialized Group Systems for Students with At-Risk Behavior
• Tertiary Prevention: Specialized Individualized Systems for Students with High-Risk Behavior

CRITICAL FEATURES OF ALTERNATIVE SWPBS

Figure: Critical Features of SWPBS
• OUTCOMES: Supporting Social Competence & Academic Achievement
• DATA: Supporting Decision Making
• PRACTICES: Supporting Student Behavior
• SYSTEMS: Supporting Staff Behavior

SAMPLE OUTCOMES:
• Increases in pro-social or appropriate behavior
• Decreases in aggressive, disruptive, or otherwise inappropriate behavior
• Increases in the percentage of students responding to behavioral support (i.e., increasing the percentage of students without serious incidents per month)
• Increases in student-specific progress toward IEP goals (i.e., positive academic and social behavior outcomes)
• Increases in the percentage of students returning to a less restrictive environment

DATA
• Sample Sources of Data:
  o Incident Reports
  o Direct Behavior Ratings
  o Earned Points
  o Direct Observation
  o Individual Student Progress
  o Program-wide Data
  o ____________
• Adopt or develop data management system
• Review existing data & collect additional data if needed
• Use Data to Make Decisions
SYSTEMS
• Teams & Coaches
  o Setting-wide
  o Unit- or Class-wide
• Continuous PD
• Data-based decision making and action planning
• Monitoring and evaluating fidelity
• Program evaluation and continuous improvement

PRACTICES
• Program- or School-wide
  o Clearly stated purpose and approach
  o A few positively stated expectations
  o Procedures for directly teaching expectations program-wide
  o Continuum of strategies for reinforcing expectation-following behavior
  o Continuum of strategies for correcting expectation-violating behavior
• Classroom Setting
  o Maximize structure and predictability
  o Establish, post, teach, monitor, and reinforce a small number (3-5) of positively stated expectations
  o Actively engage students in observable ways
  o Establish a continuum of strategies to acknowledge students for following expectations
  o Establish a continuum of strategies to respond when students violate expectations
• Non-classroom Setting
  o Actively supervise
  o Teach setting-specific routines and expectations directly
  o Remind and pre-correct frequently
  o Positively reinforce frequently, specifically, and regularly
• Individual Student
  o Develop data-decision rules to identify students who do not respond to Tier 1
  o Organize other supports along a continuum
  o Develop an assessment process to determine which additional intervention(s) may be appropriate
  o Collect progress monitoring data

General Process for Implementation (adapted from Sugai et al., 2010, p. 48)
1. Identify Team
2. Complete Self-Assessment
3. Develop/Adjust Action Plan
4. Implement Action Plan
5. Monitor & Evaluate Implementation

EMERGING EVIDENCE BASE
• Descriptive case studies have documented that implementing SWPBS, or similar proactive system-wide interventions, in alternative school settings results in positive outcomes:
  o Decreases in crisis interventions (e.g., restraints) and aggressive student behavior
  o Increases in percentage of students achieving the highest levels
• In addition, staff members are able to implement SWPBS with fidelity, and staff and students generally like it.
(Miller, George, & Fogt, 2005; Farkas et al., 2012; Miller, Hunt, & Georges, 2006; Simonsen, Britton, & Young, 2010)

CASE STUDY: CEDARHURST

1. What is the Cedarhurst School?
• Private, therapeutic, special education outplacement
• Students with ED and OHI labels
  o Social, emotional and behavioral problems
  o Psychiatric diagnoses
• Middle and High School (ages 11-21)
• Public school students from all over Connecticut
• Tuition paid by sending districts
• Small class size (no more than 8 per class)
• Self-contained and mainstream classrooms
• Special education teachers, social workers, behavioral support staff
• Therapeutic groups, individual counseling, crisis intervention, collaboration with collaterals
• Use of time-out

2. What does PBIS look like at Cedarhurst?
• Positively stated expectations: Responsibility, Safety, Respect
• Recognition system:
  o Students earn points, which provide access to 3 levels of privileges
    • Level A: Earned 90% of points for each expectation
    • Level B: Earned 75% of points for each expectation
    • Level C: Earned less than 75% of points for each expectation (or are a new student or recently off 1:1 status)
  o Students also earn tickets
    • Students can earn tickets for a positive behavior in each of the three expectation categories
    • When tickets are given, students are directly told why they have earned them
    • Tickets are currency and can be used to buy activities, field trips, breakfast, special lunches, themed snacks, activities with specific staff members, auctioned items, and raffled items
    • The homeroom that earns the most tickets in a week wins the “rock-on” award and is entitled to a homeroom activity (donuts for breakfast, choice of music during lunch, playing Wii during homeroom)
    • Students can use tickets to buy items in the school store
• PBIS Lessons: You can’t expect anyone to do anything until they are taught!

3. Does it work?
• Significant reduction in office referrals
• Fewer and shorter time outs

4. Why does it work?
• Annual action plan
  o Annual Goals
    • Reduce frequency and duration of time out
    • Increase percentage of students maintaining level
    • 4:1 ratio of positive reinforcement to negative consequences
    • Fidelity to the PBIS model
  o Data on progress compiled quarterly to keep us on track
  o Achieving goals promotes on-going buy-in from staff and students
• PBIS Practices
  o Start with the Universal (Tier 1)
    • Emphasis on structuring universal behavioral system that applies school-wide
    • Tier II and III won’t work unless Tier I is solid
  o Align existing practices with PBIS Universal Practices
  o Tier II
    • Implemented once Tier I is solid
    • Define criteria for plan development, implementation and fade out
    • Individual mentoring and coaching, contingent and non-contingent
• PBIS Team
  o PBIS Team coach
  o Teachers, paras, social workers, director
  o Student council provides input
  o Team meets once a week RELIGIOUSLY
  o Review data
  o Plan PBIS activities
  o Problem-solve
    • Problems are discussed by PBIS team
    • Solutions are sought from entire staff
• Daily Wrap Up Meeting
  o Entire staff meets every day for 30 minutes
    • Determine behavioral goals
    • Review data
    • Discuss levels
  o All staff have opportunity to discuss PBIS practices, effectiveness and goals
  o PBIS Team members present identified issues and ask for or offer possible solutions
    • Everyone takes ownership
• Student Investment
  o Student Council
  o Student input into rewards
  o Careers Class creates posters to advertise rewards
  o Culture of participation has built over years
    • All students participate enthusiastically
• Parent Involvement
  o Weekly communication home
    • Postcards home to emphasize positive
    • Email
    • Multi-staff approach
  o Point card as communication tool
    • Parents reward school progress at home
• Data Collection
  o Everyone participates
    • Teachers: ticket tallying, expectation card tallying, sign up for events, tracking levels
  o Other staff
• Data drives decisions
  o Student
  o Tweak reward universally
  o Tier II plan
  o Time of Day
  o Location
  o Staff

5. How is it sustained?
• Modify the program as you go to correct ineffective practices
• Three examples of program modifications:
  o Level system
    • Reducing the number of behavioral expectations
      o Simplify lessons/expectations
      o Reduce subjectivity
      o Increased the value of each point relative to level
    • Criteria for maintaining and attaining levels
      o Gradually increased percentage of points necessary
      o Then changed from overall point percentages to requiring 75% (B) or 90% (A) within each category
    • Failure to maintain level due to “major safety violations”
      o Problems:
        • No objective definition of major safety violation
        • Drawn-out arguments in staff meetings about whether students should lose their levels
        • Students not learning from consequences due to lack of clarity
    • Behavioral Goals
      o A student on a level who shows problematic behavior is given a specific behavioral goal
      o The student is taught how to achieve the goal
      o A check is given for any instance of the specific problematic behavior
      o Level A students move to B if they receive one check; Level B students move to C after they receive two checks
      o If students achieve their goal, they maintain their level and can achieve a higher level (if they have the points)
  o Data collection
    • Many team members involved in data collection, entry and processing
    • If any team member feels overburdened, we examine the process to spread the load
    • Example: Initially one person was responsible for entering all ticket data each week, as well as running data reports for the PBIS team
    • Now each person enters their own ticket data each week in a shared “ticket tally”
  o Rewards
    • Rewards are consistently given
      o Level activities
      o Ticket trips/Field Trips
      o Student of the Week/Month
    • Tickets and levels are more meaningful/valuable
      o Student input assures rewards are wanted
      o More privileges available with higher levels
        • Outside privileges during lunch
        • Use of electronics at gym
  o Ticket spend downs
    • Ticket raffle
      o Address spike in negative behaviors
      o Can only use tickets they have earned within specified time period
      o Can use tickets to buy raffle tickets
• Other Factors
  o SET Evaluation to monitor fidelity
  o Positive behavior in the classroom reinforces/rewards staff participation in the system
  o Behaviors worse in the beginning
  o Consistency and repetition (teaching expectations) led to student acceptance and success
  o GOOSE (Get Out Of School Early)
  o Healthy competition
  o Being recognized
  o Staff cohesion
  o Staff “thank you”
  o Attention is on positive behavior, which fosters positive feelings in both student and teacher/staff

DISCUSSION: LESSONS LEARNED
• Alternative schools with a large number of behavioral challenges can greatly benefit from strong, effective universal practices
• Take the time to build each component with consideration
• Use data at every step
• Make sure data guides each decision!

THANK YOU!
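
WORKED EXAMPLE: LEVEL CRITERIA

The level rules described in the Cedarhurst case study (90% of points within each expectation for Level A, 75% for Level B, and the check rule tied to behavioral goals) can be sketched in a few lines of Python. This is only an illustration, not the school's actual data system. The function names, the point totals, and the reading of "90%" and "75%" as "at least" are assumptions, as is the choice that a Level A student with several checks drops only to Level B. The sketch also assumes every expectation has a nonzero number of possible points.

```python
from typing import Mapping

# The three expectations named in the case study.
EXPECTATIONS = ("Responsibility", "Safety", "Respect")


def meets(earned: int, possible: int, percent: int) -> bool:
    """True if earned/possible is at least `percent` (integer math, no rounding)."""
    return earned * 100 >= percent * possible


def level_from_points(
    earned: Mapping[str, int],
    possible: Mapping[str, int],
    new_or_recently_off_1to1: bool = False,
) -> str:
    """Assign Level A, B, or C from points earned within each expectation."""
    if new_or_recently_off_1to1:
        return "C"  # the handout places these students on Level C
    # Assumption: "90%" and "75%" are read as "at least", per expectation.
    if all(meets(earned[e], possible[e], 90) for e in EXPECTATIONS):
        return "A"
    if all(meets(earned[e], possible[e], 75) for e in EXPECTATIONS):
        return "B"
    return "C"


def level_after_checks(level: str, checks: int) -> str:
    """Behavioral-goal rule: A drops to B after one check, B drops to C after two."""
    # Assumption: a Level A student with several checks still drops only to B.
    if level == "A" and checks >= 1:
        return "B"
    if level == "B" and checks >= 2:
        return "C"
    return level


if __name__ == "__main__":
    possible = {e: 50 for e in EXPECTATIONS}  # hypothetical weekly totals
    earned = {"Responsibility": 48, "Safety": 45, "Respect": 40}
    level = level_from_points(earned, possible)
    print(level)                         # B: Respect is 80%, below the 90% needed for A
    print(level_after_checks(level, 2))  # C: two checks move a Level B student to C
```

Requiring the threshold within every expectation, rather than on the overall total, mirrors the modification described in the case study: a student cannot offset a low Respect score with high Safety points.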