Do Random Series Exist - Association for Business and Economics

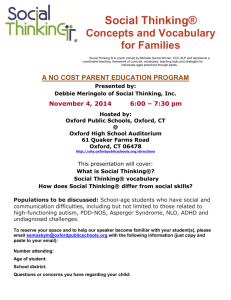


2008 Oxford Business & Economics Conference Program, ISBN : 978-0-9742114-7-3
June 22-24, 2008, Oxford, UK

Do “Random Series” Exist?

Lewis L. Smith
Consulting Economist in Energy and Advanced Technologies
mmbtupr@aol.com
787-640-3931
Carolina, Puerto Rico, USA

ABSTRACT

The existence and nature of randomness is not an arcane matter, best left to specialists. It is fundamental, not only in helping to define the nature of the universe but also in more mundane matters. Examples are making money in the stock market, improving the accuracy of economic forecasts and saving lives in hospitals, especially by the correct interpretation of diagnostic printouts.

The whole question is still open. Some say that not only quantum mechanics but also mathematics is random in at least one fundamental aspect. Others believe that someday even most “random” phenomena will be explained. Most economists side with the latter group.

However, there are lots of random-looking systems and data around. So to get about their business, economists have sought to tame this randomness with intellectual patches. Unfortunately, the patches have started to come apart, and a great many questions are now open to discussion. What do we mean by random? Does a single distribution really fit all possible random outcomes? Are the data generating processes of securities markets and their outputs chaotic, random or what? If random, how many and what kind of distributions do we need to characterize price behavior in a given market? What do we do about extreme values (outliers)? And more generally, what do we do about nonrandom systems which generate runs of data which look and/or test random? And vice versa?

To deal with these problems, this paper proposes to separate the characterization of processes from the characterization of output.
In place of random processes, we define “independent-event generators”, and in place of random series, we set forth a research program to define “operationally random runs”.

INTRODUCTION

At first glance, this subject would seem to be an arcane matter, best left to specialists such as econometricians, mathematicians, meteorologists, philosophers, physicists and statisticians. But it is not. The question of randomness is fundamental, not only in helping to define the nature of the universe but also in more mundane matters. Examples are making money in the stock market, improving the accuracy of economic forecasts and saving lives in hospitals, especially by the correct interpretation of diagnostic printouts.

Since well before the time of Leibniz (1646-1716), scholars have been arguing over whether the universe is completely explainable or whether some of its behavior is “random” and in what sense. On one hand, there is a thread running from Gödel (accidentally) to Chaitin and others which ends up saying that not only quantum mechanics but also mathematics is random in at least one fundamental aspect. On the other, there is a contrary thread running through Einstein to Wolfram, who tries to explain too much with cellular automata. In brief, as Einstein would put it, does God “play dice” with the world? And if so, when and how? (Calude 1997, Casti 2000, Chaitin 2005, Einstein 1926, Wolfram 2002.)

Economists’ hearts are with Wolfram. Indeed a strong bias towards order pervades “mainstream” economics. For example, economies and markets are alleged to spend most of their time at or near equilibrium, after the manner of self-righting life boats or stationary steam boilers, preferably one of the simpler designs invented in the 19th century. All this raises suspicions of “physics envy” and even stirs calls for a new paradigm.
(Mas-Colell 1995, Pesaran 2006, Smith 2006.)

RANDOMNESS AND ECONOMICS

However, the goal of explaining almost everything is far off, and present reality is full of systems whose data-generating processes and outputs sometimes look and/or test random, whether or not they really are. Economics in particular is heavily dependent on concepts of randomness. For example:

[1] The parameters used by econometrics to define economic relationships are stochastic. That is, they are “best” estimates of supposedly random variables, each made with a specified margin of error. Moreover, their validity depends in large part on the “quality” of these random aspects. (Kennedy 1998.)

[2] Many analysts still believe that some very important time series are generated largely or exclusively by random processes. These include the prices of securities whose markets are the largest in the world and among those with the lowest transaction costs. (Mandelbrot 2004.) Randomness also shows up in the models of agent-based computational economics. (Arthur 2006, Hommes 2006, Voorhees 2006, Wolfram 2002.)

[3] Randomness plays multiple roles in the applications to economics of the new discipline of complexity, which studies what such systems as biological species, cardiovascular systems, economies, markets and human societies have in common. For example, with too many definitions of complexity “on the table”, it is operationally convenient to define a complex system as one whose processes generate at least one “track” (time series) whose successive line segments (regions) exhibit at least four modes of behavior, one of which must be random! (Smith 2002.)

So to get about their business, economists have made a virtue of an unpleasant reality by attempting to tame randomness with a number of asymptotic truths, bald-faced assertions, fundamental assumptions and/or working hypotheses.
For example:

[1] The essence of randomness is the spatial and/or timewise independence of the numerical output of a system. This independence could be due to process design, to pure chance or to a hidden order so arcane that we cannot perceive it. But whatever the case, we are unable to predict the next outcome on the basis of any of the previous ones or any combination thereof. In brief, the process has absolutely no memory. (Beltrami 1999.)

[2] An implicit corollary of the foregoing is that each and every run of data generated by any such process is random, in a heuristic sense at least. (Pandharipande 2002.) [note 1]

[3] Conversely a run which looks and/or tests random has (hopefully) been generated by a random process.

[4] All random outcomes follow the Gaussian distribution or, thanks to the magical powers of the Central-Limit Theorem, approach it asymptotically as n increases, as if economic events had something in common with the heights of French soldiers in the 18th Century! Unfortunately we are seldom told how far away we are from this state of bliss in any particular instance.

Note 1: Some would argue that properly speaking, randomness is a property of the generating process, not of its outputs. But in practice, this distinction is frequently ignored, so we will do likewise.

DISARRAY IN THE RANDOMNESS COMMUNITY

Over time, the first of the preceding items has acquired competition and the other three have turned out to be wrong on too many occasions. In particular:

[1] The definitions of randomness currently “on the table” use a variety of incompatible concepts, such as the nature of the generating process, the characteristics of one or more of its tracks, a hybrid of these two or the length of the shortest computer program which reproduces a given track. As a result, the “randomness” of a given process or track depends on the definition used.
Indeed there is no all-purpose “index” of randomness. (Pandharipande 2002.)

[2] More often than one expects, some genuinely random processes generate runs which do not look and/or test random (in the popular sense) while non-random processes sometimes generate random ones.

[3] Since Pareto’s pioneering work with income distributions (Pareto 1896-97), there is increasing recognition that “departures from normal” among probability distributions are in fact quite normal! For example, the heights of a collection of human beings at any one point in time follow a Gaussian Distribution; the expected lives of wooden utility poles, a Poisson Distribution; and the speed of the wind at a given height and location for a given period, a Weibull Distribution. In the cases of econometric parameters, residual errors and test statistics, we find a bewildering variety of distributions, many of which are not even asymptotically Gaussian. (Razzak 2007.)

[4] In securities markets, it is quite possible for the distributions of outcomes in the “tails” of a price series to be different from that which characterizes the bulk of the sample and sometimes, from each other. (Sornette 2003.)

[5] Popular concepts of randomness do not tell us how to distinguish errors from other kinds of extreme values. Nor do they give us adequate guidance on “noise”, which need not be Gaussian.

We now examine these matters in detail from the point of view of “operating types”, such as business economists, organizational managers and strategic planners. We begin with what were long thought to be the “easy cases”, ones in which both the processes and their tracks were held to be random.

SECURITIES MARKETS

For several decades, it was “gospel truth” in academic and financial circles that the processes of securities markets were not only random but generated outputs which tested random.
In particular, the log differences of any and all prices for any period in almost all such markets were alleged to faithfully obey the Gaussian distribution, with nary a chaotic thrust, off-the-wall event or statistical outlier to disturb one’s sleep. (Lo 1999.)

In this enchanted world, happenings such as those of 10/29, 10/77, 10/87 and 03/00 had nothing to do with the dynamics or structure of a particular market or with the distribution of returns. Such occurrences were genuine, bona fide outliers, rare aberrations caused by factors which were external, unusual and unique to each occasion. Examples are the gross errors attributed to the Federal Reserve System’s Board of Governors in 1929 or those never-found “sufficient causes” of the 10/87 debacle, whose absence is a sure signature of chaotic, not random behavior. (Peters 2002, Sornette 2003, Jan 2003.)

Moreover, this illusion protected brokers. If their clients suffered heavy losses, it was not the broker’s fault. And no one could make money by “playing the market”, as opposed to investing for the long haul. Those who did were just lucky and would eventually “go broke”, as did the famous speculator Jesse Livermore. (LeFevre 1923.)

Thanks to the dogged work of Mandelbrot, Sornette, Taleb and other skeptics, researchers began to have doubts and so today, there are many open questions. Are markets random, chaotic or what? What is the relevant output: prices, price changes or run length? Should we use one, two or three distributions to describe output, and which ones? And what do we do about extreme values?

The resolution of these disputes is not an easy matter. There are many candidate distributions, and many of these “shade into” one or more of the others. Worse yet, as Sornette puts it, there is no “silver bullet” test by which one can tell if the tail of a particular distribution follows a power law.
(Bartolozzi 2004; Chakraborti 2007; Espinosa-Méndez 2007; Guastello 2005; LeBaron 2005; Lokipii 2006; Motulsky 2006; Pan 2003; Papadimitrio 2002; Perron 2002; Ramsey 1994; Scalas 2006; Seo 2007; Silverberg 2004; Sornette all; Taleb 2007.)

Of course, one may sometimes obtain a tight fit to historic data with a “custom-made” distribution obtained by the use of copula functions. But overfitting is a risk, and the methodology is complicated and requires both judgment calls and the use of a Monte Carlo experiment, which plunges us into the murky world of pseudo-random number generators, of which more later. (Giacomini 2006.)

Even if one discovers the “correct” distribution to characterize a particular output, it may not provide an adequate measure of market risk. Moreover, if the generating process is unstable, one may be forced to measure the additional risk by the risky procedure of Lyapunov exponents. (Bask 2007, Das 2002a.)

TECHNICAL ANALYSIS

To add insult to injury, those who devoutly believe that price formation in securities markets is a purely random process must face up to the existence on Wall Street of an empirical art known as “technical analysis”, which most academics either ignore or classify as “irrational behavior”. (Menkhoff 2007.)

Some of its practitioners not only make money, but have the effrontery to publish their income-tax returns on the Internet! These persons, called “technicians”, see a veritable plethora of regularities in price charts: candlesticks, cycles, double bottoms, double tops, Elliott waves, Fibonacci ratios, flags, Gann cycles, Gartley patterns, heads, pennants, pitchforks, shoulders, trend lines, triangles, wedges et cetera. (Bulkowski 2006; Griffoen 2003; Maiani 2002; Penn 2007.)
Nevertheless, the financial success of a minority of technicians and the discovery of 38,500 patterns by Bulkowski do not signify “the death of randomness” on Wall Street. To begin with, all technicians recognize that any price or price index may slip into a “trading range” from time to time and that any pattern may fail on occasion. [note 2] Furthermore, they frankly admit that many patterns are not reliable enough to trade on, that some are too hard to program and that still others have become too popular to give a trader “an edge”. So if academic “hard liners” wish, they may still look down on technicians as if they were priests of ancient Rome examining the entrails of sacred geese, but with the mantra “past is prologue”. (Blackman 2003; Bonabeau 2003; Bulkowski 2005; Connors 2004; Eisenstadt 2001; Katz 2006; Mandelbrot 2004; Menkhoff 2007; Yamanaka 2007.)

Note 2: Dukas (2006) finds that for 80 years of the Dow Jones Industrial Average, the index was in a bullish phase (upward trending) 45% of the time, a bearish phase (downward trending) 24% and in “a trading range”, 31%.

The attempts of some technicians to explain market behavior entirely as a sequence of non-random cycles have also come a cropper, except in the hands of the most experienced practitioners. These include not only such traditional techniques as Dow Theory, Elliott Waves and Gann Curves, but more modern ones such as the Bartlett algorithm, assorted Fourier transforms, high-resolution spectral analysis and multiple-signal classification algorithms. (Ehlers 2007; Hetherington 2002; Penn 2007; Ramsey 1994; Rouse 2007; Teseo 2002.)

Fortunately the techniques of wavelets, familiar to engineers and natural scientists, hold promise both for economics and for finance, and the related technique of wave-form dictionaries even more so. However, these techniques require judgment calls between a rich variety of options and (sometimes) a knowledge of programming, so careful study and considerable experience are a prerequisite to their successful use. (Schleicher 2002, Ramsey 1994, 2002.)

EXTREME VALUES

In characterizing the output of any process by a probability distribution, one must deal with extreme values. Are they part and parcel of some “fat-tailed distribution” which fully describes the data at hand, do they have their own “tail” distribution or are they truly exogenous? That is, are they anomalous, separate events, each one “coming off its own wall” perhaps, such as catastrophic events which lie beyond any power-law distribution? And what about errors in measuring or recording? How do we separate “the sheep from the goats” and obtain a “true” representation of the data-generating process? (Chambers 2007, Maheu 2007, Sornette all, Taleb 2007.)

Decisions relating to extreme values are of particular significance whenever one uses estimators in which second or higher moments play an important role. Unfortunately there is no consensus as to how to answer these questions in the case of nonlinear regressions, frequently the most appropriate ones for financial time series. The classification of a specific event as an outlier in such cases is not only difficult, but it involves at least one subjective judgment and at least one tradeoff between two desirable characteristics of parameter estimates. (LeBaron 2005; Motulsky 2006; Perron 2001 and 2002; White 2005.)

RANDOM PROCESSES WITH NON-RANDOM OUTPUTS

Paradoxically many processes known with absolute certainty to be random frequently generate runs of data which do not look and/or test random by any of the popular criteria. Coin flippers and roulette wheels are obvious examples.
Their outputs, especially the cumulative versions, may quite accidentally manifest one or more autocorrelations, including pseudo cycles, pseudo trends and unusual runs, any one of which may end abruptly when one least expects it! Needless to say, such an event can cause great distress to those not familiar with the behavior of such processes, especially if they have placed a bet on the next outcome!

For example, given n tosses of a fair coin, each possible sequence has exactly the same probability of occurring, whether it is “orderly”, like HHHHHH… and HTHTHT… or well scrambled, by far the most common case. However, it is very human to forget that random events often exhibit patterns. So upon seeing HHHHHH… in a short run, almost all lay persons and some researchers will “cry foul”. (Stewart 1998.)

First-order Markov chains with a unit root, such as those used to represent the cumulative results of coin flipping, provide some of the worst examples. The longer the run, the more likely it is that the track will exhibit long, strong “trends” and make increasingly lengthy “excursions” away from its expected mean. In fact, the variance of such a series is unbounded and grows with time.

For example, consider the “Record of 10,000 coin tosses”. (Mandelbrot 2004, p. 32.) This cumulative track takes some 800 tosses to cross the 50% line for the first time and 3,000 tosses to cross it for the second. Even after 10,000 tosses, the track has not yet made a third crossing, although it is getting close!

Of course, given enough realizations, the famous “law of averages” will always reassert itself from time to time. But frequently it fails within the shorter runs which are all that casino gamblers and “players” in securities markets can afford. So the latter usually run out of money long before things “even up”. (Leibfarth 2006.)
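
The behavior of cumulative coin-toss tracks described above can be illustrated with a short simulation. What follows is only a minimal sketch and does not reproduce Mandelbrot's chart. It assumes Python's standard `random` module as the "coin" (itself a pseudo-random number generator), and the function names `coin_walk`, `count_returns_to_zero`, `longest_run` and `variance_of_endpoints` are hypothetical helpers introduced here for illustration. The sketch tracks heads minus tails, counts how often the track touches the break-even line, finds the longest streak of identical outcomes and estimates how the variance of the final position grows with the number of tosses.

```python
import random


def coin_walk(n_tosses, seed=0):
    """Cumulative heads-minus-tails track for n_tosses flips of a fair coin."""
    rng = random.Random(seed)
    position, track = 0, []
    for _ in range(n_tosses):
        position += 1 if rng.random() < 0.5 else -1
        track.append(position)
    return track


def count_returns_to_zero(track):
    """Number of tosses after which heads and tails are exactly even."""
    return sum(1 for p in track if p == 0)


def longest_run(track):
    """Length of the longest streak of identical outcomes (all heads or all tails)."""
    # Recover the individual +1/-1 outcomes from the cumulative track.
    steps = [track[0]] + [b - a for a, b in zip(track, track[1:])]
    best = current = 1
    for prev, cur in zip(steps, steps[1:]):
        current = current + 1 if cur == prev else 1
        best = max(best, current)
    return best


def variance_of_endpoints(n_tosses, n_walks=2000, seed=1):
    """Sample variance of the final position across many independent walks."""
    rng = random.Random(seed)
    ends = [
        sum(1 if rng.random() < 0.5 else -1 for _ in range(n_tosses))
        for _ in range(n_walks)
    ]
    mean = sum(ends) / n_walks
    return sum((e - mean) ** 2 for e in ends) / (n_walks - 1)


if __name__ == "__main__":
    track = coin_walk(10_000)
    print("returns to the break-even line:", count_returns_to_zero(track))
    print("longest streak of identical outcomes:", longest_run(track))
    print("endpoint variance after 100 tosses:", variance_of_endpoints(100))
    print("endpoint variance after 400 tosses:", variance_of_endpoints(400))
```

For a fair coin the theoretical variance of the final position equals the number of tosses, so the two estimates should come out near 100 and 400. Changing the `seed` argument shows how much the number of returns to the break-even line varies from one realization to the next.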
In sum, drawdown usually trumps regression to the mean, a truth apparently forgotten by the principals of both Amaranth and Long-Term Capital. (Peters 2002.)

DETERMINISTIC PROCESSES WITH RANDOM OUTPUTS

Conversely many largely or purely deterministic systems can generate long runs which look and/or test random by popular criteria. A classic example is the chaotic system which begins as a tropical storm off the west coast of Africa, wanders erratically to within a few hundred miles of Barbados and then suddenly turns into a hurricane.

Worse yet, some purely deterministic systems are intentionally designed to generate nothing but runs of data which test random by standard tests, although in the very long run, they will repeat themselves. Prominent among them are the computer algorithms known as “Pseudo-random Number Generators” (PRNGs). Unfortunately, none of these provides an “all weather” standard of randomness. Each has its own deficiencies and idiosyncrasies, so one must choose among them with care. (Bauke 2004; Hellekalek 2004; OmniNerd 2007 and P Lab all.)

Another example is provided by the constant π, well known to ancient Greek geometers and modern students of trigonometry. Although the true value has an infinite number of digits, Leibniz worked out an exact formula for it, one from which any desired number of digits can be calculated by a short computer program. If one generates enough of these digits, one can select a long run which tests random and use it for evaluating still other series, in lieu of the output of a PRNG. This example also violates all definitions of randomness based on the length of the generating computer program. (Chaitin 2005 and Shalizi 2003.)
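
The point about π can be made concrete with a few lines of code. The following is a minimal sketch under stated assumptions, not the procedure of any author cited above. It uses Machin's arctangent formula, π = 16 arctan(1/5) − 4 arctan(1/239), in place of the series attributed to Leibniz in the text, because the latter converges too slowly to be practical. The function names `arctan_inverse`, `pi_digits` and `chi_square_uniform` and the choice of ten guard digits are assumptions made for illustration. The sketch then applies a single chi-square frequency test to the decimal digits, which is only one of the many tests a run would have to pass before being used as a stand-in for PRNG output.

```python
from collections import Counter


def arctan_inverse(x, unity):
    """Approximate arctan(1/x) * unity with the alternating Taylor series, integers only."""
    power = unity // x          # unity / x**(2k+1), starting with k = 0
    total = power
    x_squared = x * x
    divisor = 3
    sign = -1
    while power:
        power //= x_squared
        total += sign * (power // divisor)
        sign = -sign
        divisor += 2
    return total


def pi_digits(n_decimals, guard=10):
    """Return '3' followed by the first n_decimals decimal digits of pi, as a string."""
    # Extra guard digits absorb the truncation error of the integer divisions.
    unity = 10 ** (n_decimals + guard)
    pi_scaled = 4 * (4 * arctan_inverse(5, unity) - arctan_inverse(239, unity))
    return str(pi_scaled // 10 ** guard)


def chi_square_uniform(digits):
    """Chi-square statistic comparing digit counts with a uniform distribution."""
    counts = Counter(digits)
    expected = len(digits) / 10
    return sum((counts.get(str(d), 0) - expected) ** 2 / expected for d in range(10))


if __name__ == "__main__":
    decimals = pi_digits(1000)[1:]      # drop the leading 3
    statistic = chi_square_uniform(decimals)
    # 16.92 is the 5% critical value of chi-square with 9 degrees of freedom.
    print("chi-square statistic:", round(statistic, 2))
    print("passes the frequency test at 5%:", statistic < 16.92)
```

A frequency test of this kind says nothing about the order of the digits, so a run that passes it may still fail tests of serial independence.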
In summary, nearly all processes of human confection, whether deterministic, random or some of each, are sooner or later going to generate some output which looks or tests as if it came from somewhere else.

ILL-DEFINED PROCESSES WITH AMBIGUOUS OUTPUTS

In many cases, we face a double challenge. Our understanding of a process is limited, and the behavior of its output is ambiguous. There are several ways by which we may be tempted to increase our knowledge of the situation.

If the possible classifications of a given track can be reduced to two, a comparison of the series may settle the issue. Unfortunately, large sample sizes and sophisticated mathematical techniques are often required, without any guarantee of success. (Das 2002b, Geweke 2006.)

For example, in their respective fields, biologists and medical researchers have great difficulty in distinguishing between time series generated by the processes of deterministic systems and those generated by random ones, although the difference may be critical for diagnosis and treatment of patients. (Das 2005.) Likewise, “…in many cases, it may be impossible to distinguish between a process which follows a deterministic (algorithm) subject to (random) ‘noise’ and a random process (which has been) subjected to selection or reinforcement”. (Voorhees 2006.)

Alternatively we may try to construct a forecasting function from the data at hand, analyze an existing model of the process or construct our own model of the process. The first of these endeavors is fraught with peril; the other two are usually a waste of time, at least as far as randomness is concerned.

For example, large amounts of data are often required for the construction of a good forecasting function, such as Sornette’s heroic “Formula 19”, which requires eight years of stock-market prices to be properly estimated. (Sornette 2003, p. 236.)
Moreover, the longer the time series, the greater the risk that the researcher will be “ambushed” by phenomena such as changing seasonality, cycles, extreme values, fractional integration, gaps, improbable runs, log periodicity, long memory, near unit roots, nonlinearity, non-stationary noise, nonstandard distributions, omitted variables, power-law accelerations, regime switching, stationarity problems, stochastic trends, structural breaks, thick tails, thresholds, time-varying parameters, time-varying volatility and volatility clusters. Any one may affect parameters, variables or both, and many are hard to detect and/or distinguish from each other. (Bartolozzi 2004, Bassler 2006, Bernard 2008, Byrne 2006, Chakraborti 2007, Diebold 2006, Dolado 2005, Dow 2006, Harvey 1994 and 1997, Heathcote 2005, Hjalmarsson 2007, Johansen 2007, Komunjer 2007, Koop 2007, Koopmand 2006, Kuswanto 2008, Maheu 2007, Noriega 2006, Perron 2005, Pindyck 1999, Royal 2003, Sornette all, Teräsvirta 2005 and Zietz 2001.)

Moreover, it is not enough to classify whole time series or segments thereof. Operating types need to know when the mode of behavior is going to change. Unfortunately there is the “70% barrier”, in both econometrics and technical analysis. It is extremely difficult to call more than 70% of the turning points in any given time series. (Colby 2006, Gopalakrishnan 2007, Peters 2002, Pring 2004, Taleb 2007 and Zellner 2002.)

As for existing models, they have probably been designed for another purpose and therefore are unlikely to be of much help in detecting randomness. (Karagedikli 2007; Zellner 2002.)

Building a model “from scratch” is a complex, difficult and resource-consuming activity, as well established by a vast literature. (Favero 2007, Gilboa 2007, Phillips 2003 and 2007, and Zellner 2002 and 2004.)
More to the point, given the propensity of dynamic systems to imitate each other’s outputs from time to time, it is unlikely to enlighten us very much.

Speaking of the travails of central-bank economists, Razzak (2007) neatly summarizes the modeling problem: “… we will not be able to find out what the (data-generating process) is exactly. We are also not able to precisely pin down the nature of the trend”.

WHERE TO TURN?

At the present time, the only way to be sure of obtaining a genuinely random process that generates random outputs on every occasion is to have recourse to certain physical processes in nature. An example is the “Quantum Random Bit Generator”, a fast, non-deterministic, widely distributed, random-bit generator developed and operated by a group of computer scientists at the Ruđer Bošković Institute in Zagreb, Croatia. (QRBG Service 2007.) It relies on the intrinsic randomness of photonic emission in semiconductors, a quantum physical process, and on subsequent detection of these emissions by the photoelectric effect.

TOWARDS SOME OPERATIONAL DEFINITIONS

It seems futile to persist in the search for a concept which embraces all kinds of random processes and their outputs. We suggest that the term “random process” be dropped and that processes which generate independent events be called just that, “independent-event generators”. We further suggest that the term “random series” be dropped and that line segments which test random (by criteria yet to be determined) be called “operationally random runs”.

At first glance, we have a surfeit of riches with regard to tests. For example, the three volumes of Walsh (1962-68) run to 1,982 pages! In particular, there are a large number of diagnostic tests used to evaluate the residuals of functions estimated by econometric techniques, tests which can be applied to line segments in general. (Kennedy 1998.)
Unfortunately, there is no all-purpose test for randomness. Moreover, tests for randomness and related concepts suffer not only from problems of definitions but from most of the problems associated with the construction of forecasting functions. In addition, they are plagued by other problems, such as asymptotic distributions and degrees of power which vary with the alternatives to be compared and/or the characteristics of the data.

Also runs which test random may be stationary or non-stationary. White noise is always random and stationary, but not all runs which test random or stationary are white noise. (Lo 1999, Kennedy 1999, Patterson 2000.)

The most popular measures of correlation measure only linear relationships. In any case, the corresponding indices represent average conditions along the entire distance between dates, when conditions may be changing in that distance. Such fluctuations in the degree of correlation may well reduce the value of the calculated index and cause one to miss those conditions under which a particular correlation is significant. This problem is of course made particularly acute wherever structural breaks occur. (Bergsma 2006, Phillips 2004.) And so on.

Under the circumstances, we propose that a small number of researchers attempt to define an “operationally random run”, in the spirit of parallel processing. Each one would take a different stock market and (in the USA at least) emphasize a moving window of 201 trading days, since 200 days is a popular and statistically significant period for traders. At a minimum, the data in the period would be subject to the following calculations and tests, every time the window advanced a day:

(1) Tests of autocorrelation of the absolute values of the price changes.

(2) A sign test.

(3) The largest uninterrupted gain and largest uninterrupted loss, based on trading days. (Sornette 2003, pp. 51+.)

(4) The distribution of these gains and losses.

These last two items may also serve as rough alerts of the approach of a chaotic mode. In addition,

(5) The volatility index (VIX) should be downloaded for each trading day from a convenient stock-price service, and compared with its moving average for eleven trading days ending. When VIX is 5% below its 10 period moving average, stock markets tend to be in a “dead money zone”. (Connors 2004; Ruffy 2004.)

The researchers would have their own private discussion group on the Internet.

CONCLUSION

Yes, random series do exist. They are not artifacts of our ignorance. However, they do not always appear where we expect to find them, nor do their outputs always look like what we expect to see.

POSTSCRIPT

We can learn much from peering at the mathematical “entrails” produced by the manipulation of data but in the end, we cannot avoid the hard tasks of “collecting the dots” across space, time and disciplines, connecting them and then marshalling evidence, all as best we can. We can never “tell the story” without the numbers, but the numbers seldom if ever “tell the whole story”!

In the final analysis, we come back to what Professor Manoranjan Dutta of Rutgers University told Puerto Rico’s first econometrics class one summer day in 1966, “There is no substitute for knowledge of the problem!”

REFERENCES

Arthur, W. Brian (2006): Out-of-Equilibrium Economics and Agent-Based Modeling, in Judd, K. and Tesfatsion, L. (editors): Handbook of Computational Economics (vol. 2). Elsevier/North Holland, Amsterdam, Netherlands.

Bartolozzi, M.; Leinweber, D. B. and Thomas, A. W. (2004): Self-organized criticality and stock market dynamics: an empirical study. Dept. of Physics, U. of Adelaide, Australia.

Bask, Mikael (2007): Measuring potential market risk. Discussion paper 20-2007, Bank of Finland, Helsinki, Finland.
Bassler, Kevin E.; Gunaratne, Gemunu H. and McCauley, Joseph L. (Jul 2006): Markov processes, Hurst exponents and nonlinear diffusion equations. Physics Dept., University of Houston TX.

Bauke, Heiko and Mertens, Stephan (2004): Pseudo-random coins show more heads than tails. Journal of Statistical Physics 114, pp. 1149-1169.

Beltrami, Edward (1999): What is Random? Springer-Verlag, New York NY.

Bergsma, Wicher (Jul 2006): A new correlation coefficient and associated tests of independence. London School of Economics and Political Science.

Bernard, Jean-Thomas; Khalaf, Lynda; Kichian, Maral and McMahon, Sebastienne (Feb 2008): Oil prices: heavy tails, mean reversion and the convenience yield. Bank of Canada and Ministry of Finance, Quebec, Quebec, Canada.

Blackman, Matthew (May 2003): Most of what you need to know about technical analysis. Technical Analysis of Stocks and Commodities, pp. 44-49.

Bonabeau, Eric (2003): Don’t trust your gut. Harvard Business Review, reprint R0305J.

Bulkowski, Thomas N. (Sep 2006): Fulfilling the dream. Technical Analysis of Stocks and Commodities, pp. 46-49.

Bulkowski, Thomas N. (2005): Encyclopedia of chart patterns (second edition). John Wiley & Sons, New York NY.

Byrne, Joseph P. and Perman, Rodger (Oct 2006): Unit roots and structural breaks: a survey of the literature. Dept. of Economics, University of Glasgow, Glasgow, Scotland.

Calude, Cristian S. (1997): A genius’s story: two books on Gödel. Complexity 03/02.

Casti, John L. and DePauli, Werner (2000): Gödel: a life of logic. Perseus Publishing, New York NY.

Chakraborti, A.; Patriarca, M. and Santhanam, M. S. (Apr 2007): Financial time-series analysis: a brief overview. ArXiv.org, Cornell U. Library, Ithaca NY.

Chaitin, Gregory (2005): Meta Math: the Quest for Omega. Pantheon Books, New York NY.
Chambers (2007) : Robust automatic methods for outlier and error detection. Journal of the Royal Statistical Society 167 (part 2), pp. 323-339.
Colby, Robert W. (Dec 2006) : Robert W. Colby: he’s the original encyclopedia guy. Technical Analysis of Stocks and Commodities, pp. 48-56.
Connors, Larry (Dec 2004) : How Markets Really Work. Technical Analysis of Stocks and Commodities, pp. 74-79.
Das, Atin; Das, Pritha and Smith, Lewis (Nov 2005) : Random versus chaotic data: identification using surrogate method. Proceedings, EU conference on complexity, Paris, France.
Das, Atin; Das, Pritha and Roy, A. B. (2002a) : Applicability of Lyapunov exponent in EEG data analysis. Complexity International 09.
Das, Atin et al (2002b) : Nonlinear analysis of experimental data and comparison with theoretical data. Complexity 07/03, pp. 30-40.
Diebold, F. X.; Kilian, L. and Nerlove, M. (May 2006) : Time Series Analysis. Working paper 06-019. Penn Institute for Economic Research, U. of Pennsylvania.
Dolado, Juan J.; Gonzalo, Jesús and Mayoral, Laura (Sep 2005) : What is what? A simple time-domain test of long memory vs. structural breaks. Dept. of Econ., U. Carlos III, Madrid, Spain.
Dow, James and Gorton, Gary (May 2006) : Noise traders. Working paper 12256. National Bureau of Economic Research, Cambridge MA.
Dukas, Charles (Jun 2006) : The Holy Grail of trading is within us. Technical Analysis of Stocks and Commodities, pp. 62-55.
Ehlers, John F. (Jan 2007) : Fourier transform for traders. Technical Analysis of Stocks and Commodities.
Einstein, Albert (Dec 4, 1926) : Letter to Max Born.
Eisenstadt, Samuel (May 2001) : Disproving the efficient market theory. Technical Analysis of Stocks and Commodities, pp. 57-65.
Espinosa-Méndez, Christian; Parisi, Franco and Parisi, Antonino (Apr 2007) : Evidencia de comportamiento caótico en indices bursátiles americanos.
Munich Personal RePEc Archive, paper 2794.
Favero, Carlo A. (Sep 2007) : Model evaluation in macroeconomics … , working paper 327, Innocenzo Gasparini Institute for Economic Research, University of Bocconi, Italy.
Geweke, John F.; Horowitz, Joel L. and Pesaran, M. Hashem (Nov 2006) : Econometrics : a bird’s eye view. Discussion paper 2458. Institute for the Study of Labor, Bonn, Germany.
Giacomini, Enzio; Härdle, Wolfgang K.; Ignatieva, Ekaterina and Spokoiny, Vladimir (2006) : Inhomogeneous dependency modeling with time-varying copulae. Working paper 2006-075. Humboldt University, Berlin, Germany.
Gilboa, Itzhak; Postlewaite, Andrew and Schmeidler, David (Aug 2007) : Probabilities in economic modeling, working paper 07-023. Penn Institute for Economic Research, Philadelphia PA.
Gopalakrishnan, Jayanthi (Dec 2007) : Opening position. Technical Analysis of Stocks and Commodities, p. 8.
Griffioen, Gerwin A. W. (Mar 2003) : Technical analysis in financial markets. Research series 305, Tinbergen Institute. U., Amsterdam, Netherlands.
Guastello, Stephen J. (Oct 2005) : Statistical distributions and self-organizing phenomena. Nonlinear Dynamics, Psychology and Life Sciences 09/04, pp. 463-478.
Harvey, Andrew (Jan 1997) : Trends, cycles and autoregressions. The Economic Journal 107, pp. 192-201.
Harvey, Andrew and Scott, Andrew (Nov 1994) : Seasonality in dynamic regression models. The Economic Journal 104, pp. 1324-45.
Heathcote, Andrew and Elliot, David (Oct 2005) : Nonlinear dynamical analysis of noise time series. Nonlinear Dynamics, Psychology and Life Sciences 09/04, pp. 399-433.
Hellekalek, Peter (2004) : A Concise Introduction to Random Number Generation, presentation, University of Salzburg, Austria.
Hetherington, Andrew (Jul 2002) : How Dow Theory works.
Technical Analysis of Stocks and Commodities, pp. 91-94.
Hjalmarsson, Eric and Österholm, Pär (Dec 2007) : Testing for cointegration using the Johansen Methodology … Discussion paper 915. Board of Governors of the Federal Reserve System, Washington DC.
Hommes, Cars H. (2006) : Heterogeneous agent models in economics and finance, in Judd, K. and Tesfatsion, L. (editors) : Handbook of Computational Economics (vol. 2). Elsevier/North Holland, Amsterdam, Netherlands.
Johansen, Soren (Nov 2007) : Correlation, regression and cointegration of nonstationary economic time series. Discussion paper 07-25. Dept. of Econ., U. Copenhagen, Copenhagen, Denmark.
Karagedikli, Ozer; Matheson, Troy; Smith, Christie and Vahey, Shaun P. (Nov 2007) : RBC’s and DSGE’s : the computational approach to business-cycle theory and evidence. Discussion paper DP2007/15. Reserve Bank of New Zealand, Wellington NZ.
Katz, Jeffrey O. (Jan 2006) : Staying alive as a trader. Technical Analysis of Stocks and Commodities, pp. 56-61, 95.
Kennedy, Peter (1998) : A Guide to Econometrics (fourth edition). The MIT Press, Cambridge MA.
Komunjer, Ivana and Owyang, Michael T. (Oct 2007) : Multivariate forecast evaluation and rationality testing. Working paper 2007-047A. Research Division, Federal Reserve Bank of St. Louis, St. Louis MO.
Koop, Gary M. and Potter, Simon M. (May 2007) : A flexible approach to parametric inference in nonlinear time-series models. Staff report 285. Federal Reserve Bank of NY, New York NY.
Koopman, Siem Jan and Wong, Soon Yip (Nov 2006) : Extracting business cycles … using spectra … Dept. of Econometrics, Vrije Universiteit, Amsterdam, Netherlands.
Kuswanto, Heri and Sibbertsen, Philipp (2008) : Can we distinguish between common nonlinear time-series models and long memory? Dept. of Economics, Leibniz U., Hannover, Germany.
LeBaron, Blake and Samanta, Ritirupa (Nov 2005) : Extreme value theory and fat tails in equity markets. International Business School, Brandeis University, Waltham MA.
LeFevre, Edwin (1923) : Reminiscences of a stock operator. Sun Dial Press, Garden City NY.
Leibfarth, Lee (Nov 2006) : Measuring risk. Technical Analysis of Stocks and Commodities, pp. 21-26.
Lo, Andrew W. and MacKinlay, A. Craig (1999) : A non-random walk down Wall Street. Princeton U. Press, Princeton NJ.
Jokipii, Terhi (2006) : Forecasting market crashes : further international evidence, discussion paper 22-2006, Bank of Finland, Helsinki, Finland.
Maheu, John M. and McCurdy, Thomas H. (Apr 2007) : How useful are historical data for forecasting the long-run equity return distribution? Working paper 19-07, Rimini Centre for Economic Analysis, Rimini, Italy.
Maiani, Giovanni (Nov 2002) : How reliable are candlesticks? Technical Analysis of Stocks and Commodities, pp. 60-65.
Mandelbrot, Benoit B. and Hudson, Richard L. (2004) : The (mis) Behavior of Markets. Basic Books, New York NY.
Mandelbrot, Benoit (1997) : Fractals and scaling in finance. Springer Pub., New York NY.
Mas-Colell, Andreu; Whinston, Michael D. and Green, Jerry R. (1995) : Microeconomic Theory. Oxford U Press, New York NY.
Menkhoff, Lukas and Taylor, Mark P. (2007) : The obstinate passion of foreign exchange professionals : technical analysis. Journal of Economic Literature XLV, pp. 936-972.
Motulsky, Harvey J. and Brown, Ronald E. (Mar 2006) : Detecting outliers when fitting data with nonlinear regression … BMC Bioinformatics 07/123.
Noriega, Antonio E. and Ventosa-Santaulària, Daniel (Dec 2006) : Spurious cointegration : the Engle-Granger test in the presence of structural breaks. Working paper 2006-12. Banco de México, Ciudad México, México.
OmniNerd (Nov 1, 2007) : www.omninerd.com/articles/Pattern_Analysis_of_MegaMillions_Lottery_Numbers .
Pan, Heping (2003) : A joint review of technical and quantitative analysis of financial markets …, Hawaii Int’l. Conf. on Statistics and Related Fields, Honolulu HI.
Pandharipande, Ashish (Nov 2002) : Information, uncertainty and randomness. IEEE Potentials, pp. 32-34.
Papadimitriou, Spiros; Kitagawa, Hiroyuki; Gibbons, Phillip B. and Faloutsos, Christos (Nov 2002) : LOCI: fast outlier detection using the local correlation integral. School of Computer Science 02-188, Carnegie Mellon University, Pittsburgh PA.
Pareto, Vilfredo (1896-97) : Cours d'économie politique professé à l'Université de Lausanne, Lausanne, Switzerland. (3 volumes.)
Patterson, Kerry (2000) : An introduction to applied econometrics. St. Martin’s Press, New York NY.
Penn, David (Sep 2007) : Prognosis Software Development. Technical Analysis of Stocks and Commodities, pp. 68-71.
Perron, Pierre (Apr 2005) : Dealing with structural breaks. Boston University, Boston MA. (prepared for Palgrave Handbook of Econometrics, vol. I.)
Perron, Pierre and Rodríguez, Gabriel (Apr 2002) : Searching for additive outliers in non-stationary time series. Preprint for Journal of Time Series Analysis.
Reiss, Rolf-Dieter and Thomas, Michael (2001) : Statistical analysis of extreme values. Birkhäuser Verlag, New York NY.
Pesaran, Hashem (Nov 2006) : Econometrics, a bird’s eye view. IZA discussion paper 2458. Institute for the Study of Labor, Bonn, Germany.
Peters, Edgar (Dec 2002) : Butterflies in China, market corrections in New York. Technical Analysis of Stocks and Commodities, pp. 79-86.
Phillips, Peter C. B. (Jul 2004) : Challenges of trending time series econometrics. Discussion paper 1472. Cowles Foundation, Yale University, New Haven CT.
Phillips, Peter C. B.
(Mar 2003) : Laws and limits of econometrics. Discussion paper 1397. Cowles Foundation, Yale University, New Haven CT.
Phillips, Peter C. B. and Yu, Jun (Jan 2007) : Maximum likelihood and Gaussian estimation of continuous time series in finance. Discussion paper 1957. Cowles Foundation, Yale University, New Haven CT.
Pindyck, Robert S. (1999) : The long-run evolution of energy prices. The Energy Journal 20/02.
P Lab (2007) : (random number) Generators. U. Salzburg, Austria.
P Lab (2007) : Tests (of random number generators). U. Salzburg, Austria.
Pring, Martin J. (Apr 2004) : Do price patterns really work? Technical Analysis of Stocks and Commodities, pp. 31-37.
QRBG Service (2007) : Quantum random bit generator. < http://random.irb.hr/ > .
Ramsey, James B. (2002) : Wavelets in economics and finance : past and future. Studies in Nonlinear Dynamics and Econometrics 06/03.
Ramsey, James B. and Zhang, Zhifeng (Feb 1994) : The application of wave-form dictionaries to stock-market index data, RR 94-05. C.V. Starr Center for Applied Economics, New York University, New York NY.
Razzak, Weshah (Mar 2007) : A perspective on unit root and cointegration in applied macroeconomics. Paper 1970. Munich Personal RePEc Archive. U. Munich, Munich, Germany.
Rouse, William B. (Mar 2007) : Complex engineered, organizational and natural systems, proposal to Engineering Directorate, National Science Foundation, Washington DC.
Royal Swedish Academy (Oct 2003) : Time series econometrics : cointegration and autoregressive conditional heteroskedasticity. Stockholm, Sweden.
Ruffy, Frederic (May 2004) : Four measures of market volatility. Technical Analysis of Stocks and Commodities, pp. 58-61.
Scalas, Enrico and Kim, Kyungsik (Aug 2006) : The art of fitting financial time series with Levy-stable distributions. Paper 336. Munich Personal RePEc Archive. U. Munich, Munich, Germany.
Schleicher, Christophe (Jan 2002) : An introduction to wavelets for economists, working paper 2002-3. Bank of Canada, Ottawa, Ontario, Canada.
Seo, Myung Hwan (Mar 2007) : Estimation for nonlinear error-correction models. Discussion paper EM-2007-517. London School of Economics, London UK.
Shalizi, Cosma R. (Jul 2003) : Methods and techniques of complex systems. Center for the Study of Complex Systems, U Michigan, Ann Arbor MI, pp. 31-33 and p. 31, footnote 20.
Silverberg, Gerald and Verspagen, Bart (2004) : Size distributions of innovations revisited … Research memo 2004-021. Maastricht Economic Research Institute on Innovation and Technology, Maastricht University, Maastricht, Netherlands.
Smith, Lewis L. (Oct 2006) : Notes for a new paradigm for economics, Proceedings of Sixth Global Conference on Business and Economics, Harvard University, Cambridge MA.
Smith, Lewis L. (Jan 2002) : Economies and markets as complex systems, Business Economics. National Association for Business Economics.
Sornette, Didier (Jul 2007) : Probability distributions in complex systems. Encyclopedia of Complexity and System Science, Springer Science, New York NY.
Sornette, Didier (2003) : Why Stock Markets Crash. Princeton U Press, Princeton NJ.
Sornette, Didier (Jan 2003) : Critical Market Crashes. ArXiv.org. Cornell U Library, Ithaca NY.
Stewart, Ian (Apr 1998) : Repealing the law of averages. Scientific American, pp. 102-104.
Taleb, Nassim N. (2007) : The Black Swan. Random House, New York NY.
Teräsvirta, Timo (May 2005) : Forecasting economic variables with nonlinear models. Stockholm School of Economics, Stockholm, Sweden.
Teseo, Rudolf (Jul 2002) : Recognizing Elliott Wave patterns. Technical Analysis of Stocks and Commodities, pp. 94-97.
Voorhees, Burton (2006) : Emergence and (the) induction of cellular automata rules via probabilistic reinforcement paradigms. Complexity 11/03, pp. 44-57.
Walsh, J. E. (1962-68) : Handbook of Nonparametric Statistics (three vol.). Van Nostrand NJ.
White, Halbert (Jul 2005) : Approximate nonlinear forecasting methods. Dept. of Economics, U.C. San Diego, San Diego CA.
Wolfram, Stephen (2002) : A new kind of science. Wolfram Media, Champaign IL.
Yamanaka, Sharon (Sep 2007) : Counting crows. Technical Analysis of Stocks and Commodities, pp. 48-53.
Zellner, Arnold (2004) : Statistics, economics and forecasting. Cambridge U Press, Cambridge UK.
Zellner, Arnold (2002) : My experience with nonlinear dynamic models in economics. Studies in Nonlinear Dynamics and Econometrics 06/02.
Zietz, Joachim (fall 2001) : Cointegration versus traditional econometric techniques in applied economics. Eastern Economic Journal 21/03, pp. 469-482.
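
The VIX comparison described in item (5) of the monitoring list (a daily VIX reading set against its own moving average, with a reading 5% below the average taken as a sign of a “dead money zone”) can be illustrated with a short Python sketch. This is only one possible reading of the rule, not the procedure used by Connors or Ruffy. The function name `dead_money_flags`, the simple (unweighted) moving average, the inclusion of the current day in the average, the reading of “5% below” as “at least 5% below”, and the sample figures are all assumptions made for illustration.

```python
from collections import deque


def dead_money_flags(vix_closes, window=10, threshold=0.05):
    """Flag days on which VIX sits at least `threshold` below its moving average.

    Assumptions (not taken from the paper): a simple moving average over the
    last `window` closes, with the current day's close included in the average.
    Returns one entry per day: None until `window` closes are available,
    then True or False.
    """
    flags = []
    recent = deque(maxlen=window)  # holds only the last `window` closes
    for close in vix_closes:
        recent.append(close)
        if len(recent) < window:
            flags.append(None)  # not enough history yet
            continue
        average = sum(recent) / window
        flags.append(close <= (1.0 - threshold) * average)
    return flags


if __name__ == "__main__":
    # Hypothetical closes, invented purely to exercise the function.
    sample = [20.0] * 10 + [18.0, 19.5]
    for day, flag in enumerate(dead_money_flags(sample), start=1):
        print(day, flag)
```

The `window` parameter is left adjustable because the text mentions both an eleven-day and a 10-period average; a researcher would set it to whichever definition the group adopts.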