July 27th 2012 Scheme for an application to extract 1D spectra out

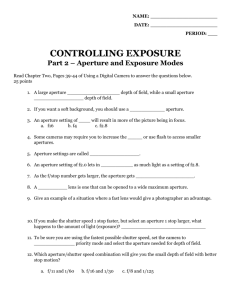


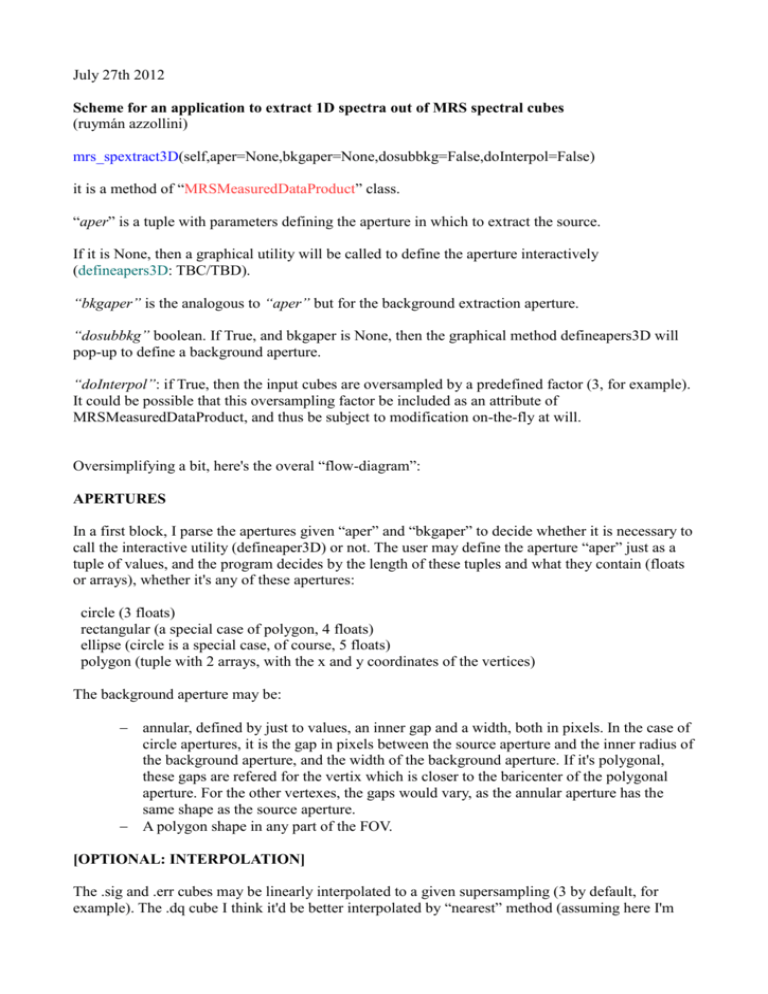

July 27th 2012
Scheme for an application to extract 1D spectra out of MRS spectral cubes (Ruymán Azzollini)

mrs_spextract3D(self, aper=None, bkgaper=None, dosubbkg=False, doInterpol=False)

This is a method of the “MRSMeasuredDataProduct” class.

“aper”: a tuple with the parameters defining the aperture in which to extract the source. If it is None, a graphical utility is called to define the aperture interactively (defineapers3D: TBC/TBD).
“bkgaper”: the analogue of “aper” for the background extraction aperture.
“dosubbkg”: boolean. If True and bkgaper is None, the graphical method defineapers3D pops up to define a background aperture.
“doInterpol”: if True, the input cubes are oversampled by a predefined factor (3, for example). This oversampling factor could be included as an attribute of MRSMeasuredDataProduct, and thus be open to modification on the fly.

Oversimplifying a bit, here's the overall “flow-diagram”:

APERTURES

In a first block, I parse the given “aper” and “bkgaper” to decide whether it is necessary to call the interactive utility (defineapers3D) or not. The user may define the aperture “aper” just as a tuple of values, and the program decides from the length of the tuple and from what it contains (floats or arrays) which of these apertures it is:
- circle (3 floats)
- rectangle (a special case of polygon, 4 floats)
- ellipse (the circle is a special case, of course; 5 floats)
- polygon (a tuple with 2 arrays, holding the x and y coordinates of the vertices)

The background aperture may be:
- annular, defined by just two values, an inner gap and a width, both in pixels. For circular apertures, these are the gap in pixels between the source aperture and the inner radius of the background aperture, and the width of the background aperture. If the aperture is polygonal, these gaps refer to the vertex closest to the barycenter of the polygonal aperture.
For the other vertices the gaps vary, as the annular aperture has the same shape as the source aperture.
- a polygon shape in any part of the FOV.

[OPTIONAL: INTERPOLATION]

The .sig and .err cubes may be linearly interpolated to a given supersampling (3 by default, for example). I think the .dq cube would be better interpolated with the “nearest” method (assuming here that I'm using scipy.interpolate.griddata).

MASKING

Create masks to ignore pixels with NaN values or flagged with any condition(s) we want to ignore, and to select the source or the background pixels. To build the mask that discards pixels with certain flags, a routine “checkflags” is to be used (TBD, could be of general use). It is just a function that returns True if a pixel (or an array of pixels?) has some flag(s) raised.

Two such masks are created, one for the source and one (optional) for the background. The masks have a value of 1. in the spaxels to be considered, and 0. in those that are not. Fractional values for partially included pixels are not yet considered. The main problem there is the complexity of determining the fraction of a pixel included in an aperture, and of doing it fast. I still have to think this through.

EXTRACTION

Finally comes the actual extraction of the spectrum and the background subtraction. The .sig flux cube is multiplied by the aperture mask and then collapsed in the spatial directions to obtain the spectrum, “spc”. The same applies to the background, though using the background aperture. The resulting background spectrum is then scaled to give the average background times the area of the object aperture, and subtracted from the spectrum.

The error in spc is computed from the .err cube. The error of spc before background subtraction is just the sum in quadrature of the errors of each contributing pixel. The error in the background is a bit more complicated. The average variance would be the sum of the variances of the contributing pixels, divided by the number of pixels.
Then this average variance, multiplied by the number of active pixels in the source aperture, would be added to the variance of the spectrum. The error would be the square root of this total variance.

With regard to the Data Quality, I've come to two alternative (or complementary) ideas:

1) The simplest would be to give the number of active pixels (in the source and in the background apertures) that are flagged as “X”. So it could be an array of dimensions NxF, where “N” is the number of wavelength bins and “F” is the number of flags (like “cosmicray”, “hot-pixel” or “bad-slope”). Some of the values could be 0, if no pixel in the aperture(s) had this flag at a certain wavelength bin.

2) Another possibility would be to define a new parameter, the IDQ, or "integrated" Data Quality, as follows. It would have a value between 0 (useless) and 1 (perfect quality), and it would weight the quality of each pixel that enters into the calculation of "spc". For example, let's assume we have 3 different types of quality flags, A, B and C. Then we'd define the IDQ as

IDQ = exp(-wA * nA/nT) * exp(-wB * nB/nT) * exp(-wC * nC/nT)

where “nX” is the number of pixels in the aperture that are flagged as X (X = A, B or C), and “nT” is the total number of pixels in the aperture. The “wX” are just positive numbers that weight the relevance of flags A, B or C. The values of these weights would have to be found by trial and error, so as to be informative of the quality of every datum in spc.
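
To make the EXTRACTION and IDQ prescriptions above more concrete, here is a minimal NumPy sketch. It is only an illustration, not the mrs_spextract3D implementation: the function names extract_spectrum and integrated_dq, the (wavelength, y, x) axis order, and the use of plain arrays in place of the .sig and .err cubes are all assumptions made for the example. Flag checking (“checkflags”) is left out; only NaN pixels are masked here.

```python
import numpy as np


def extract_spectrum(sig, err, src_mask, bkg_mask=None):
    """Collapse a cube inside 0/1 aperture masks (hypothetical helper).

    sig, err : arrays of shape (n_wave, ny, nx)   [assumed axis order]
    src_mask, bkg_mask : arrays of shape (ny, nx), 1. = use spaxel, 0. = ignore
    Returns (spc, spc_err), each of shape (n_wave,).
    """
    # Ignore pixels with NaN flux or error, per wavelength plane.
    good = np.isfinite(sig) & np.isfinite(err)

    # Source: sum of fluxes, and sum in quadrature of the errors.
    src = good & (src_mask > 0)
    n_src = src.sum(axis=(1, 2))
    spc = np.where(src, sig, 0.0).sum(axis=(1, 2))
    var = np.where(src, err ** 2, 0.0).sum(axis=(1, 2))

    if bkg_mask is not None:
        bkg = good & (bkg_mask > 0)
        n_bkg = np.maximum(bkg.sum(axis=(1, 2)), 1)  # avoid division by zero
        # Average background and average variance per background pixel.
        bkg_mean = np.where(bkg, sig, 0.0).sum(axis=(1, 2)) / n_bkg
        bkg_var_mean = np.where(bkg, err ** 2, 0.0).sum(axis=(1, 2)) / n_bkg
        # Scale both to the number of active source pixels, as in the text.
        spc = spc - bkg_mean * n_src
        var = var + bkg_var_mean * n_src

    return spc, np.sqrt(var)


def integrated_dq(flag_counts, n_total, weights):
    """IDQ per wavelength bin: product over flags X of exp(-wX * nX / nT).

    flag_counts : (n_wave, n_flags) number of flagged pixels per flag type
    n_total     : (n_wave,) total number of pixels in the aperture
    weights     : (n_flags,) positive weights wX (to be tuned by trial and error)
    """
    flag_counts = np.asarray(flag_counts, dtype=float)
    n_total = np.maximum(np.asarray(n_total, dtype=float), 1.0)
    weights = np.asarray(weights, dtype=float)
    frac = flag_counts / n_total[:, None]
    # A product of exponentials is the exponential of the summed exponents.
    return np.exp(-(frac * weights).sum(axis=1))


# Usage on a toy 2-plane, 3x3 cube: central source pixel, background elsewhere.
cube = np.ones((2, 3, 3))
cube[:, 1, 1] = 11.0
errors = np.full((2, 3, 3), 2.0)
source = np.zeros((3, 3))
source[1, 1] = 1.0
spectrum, spectrum_err = extract_spectrum(cube, errors, source, 1.0 - source)
```

The background error term here follows the prescription in the text literally. If the pixel errors are independent, strict propagation of the scaled mean background would instead give nS² times the summed background variance divided by nB² (nS and nB being the numbers of active source and background pixels), which differs from the term above by a factor nB/nS; which of the two is wanted is a design decision for the real method.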