A Novel and Optimized Parallel Approach for ETL Using Pipelining

Deepshikha Aggarwal#, V. B. Aggarwal*

# Department of IT, Jagan Institute of Management Studies, Delhi

deepshikha.aggarwal@jimsindia.org

vbaggarwal@jimsindia.org

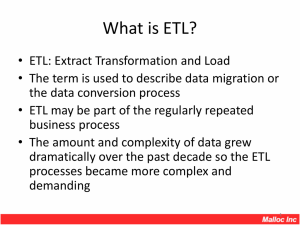

Abstract - Companies hold large amounts of valuable data spread across their servers and networks that need to be moved from one location to another, such as from one business center to another or to a data warehouse for analysis. The problem is that the data lies in different sorts of heterogeneous systems, and therefore in different formats. To accumulate data in one place and make it suitable for strategic decisions, we need a data warehouse system. This consists of extract, transform and load (ETL) software, which extracts data from various sources, transforms it into new data formats according to the required information needs, and then loads it into the desired data structure(s) such as a data warehouse. Such software takes an enormous amount of time, which makes the process very slow. To deal with the time taken by the ETL process, in this paper we present a new technique of ETL using pipelining. We have divided the ETL process into various segments which work simultaneously using pipelining and reduce the time for ETL considerably.

Keywords— Data Warehouse, Data Mining, Data Mart,

pipelining, nested pipelining, parallel computing.

I. INTRODUCTION

In today's world, businesses demand software that processes large data volumes at lightning speed. Everybody wants to have information and reports at a single click. In this fast-changing competitive environment, data volumes are increasing exponentially, and hence there is an increasing demand on data warehouses to deliver available information instantly. Additionally, data warehouses should generate consistent and accurate results [1]. In a data warehouse, data is extracted from operational databases, ERP applications, CRM tools, Excel spreadsheets, flat files, etc. It is then transformed to match the data warehouse schema and business requirements and loaded into the data warehouse database in the desired structures. Data in the data warehouse is added periodically (monthly, daily or hourly), depending on the purpose of the data warehouse and the type of business it serves [2].

Because ETL is a continuous and frequent part of a data warehouse process, ETL processes must be automated and well-designed. An ETL system is necessary for the accomplishment of a data warehousing project. ETL is the process that involves Extraction, Transformation and Loading.

II. EXTRACTION

The first part of an ETL process is to extract the data from various source systems. Most data warehousing projects consolidate data from different sources. These systems may use different data organizations and formats. Common data source formats are relational databases and flat files, but they may also include non-relational database structures such as IMS or other data structures such as VSAM or ISAM. To improve the quality of data during the extraction process, two operations are performed:

Data profiling.

Data cleaning.

A. Data Profiling

Data profiling is the process of examining the data available in an existing data source (e.g. a database or a file) and collecting statistics and information about that data [11]. The purpose of data profiling is to identify the relationships between columns, check for integrity constraints, and check for ambiguous, incomplete or incorrect values in the data. Data profiling therefore makes it possible to eliminate errors arising from these causes.

After this, the process of data cleaning is executed, in which we perform the following tasks:

Filling in the missing values.

Smoothing noisy data.

Identifying or removing outliers.

Resolving inconsistency.

Resolving redundancy caused by integration.

B. Data Cleaning

Scrubbing or data cleansing is removing or correcting errors and inconsistencies present in operational data values (e.g., inconsistently spelled names, impossible birth dates, out-of-date zip codes, missing data, etc.). It may use pattern recognition and other artificial intelligence techniques for improving the data quality [7].

Formal programs in total quality management (TQM) can also be implemented for data cleaning. These focus on defect prevention rather than defect correction. The quality of data improves by:

Fixing errors such as misspellings, erroneous dates, incorrect field usage, mismatched addresses, missing data, duplicate data and inconsistencies.

Data cleaning also involves decoding, reformatting, time stamping, conversion, key generation, merging, error detection/logging and locating missing data.
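The profiling and cleaning tasks described above can be illustrated with a minimal Python sketch. It is only an illustration under stated assumptions, not the implementation used in this paper: the record layout (`id`, `name`, `age`), the use of the median to fill missing values, and the fixed valid range used to detect outliers are all hypothetical choices.

```python
from statistics import median


def profile(rows, field):
    """Data profiling: collect simple statistics about one field."""
    values = [r.get(field) for r in rows]
    present = [v for v in values if v is not None]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
    }


def remove_duplicates(rows, key):
    """Resolve redundancy: keep the first row seen for each key value."""
    seen, unique = set(), []
    for r in rows:
        if r[key] not in seen:
            seen.add(r[key])
            unique.append(r)
    return unique


def remove_outliers(rows, field, low, high):
    """Drop rows whose value lies outside [low, high]; missing values are kept."""
    return [r for r in rows if r.get(field) is None or low <= r[field] <= high]


def fill_missing(rows, field):
    """Fill missing values with the median of the known values (an assumed rule)."""
    fill = median(r[field] for r in rows if r.get(field) is not None)
    return [{**r, field: fill if r.get(field) is None else r[field]} for r in rows]


# Hypothetical operational records with typical defects.
rows = [
    {"id": 1, "name": "Ann", "age": 34},
    {"id": 2, "name": "Bob", "age": None},   # missing value
    {"id": 3, "name": "Cid", "age": 230},    # impossible age
    {"id": 1, "name": "Ann", "age": 34},     # duplicate record
    {"id": 4, "name": "Dee", "age": 40},
]

report = profile(rows, "age")
cleaned = fill_missing(
    remove_outliers(remove_duplicates(rows, "id"), "age", 0, 120), "age"
)
```

Here profiling is run first so that the number of missing and distinct values is known before any record is changed; the cleaning steps are then applied in sequence to the same set of rows.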

The process of extraction also includes parsing the extracted data to check whether it meets the desired structure or pattern. If it does not, the data may be rejected. Designing and creating the extraction process is often one of the most time-consuming tasks in the ETL process and, indeed, in the entire data warehousing process. The source systems might be very complex and poorly documented, and thus determining which data needs to be extracted can be difficult. The data normally has to be extracted not just once but several times in a periodic manner, to supply all changed data to the warehouse and keep it up to date. Moreover, the source system typically cannot be modified, nor can its performance or availability be adjusted, to accommodate the needs of the data warehouse extraction process.

Extraction is also known as capturing, which means extracting the relevant data from the source files used to fill the enterprise data warehouse (EDW). The relevant data is typically a subset of all the data contained in the operational systems. The two types of capture are:

Static – A method of capturing a snapshot of the required source data at a point in time, for initial EDW loading.

Incremental – Used for ongoing updates of an existing EDW; it therefore captures only the changes that have occurred in the source data since the last capture.
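The difference between the two types of capture can be sketched as follows. This is a minimal illustration, assuming each source row carries a hypothetical `updated_at` timestamp that records its last modification; real source systems may expose changes differently (for example through logs or triggers).

```python
from datetime import datetime


def static_capture(source_rows):
    """Static capture: a snapshot of all required source rows at a point in time."""
    return [dict(r) for r in source_rows]


def incremental_capture(source_rows, last_capture):
    """Incremental capture: only the rows changed since the previous capture."""
    return [dict(r) for r in source_rows if r["updated_at"] > last_capture]


# Hypothetical source table; `updated_at` is an assumed change-tracking column.
source = [
    {"id": 1, "updated_at": datetime(2024, 1, 1)},
    {"id": 2, "updated_at": datetime(2024, 1, 5)},
    {"id": 3, "updated_at": datetime(2024, 1, 9)},
]

initial_load = static_capture(source)
changes = incremental_capture(source, last_capture=datetime(2024, 1, 4))
changed_ids = [r["id"] for r in changes]
```

The static capture copies every row for the initial load, while the incremental capture returns only the rows with identifiers 2 and 3, which were modified after the previous capture.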

III. TRANSFORMATION

The transform phase applies a set of instructions or functions to the extracted data so as to convert the different data formats into a single format which can be loaded. The following types of transformation may be required [8]:

Selecting only certain columns to load (those needed in the decision-making process), or selecting null columns not to load

Translating coded values (e.g., if the source system stores M for male and F for female, but the warehouse stores 1 for male and 2 for female)

Encoding free-form values (e.g., mapping "Male", "M" and "Mr" onto 1)

Deriving a new calculated value (e.g., Age = CurrentDate - DOB)

Joining together data from multiple sources (e.g., lookup, merge, etc.)

Summarizing multiple rows of data (e.g., total sales for each month)

Generating surrogate key values

Transposing or pivoting (turning multiple columns into multiple rows or vice versa)

Splitting a column into multiple columns (e.g., putting a comma-separated list specified as a string in one column as individual values in different columns)

The transformation phase is divided into the following tasks:

Smoothing (removing noisy data)

Aggregation (summarization, data cube construction)

Generalization (hierarchy climbing)

Attribute construction (new attributes constructed from the given ones)

IV. LOADING

This phase loads the transformed data into the data warehouse. The execution of this phase varies depending on the needs of the organization. For example, if we maintain sales records on a yearly basis, then the data in the data warehouse will be reloaded once a year. So the frequency of reloading or updating the data depends on the type of requirements and the type of decisions that we are going to make.

This phase interacts directly with the database, including its constraints and triggers, which helps to improve the overall quality of the data and the ETL performance.

V. ETL TOOLS

The ETL process can be implemented on various software platforms. Various tools have been developed to make this process more reliable and advanced. Some of the tools available for ETL are [9]:

Informatica (Informatica Corporation)

Oracle Warehouse Builder (Oracle Corporation)

Transformation Manager (ETL Solutions)

DB2 Universal Enterprise Edition (IBM)

Cognos Decision Stream (IBM Cognos)

SQL Server Integration Services (Microsoft)

Ab Initio (Ab Initio Software Corporation)

DataStage (IBM) [5]
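The transformation types listed in Section III do not depend on any particular tool. As a minimal sketch, a few of them can be written in plain Python. The function names, the field names `month` and `amount`, and the sample values are hypothetical and serve only to illustrate the examples given in that section.

```python
from datetime import date

GENDER_CODES = {"M": 1, "F": 2}          # source code -> warehouse code
MALE_SPELLINGS = {"male", "m", "mr"}     # free-form spellings mapped onto 1


def translate_code(code):
    """Translating coded values: M/F in the source become 1/2 in the warehouse."""
    return GENDER_CODES[code]


def encode_free_form(value):
    """Encoding free-form values: "Male", "M" and "Mr" map onto 1, others onto None."""
    return 1 if value.strip().lower() in MALE_SPELLINGS else None


def derive_age(dob, current_date):
    """Deriving a new calculated value: age in whole years from the date of birth."""
    had_birthday = (current_date.month, current_date.day) >= (dob.month, dob.day)
    return current_date.year - dob.year - (0 if had_birthday else 1)


def split_column(value):
    """Splitting a comma-separated string into individual values."""
    return [part.strip() for part in value.split(",")]


def total_sales_per_month(rows):
    """Summarizing multiple rows: total sales for each month."""
    totals = {}
    for row in rows:
        totals[row["month"]] = totals.get(row["month"], 0) + row["amount"]
    return totals


sales = [
    {"month": "2024-01", "amount": 100},
    {"month": "2024-01", "amount": 50},
    {"month": "2024-02", "amount": 70},
]
monthly = total_sales_per_month(sales)
age = derive_age(date(1990, 6, 15), date(2024, 6, 14))
```

Each function corresponds to one transformation type, so the functions can be applied one after another to the extracted rows before loading.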

VI. CHALLENGES FOR ETL

The ETL process can involve a number of challenges and operational problems. Since a data warehouse is assembled by integrating data from a number of heterogeneous sources and formats, the ETL process has to bring all the data together in a standard, homogeneous environment. Following are some of the challenges:

Dealing with diversity and disparities in source systems.

Need to deal with source systems on multiple platforms and different operating systems.

Many source systems are older legacy applications running obsolete database technologies.

Historical changes in data values are not preserved in source operations.

This process takes an enormous amount of time.

VII. PARALLEL PROCESSING IN ETL

Parallel computing is the simultaneous use of more than one CPU or processor core to execute a program or multiple computational threads. Parallel processing makes programs run faster because there are more engines (CPUs or cores) running them.

In order to improve the performance of ETL software, parallel processing may be implemented. This has enabled the evolution of a number of methods to improve the overall performance of ETL processes when dealing with large volumes of data.

There are three main types of parallelism as implemented in ETL applications:

Data: Splitting a single sequential file into smaller data files to provide parallel access.

Pipeline: Allowing the simultaneous running of several components on the same data stream.

Component: The simultaneous running of multiple processes on different data streams in the same job.

All three types of parallelism are usually combined in a single job [2][3].

VIII. A NOVEL TOOL FOR ETL USING PIPELINING

Problem with ETL design

The major problem with the existing ETL models is the time taken by the process. Several models have been introduced to reduce this time span. In previous sections we have discussed the ETL process and the challenges faced by this process. Every new design/model has been developed with the aim of reducing the time taken to extract, transform and load data into the data warehouse.

Solution: A new design introduced with the concept of nested pipelining

To perform extraction, transformation and loading for a data warehouse, we use pipelining. Pipelining is a technique where the complete process is divided into various segments and all these segments can work simultaneously. In our model we have divided the ETL process into 9 segments as shown in Table 1.

TABLE I

SEGMENTATION OF ETL PROCESS

| Segment | Operation |
| --- | --- |
| Segment1 | "Fmv", which fills missing values |
| Segment2 | "Smd", which smooths noisy data |
| Segment3 | "Ro", which removes outliers |
| Segment4 | "Rc", which removes inconsistency |
| Segment5 | "Sm", smoothing |
| Segment6 | "Agg", aggregation |
| Segment7 | "AC", attribute construction |
| Segment8 | "Gen", generalization |
| Segment9 | Load, which loads the data |

Data is divided into different modules. During the first clock cycle, module1 enters segment1. During the 2nd clock cycle, module1 moves to segment2 and module2 enters segment1. During the 3rd clock cycle, module1 enters segment3, module2 enters segment2 and module3 enters segment1. In this way all segments work together on a number of data modules simultaneously, as shown in Table 2.
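The movement of data modules through the segments can be sketched as a small simulation. It is an illustration only, assuming that every segment takes exactly one clock cycle and that a new module enters segment1 on every cycle; the function name `pipeline_schedule` is hypothetical, and the segment abbreviations are those of Table I.

```python
SEGMENTS = ["Fmv", "Smd", "Ro", "Rc", "Sm", "Agg", "AC", "Gen", "Load"]


def pipeline_schedule(num_modules, num_segments):
    """Return one row per clock cycle.

    row[s] is the number of the module occupying segment s+1 during that
    cycle, or None when the segment is idle.
    """
    total_cycles = num_segments + num_modules - 1
    schedule = []
    for cycle in range(1, total_cycles + 1):
        row = []
        for segment in range(1, num_segments + 1):
            # Module m enters segment1 in cycle m and advances one segment per cycle.
            module = cycle - segment + 1
            row.append(module if 1 <= module <= num_modules else None)
        schedule.append(row)
    return schedule


schedule = pipeline_schedule(num_modules=3, num_segments=len(SEGMENTS))

# Print the first three clock cycles for the first three segments.
for cycle, row in enumerate(schedule[:3], start=1):
    occupied = {SEGMENTS[i]: m for i, m in enumerate(row[:3]) if m is not None}
    print(f"cycle {cycle}: {occupied}")

pipelined_cycles = len(pipeline_schedule(num_modules=100, num_segments=9))
sequential_cycles = 100 * 9  # each module passes through all 9 segments alone
```

Under these assumptions, 100 modules pass through the 9 segments in 108 clock cycles, against 900 cycles if each module had to leave the last segment before the next one could enter the first.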

TABLE 2

DATA MODULES IN SEGMENTATION

If we perform the ETL process in this way, then we will be able to save a lot of clock cycles and speed up the process. The following calculation shows the speeding up of the ETL process.

Speeding up ETL process

$$S = \frac{n\,t_n}{(k + n - 1)\,t_p}$$

Where,

$n$ = no. of tasks

$t_n$ = time to execute a task in the non-pipelined case

$k$ = no. of segments

$t_p$ = time to execute a task in the pipelined case (the time spent in one segment)

As the number of tasks increases, $n$ becomes much larger than $k - 1$, and $k + n - 1$ approaches the value of $n$. Under this condition, the speedup becomes

$$S = \frac{t_n}{t_p}$$

If we assume that the time taken to process a task is the same in the pipeline and non-pipeline circuits, we will have $t_n = k\,t_p$. With this assumption, the speedup reduces to

$$S = \frac{k\,t_p}{t_p} = k$$

We can demonstrate this with an example. Let the time taken to process a sub-operation in each segment be $t_p = 20$ ns. Assume that the pipeline has $k = 9$ segments and executes $n = 100$ tasks in sequence. The pipeline system will take $(k + n - 1)\,t_p = (9 + 99) \times 20 = 2160$ ns to complete the tasks. Assuming that $t_n = k\,t_p = 9 \times 20 = 180$ ns, a non-pipeline system requires $n\,k\,t_p = 100 \times 180 = 18000$ ns to complete 100 tasks. The speedup ratio is $18000 / 2160 \approx 8.33$. So the process is sped up by a factor of more than 8, and the speedup approaches its maximum of $k = 9$ as $n$ grows.

IX. CONCLUSION

Extraction, transformation and loading may involve a number of tasks and challenges, but in this paper we have shown that if the ETL process is assumed to have only 9 tasks (as shown in the previous section), then we can speed up the whole process to a great extent using pipelining. This model can be expanded to have many more segments for the ETL process. In future, such models for ETL can revolutionize information management in data warehouses and decision support systems by saving time and money for business organizations.

REFERENCES

[1] Li, B. and Shasha, D., "Free Parallel Data Mining", ACM SIGMOD Record, Vol.27, No.2, pp.541-543, New York, USA (1998).

[2] Anand, S. S., Bell, D. A. and Hughes, J. G., "EDM: A general framework for data mining based on evidence theory", Data and Knowledge Engineering, Vol.18, No.3, pp.189-223 (1996).

[3] Agrawal, R., Imielinski, T. and Swami, A., "Database Mining: A Performance Perspective", IEEE Transactions on Knowledge and Data Engineering, Vol.5, No.6, pp.914-925 (1993).

[4] Chen, S. Y. and Liu, X., "Data mining from 1994 to 2004: an application-oriented review", International Journal of Business Intelligence and Data Mining, Vol.1, No.1, pp.4-11 (2005).

[5] Singh, D. R. Y. and Chauhan, A. S., "Neural Networks In Data Mining", Journal of Theoretical and Applied Information Technology, Vol.5, No.6, pp.36-42 (2009).

[6] Roberts, C. M., "Radio frequency identification (RFID)", Computers & Security, Vol.25, pp.18-26 (2006).

[7] Aboalsamh, H. A., "A novel Boolean algebraic framework for association and pattern mining", WSEAS Transactions on Computers, Vol.7, No.8, pp.1352-1361 (2008).

[8] Sathiyamoorthi, V. and Bhaskaran, V. M., "Data Mining for Intelligent Enterprise Resource Planning System", International Journal of Recent Trends in Engineering, Vol.2, No.3, pp.1-5 (2009).

[9] Esposito, F., "A Comparative analysis of methods for pruning Decision trees", IEEE Transactions on Pattern Analysis and Machine Intelligence, Vol.19 (1997).

[10] http://en.wikipedia.org/wiki/Etl#Transform

[11] http://en.wikipedia.org/wiki/Data_profiling

[12] Blakeley, J. A., Coburn, N. and Larson, P.-A., "Updating derived relations: detecting irrelevant and autonomously computable updates", ACM Transactions on Database Systems, Vol.14, No.3, pp.369-400 (September 1989).

[13] Vassiliadis, P., Quix, C., Vassiliou, Y. and Jarke, M., "Data Warehouse Process Management", Information Systems, Vol.26, No.3, pp.205-236 (June 2001).

[14] Stöhr, T., Müller, R. and Rahm, E., "An Integrative and Uniform Model for Metadata Management in Data Warehousing Environments", in Proceedings of the International Workshop on Design and Management of Data Warehouses (DMDW'99), pp.12.1-12.16, Heidelberg, Germany (1999).

[15] Jarke, M., Lenzerini, M., Vassiliou, Y. and Vassiliadis, P. (eds.), Fundamentals of Data Warehouses, 2nd Edition, Springer-Verlag, Germany.