Use of Digital Ethnography to Measure Media Consumption

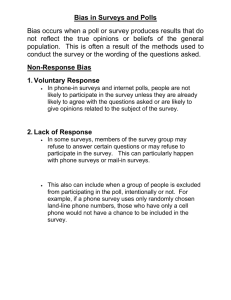

Use of Digital Ethnography to Measure Media Consumption and Consumer Behavior among South Africans during the 2010 World Cup

Michael W. Link & Justin T. Bailey
The Nielsen Company

Contact: Michael W. Link, The Nielsen Company, 3784 Ardsley Ct, Marietta, GA 30062, Michael.Link@Nielsen.com

Abstract

Mobile devices are facilitating new and innovative approaches to the collection of survey data. Borrowing heuristically the concept of “digital ethnography,” we test whether a smartphone designed to deliver short surveys at periodic intervals throughout the day can be used to capture key information on respondent behaviors and attitudes over time. Leveraging a study conducted in conjunction with the 2010 FIFA World Cup games, a national sample of 384 South African residents was recruited across four different cities and in four languages. Respondents were asked a short series of questions on their current activities, FIFA games viewership, mood, and recent consumer behavior. Respondents were also asked to take a picture of what they were focused on at the time of the survey and to record a brief caption. We examined survey completion rates, when and where respondents completed the surveys, and three additional indicators of survey compliance: number of concurrent activities recorded; respondent self-reported mood; and percentage of surveys with a picture and caption. Overall, the study showed high levels of compliance with only a modest decline in participation in the latter weeks but little variation in task compliance among those who did participate. The research demonstrates how Smartphones can be used to expand traditional survey methods, capturing both cross-time and “in-the-moment” data.
Key words: Smartphone, digital ethnography, mobile survey, Internet survey

1.0 Introduction

In its broadest sense, ethnography involves the study and interpretation of cultural diversity, providing a detailed description of a particular culture, society, or community. Often the techniques used -- such as direct, first-hand observations and other “participant observer” approaches -- are meant to help researchers understand in greater detail the thoughts, behaviors, and attitudes of the communities they are studying. The digital age has the potential to transform this type of inquiry. Leveraging the ubiquitous nature of mobile, video and Internet-based technologies, researchers can gain detailed insights into communities of interest without the need to directly embed themselves in the daily lives of their subjects.

“Digital ethnography” borrows heuristically from the goals of more traditional ethnographic approaches, but does so through the effective deployment of data collection technologies to facilitate “participant observation.” This new approach can be applied by such diverse groups of researchers as communications specialists focused on media platform usage and cross-linkages, sociologists interested in the networking structures of particular peoples, political scientists studying group identification and interactions within political organizations, and market researchers identifying emerging patterns of consumer behavior.

We provide an overview of one such approach, entitled “Life360”, which combines a variety of digital measurements, including repeated mobile phone surveys, visual data capture, and Internet-based questionnaires, to provide real-time, in-depth information about respondents.
Specifically, the approach is applied to the collection of data from 384 residents of South Africa during the 2010 FIFA World Cup games in June and July 2010. The analysis focuses on various aspects of respondent compliance, including participation rates over time and across various segments of the day, as well as potential longitudinal changes in data quality measures meant to capture the level of respondent engagement in the study. The research shows that with the right design, a cross-time (5-week) repeated daily measures approach to providing “in-the-moment” accounts of behaviors and attitudes is feasible using current smartphone technology.

2.0 Background

While the growth of mobile technology has exploded over the past decade, the use of these devices to collect information for research purposes is still in its nascent stage. Electronic measurement of behavior is not new: a host of custom-built devices have been developed to measure activities in areas such as health studies, quality of life research, and media consumption behaviors (Marceau et al., 2007; Palmblad and Tiplady, 2004; Caro et al., 2001; Peters et al., 2000). Early research in this area was conducted by clinical scientists working to find more reliable ways of measuring the intensity and frequency of patient pain and quality of life using portable electronic devices not very different from the advanced mobile phone. In these studies, when respondents were asked to gauge their current situation, researchers found few differences in responses when surveys were taken on electronic portable devices as compared to self-administered paper questionnaires (Marceau et al., 2007; Caro et al., 2001).

Many of these early studies programmed surveys to occur at regular intervals, which could lead to an anticipation bias on the part of the respondents. To counter this effect, Peters et al.
(2000) used an “experience sampling method” (Lee and Waite, 2005; Delespaul, 1995), which provided a randomized pattern for survey administration and demonstrated more accurate and unbiased measurement. Their research also indicated that electronic diaries may be more accurate than other survey modes that rely on patient memory, providing evidence for the reliability and validity of electronic diaries and continuous measurement throughout the data collection period.

The emergence of mobile devices -- with a host of integrated features including voice, photography, video, text, email, GPS, application download, and others -- has opened the door to a new generation of measurement tools. Standards or common “best practices,” however, are still in development when it comes to conducting research using mobile devices (Palmblad and Tiplady, 2004). In terms of more traditional survey administration over mobile phones, whereby text questions are sent (not to be confused with voice surveys conducted by an interviewer with a cell phone user), there is some early work which argues that the same heuristics that apply to questionnaire design for other survey modes (Tourangeau, Couper, and Conrad, 2004) can be applied to surveys on mobile devices (Peytchev and Hill, 2008). For example, Peytchev and Hill (2008) found that the frequency distribution provided in the response options influences respondent selections in surveys on mobile devices just as it does in other modes.

Time use studies are another area where mobile devices may have great application. Such studies are meant to measure how people choose to allot the 24 hours of each day and are used routinely in a variety of sectors, from economics (Joyce and Stewart, 1999) to sociology (Lee and Waite, 2005) to consumer spending (Belli, Smith, Andreski, et al., 2007).
While the intent of these studies is often similar (understanding how people choose to spend their time), the methods used to make these measurements can vary greatly. Early works involved application of paper-and-pencil techniques on various types of time grids, with respondents recording their daily activities concordant with their actions (Stinson, 1999). Other time-use surveys have used telephone methodologies (American Time Use Survey, 2007; Belli, Smith, Andreski, et al., 2007); however, these are purely retrospective accounts and are often less precise than data collected at the moment of activity.

Wary of these potential threats to reliability, Lee and Waite (2005) analyzed data from a survey designed to measure the amount of housework performed by husbands and wives. Again utilizing the experience sampling method, the spouses were asked to record a snapshot of their daily lives without having to account for each quarter hour of the day. Instead, they were instructed to record what they were doing when an alarm went off at randomly spaced intervals. Respondents were asked to report their primary activity (“What was the main thing you were doing?”) and then any other activities in which they were participating (“What else were you doing at that time?”). These two questions also helped to identify an unconscious or subconscious ordering of the activities without explicitly asking the respondent to rank them, thus reducing any ordering bias.

We build on these early lessons in the use of portable electronic devices, mobile survey technology, and time use studies by combining these methods with the more traditional goals of ethnographic research. As a result, the Life360 approach was developed to obtain a detailed understanding of different communities and sectors of the general public.
The various measurement techniques within the Life360 “toolbox” utilize digital mediums to capture a combination of detailed and “in-the-moment” measures of attitudes and behaviors, thereby offering greater insights into various population segments.

3.0 The Life360 Approach

Life360 uses an innovative approach to bring traditional ethnography into the digital age. The goal is simple: capture what is happening in people’s lives to depict lifestyles, behaviors, and personalities. The idea is to collect day-in-the-life activities and use them to inform broader questions of behaviors, preferences, and attitudes.

3.1 Data Collection Techniques

Life360, as currently administered, combines information from several different data collection techniques:

(1) Recurring mobile phone surveys: A central component of Life360 is the administration of brief, yet recurring surveys via a smartphone to capture “in-the-moment” information. While the specific questions can vary by study, the key variables often include current location (e.g., in living room, in transit, on the street, at work, in yoga class), activities in which the respondent is engaged (e.g., watching television, surfing the Internet, eating lunch, doing laundry, walking down the street), people with the respondent (e.g., spouse, children, friends, strangers, no one), and the respondent’s current mood (e.g., happy/depressed; energetic/tired; engaged/bored).

Surveys are initiated in one of two ways, prompted (via an alarm) or self-initiated, depending on the nature of the data being captured. With the timed alarm approach, respondents are notified of the need to take a survey by way of a timer and alarm system built into the smartphone. Surveys may be timed to recur at regular intervals (such as hourly) or at random intervals. The latter may be more effective at reducing any potential bias due to respondent anticipation of the timed survey.
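As a rough illustration of the random-interval option, the sketch below draws one alarm time at random inside each of several fixed windows of the day. It is not the scheduler used by the survey software, which this paper does not describe. The function name `draw_alarm_times`, the `WINDOWS` constant, and the window boundaries are assumptions made for illustration only.

```python
import random
from datetime import date, datetime, time, timedelta

# Hypothetical day windows as (start hour, end hour) on a 24-hour clock.
# These boundaries are placeholders chosen for illustration.
WINDOWS = [(6, 10), (10, 13), (13, 16), (16, 19), (19, 23)]


def draw_alarm_times(day, windows=WINDOWS, rng=None):
    """Return one randomly timed alarm per window for the given date.

    Each alarm is drawn uniformly over the whole minutes of its window,
    so a respondent who knows the windows still cannot predict the minute.
    """
    if rng is None:
        rng = random.Random()
    alarms = []
    for start_hour, end_hour in windows:
        window_start = datetime.combine(day, time(start_hour))
        window_minutes = (end_hour - start_hour) * 60
        # randrange excludes the upper bound, so the alarm never
        # lands on the first minute of the next window.
        offset = rng.randrange(window_minutes)
        alarms.append(window_start + timedelta(minutes=offset))
    return alarms


if __name__ == "__main__":
    for alarm in draw_alarm_times(date(2010, 6, 10), rng=random.Random(1)):
        print(alarm.strftime("%Y-%m-%d %H:%M"))
```

Drawing independently within each window keeps the number of surveys per day fixed while leaving the exact timing unpredictable. A regular-interval design would instead fix the offset in advance.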
The timed alarm approach is used when attempting to build a more complete picture of the range of activities in which an individual may participate. By capturing information at regular points, researchers gain a better perspective on how respondents move through the day, both behaviorally and attitudinally. Self-initiated surveys, in contrast, are used when attempting to capture information about a particular activity, such as mealtime, logging into a computer, or making a purchase. Respondents are instructed to initiate the survey (by pressing a key on the smartphone) each time they begin or end a particular activity, with the exact specifications varying based on the research focus and data needs. This approach allows the researcher to drill deeper into a particular activity, behavior, or attitude.

The mobile-based questionnaires tend to be relatively brief (2-5 minutes in length) and follow many of the same guidelines as other computer-assisted self-interviews. Questionnaires can be programmed with closed and open-ended questions (although it is advisable to keep the latter to a minimum in a mobile context), including logical skips and range verifications. Given that the screen is much smaller on a mobile device than on a personal computer or laptop, the questions need shorter wording and, at times, fewer response options. This reduces or avoids the scrolling required of the respondent to read and respond to the individual questions. Once the surveys are completed they are automatically uploaded via wireless communication to a central database and made available for immediate analysis.

(2) Visual data capture: Moving beyond capture of traditional survey data, Life360 also incorporates the collection of images and video as a means of adding greater context to the other data collected. Each of the smartphones is equipped with a camera, now standard on most mobile devices.
After the completion of each survey, respondents are requested to take a picture of what they were focusing on at the time they initiated the survey and to add a brief caption describing the picture. Respondents, therefore, choose what to capture visually, providing researchers with additional insight into the respondent’s focus of attention and current activities at the time the survey was completed.

Visual data capture is also one of the more popular aspects of Life360 among respondents. Those responding to a debrief interview after having completed a Life360 project overwhelmingly praised the collection of visual images as a way for them to become more involved in the process (as they controlled what was recorded within general guidelines). Further, the ability to caption these photos enabled users to “tell a story” about what they were focused on during each survey.

Like the survey results, the visuals are uploaded to a computer and then sent to a central data server. The images are then coded and analyzed and/or used as examples to illustrate findings from the survey component. The coding process is currently conducted manually using a coding scheme based on the needs of the project. This may include coding for place, activity, surroundings, products within visual range, etc. This process is expected to be refined in the future with the development of more sophisticated automated coding techniques. Coding of digital video is accomplished with a similar manual approach but is much more labor intensive.

(3) Pre- and post-field period surveys: In addition to mobile-enabled data collection, Life360 also makes extensive use of pre- and post-field period surveys. Respondents are typically asked to complete a pre-survey at the outset of data collection and a post-survey after the fielding period is completed.
These surveys follow standard web survey designs with secure logins and encrypted data transfer (Dillman, Smyth, and Christian, 2008; Couper and Miller, 2009). The pre-surveys collect basic demographic information (e.g., age, sex, number of adults and children in the home) as well as some baseline behavioral and attitudinal data, which vary by project (e.g., hobbies, shopping patterns, device ownership, media usage habits). The post-surveys tend to go into more detail on behaviors and attitudes, and can also address potential changes in behavior since the start of the project. Like other web-based surveys, these data are captured on a central server for quantitative analysis.

Life360 collects data which are both “naturalistic” and “structured”. Much like a focus group, the approach aims to capture information such as emotions, contradictions in thoughts and behaviors, and tensions for the purpose of producing unique insights. Like many qualitative approaches, Life360 takes advantage of spontaneous, unexpected elements. For instance, participants often provide insights in ways that researchers may not have anticipated at the outset of the project. This enables the researcher not just to learn or confirm the facts (as in survey methods), but also to place the “facts” in a broader context. Survey research methods, on the other hand, traditionally seek generalizations enabling researchers to make predictions about the occurrence of phenomena on a large scale. Life360 contains many of the same structured elements of traditional approaches through the mobile- and Internet-enabled surveys. The ability to generalize from these data to a broader population is more a function of the sampling and recruitment approaches employed for a specific project than of the data collection techniques themselves.

3.2 2010 FIFA World Cup Study

In June and July 2010, Life360 was used to capture media usage and consumer attitudes and behaviors among a sample of South African residents as their country hosted the 2010 FIFA World Cup games. A panel of nearly 400 South Africans was recruited across the four largest cities in South Africa: Johannesburg, Pretoria, Durban and Cape Town. All four cities were host to several of the World Cup matches played during the field period. Recruitment was a two-phase effort, with telephone outreach to a quota sample of potential respondents that fit defined age and employment targets, followed by face-to-face orientation sessions to familiarize respondents with the smartphone devices and study guides.

Each respondent was equipped with their own BlackBerry Curve internet-enabled device running a Techneos SODA® survey application. With the exception of the camera and actual phoning capability, all of the other features of the phone were hidden and locked from respondent use in order to minimize potential changes in respondent behavior, particularly with respect to media use. Most of the respondents had never used a smartphone, and some of the features could have facilitated access to media to which the respondent would not otherwise have had access. Survey phones were programmed in one of four languages based on respondent preference: Zulu, Sotho, Afrikaans, or English. An initial set of cell phone minutes was provided as an upfront/on-going incentive for participation. As an additional contingent incentive, panelists were told they would be able to keep the BlackBerry as a gift if they completed all five weeks of the study ($85 value in US Dollars).

The self-administered mobile survey was delivered five times a day for the 33-day fielding period during the World Cup.
Within each of five day-parts (6am-10am; 10am-1pm; 1pm-4pm; 4pm-7pm; 7pm-11pm) a reminder alarm would sound, prompting respondents to launch the application and complete the survey. Although the five day-parts were known, the alarm and reminders were timed at random intervals within each period to deter panelists from anticipating the survey and potentially changing their behaviors accordingly.

One of the core advantages of using Life360 methodology to measure attitudes and behaviors associated with the World Cup was the ability to measure respondent engagement “in the moment,” whether at home or on the go. The survey collected in-the-moment information pertaining to respondents’ activities (where they were, what they were doing, and with whom) as well as questions about their current mood. Recall data were also collected to capture retrospective media consumption that occurred between the timed surveys. The goal was to administer all questions within a 3-5 minute survey, including additional probes if respondents indicated they were doing anything related to media usage (TV, radio, mobile or online). If respondents were engaged with the tournament, they could answer whether they were watching news, highlights or an actual game (including names of the competing teams). The last question of each survey asked the respondent to take a picture of what they were currently focused on and to provide a caption to describe the photo. Lastly, for the final survey of each day a rotating series of questions was asked about brand awareness, food and beverage expenditures, or which team they were supporting. These questions rotated on a daily basis.

In the analyses presented here we focus primarily on issues related to respondent compliance. Given that surveys were requested five times per day across a 33-day period, there were obvious concerns about potential declining participation and engagement with the process.
This could manifest itself in a number of ways over time, such as increasing survey nonresponse, declining item completeness, decreasing compliance with specific tasks (such as taking a photo and providing a caption), and finding other ways to short-cut the process. To assess respondent participation, compliance, and data quality, we examined six key measures:

(1) Daily survey completion rate;
(2) Survey completion rate across the five daily time periods;
(3) Percentage of surveys conducted inside and outside the respondent’s home (location);
(4) Average number of concurrent activities reported when the survey alarm rang;
(5) Average mood (emotion) rating, based on a 5-point scale (1 = bored; 5 = excited); and
(6) Percentage of surveys that were accompanied by a photo and descriptive caption.

These metrics are examined for changes and variations both across time and across different demographic groups (sex, age, employment status, and respondent language).

4.0 Findings

Data were collected from 384 respondents, all of whom were residents of South Africa.[1] The fielding period was June 10 – July 12, 2010. The fielding began two days prior to the start of the World Cup in order to give respondents time to learn how to use the smartphone. Earlier pilot testing revealed that since many South Africans had never used a smartphone, several “practice days” were required prior to the start of the actual event.

[1] There were initially 413 respondents; however, 29 cases were dropped due to a system error three-fifths of the way through the field process. On July 1, the cellular carrier dropped service for these 29 respondents for unknown reasons. Respondents who lost service could not immediately transmit data. Study staff had to contact these individuals to manually reconfigure the phones to facilitate re-transmission. Unfortunately, not all respondents could be contacted in a timely manner (and some not at all during the remainder of the field period).
Further complications prevented systematic retrieval of all data saved locally on the phones during the period of transmission blackout. This resulted in missing data for some surveys for these respondents. As a result, the data for these cases were excluded from the analysis presented here. The episode highlights some of the potential problems and unanticipated complexities of dealing with mobile measurement systems.

4.1 Survey Completion Rate

Over the 33-day period, respondents were asked to complete 165 surveys (5 per day across different parts of the day), resulting in 63,360 potential surveys across all 384 respondents. The average respondent-level completion rate (number of fully completed interviews / number of surveys offered) was 78.7%. The respondent-level average is used here (and with subsequent measures reported below) as we believe it is a more accurate measure of respondent participation than the total number of surveys completed across all respondents. The latter measure can often mask important variation across respondents.

The average completion rate varied significantly over time (see Figure 1). On average, respondents completed 77.7% of surveys during week 1, when they were first learning to use the device (see Table 1). The average completion percentage rose into the low 80s for week 2 (82.7%) and week 3 (82.6%). During the final two weeks, the rate dropped to the mid-70s (week 4: 75.2%; week 5: 74.2%). Over the 5-week period we see a pattern of initial learning (week 1), followed by more intensive usage (weeks 2 and 3), followed by a slight drop-off in participation during the latter weeks.

Looking at participation rates across demographic groups (Table 1), there were no significant differences in participation levels in any of the weeks based on sex, work status, or language.
The only significant difference noted for age was in week 2, when usage spiked among those aged 16 to 24 years (87.0% versus 79.0% for the next highest group, those aged 25 to 34 years).

There were, however, significant changes in participation across time for all but one subgroup. Only those aged 50 or older showed no significant change in completion rates (ranging from a high of 80.3% in week 3 to a low of 72.4% in week 1). Other age groups showed an interesting pattern. Those aged 16 to 24 years showed the sharpest decline in participation of any subgroup examined, going from a high of 87.0% participation in week 2 to 69.2% in week 5 (a 17.8 percentage point decline). Those aged 25 to 34 years declined by 11.8 percentage points between weeks 2 and 4, with a slight uptick in participation in the final week. For the two older groups (35 to 49; 50 and older), however, the lowest participation rates were during week 1 while the highest were in one of the two subsequent weeks, with this shift being statistically significant only for the 35 to 49 year old group. The pattern, therefore, is one of more learning during the first week for the older groups, and less of a “learning curve” but more cross-time drop-out for the younger groups. Males, those working full-time, and those who speak Zulu or English also had completion rate declines of 10 percentage points or more over the 5-week period.

Finally, looking at completion rates across the different times of the day, we find remarkable stability (Table 2). There were no significant differences noted across demographic groups or within subgroups over time. Nonresponse, therefore, appears to be driven primarily by whether the respondent chose to continue participating in the study, and not by respondents participating only at selected times of the day.
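The respondent-level completion rate used in this section can be sketched as follows. The design constants (5 surveys per day, 33 days, 384 respondents) come from the study. The two small panels of completed-survey counts are invented purely for illustration, and the function names are hypothetical. When every respondent is offered the same number of surveys, the mean of the respondent-level rates equals the pooled rate, so the practical gain from working at the respondent level is that the spread across respondents stays visible.

```python
from statistics import mean, pstdev

SURVEYS_PER_DAY = 5      # from the study design
FIELD_DAYS = 33          # from the study design
PANEL_SIZE = 384         # respondents retained for analysis
OFFERED = SURVEYS_PER_DAY * FIELD_DAYS       # 165 surveys per respondent
TOTAL_OFFERED = OFFERED * PANEL_SIZE         # 63,360 potential surveys


def respondent_rates(completed_counts, offered=OFFERED):
    """Completion rate for each respondent: completed / offered."""
    return [count / offered for count in completed_counts]


def summarize(completed_counts, offered=OFFERED):
    """Summarize participation at the respondent level."""
    rates = respondent_rates(completed_counts, offered)
    return {
        "total_completed": sum(completed_counts),
        "mean_rate": mean(rates),
        "sd_rate": pstdev(rates),
        "min_rate": min(rates),
        "max_rate": max(rates),
    }


# Invented example data: two four-person panels with identical totals.
even_panel = [132, 132, 132, 132]    # everyone completes 80%
uneven_panel = [165, 165, 165, 33]   # three complete 100%, one 20%

if __name__ == "__main__":
    print(OFFERED, TOTAL_OFFERED)
    print(summarize(even_panel))
    print(summarize(uneven_panel))
```

Both invented panels have the same total and the same mean rate, yet the second hides one respondent who largely stopped participating. Only the per-respondent rates reveal this.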

4.2 Location Where Survey Completed

One of the critical elements of Life360 is the ability to capture respondents’ attitudes and behaviors “in-the-moment,” no matter where they are at the time of the survey. For the World Cup study, 58.9% of the surveys were completed in the respondent’s own home, while 41.1% were completed outside of the home. As shown in Figure 2, 10.3% were completed while visiting someone else’s home; 12.5% were completed at work; 7.1% while the respondent was in transit; and 11.2% while in some other location, such as a bar, restaurant, store, school, Internet café or the like.

As shown in Table 3, surveys were consistently more likely to be completed in the home by women and by those not currently working full or part-time. For three of the five weeks, those aged 50 or older were also more likely to complete the surveys at home rather than outside of the home. In terms of cross-time changes, only those working full-time showed significant variation over time in the percentage of surveys completed at home (Table 3). Among this group, a higher percentage of surveys were completed in the home during the first and last weeks of the study, while a significantly lower percentage were completed in the home during weeks 3 and 4.

4.3 Recording Activities at the Time of the Survey

To gain a better understanding of whether or not respondents were “learning” to navigate the shortest path through the survey (a sign of respondent fatigue and potential measurement error), we examined responses provided to the question: “What were you doing before starting the survey?” This is the second question asked on every survey, and respondents could choose all that apply from the following list of potential activities: (1) attending a sporting event in person, (2) using a computer (laptop or desktop), (3) watching television, (4) listening to the radio, (5) using a cell phone, (6) shopping, (7) travel or commuting, (8) eating (meal, snack, drink), (9) working (full-time, part-time, housework), (10) watching a DVD, (11) listening to a CD, (12) playing a video game, (13) reading a newspaper or magazine, or (14) some other activity. Most of these activities had specific follow-up questions to obtain more detail about the activity. A respondent wanting to minimize the number of questions they were asked could, therefore, limit the number of activities chosen and hence reduce the survey length.

To determine if such behavior was being exhibited, we looked at the average number of activities reported by respondents over time. The weekly average across respondents was 1.63 activities over the five-week period. This number was very stable across all weeks of the field period, with no significant variation noted over time overall (Table 4). Moreover, there was little significant variation across time within the various demographic groups examined. This would seem to indicate that respondents did not alter their reporting behavior when it came to concurrent activities from the start to the conclusion of the field period.

4.4 Respondent Mood

Another potential indicator of respondent engagement (or disengagement) in the survey process is their self-reported mood at the time of the survey.
Halfway through each survey respondents were asked to indicate on a 5-point scale the number that most accurately described their current mood. The scale had two descriptive anchors: 1 = bored, 5 = excited. If respondents were growing weary of completing surveys over the five-week period, one would expect a higher percentage of “bored” responses or a shift in the average rating toward the lower end over time.

Over the five-week period the weekly average rating for respondent mood was 3.45 (slightly to the “excited” side of the scale midpoint). There was a slight change over time across all respondents, with week 1 showing a marginally higher average rating when compared to other weeks. The overall trend was fairly flat with a slight uptick in the final week, indicating that respondents did not appear to change in mood appreciably after the second week of the study.

There were also significant differences noted across subgroups. In terms of age, older respondents consistently had higher mood ratings than did younger respondents. This was true of all five weeks of the study. Likewise, those who spoke Afrikaans had consistently higher mood ratings across the field period compared to those who spoke Zulu, Sotho or English. Significant variation across time was also noted for all subgroups examined, with the exception of those aged 50 or older and respondents who spoke Zulu. The pattern for the other groups was quite similar, with the highest mood rating occurring during week 1 and then lower but fluctuating ratings occurring during the other four weeks (yet with little evidence of a trend).

4.5 Taking Photos and Recording Captions

As a final check on respondent compliance, we examined the percentage of completed interviews accompanied by a photograph and a brief caption recorded by the respondent.
The capture of visual data is another key aspect of Life360, not only as another source of data about respondents’ daily activities and preferences, but also because of the impact photos can have in bringing survey data and findings to life. The simple act of photography provides researchers a deeper glimpse into the respondent’s world. To measure compliance with this task, we looked at the percentage of completed interviews that had both a photograph and an accompanying caption.

On average 92.0% of all completed interviews were accompanied by a photograph, while 66.4% of all completes had both a photograph and a caption. Women were more likely than men across each of the five weeks to both take a picture and insert a caption. Like some of the other measures already discussed, the percentage of photos with captions remained remarkably stable over time, both overall and within the subgroups examined (see Table 6). Again, this is an indication that respondents were largely compliant with this specific task during their tenure in the World Cup panel.

5.0 Discussion

The 2010 World Cup study provides some critical insights into how researchers can utilize current technologies (in this case a BlackBerry smartphone) to expand the types of studies that can be conducted. Given the length of the panel and that surveys were required at multiple points during the day (a total of 165 potential surveys per respondent over the five-week period), the study achieved a remarkable rate of completion, with respondents completing, on average, more than three-quarters of the surveys. While technical issues resulted in a decline in participation during the latter two weeks of the study for some of the affected respondents, most of the other respondent engagement measures showed remarkable stability over time, both overall and within the demographic groups examined.
This included measures such as the proportion of completed interviews within the five daily time blocks; the number of activities reported just prior to completing the survey; the average mood rating on a 5-point scale; and taking photos and adding captions. Where there were differences in compliance, they tended to be across demographic groups (as opposed to cross-time changes within specific subgroups) and they tended to be minimal. The greatest variation was seen in the proportion of surveys completed in-home versus out-of-home, with women, adults aged 50 and older, and those not currently working full or part time being more likely to complete their surveys in their own home. Considering the lifestyles of these various subgroups in South Africa, this is not a surprising finding. Even among these groups, however, more than 30% of surveys were completed out of the home, an indicator that this survey process works well across different demographics.

The study presented here has several potential limitations which should be noted. First, it was conducted in one country (South Africa) and during a time of heightened national awareness and unity, with the country hosting the World Cup matches. These factors may limit the applicability of findings to other geographic areas and cultural conditions. Second, the sample was drawn using a quota (non-probability) methodology. While this approach ensured the proper proportion of respondents in terms of sex, age, work status and language, the fact that these respondents were not chosen via a random selection process could affect the generalizability of the findings as well.

There are several directions for future work. First is the inclusion of new data collection techniques in the Life360 “tool box.” These include the use of geo-positioning systems (GPS) and additional software applications. Many smartphones already contain GPS applications.
Use of this type of software (with full respondent knowledge and permission) would allow more precise identification of the locations frequented by respondents. This would allow for more refined analyses of travel patterns, exposure to various forms of messaging and advertising, and tie-ins to other community location databases (such as stores, service providers, churches, community organizations, etc.). Smartphones also provide a versatile platform for a variety of software (and some hardware) applications which could be used to enhance the data collection processes, such as trip-logs, social networking portals, voice recording, or barcode readers to track purchases.

Second, additional testing is required with recruitment of panels using probability-based sample approaches. This would pose more of a challenge in recruitment and panel retention, but would allow results from Life360 to be more generalizable to a larger population.

Third is the use of longer-term panels. To date, most Life360 panels have been of 7 to 10 days' duration. The World Cup study shows, however, that it is feasible to field longer-term panels. Future panels of even longer duration, but which are perhaps not queried as often, could provide data on longer-range patterns and changes in attitudes and behaviors over time.

The promise of the digital age for researchers lies in how we can best harness these new technologies to unlock insights into various avenues of inquiry. Life360, which utilizes periodic surveys via smartphones combined with other survey and non-survey data, offers one potential option. The approach allows researchers to capture rich, detailed data in a manner heretofore unattainable through traditional focus group or survey approaches. The researcher as “participant observer” no longer needs to be embedded physically with a community to obtain such insights, but rather can capture this richness through the effective use of today’s technologies.
And as those technologies continue to evolve, Life360 will keep pace, leveraging new hardware and applications to meet changing data needs.

Acknowledgements: The authors would like to thank Karen Benezra, Hala Makowska, E. Nicole Bensky, Lorelle Vanno, Jennie Lai, Jennie Pearson, and Nicole Bensky for their input on the data collection design, background research and early drafts of this manuscript. The findings and conclusions in this article are those of the authors, and do not necessarily represent the views of The Nielsen Company.

Table 1. Survey Completion Rate by Demographic Characteristics and Week

Completion Rates

| Demographic Characteristics | n | Overall | Week 1 | Week 2 | Week 3 | Week 4 | Week 5 | Cross Week (Sig.)¹ |
|---|---|---|---|---|---|---|---|---|
| Total | 384 | 78.7 | 77.7 | 82.7 | 82.6 | 75.2 | 74.2 | (<.001) |
| Sex | | | | | | | | |
| Male | 190 | 77.4 | 76.3 | 82.6 | 81.4 | 72.8 | 72.3 | (<.001) |
| Female | 194 | 80.1 | 79.1 | 82.8 | 83.8 | 77.4 | 76.1 | (<.01) |
| Between Grp (Sig.) | | (.197) | (.347) | (.945) | (.298) | (.122) | (.225) | |
| Age | | | | | | | | |
| 16 – 24 | 78 | 80.5 | 87.0 | 86.1 | 82.6 | 74.2 | 69.2 | (<.001) |
| 25 – 34 | 93 | 77.5 | 79.0 | 82.2 | 82.2 | 70.4 | 72.2 | (<.001) |
| 35 – 49 | 137 | 79.7 | 74.4 | 82.4 | 84.5 | 78.9 | 77.4 | (<.01) |
| 50+ | 76 | 76.8 | 72.4 | 80.3 | 79.7 | 75.2 | 76.2 | (.151) |
| Between Grp (Sig.) | | (.614) | (.006) | (.498) | (.564) | (.182) | (.243) | |
| Work Status | | | | | | | | |
| Full-time | 188 | 77.1 | 75.6 | 82.4 | 80.9 | 73.1 | 72.1 | (<.001) |
| Part-time | 52 | 80.1 | 78.7 | 82.9 | 81.3 | 75.4 | 82.7 | (<.05) |
| Not Working | 144 | 80.4 | 80.1 | 83.0 | 85.3 | 77.8 | 74.0 | (<.01) |
| Between Grp (Sig.) | | (.314) | (.367) | (.977) | (.219) | (.346) | (.089) | |
| Language | | | | | | | | |
| Zulu | 72 | 79.7 | 80.5 | 83.7 | 82.5 | 77.7 | 71.8 | (<.01) |
| Sotho | 117 | 78.2 | 79.2 | 80.8 | 81.7 | 74.2 | 73.8 | (<.01) |
| Afrikaans | 121 | 80.0 | 74.6 | 84.2 | 83.7 | 78.9 | 78.0 | (<.01) |
| English | 74 | 76.6 | 77.8 | 82.1 | 82.4 | 68.1 | 71.1 | (<.01) |
| Between Grp (Sig.) | | (.689) | (.508) | (.709) | (.933) | (.070) | (.400) | |

Note: Completion Rate = Number of Surveys with Complete Data / Number of Surveys in Time Period
¹ Significance based on comparison of highest and lowest values across the five weeks. This indicates level of significance in variation over time, not necessarily significant trending.

Table 2. Survey Completion Rate by Demographic Characteristics and Time of Day

Completion Rates

| Demographic Characteristics | n | 6am – 10am | 10am – 1pm | 1pm – 4pm | 4pm – 7pm | 7pm – 11pm | Cross Time (Sig.)¹ |
|---|---|---|---|---|---|---|---|
| Total | 384 | 77.6 | 78.3 | 78.8 | 79.5 | 79.5 | (.255) |
| Sex | | | | | | | |
| Male | 190 | 76.0 | 77.5 | 77.6 | 77.5 | 78.3 | (.442) |
| Female | 194 | 79.3 | 79.1 | 79.9 | 81.5 | 80.7 | (.290) |
| Between Grp (Sig.) | | (.131) | (.453) | (.290) | (.065) | (.322) | |
| Age | | | | | | | |
| 16 – 24 | 78 | 79.3 | 80.3 | 78.9 | 80.1 | 83.8 | (.218) |
| 25 – 34 | 93 | 76.1 | 76.7 | 78.2 | 78.2 | 78.3 | (.461) |
| 35 – 49 | 137 | 78.3 | 79.0 | 80.1 | 81.1 | 79.8 | (.563) |
| 50+ | 76 | 76.6 | 76.8 | 76.9 | 77.7 | 76.0 | (.654) |
| Between Grp (Sig.) | | (.750) | (.628) | (.741) | (.630) | (.229) | |
| Work Status | | | | | | | |
| Full-time | 188 | 76.1 | 76.5 | 77.3 | 78.1 | 77.5 | (.454) |
| Part-time | 52 | 77.6 | 80.0 | 80.3 | 79.0 | 83.4 | (.179) |
| Not Working | 144 | 79.6 | 80.0 | 80.1 | 81.5 | 80.7 | (.161) |
| Between Grp (Sig.) | | (.354) | (.273) | (.398) | (.324) | (.218) | |
| Language | | | | | | | |
| Zulu | 72 | 80.7 | 79.9 | 79.6 | 79.2 | 79.1 | (.355) |
| Sotho | 117 | 76.9 | 77.9 | 78.1 | 78.3 | 79.8 | (.329) |
| Afrikaans | 121 | 78.4 | 79.1 | 79.6 | 81.3 | 81.5 | (.282) |
| English | 74 | 74.6 | 76.0 | 77.6 | 78.8 | 76.0 | (.335) |
| Between Grp (Sig.) | | (.368) | (.665) | (.875) | (.717) | (.496) | |

Note: Completion Rate = Number of Surveys with Complete Data / Number of Surveys in Time Period
¹ Significance based on comparison of highest and lowest values across the five weeks. This indicates level of significance in variation over time, not necessarily significant trending.

Table 3. Percentage of Survey Completions in Home by Demographic Characteristics by Week

Percentage of Interviews Completed in Home

| Demographic Characteristics | Week 1 | Week 2 | Week 3 | Week 4 | Week 5 | Cross Time¹ (Sig.) |
|---|---|---|---|---|---|---|
| Total | 59.5 | 59.8 | 58.4 | 57.7 | 59.9 | (.114) |
| Sex | | | | | | |
| Male | 53.8 | 52.7 | 53.0 | 52.3 | 55.1 | (.407) |
| Female | 65.2 | 66.8 | 63.7 | 62.9 | 64.4 | (<.05) |
| (Sig.) | (<.001) | (<.001) | (<.001) | (<.001) | (<.001) | |
| Age | | | | | | |
| 16 – 24 | 58.8 | 60.8 | 60.3 | 58.4 | 59.2 | (.479) |
| 25 – 34 | 56.0 | 56.5 | 51.6 | 53.3 | 57.7 | (.084) |
| 35 – 49 | 60.3 | 57.8 | 58.2 | 57.3 | 59.9 | (.229) |
| 50+ | 63.4 | 66.6 | 65.2 | 63.1 | 63.0 | (.275) |
| (Sig.) | (.200) | (.014) | (<.001) | (.052) | (.602) | |
| Work Status | | | | | | |
| Full-time | 55.8 | 52.4 | 50.5 | 50.3 | 56.0 | (<.01) |
| Part-time | 53.9 | 59.0 | 59.7 | 58.7 | 56.0 | (.268) |
| Not Working | 66.5 | 69.7 | 68.6 | 67.2 | 66.6 | (.269) |
| (Sig.) | (<.001) | (<.001) | (<.001) | (<.001) | (<.001) | |
| Language | | | | | | |
| Zulu | 61.0 | 60.4 | 57.8 | 57.5 | 64.7 | (.099) |
| Sotho | 61.2 | 60.4 | 57.3 | 55.9 | 58.2 | (.121) |
| Afrikaans | 58.9 | 57.9 | 60.2 | 58.5 | 59.0 | (.491) |
| English | 56.5 | 61.6 | 58.0 | 59.2 | 59.3 | (.191) |
| (Sig.) | (.505) | (.688) | (.760) | (.754) | (.354) | |
| [n] | [361] | [372] | [375] | [369] | [353] | |

¹ Significance based on comparison of highest and lowest values across the five weeks. This indicates level of significance in variation over time, not necessarily significant trending.

Table 4. Average Number of Activities Reported at Start of Interview by Demographic Characteristics by Week

Average Number Reported Activities

| Demographic Characteristics | Week 1 | Week 2 | Week 3 | Week 4 | Week 5 | Cross Time¹ (Sig.) |
|---|---|---|---|---|---|---|
| Total | 1.60 | 1.63 | 1.65 | 1.64 | 1.61 | (.374) |
| Sex | | | | | | |
| Male | 1.58 | 1.60 | 1.64 | 1.63 | 1.64 | (.145) |
| Female | 1.63 | 1.66 | 1.65 | 1.66 | 1.59 | (.219) |
| (Sig.) | (.527) | (.480) | (.981) | (.775) | (.637) | |
| Age | | | | | | |
| 16 – 24 | 1.54 | 1.54 | 1.53 | 1.57 | 1.55 | (.247) |
| 25 – 34 | 1.68 | 1.67 | 1.65 | 1.68 | 1.62 | (.179) |
| 35 – 49 | 1.60 | 1.63 | 1.66 | 1.63 | 1.60 | (.215) |
| 50+ | 1.58 | 1.68 | 1.73 | 1.71 | 1.69 | (.237) |
| (Sig.) | (.657) | (.643) | (.499) | (.773) | (.826) | |
| Work Status | | | | | | |
| Full-time | 1.55 | 1.54 | 1.54 | 1.57 | 1.55 | (.226) |
| Part-time | 1.68 | 1.73 | 1.75 | 1.74 | 1.76 | (.247) |
| Not Working | 1.64 | 1.71 | 1.74 | 1.70 | 1.65 | (.230) |
| (Sig.) | (.393) | (.076) | (.057) | (.261) | (.251) | |
| Language | | | | | | |
| Zulu | 1.65 | 1.62 | 1.59 | 1.63 | 1.63 | (.262) |
| Sotho | 1.51 | 1.57 | 1.61 | 1.58 | 1.52 | (.283) |
| Afrikaans | 1.69 | 1.70 | 1.75 | 1.78 | 1.74 | (.197) |
| English | 1.58 | 1.62 | 1.58 | 1.58 | 1.52 | (.273) |
| (Sig.) | (.249) | (.574) | (.377) | (.438) | (.196) | |
| [n] | [361] | [372] | [375] | [369] | [353] | |

¹ Significance based on comparison of highest and lowest values across the five weeks. This indicates level of significance in variation over time, not necessarily significant trending.

Table 5. Average Mood Rating by Demographic Characteristics by Week

Average Mood Rating

| Demographic Characteristics | Week 1 | Week 2 | Week 3 | Week 4 | Week 5 | Cross Time¹ (Sig.) |
|---|---|---|---|---|---|---|
| Total | 3.65 | 3.38 | 3.38 | 3.39 | 3.43 | (<.001) |
| Sex | | | | | | |
| Male | 3.69 | 3.43 | 3.40 | 3.43 | 3.47 | (<.01) |
| Female | 3.61 | 3.33 | 3.35 | 3.36 | 3.38 | (<.01) |
| (Sig.) | (.394) | (.359) | (.678) | (.517) | (.452) | |
| Age | | | | | | |
| 16 – 24 | 3.22 | 2.86 | 2.88 | 3.02 | 3.10 | (<.01) |
| 25 – 34 | 3.61 | 3.25 | 3.30 | 3.20 | 3.25 | (<.01) |
| 35 – 49 | 3.83 | 3.59 | 3.56 | 3.56 | 3.57 | (<.05) |
| 50+ | 3.83 | 3.69 | 3.65 | 3.70 | 3.73 | (.108) |
| (Sig.) | (<.001) | (<.001) | (<.001) | (<.001) | (<.001) | |
| Work Status | | | | | | |
| Full-time | 3.58 | 3.31 | 3.26 | 3.19 | 3.26 | (<.001) |
| Part-time | 3.80 | 3.40 | 3.46 | 3.63 | 3.61 | (<.05) |
| Not Working | 3.68 | 3.46 | 3.49 | 3.57 | 3.58 | (<.05) |
| (Sig.) | (.263) | (.416) | (.128) | (<.001) | (.021) | |
| Language | | | | | | |
| Zulu | 3.45 | 3.34 | 3.37 | 3.39 | 3.41 | (.224) |
| Sotho | 3.46 | 3.20 | 3.08 | 3.05 | 3.14 | (<.001) |
| Afrikaans | 3.92 | 3.62 | 3.67 | 3.68 | 3.71 | (<.01) |
| English | 3.72 | 3.28 | 3.36 | 3.44 | 3.43 | (<.01) |
| (Sig.) | (<.001) | (.011) | (<.001) | (<.001) | (.002) | |
| [n] | [361] | [372] | [375] | [369] | [353] | |

¹ Significance based on comparison of highest and lowest values across the five weeks. This indicates level of significance in variation over time, not necessarily significant trending.

Table 6. Average Percentage of Pictures with Captions by Demographic Characteristics by Week

Percentage of Pictures with Captions

| Demographic Characteristics | Week 1 | Week 2 | Week 3 | Week 4 | Week 5 | Cross Time¹ (Sig.) |
|---|---|---|---|---|---|---|
| Total | 67.8 | 66.5 | 68.0 | 65.3 | 64.5 | (.115) |
| Sex | | | | | | |
| Male | 63.6 | 62.0 | 63.8 | 60.1 | 59.2 | (.150) |
| Female | 71.9 | 70.9 | 72.5 | 70.3 | 69.5 | (.215) |
| (Sig.) | (<.05) | (<.05) | (<.05) | (<.05) | (<.05) | |
| Age | | | | | | |
| 16 – 24 | 71.4 | 65.8 | 71.1 | 66.1 | 62.6 | (.077) |
| 25 – 34 | 70.0 | 68.6 | 71.3 | 66.9 | 66.7 | (.216) |
| 35 – 49 | 67.8 | 68.4 | 68.2 | 66.4 | 65.3 | (.281) |
| 50+ | 60.9 | 61.2 | 59.9 | 60.1 | 62.5 | (.356) |
| (Sig.) | (.351) | (.572) | (.235) | (.684) | (.885) | |
| Work Status | | | | | | |
| Full-time | 67.0 | 64.0 | 67.1 | 60.9 | 61.1 | (.073) |
| Part-time | 65.4 | 68.2 | 66.8 | 65.9 | 65.0 | (.342) |
| Not Working | 69.7 | 69.1 | 70.7 | 70.9 | 68.8 | (.341) |
| (Sig.) | (.735) | (.464) | (.818) | (.082) | (.252) | |
| Language | | | | | | |
| Zulu | 68.8 | 68.9 | 69.5 | 64.4 | 62.1 | (.147) |
| Sotho | 62.8 | 58.6 | 62.2 | 58.6 | 57.7 | (.201) |
| Afrikaans | 71.3 | 67.9 | 72.2 | 68.2 | 67.9 | (.196) |
| English | 69.5 | 72.9 | 69.2 | 71.7 | 72.1 | (.354) |
| (Sig.) | (.376) | (.109) | (.255) | (.128) | (.084) | |
| [n] | [361] | [372] | [375] | [369] | [353] | |

¹ Significance based on comparison of highest and lowest values across the five weeks. This indicates level of significance in variation over time, not necessarily significant trending.

Figure 1. Percentage of Completed Interviews by Day (y-axis: % Completed Interviews, 0 to 100; x-axis: Day of Study, 1 to 33)

Figure 2. Percentage of Completed Interviews by Location: Own Home 58.9%; Work 12.5%; Other Location 11.2%; Other’s Home 10.3%; In Transit 7.1%
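The completion-rate definition given in the notes to Tables 1 and 2 (surveys with complete data divided by surveys fielded in the period) can be illustrated with a minimal Python sketch. This is not the authors' analysis code. The record layout (one `(week, was_completed)` pair per fielded survey) and the function names are hypothetical, and the constants assume that the 165 potential surveys correspond to the five daily time blocks over the 33 study days shown in Figure 1.

```python
from collections import defaultdict

# Assumption: five daily time blocks over 33 study days gives the
# 165 potential surveys per respondent mentioned in the Discussion.
SURVEYS_PER_DAY = 5
STUDY_DAYS = 33
POTENTIAL_SURVEYS = SURVEYS_PER_DAY * STUDY_DAYS


def completion_rate(completed: int, fielded: int) -> float:
    """Completion rate (%) = surveys with complete data / surveys in the period."""
    if fielded <= 0:
        raise ValueError("fielded must be positive")
    return 100.0 * completed / fielded


def weekly_completion_rates(records):
    """Aggregate hypothetical (week, was_completed) records into weekly rates."""
    fielded = defaultdict(int)
    completed = defaultdict(int)
    for week, was_completed in records:
        fielded[week] += 1
        completed[week] += int(was_completed)
    return {week: completion_rate(completed[week], fielded[week])
            for week in sorted(fielded)}


if __name__ == "__main__":
    # Illustrative records only; these are not data from the study.
    demo = [(1, True), (1, True), (1, False), (2, True), (2, False)]
    for week, rate in weekly_completion_rates(demo).items():
        print(f"Week {week}: {rate:.1f}%")
```

Keeping the rate calculation separate from the grouping step means the same function can be reused for other groupings, such as the time-of-day blocks in Table 2, by changing only the key carried in each record.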