ch03 - BYU Marriott School


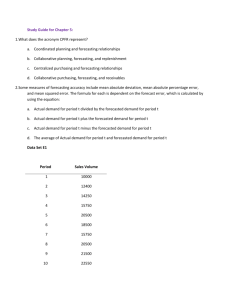

CHAPTER 3

TIME SERIES FORECASTING OF A PATTERNED PROCESS

INTRODUCTION

A time series measures an attribute, such as sales, at equal intervals of time. When a forecaster plots the sales history of a product, the plot often has a recurring pattern. Usually time is on the horizontal axis, and sales in dollars or units are on the vertical axis. Particular months may always be lower than other months year after year. Sales may also seem to grow slowly each year.

Figure 3-1 Here

Figure 3-1 is an example of a sales time series that is a patterned process. January and July are always higher than other periods. (This pattern is called seasonal.) Most consumer products are seasonal time series. The growth year after year is referred to as trend.

SEASONAL PROCESSES

Seasonal processes prevail in almost every sales time series. They are distinguished by a regular pattern of activity that recurs each year. The pattern is likely to be predictable from year to year, thus allowing for the extrapolation of the time series over time. Usually we assume a seasonal time series consists of monthly data, but the data may also be grouped into quarterly information or weekly reports. In many cases the usual assumption is that the seasonal period (pattern) repeats itself in one complete year. Some causes of seasonal effects on sales appear below.

Weather:
Sunshine: increased demand for ice cream, sunglasses, beer, soft drinks, camera film, and lotion
Cool weather: heating fuels, clothing, antifreeze, ski equipment, snow tires, and tire chains
Rainfall: raincoats, umbrellas, and taxi service

Holidays:
Christmas: food, toys, trees, cards, travel, hotel accommodation, sporting equipment, and clothing of all types
Easter: baskets, eggs, and fashion clothing
Mother's Day: confectionery, certain clothing, cards, and appliances

In each of the examples, the forecaster expects sales to increase during the period, as compared to the rest of the year.
Marketing people refer to "consumption holidays" and run special sales on Labor Day or on President's Day. Some people who have a day off from work because of the holiday spend the time shopping. This shopping raises average sales for months with holidays. Knowing this, forecasters must accommodate the seasonal pattern in their forecasts.

A first step in forecasting is to plot the data and observe if a seasonal pattern exists. Next the forecaster must find any natural, repetitive factors that influence the sales of the product and cause it to sell in a seasonal pattern. Holidays, weather, and promotions like January white sales can create the seasonal pattern. Many personal care products have a fall seasonal pattern because some individuals "stock up" for the winter. Again, since the seasonal effect is recurrent and periodic, it is predictable.

Seasonality is a very common forecasting problem, especially as a component of a firm's sales pattern. In most retailing enterprises, sales are higher in December; breweries and soft drink companies have sales patterns that follow the temperature, with high sales in warm weather and low sales in cool weather; ski manufacturers have a sales pattern that peaks every winter. One could create a virtually endless list of time series that exhibit seasonal patterns. In fact, it is difficult to find products that are not seasonal.

The seasonal pattern in figure 3-1 fits many clothing and household products. Shoe retailers typically run January and July shoe sales every year, causing sales to be greater in these two months than in other months. Many retail stores have annual white sales every January and July, which also cause sales to surge during these two months. Other traditional sales promotions that create seasonal patterns are post-Christmas, President's Day, Easter, Lent, Fourth of July, back-to-school, and store anniversary sales.
In addition to traditional sales promotions, consumer habits alone often produce seasonal patterns. Products such as pumpkin and mince pies, ham, turkey and corned beef, eggnog, chocolates, ice creams, and wedding dresses all have seasonal sales patterns because of consumer buying and consumption habits.

Another cause of seasonality in sales is availability. Some products, like fresh salmon and fresh strawberries, are only available during certain seasons. Whatever the cause, most businesses know their sales are patterned on a seasonal basis.

Seasonality for sales in manufacturing firms causes management problems because it requires the firm to hire and then lay off workers in order to build large inventories before the peak season. This increases the cost of storage and frequently generates a cash pinch because of inventory and payroll costs. A related problem is the sudden cash accumulation that occurs after the season. Many seasonal industries note that they borrow when funds are dear and lend when funds are plentiful.

Most production managers believe the most efficient use of production facilities occurs when production operates at a constant level. Accordingly, managers often offer price concessions during low sales periods to achieve more uniform operations by having some sales occur before or after the season. Another technique to smooth out the seasonal pattern is strong advertising during nonseasonal periods. In recent years, the turkey growers' organization has tried this approach in an effort to influence consumers to eat turkey during the spring, winter, and summer seasons. These efforts may complicate the effort to forecast sales because the seasonal pattern may shift over time. A third approach to lessening the impact of seasonality on the company's operations is to manufacture and market products that have complementary seasonal patterns.
For example, a firm manufacturing passenger lawn mowers may add snowmobiles to its product mix to smooth operations by smoothing the demand for its products.

Since seasonality can have such a dramatic impact on sales, the forecaster must consider it in developing his forecasting system. Several approaches to seasonality have been developed and are discussed later in this chapter.

TREND

In addition to seasonality, a second prominent pattern exhibited by most time series is trend. Trend refers to a pattern where sales seem to increase or decrease over a period of time.

Figure 3-2 Here

Figure 3-2 shows a time series with a trend pattern. Sales grow at a very consistent rate. The trend shown above can be described as positive and linear. Some time series have a nonlinear pattern. Both linear and nonlinear trends can be expressed in a mathematical formula. For example:

$$\text{Sales} = \text{constant} + (\text{slope} \times \text{months})$$

represents the mathematical pattern of linear trend in figure 3-2. This is the simple regression model explained in the prior chapter. The following values apply:

$$\text{constant} = 2400, \qquad \text{slope} = 100$$

If the forecaster wants to know sales at any period, he just substitutes the month number into the formula. For example, for the 10th month, October:

$$\text{Sales} = 2400 + 100 \times 10 = 3400$$

And for the 22nd month, October, one year later:

$$\text{Sales} = 2400 + 100 \times 22 = 4600$$

Many time series have linear trend or near linear trend. Those which are nonlinear can often be segmented, so that over various ranges or segments of the time series, the trend appears to be linear.

Figure 3-3 Here

The time series in figure 3-3 can be defined using three different linear equations. Many nonlinear time series can also be represented with a single equation.

Figure 3-4 Here

Figure 3-4 is an example of a nonlinear trend which can be represented by

$$\text{Sales} = 0 + 1000 \times \text{time}^2 \qquad (1)$$

Causes of Trend

Several factors cause trend. One basic factor is population growth.
As the number of potential buyers increases, the sales can increase. Trend can also be the result of more people becoming aware of the product. More or fewer competitors can cause a time series to develop a trend pattern. When the time series is expressed in dollars, the trend is often caused by inflation. Trends are significant factors in most time series, and the forecaster must therefore determine what the trend is and how to account for it in the forecasting system.

Another pattern that may appear in the time series is cyclicality.

Figure 3-5 Here

Figure 3-5 shows a time series with cyclicality. Cycles are really a special trend, and some forecasting models can handle cycles as part of trend. When the forecaster chooses to treat cyclic variations separately from trend, he must take cyclicality into account with an equation that includes sine and cosine terms to match the pattern.

Complex Time Series

Most time series are not just a trend or a seasonal pattern, but a combination of patterns. This complicates the forecasting efforts, since the forecaster must determine the effects of seasons and trends to do a good job. The effects of trend and seasonality may be interactive and difficult to separate. In such a case, forecasting can be very difficult.

Decomposing the Time Series

One approach to creating a forecasting model is to decompose the time series into its components or various patterns of trend and seasonality. Decomposing a time series is the process of removing each component or pattern step by step using mathematical procedures. If the forecaster correctly identifies the mathematical equation for trend and then detrends the time series, he removes a source of variance and simplifies the time series. If he then removes seasonality by determining the seasonal mathematical relationships and adjusting the data for seasonality, he removes a second source of complexity in the time series. What does the forecaster gain by this process?
He mathematically models various components of the time series. He can then put these mathematical relationships into his forecasting system. In theory and practice, the easiest time series to forecast is flat, linear, and stable.

Figure 3-6 Here

Figure 3-6 is such a time series. It is easy to forecast the next month: it is the same as last month, the month before, and so on. It is referred to as a stationary time series. Figure 3-7, on the other hand, is a complex time series with both seasonal and trend patterns. It is quite difficult to forecast. The forecaster must decompose the series by identifying each component and writing a mathematical function to express its relationship to the others. He then combines the mathematical relationships to form a forecasting equation or model.

The time series in figure 3-7 is sales of airplane tickets to tourists traveling to Hawaii. Figure 3-8 shows this time series with trend removed. Notice that it is still not a flat straight line, because two additional components of the time series are to be removed. These are (1) atypical events, and (2) what forecasters call noise. Atypical events are one-time sudden increases in orders and surges in sales caused by occurrences such as promotions, temporary price changes, strikes, tornadoes, legal actions, and price freezes. The forecaster usually uses company records or management discussions to identify atypical events. Once identified, the atypical value is replaced, and the time series thus modified, with what managers or the forecaster feel sales would have been without the atypical event. Building forecasting models for atypical periods is explained in chapter 5.

Figure 3-10 shows the Hawaii-tourist time series adjusted for seasonality, trend, and atypical events. Although the time series is not a flat, repetitive line, it is nearly so. Forecasters attribute minor variations from a flat line to noise.
Figure 3-7 Here

Figure 3-8 Here

Figure 3-9 Here

Figure 3-10 Here

Noise is a random process of the dynamic environment. The underlying process of seasonality, trend, cycles, and atypical events generates a time series. Noise consists of minor variances that slightly shift the underlying process from month to month. These minor shifts average to zero over the long run, so the expected value of noise is zero. The forecaster does not write a mathematical expression to model noise. He generally checks to see that noise (random error) averages zero.

BUILDING THE FORECASTING SYSTEM OR MODEL

In developing a model, the first step is generally to identify what components exist. There are three common approaches used to identify the components of a time series. One approach is to plot the data and estimate which components are present from the appearance of the plot.

A second approach is to use spectral analysis to determine the presence of seasonality, trend, and cycles. Spectral analysis decomposes the total variations from the stationary time series into contributions at a continuous range of frequencies. This procedure assumes the time series is made up of sine and cosine waves with different frequencies. Spectral analysis converts the time series from the time domain into the frequency domain. It then measures the intensity of various frequencies to determine how significant and important they are to the time series. A frequency of .083 is equivalent to 1/12, or a yearly frequency (one cycle every twelve observations) when the time series consists of monthly observations. If the intensity of the .083 frequency is high, the time series has a seasonal component. Most spectral programs remove linear trend before calculating the intensities at each frequency. A regression approach is used to remove the trend. The program identifies the trend and the mathematical expression that models the trend.
If the trend is nonlinear, the regression procedure does not remove all the trend, and the intensity of the low frequencies is very high.

A third method of determining the components of a time series is to examine autocorrelation and partial autocorrelation values. This is the basis of ARIMA forecasting models. ARIMA methods and autocorrelations are discussed in chapter 4.

Model Building

After forecasters have determined the components in the time series, they then have to determine the model that includes these variables and the values for these variables. The forecaster evaluates past sales to determine the model to use. He calculates necessary parameter values from past sales data. One of the advantages of time series forecasting is the elimination of complex and expensive efforts at gathering external data such as housing starts or interest rates. Also, time series forecasting generally produces the most accurate sales forecasts. For many products, the past is an excellent predictor of the future. Several studies have shown that for some products time series forecasting outperforms other forecasting techniques. Further, when the firm has many products to forecast, it may be possible to use one type of time series model successfully with all the products, which results in a lower cost of forecasting sales. Using econometric forecasting procedures, which were discussed in Chapter 2, frequently requires a different model for each product, which greatly increases the data gathering and forecasting efforts and costs. Finally, time series forecasts can be an automated process of an inventory control system, so that the forecasts are automatically produced each period at very little cost.

TIME SERIES FORECASTING MODELS

The Running Average Forecasting Technique

This forecasting technique works well if trend is fairly constant and if seasonality is not present in the data. The procedure takes past periods and averages them. The average is the sales forecast.
Each period, the forecast drops the oldest period and adds the most recent period. Figure 3-11 is a three-month running average for the time series showing sales of airline tickets to Hawaii.

Figure 3-11 Here

Table 3-1 Here

The major cause of error in this example is the seasonality of the time series; the running average model does not model seasonality. Figure 3-12 shows the three-month running average forecast with a seasonal factor incorporated into the model. Incorporating seasonal factors greatly improves accuracy. The model has a bias toward underforecasting because only a lagged trend is incorporated into the model.

A special case of the running average is the naive model. The forecast is last period's value ($\hat{y}_{t+1} = y_t$). As discussed in Chapter 2, the naive model is used as a benchmark, and if other models do not outperform the naive model, they are discarded.

Figure 3-12 Here

Table 3-2 Here

Seasonal Factors

Seasonal factors are most often multiplicative variables in the forecasting model. Sometimes an additive seasonal factor is calculated. Each month, forecasts use one of twelve different seasonal factors, one for each month of the year. Seasonal factors modify forecasts to match the peaks and valleys that seasonality causes in a time series. The seasonal factor relates a particular month to the average month. One way to calculate seasonal factors is to take each month's sales for a year and then average the data. This average is a base by which each of the months' actual sales is divided. A seasonal factor of .80 indicates that a month's sales are 20% less than the average month's. A seasonal factor of 1.15 indicates that that month's sales are 15% greater than the average month's sales. Figure 3-13 shows a plot of the calculated seasonal factors used in the forecast shown in figure 3-12.

Figure 3-13 Here

Dividing each month by the average of the twelve months gives the values in figure 3-13.

Table 3-3 Here

The above technique has two problems.
First, since only one year is used, one atypical month causes erroneous seasonal factors to be calculated. An atypical event, such as a strike or price promotion, affects sales, and the seasonal factor reflects the atypical event rather than the seasonal nature of the time series. In the above procedure, atypical events are interpreted as seasonal influences. To eliminate this problem, forecasters generally use at least two years' data in calculating seasonal factors, or they modify or change the atypical data to reduce the impact of the atypical event. Adjusting for atypical periods is discussed in Chapter 5.

The second problem with the procedure is that the data have trend; that is, sales are increasing over time. This trend effect is interpreted as seasonal effect. Removing the trend in the data before seasonal factors are calculated eliminates this problem. Figure 3-14 is a plot of the calculated seasonal factors using two years of detrended data.

Figure 3-14 Here

Table 3-4 is the calculation of trend, or monthly rate of change, in the time series showing sales of airline tickets to Hawaii. Column 2 indicates the month and year; column 3 (labeled X) is the consecutive numbering of each month through twenty-four. The average of column X is 12.5. Column 4 is actual sales. Column 5 is the difference between each value of column 3 and the average of X. Column 6 is column 4 multiplied by column 5. The total of column 6 is 580,000. Column 7 is the values in column 5 squared. Column 7's total is 1150. Dividing the total in column 6 by the total in column 7 gives trend. In this case trend is 580,000 / 1150, or about 504 per month. This means that because of trend, sales have grown by 504 after one month and by 11,592 after 23 months. The increase in sales due to trend is shown in column 1. Subtracting column 1 values from actual sales (column 4), one obtains the detrended sales needed to calculate seasonal factors. These values appear in column 8.
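The column arithmetic described for Table 3-4 can be sketched in a few lines of Python. This is only an illustration: the table itself is not reproduced here, so the sales series below is hypothetical (a base level plus exactly 504 per month), and the function names are assumptions rather than anything from the chapter.

```python
def trend_per_period(sales):
    """Slope of sales against the period number 1..n (the Table 3-4 procedure)."""
    n = len(sales)
    periods = range(1, n + 1)                      # column 3 (X)
    x_bar = sum(periods) / n                       # 12.5 when n = 24
    deviations = [x - x_bar for x in periods]      # column 5
    cross = sum(d * y for d, y in zip(deviations, sales))   # total of column 6
    squares = sum(d * d for d in deviations)       # total of column 7 (1150 when n = 24)
    return cross / squares


def detrend(sales):
    """Subtract the accumulated trend (0 for the first month) from actual sales."""
    slope = trend_per_period(sales)
    return [y - slope * months_elapsed for months_elapsed, y in enumerate(sales)]


# Hypothetical data: 24 months growing by exactly 504 per month, no seasonality.
hypothetical_sales = [120_000 + 504 * m for m in range(24)]
slope = trend_per_period(hypothetical_sales)
flat_sales = detrend(hypothetical_sales)
print(round(slope), round(slope * 23))
```

With real data the detrended values would still show the seasonal peaks and valleys; here they are flat because the hypothetical series contains nothing but trend.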
It is this column's figures that are used to calculate seasonal factors. The average of this column is 138,000. Table 3-5 is the procedure for calculating the seasonal factors. The two detrended values for each month are averaged, and this average is divided by the overall average for all the detrended months.

Table 3-4 Here

Table 3-5 Here

An Additional Method of Calculating Seasonality

A second method of calculating seasonal factors, which doesn't require detrending, is to divide the actual sales by the average of the twelve months centered on that month, that is, the month itself and the 5½ months before and after it. Using the actual data in table 3-4, one can calculate the seasonal factor for July:

$$\frac{188{,}000}{\tfrac{1}{12}\left[\tfrac{1}{2}(110{,}000) + 123{,}000 + 148{,}000 + 125{,}000 + 131{,}000 + 180{,}000 + 188{,}000 + 219{,}000 + 135{,}000 + 149{,}000 + 123{,}000 + 162{,}000 + \tfrac{1}{2}(115{,}000)\right]} = \frac{188{,}000}{149{,}625} \approx 1.26$$

Taking equal amounts of data before and after the month whose seasonal factor is in question eliminates the problem of interpreting trend as seasonality. Unfortunately, this procedure doesn't lessen the problem of having atypical data periods interpreted as seasonality. This procedure is similar to the one used by the Census Bureau in calculating seasonal factors in their X-11 program, which is the process most forecasters use to calculate seasonal factors.

Additional Limitations of Running Averages

An additional limitation of the running average forecast is that the forecast always lags behind the actual sales when trend is present. To eliminate this problem, the forecaster adds the trend variable to the forecast of sales. For sales of airline tickets to Hawaii, the trend value is 504 per month. Thus if forecasters forecasted one month ahead, they would calculate the running average and add 504 to it. If they forecasted two months ahead, they would add 1008 to their forecast.

A Trend Forecasting Model

If seasonality is not present in the time series, it is possible to dispense with the running average and simply calculate a trend variable.
Adding the trend variable to last month's actual sales allows next month's sales to be forecasted. The procedure for calculating trend is found in table 3-4. This procedure is applicable to both positive and negative trends. If the trend is positive, the trend variable is a positive value. If the time series slopes downward, the trend variable is a negative value.

The Exponential Smoothing Model

This model has received widespread acceptance among American business firms that employ sales forecasts for managerial planning and control. Most computer software packages for inventory control use exponential smoothing for forecasting. Exponential smoothing is generally the most accurate of the time series forecasting models.

Exponential smoothing models use special weighted moving averages and a seasonal factor that is multiplied by the weighted moving average to calculate the forecast. These weighted moving averages are referred to as smoothing statistics. The exponential smoothing models are an extension of the running average model. Generally, exponential smoothing uses three smoothed statistics that are weighted, so that the more recent the data, the more weight given the data in producing a forecast. These three averages are referred to as single, double, and triple smoothing statistics and are running averages whose weights decline exponentially with the age of the data.

Most forecasting systems use three separate forecasting equations: one model called a constant model, a second called a linear model, and a third called a quadratic model. The constant forecast uses only the single smoothed statistic and is best when the time series has little trend. The linear forecast model uses the single and double smoothed statistics and is best when there is a linear trend in the time series. The quadratic forecast model uses all three statistics: single, double, and triple smoothed.
A forecasting system using the exponential smoothing model continually evaluates forecasting accuracy and selects the forecasting equation with the most accurate forecasting history. If the equation no longer forecasts as accurately as one of the other equations because the environment has changed, the system automatically switches to the equation that will forecast best. This automatic switching of equations is called adaptive forecasting. Other adapting techniques sometimes built into the model are adapting the weighting scheme (changing the smoothing constant used) and smoothing past errors into future forecasts to reduce bias. If a forecast is consistently underforecasted, adding the average past error into the forecast will eliminate the bias.

An example of exponential smoothing is given in both table 3-6 and table 3-7. Table 3-6 is the retail department store sales for Phoenix from January 1974 to December 1980. These values are used to establish an exponential smoothing model to forecast future retail department store sales. Table 3-7 shows the forecast's results. When the actual sales for 1981 are compared with the forecasted sales, it is clear that the model can accurately forecast future department store sales.

Table 3-6 Here

Table 3-7 Here

The Smoothing Process

Each month all three smoothing statistics are updated by the most recent month's sales. The process of updating is called smoothing because a fixed percent, alpha ($\alpha$), of the most recent sales is added to $(1-\alpha)$ times the old single smoothing statistic. For example, if $\alpha$ were .3 and sales were $X$, a new single smoothing statistic $S_t^{[1]}(X)$ would be calculated by taking $.3X + (1 - .3)\,S_{t-1}^{[1]}(X) = S_t^{[1]}(X)$. Note that $S_{t-1}^{[1]}(X)$ refers to last month's single smoothing statistic. If $t-2$ were used in the statistic, it would refer to the statistic two months ago. Next month's statistic would be $t+1$. The $t$ in $S_t^{[1]}(X)$ is the current statistic.
This is a convenient notational technique to designate what statistic is being referred to. The [1] in $S_t^{[1]}$ does not refer to an exponential power, but rather identifies that this is the single smoothed statistic. $S_t^{[2]}(X)$ refers to the double smoothed statistic. The $(X)$ in both statistics signifies that the statistic is calculated from the time series being examined (sales).

It was explained in Chapter 2 that to signify our forecast, we use $\hat{X}_{t+1}$. The ^ is referred to as hat. This signifies that the value under the hat is an estimate rather than an actual value: $\hat{X}_{t+1}$ is an estimate of $X$. The $t+1$ signifies that the estimate is for the current period, $t$, plus 1, which is next month. The error of estimation is calculated by $(X_t - \hat{X}_t)$. In one month, $X_{t+1}$ will have become $X_t$.

The double smoothed statistic is calculated like the single smoothed statistic with one exception: actual sales are replaced by the new single smoothing statistic. Last month's double smoothing statistic $S_{t-1}^{[2]}(X)$ is multiplied by $(1-\alpha)$ and added to $\alpha\,S_t^{[1]}(X)$, giving $S_t^{[2]}(X)$. The boxed 2, [2], indicates this is the double smoothing statistic. The triple smoothing statistic continues the process. The new triple smoothed statistic is calculated by adding $\alpha\,S_t^{[2]}(X)$ to $(1-\alpha)\,S_{t-1}^{[3]}(X)$.

The smoothing process takes into account trend and cycles but ignores seasonality. Therefore, a seasonality variable is included in the forecasting equation. To keep from confounding or combining seasonal effects and trends or cycles, we divide the actual sales data ($X_t$) by the seasonal factor for month $t$ before it is used in the smoothing process. If the seasonal factor is signified by $\rho_t$, the single smoothed statistic for seasonal data is calculated

$$S_t^{[1]}(X) = \alpha\,\frac{X_t}{\rho_t} + (1-\alpha)\,S_{t-1}^{[1]}(X) \qquad (2)$$

The procedure for calculating seasonal factors is given in an earlier section of this chapter.
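The monthly update of the three smoothing statistics can be sketched as a single Python function. The function and parameter names are illustrative assumptions; the figures in the usage lines are the ones the chapter's own January 1972 example uses (statistics of 190,000, 185,000, and 184,000, sales of 159,097, a seasonal factor of .80, and alpha of .1).

```python
def smooth_update(s1, s2, s3, sales, seasonal_factor, alpha):
    """Return the new single, double, and triple smoothing statistics.

    s1, s2, s3 are last month's statistics; sales is this month's actual value.
    """
    deseasonalized = sales / seasonal_factor            # remove the seasonal effect first
    new_s1 = alpha * deseasonalized + (1 - alpha) * s1  # single: smooths the sales
    new_s2 = alpha * new_s1 + (1 - alpha) * s2          # double: smooths the new single
    new_s3 = alpha * new_s2 + (1 - alpha) * s3          # triple: smooths the new double
    return new_s1, new_s2, new_s3


s1, s2, s3 = smooth_update(190_000, 185_000, 184_000,
                           sales=159_097, seasonal_factor=0.80, alpha=0.1)
print(round(s1), round(s2), round(s3))  # 190887 185589 184159
```

Each statistic feeds the next, so the order of the three assignments matters: the double statistic must be computed from the new single statistic, not from last month's.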
The Forecasting Equations

The three forecasting equations are designed to generate forecasts that follow three different patterns. The constant equation models a flat, constant pattern. This forecasting equation is

$$\hat{X}_{t+1} = \rho_{t+1}\,S_t^{[1]}(X)$$

where $\rho_{t+1}$ is the seasonal factor for the month being forecast. Figure 3-15 shows the patterns of time series forecasted best by the constant equation.

Figure 3-15 Here

The linear equation is

$$\hat{X}_{t+1} = \rho_{t+1}\left[d_1 S_t^{[1]}(X) - d_2 S_t^{[2]}(X)\right]$$

The patterns that this equation models best are those showing a linear trend. In the equation, $d_1$ and $d_2$ are lag factors. The smoothing statistics lag the actual values. The value of $d_1$ is

$$d_1 = 2 + \frac{\alpha\tau}{1-\alpha}$$

The lag for $d_2$ is

$$d_2 = 1 + \frac{\alpha\tau}{1-\alpha}$$

Figure 3-15 also illustrates the patterns of time series forecasted best by the linear equation. The number of future periods for which the forecast is being made is indicated by $\tau$. If we are forecasting $\hat{X}_{t+1}$, $\tau$ equals one; if we are forecasting $\hat{X}_{t+2}$, $\tau$ equals 2; etc.

The quadratic forecasting equation is designed to forecast time series with nonlinear trend. The equation is as follows:

$$\hat{X}_{t+1} = \rho_{t+1}\left[T_1 S_t^{[1]}(X) - T_2 S_t^{[2]}(X) + T_3 S_t^{[3]}(X)\right] \qquad (3)$$

$T_1$, $T_2$, and $T_3$ are lag correction variables. The calculation of lag coefficients is somewhat mathematically complicated and is as follows:

$$T_1 = \frac{6(1-\alpha)^2 + (6-5\alpha)\alpha\tau + \alpha^2\tau^2}{2(1-\alpha)^2} \qquad (4)$$

$$T_2 = \frac{6(1-\alpha)^2 + 2(5-4\alpha)\alpha\tau + 2\alpha^2\tau^2}{2(1-\alpha)^2} \qquad (5)$$

$$T_3 = \frac{2(1-\alpha)^2 + (4-3\alpha)\alpha\tau + \alpha^2\tau^2}{2(1-\alpha)^2} \qquad (6)$$

An example of exponential smoothing forecasting can be made using the seasonal factors in figure 3-13.
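The lag coefficients and the three forecasting equations can be sketched in Python as follows. The function names, the dictionary of results, and the `tau` parameter name for the number of periods ahead are assumptions made for this illustration; the formulas are the standard constant, linear, and quadratic smoothing forecasts described above.

```python
def linear_lags(alpha, tau=1):
    """Lag factors d1 and d2 for the linear equation, tau periods ahead."""
    ratio = alpha * tau / (1 - alpha)
    return 2 + ratio, 1 + ratio


def quadratic_lags(alpha, tau=1):
    """Lag coefficients T1, T2, T3 for the quadratic equation."""
    beta_sq = (1 - alpha) ** 2
    at = alpha * tau
    denominator = 2 * beta_sq
    t1 = (6 * beta_sq + (6 - 5 * alpha) * at + at ** 2) / denominator
    t2 = (6 * beta_sq + 2 * (5 - 4 * alpha) * at + 2 * at ** 2) / denominator
    t3 = (2 * beta_sq + (4 - 3 * alpha) * at + at ** 2) / denominator
    return t1, t2, t3


def forecasts(s1, s2, s3, seasonal_factor, alpha, tau=1):
    """Constant, linear, and quadratic forecasts from the current statistics."""
    d1, d2 = linear_lags(alpha, tau)
    t1, t2, t3 = quadratic_lags(alpha, tau)
    return {
        "constant": seasonal_factor * s1,
        "linear": seasonal_factor * (d1 * s1 - d2 * s2),
        "quadratic": seasonal_factor * (t1 * s1 - t2 * s2 + t3 * s3),
    }


# With alpha = .1 and a one-month horizon the coefficients come out near
# 2.1111 and 1.1111 (linear) and 3.34568, 3.58025, 1.23457 (quadratic).
print([round(c, 5) for c in linear_lags(0.1) + quadratic_lags(0.1)])
```

Keeping the coefficients in separate functions makes it easy to recompute them for a longer horizon, since only `tau` changes while the smoothing statistics stay the same.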
If the initial smoothing statistics, after smoothing in the December 1971 sales, were

$$S_t^{[3]}(X) = 184{,}000, \qquad S_t^{[2]}(X) = 185{,}000, \qquad S_t^{[1]}(X) = 190{,}000$$

and the smoothing constant $\alpha = .1$ and the January seasonal factor $\rho_{t+1}$ equals .80, the January 1972 forecasts would be:

Constant:

$$\hat{X}_{t+1} = \rho_{t+1}\,S_t^{[1]}(X) = .80(190{,}000) = 152{,}000 \qquad (7)$$

Linear:

$$\hat{X}_{t+1} = \rho_{t+1}\left[d_1 S_t^{[1]}(X) - d_2 S_t^{[2]}(X)\right] = .80\left[2.1111(190{,}000) - 1.1111(185{,}000)\right] = .80(401{,}109 - 205{,}553) = 156{,}444 \qquad (8)$$

Quadratic:

$$\hat{X}_{t+1} = \rho_{t+1}\left[T_1 S_t^{[1]}(X) - T_2 S_t^{[2]}(X) + T_3 S_t^{[3]}(X)\right] = .80\left[3.34568(190{,}000) - 3.58025(185{,}000) + 1.23457(184{,}000)\right] = .80(635{,}679 - 662{,}346 + 227{,}161) = .80(200{,}494) = 160{,}395 \qquad (9)$$

If actual sales for January 1972 were 159,097, to make a forecast for February 1972, the forecasting program would smooth in the January sales:

$$S_t^{[1]}(X) = \alpha\,\frac{X_t}{\rho_t} + (1-\alpha)\,S_{t-1}^{[1]}(X) = .1\left(\frac{159{,}097}{.80}\right) + .9(190{,}000) = 19{,}887 + 171{,}000 = 190{,}887 \qquad (10)$$

$$S_t^{[2]}(X) = \alpha\,S_t^{[1]}(X) + (1-\alpha)\,S_{t-1}^{[2]}(X) = .1(190{,}887) + .9(185{,}000) = 19{,}089 + 166{,}500 = 185{,}589 \qquad (11)$$

$$S_t^{[3]}(X) = \alpha\,S_t^{[2]}(X) + (1-\alpha)\,S_{t-1}^{[3]}(X) = .1(185{,}589) + .9(184{,}000) = 18{,}559 + 165{,}600 = 184{,}159 \qquad (12)$$

With a February seasonal factor of .82, the February forecasts would be:

Constant:

$$.82(190{,}887) = 156{,}527$$

Linear:

$$.82\left[(2.11111)(190{,}887) - (1.11111)(185{,}589)\right] = .82(196{,}774) = 161{,}354$$

Quadratic:

$$.82\left[(3.34568)(190{,}887) - (3.58025)(185{,}589) + (1.23457)(184{,}159)\right] = 165{,}270$$

Actual sales in February 1972 were 191,424. To forecast March 1972 sales, the program would smooth in the February sales and then make a new forecast. For April ($\hat{X}_{t+2}$), the lag coefficients $d_1$, $d_2$, $T_1$, $T_2$, and $T_3$ would be calculated with $\tau = 2$.

Gardner (1985) discusses various methods used to estimate initial smoothing statistic values. Makridakis and Hibon (1991) have looked at the effect that initial smoothing statistic values have on forecasting accuracy.

Selecting Alpha

Alpha is selected by a simulation method. Various alphas between .01 and .99 are used to forecast an initial period.
Usually, the same years used to calculate seasonal factors are also used to determine which alpha predicts best. The criterion for determining the alpha is accuracy. Usually the constant, linear, and quadratic equations are tested with various alphas. The forecast with the lowest error determines which alpha and model to use for future forecasts.

The larger the alpha, the greater the weight of recent data and the less the weight of earlier data. The formula for determining the weight of data (past months) is $\alpha(1-\alpha)^k$, where $k$ is the data's age in months. The weight of this month is equal to $\alpha$ in the smoothing statistic since $k = 0$; the weight of last month in the smoothing statistic is $\alpha(1-\alpha)$. Theoretically, this way of calculating smoothing statistics is appropriate because the most recent data have the greatest impact on the forecast.

Figure 3-16 Here

Figure 3-16 shows the weights for various values of alpha. Note how quickly the effects of past data diminish as alpha increases. When alpha equals .1, the data affect the smoothing statistic for eighteen months. This is in contrast to an alpha of .3, which only uses nine months of data to calculate the statistic.

The initial values of the smoothing statistics also are often determined with the initial data from which seasonal factors were calculated. The procedure is to start each of the three smoothing statistics at the earliest month's sales value and then to smooth in actual sales up to the period for which forecasts will be made. The resulting smoothing statistics are used to start forecasting. Another way of establishing smoothing statistics is to use the average monthly sales for all three smoothing statistics as the initial smoothing statistic values. A third procedure is to forecast backwards the past values from a point in time; this is called backcasting. The firm then forecasts from the last backcasted value to the present value. The forecasting system updates smoothing values ($\alpha$'s) each time new forecasts are made.
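The weighting scheme and the simulation approach to choosing alpha can be sketched in Python. Several things here are assumptions made for the illustration: the current month is counted as age 0, only the constant (single smoothed) equation is simulated, the data are taken to be already deseasonalized, the accuracy criterion is mean absolute error, and the single smoothing statistic starts at the earliest month's value. All function names are hypothetical.

```python
def weights(alpha, months):
    """Weight given to data of age 0, 1, ..., months - 1 in the single statistic."""
    return [alpha * (1 - alpha) ** age for age in range(months)]


def constant_model_error(sales, alpha):
    """Mean absolute one-month-ahead error of the constant model on past sales."""
    statistic = sales[0]                 # start at the earliest month's value
    absolute_errors = []
    for actual in sales[1:]:
        absolute_errors.append(abs(actual - statistic))       # forecast = current statistic
        statistic = alpha * actual + (1 - alpha) * statistic  # then smooth in the actual
    return sum(absolute_errors) / len(absolute_errors)


def select_alpha(sales, candidates=None):
    """Try alphas from .01 to .99 and keep the one with the lowest error."""
    if candidates is None:
        candidates = [i / 100 for i in range(1, 100)]
    return min(candidates, key=lambda a: constant_model_error(sales, a))


# Share of the total weight carried by the most recent months.
print(round(sum(weights(0.1, 18)), 2), round(sum(weights(0.3, 9)), 2))
```

A fuller version would repeat the search for the linear and quadratic equations and keep whichever combination of alpha and equation gives the lowest error.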
Broze and Mélard (1990) have suggested a maximum likelihood approach to estimating alpha. The alpha used is an important part of building an accurate forecasting model, and research has been done to determine the best alpha level (for example, see Newbold and Bos [1989] and Gardner [1985]).

CORRECTING BIAS

If a forecast does not have an average error of zero over time, the forecast is biased: it is consistently either an over-forecast or an under-forecast. In that case, adding or subtracting a constant increases forecasting accuracy. Exponential smoothing models often add an exponentially smoothed error term to the forecast to correct bias. The process smooths the errors just as it smooths the actual data, producing an error smoothing statistic. Each time a forecast is made, an error is calculated by subtracting the forecast from the actual value, and the error is smoothed into the old error smoothing statistic. This error smoothing statistic is then added to the next forecast. If the model underforecasts, the errors are positive and the error smoothing statistic is also positive, so the next forecast is increased. If the forecasts are consistently higher than the actual values, the errors are negative and the error smoothing statistic is negative, so adding it reduces the next forecast. Usually only the constant (single smoothed) error smoothing statistic is calculated, and usually a small alpha, such as .1, is used.

When a forecast is biased over time, it is usually because the wrong model or the wrong parameter values are used. Thus the forecaster should try a different model or different parameter values when a bias exists. However, when the bias is small, the forecaster may already have the best model and best parameter values. In that case the error correction smoothing statistic makes minor adjustments that modestly improve forecasts.
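A minimal Python sketch of the bias correction just described follows. The function name is hypothetical, and two details are assumptions because the text does not settle them: the error smoothing statistic starts at zero, and the error smoothed in is that of the uncorrected model forecast (actual minus forecast).

```python
def bias_corrected_forecasts(model_forecasts, actuals, alpha=0.1):
    """Add a single-smoothed error statistic to each model forecast.

    model_forecasts: forecasts from the underlying model, one per period.
    actuals: actual sales for the same periods.
    alpha: smoothing constant for the errors (the text suggests a small
           value such as .1).
    Returns the list of corrected forecasts.
    """
    error_stat = 0.0  # assumption: no bias is known before the first period
    corrected = []
    for forecast, actual in zip(model_forecasts, actuals):
        # The correction uses only errors observed in earlier periods.
        corrected.append(forecast + error_stat)
        # Positive error means the model under-forecast this period.
        error = actual - forecast
        error_stat = alpha * error + (1 - alpha) * error_stat
    return corrected


if __name__ == "__main__":
    # A model that under-forecasts by 10 every period.
    model = [100.0] * 6
    actual = [110.0] * 6
    print([round(f, 2) for f in bias_corrected_forecasts(model, actual)])
```

With a constant under-forecast, the corrected forecasts climb gradually toward the actual values, which illustrates why a small alpha yields only modest, slow adjustments.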
Cipra (1992) has suggested a method of making exponential smoothing robust to outliers, which reduces some bias errors.

Advantages of Exponential Smoothing Forecasting

The advantages of the exponential model are many. Although the process is somewhat tedious when done by hand, it is straightforward and easy when programmed for a computer. Many computer programs that use exponential smoothing have been written, and they are readily available. Chapter 11 discusses many computer programs that use exponential smoothing forecasting models. Exponential smoothing models adapt to environmental changes and are self-correcting. The firm with many products needs only one program with three equations to forecast all its products' sales. The procedure has proved effective for a wide spectrum of product forecasts. Only past data are used; thus the problem and expense of collecting external data are eliminated.

SUMMARY

Time series data contain a great deal of information about the sales processes of the company. Some factors hidden in the data are the seasonality, trend, and cyclicality of sales. Seasonality is dependent on the characteristics of the product and the people buying it. Managers attempt to decrease the seasonality of sales. A time series forecast can mathematically explain products' seasonality and show how the seasonality changes over time. Trends and cycles also occur with sales. Trends can be modeled as linear, segmented linear, or nonlinear. (Cyclical sales can be modeled by an equation using sine or cosine functions.) These sales patterns make up the underlying process of sales for the company. Each component of the sales pattern can be detected in the time series data, and detecting the components describes the underlying process of sales more fully.
Three approaches to breaking the time series data into components are (1) to plot the data and estimate the components, (2) to do a spectral analysis on the data, and (3) to use ARIMA forecasting models (see Chapter Four). Two models explained in the chapter are the running average forecasting technique and the exponential smoothing model. The running average forecast calculates seasonal factors, which are multiplied by the average sales of the previous three months to determine the monthly sales forecast. A trend factor is used to increase the accuracy of the running average method. The exponential smoothing technique is a self-adjusting weighted running average model that uses a special weighted moving average factor and the seasonal factor to calculate the forecast. The three types of exponential smoothing forecasts are the constant forecast, the linear forecast, and the quadratic forecast. Each uses a different equation with a different weighted moving average (smoothing statistic). Alpha values and bias correction factors adjust exponential smoothing forecasts.

Chapter Three Questions

1. What are the three types of variability within time series data?
2. Give three examples of how managers can decrease seasonality effects.
3. Give four factors which can cause trends in sales.
4. After one decomposes a time series and subtracts out the components, why does the remaining data often still show variability?
5. What are the three approaches discussed in the chapter for building a forecasting model?
6. Explain the running average forecasting technique. What kind of data does it work well with?
7. What is a seasonality factor? How is it calculated?
8. Give two problems associated with the running average forecasting technique.
9. What are the three forecasting equations? What kind of data does each equation forecast?
10. What are the smoothing statistics? How are they used in the three forecasting equations?
11. Which variable, trend or seasonality, is included in the smoothing process and which isn't?
12. How does the alpha variable affect the forecast?
13. How is bias subtracted from an exponential smoothing forecast?

REFERENCES

Broze, L. and G. Mélard (1990), "Exponential Smoothing: Estimation by Maximum Likelihood," Journal of Forecasting 9, 445-455.

Cipra, T. (1992), "Robust Exponential Smoothing," Journal of Forecasting 11, 57-69.

Gardner, E. S., Jr. (1985), "Exponential Smoothing: The State of the Art," Journal of Forecasting 4, 1-28.

Granger, C. W. J. and P. Newbold (1972), "Economic Forecasting: The Atheist's Viewpoint," Nottingham University Forecasting Project, Note 11.

Jenkins, G. M. and D. G. Watts (1968), Spectral Analysis and Its Applications (San Francisco: Holden-Day).

Makridakis, S. and M. Hibon (1991), "Exponential Smoothing: The Effect of Initial Values and Loss Functions on Post-Sample Forecasting Accuracy," International Journal of Forecasting 7, 317-330.

Naylor, T. H., T. H. Seaks, and D. W. Wichern (1972), "Box-Jenkins Methods: An Alternative to Econometric Models," International Statistical Review 40, No. 2.

Newbold, P. and T. Bos (1989), "On Exponential Smoothing and the Assumption of Deterministic Trend Plus White Noise Data-Generating Models," International Journal of Forecasting 5, 523-527.

Parsons, L. J. and W. A. Henry (1972), "Testing Equivalence of Observed and Generated Time Series Data by Spectral Methods," Journal of Marketing Research 9, 391-395.

Table 3-1 AIRLINE TICKETS SOLD TO HAWAII: Three-Month Running Average to Forecast 1970

| Month | Previous 3 Months | Forecast | Actual | Error |
|---|---|---|---|---|
| Jan., 1970 | (D, N, O)/3 | 125 | 118 | 7 |
| Feb. | (J, D, N)/3 | 127 | 122 | 5 |
| Mar. | (F, J, D)/3 | 129 | 148 | 19 |
| Apr. | (M, F, J)/3 | 129 | 124 | 5 |
| May | (A, M, F)/3 | 131 | 130 | 1 |
| June | (M, A, M)/3 | 134 | 180 | 46 |
| July | (J, M, A)/3 | 145 | 188 | 43 |
| Aug. | (J, J, M)/3 | 166 | 217 | 51 |
| Sept. | (A, J, J)/3 | 195 | 134 | 61 |
| Oct. | (S, A, J)/3 | 180 | 149 | 31 |
| Nov. | (O, S, A)/3 | 167 | 123 | 44 |
| Dec. | (N, O, S)/3 | 135 | 163 | 28 |

*All figures in thousands.

Table 3-2 AIRLINE TICKETS TO HAWAII TIME SERIES: Three-Month Running Average with Seasonal Factors, 1970 Forecast

| Month | S.F. | 3 Mo. Ave. | Forecast (S.F. x 3 Mo. Ave.) | Actual | Error |
|---|---|---|---|---|---|
| Jan. | .85 | 131 | 111 | 118 | -7 |
| Feb. | .86 | 127 | 109 | 122 | -13 |
| Mar. | .89 | 129 | 114 | 148 | -34 |
| Apr. | .84 | 129 | 108 | 124 | -16 |
| May | .97 | 131 | 127 | 130 | -3 |
| June | 1.21 | 134 | 162 | 180 | -18 |
| July | 1.19 | 145 | 173 | 188 | -7 |
| Aug. | 1.20 | 166 | 199 | 217 | -18 |
| Sept. | .94 | 195 | 183 | 134 | +49 |
| Oct. | 1.02 | 180 | 184 | 149 | +35 |
| Nov. | .90 | 167 | 144 | 123 | +21 |
| Dec. | 1.14 | 135 | 159 | 162 | -3 |

*All figures in thousands.

Table 3-3 SEASONAL FACTORS FOR VISITORS TO HAWAII

12 seasonal factors are developed for a year. The forecast is equal to the last 3 month average x S.F.

Develop seasonal factors by:

1. Sum the 12 months' sales.
2. Divide the sum by 12, giving the average.
3. Divide each month's sales by the average, giving the seasonal factors.

| Month (1969) | Sales | Seasonal factor |
|---|---|---|
| J | 110 | 110/129 = .85 |
| F | 111 | 111/129 = .86 |
| M | 115 | 115/129 = .89 |
| A | 108 | 108/129 = .84 |
| M | 125 | 125/129 = .97 |
| J | 156 | 156/129 = 1.21 |
| J | 152 | 152/129 = 1.19 |
| A | 155 | 155/129 = 1.20 |
| S | 121 | 121/129 = .94 |
| O | 131 | 131/129 = 1.02 |
| N | 116 | 116/129 = .90 |
| D | 147 | 147/129 = 1.14 |

Total = 1547; 1547/12 = 129.

The symbol used for seasonal factors is .

Table 3-4 TREND CALCULATIONS** (REGRESSION)

| Column 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 |
|---|---|---|---|---|---|---|---|
| Date | X1 | Y1 | X1 - X̄ | Y1(X1 - X̄) | (X1 - X̄)² | | Detrended Sales Data |
| 1969 Jul. | 1 | 152000 | -11.5 | -1748000 | 132.25 | 0 | 152000 |
| Aug. | 2 | 155000 | -10.5 | -1627500 | 110.25 | 504 | 154000 |
| Sep. | 3 | 121000 | -9.5 | -1149500 | 90.25 | 1008 | 120000 |
| Oct. | 4 | 131000 | -8.5 | -1113500 | 72.25 | 1512 | 129000 |
| Nov. | 5 | 116000 | -7.5 | -870000 | 56.25 | 2016 | 114000 |
| Dec. | 6 | 147000 | -6.5 | -955500 | 42.25 | 2520 | 144000 |
| 1970 Jan. | 7 | 116000 | -5.5 | -638000 | 30.25 | 3024 | 115000 |
| Feb. | 8 | 123000 | -4.5 | -553500 | 20.25 | 3528 | 119000 |
| Mar. | 9 | 148000 | -3.5 | -518000 | 12.25 | 4032 | 144000 |
| Apr. | 10 | 125000 | -2.5 | -312500 | 6.25 | 4536 | 120000 |
| May | 11 | 131000 | -1.5 | -196500 | 2.25 | 5040 | 126000 |
| Jun. | 12 | 180000 | -0.5 | -90000 | 0.25 | 5544 | 174000 |
| Jul. | 13 | 188000 | 0.5 | 94000 | 0.25 | 6048 | *182000 |
| Aug. | 14 | 217000 | 1.5 | 325500 | 2.25 | 6552 | 210000 |
| Sep. | 15 | 135000 | 2.5 | 337500 | 6.25 | 7056 | 128000 |
| Oct. | 16 | 149000 | 3.5 | 521500 | 12.25 | 7560 | 141000 |
| Nov. | 17 | 123000 | 4.5 | 553500 | 20.25 | 8064 | 119000 |
| Dec. | 18 | 162000 | 5.5 | 891000 | 30.25 | 8568 | 153000 |
| 1971 Jan. | 19 | 115000 | 6.5 | 747500 | 42.25 | 9072 | 106000 |
| Feb. | 20 | 127000 | 7.5 | 952500 | 56.25 | 9576 | 117000 |
| Mar. | 21 | 136000 | 8.5 | 1156000 | 72.25 | 10080 | 126000 |
| Apr. | 22 | 144000 | 9.5 | 1368000 | 90.25 | 10584 | 133000 |
| May | 23 | 144000 | 10.5 | 1512000 | 110.25 | 11088 | 133000 |
| Jun. | 24 | 165000 | 11.5 | 1897500 | 132.25 | 11592 | 153000 |
| Total | 300 | | | 584000 | 1150 | | 3312000 |
| Mean (X̄) | 12.5 | | | | | | 138000 |

* Mid-point

** Rate of growth of time series: b = 584000 / 1150 = 507.83

Table 3-5 CALCULATION OF SEASONAL FACTORS*

| Month | Total | Ave. | X̄ | SF |
|---|---|---|---|---|
| Jul. | 152 + 182 = 334 | 167 | 138 | 1.21 |
| Aug. | 154 + 210 = 364 | 182 | 138 | 1.32 |
| Sep. | 120 + 128 = 248 | 124 | 138 | 0.90 |
| Oct. | 129 + 141 = 270 | 135 | 138 | 0.98 |
| Nov. | 114 + 115 = 229 | 115 | 138 | 0.83 |
| Dec. | 144 + 153 = 297 | 149 | 138 | 1.08 |
| Jan. | 115 + 106 = 221 | 111 | 138 | 0.80 |
| Feb. | 119 + 117 = 236 | 113 | 138 | 0.82 |
| Mar. | 144 + 126 = 270 | 135 | 138 | 0.98 |
| Apr. | 120 + 133 = 253 | 127 | 138 | 0.92 |
| May | 126 + 133 = 259 | 130 | 138 | 0.94 |
| Jun. | 174 + 153 = 327 | 164 | 138 | 1.19 |

*All figures in thousands.

Table 3-6 DATA USED TO BUILD THE MODELS (Phoenix Department Store Sales): Actual Sales by Date

| Month | 1974 | 1975 | 1976 | 1977 | 1978 | 1979 | 1980 |
|---|---|---|---|---|---|---|---|
| January | 27356 | 27356 | 31884 | 34738 | 40454 | 47214 | 52168 |
| February | 26162 | 26678 | 31282 | 36300 | 38627 | 45922 | 53264 |
| March | 32315 | 33820 | 38242 | 44711 | 49760 | 56728 | 59909 |
| April | 34335 | 33095 | 39452 | 43917 | 47368 | 58598 | 60377 |
| May | 36267 | 37616 | 39883 | 42765 | 49245 | 56725 | 62341 |
| June | 32430 | 33733 | 38204 | 40288 | 49616 | 55832 | 56022 |
| July | 30522 | 31402 | 37335 | 40222 | 44119 | 52031 | 53273 |
| August | 33921 | 33895 | 37563 | 40880 | 46646 | 57071 | 58744 |
| September | 29202 | 30903 | 35274 | 39368 | 45027 | 54709 | 55393 |
| October | 34380 | 37299 | 40087 | 44684 | 49026 | 58701 | 62947 |
| November | 39132 | 43412 | 48239 | 54551 | 63309 | 74400 | 74187 |
| December | 62252 | 74401 | 84307 | 94653 | 106068 | 119907 | 124151 |

Table 3-7 Department Store Forecast and Actual Sales, 1981

| Month | Actual sales | Forecasted sales Xt+1 | Error | %Error |
|---|---|---|---|---|
| Jan | 54169.0 | 54309.4 | 140.4 | 0.26 |
| Feb | 54739.0 | 52566.0 | -2173.0 | -3.97 |
| Mar | 69447.0 | 65526.8 | -3920.2 | -5.64 |
| Apr | 69628.0 | 67644.1 | -1983.9 | -2.85 |
| May | 68359.0 | 68500.5 | 141.5 | 0.21 |
| Jun | 64448.0 | 63625.9 | -822.1 | -1.28 |
| Jul | 59743.0 | 59907.5 | 164.5 | 0.28 |
| Aug | 63727.0 | 63641.4 | -85.6 | -0.13 |
| Sep | 58453.0 | 59163.4 | 710.4 | 1.22 |
| Oct | 67251.0 | 65978.7 | -1272.3 | -1.89 |
| Nov | 78180.0 | 78506.6 | 326.6 | 0.42 |

Chapter 3 Time Series Forecasting of a Patterned Process May 12, 1992
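The arithmetic behind Tables 3-3 and 3-4 can be sketched in Python as a check on the hand calculations. The function names are hypothetical. The sketch divides by the unrounded yearly average, whereas Table 3-3 divides by the rounded value 129, so individual factors may differ slightly in the second decimal.

```python
# 1969 monthly sales in thousands, January through December (Table 3-3).
SALES_1969 = [110, 111, 115, 108, 125, 156, 152, 155, 121, 131, 116, 147]

# Monthly sales, July 1969 through June 1971 (column Y1 of Table 3-4).
SALES_JUL69_JUN71 = [
    152000, 155000, 121000, 131000, 116000, 147000,
    116000, 123000, 148000, 125000, 131000, 180000,
    188000, 217000, 135000, 149000, 123000, 162000,
    115000, 127000, 136000, 144000, 144000, 165000,
]


def seasonal_factors(year_sales):
    """Divide each month's sales by the average monthly sales for the year."""
    average = sum(year_sales) / len(year_sales)
    return [month / average for month in year_sales]


def trend_slope(sales):
    """Least-squares growth per period, with periods numbered 1, 2, ..., n.

    Uses b = sum(Y * (X - Xbar)) / sum((X - Xbar) ** 2), as in Table 3-4.
    """
    n = len(sales)
    periods = range(1, n + 1)
    x_bar = sum(periods) / n
    numerator = sum(y * (x - x_bar) for x, y in zip(periods, sales))
    denominator = sum((x - x_bar) ** 2 for x in periods)
    return numerator / denominator


if __name__ == "__main__":
    print([round(f, 2) for f in seasonal_factors(SALES_1969)])
    print(round(trend_slope(SALES_JUL69_JUN71), 2))
```

A seasonal factor from this sketch, multiplied by the average of the previous three months' sales, gives the kind of forecast shown in Table 3-2.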