Solving Kolmogoroff's Equation

advertisement

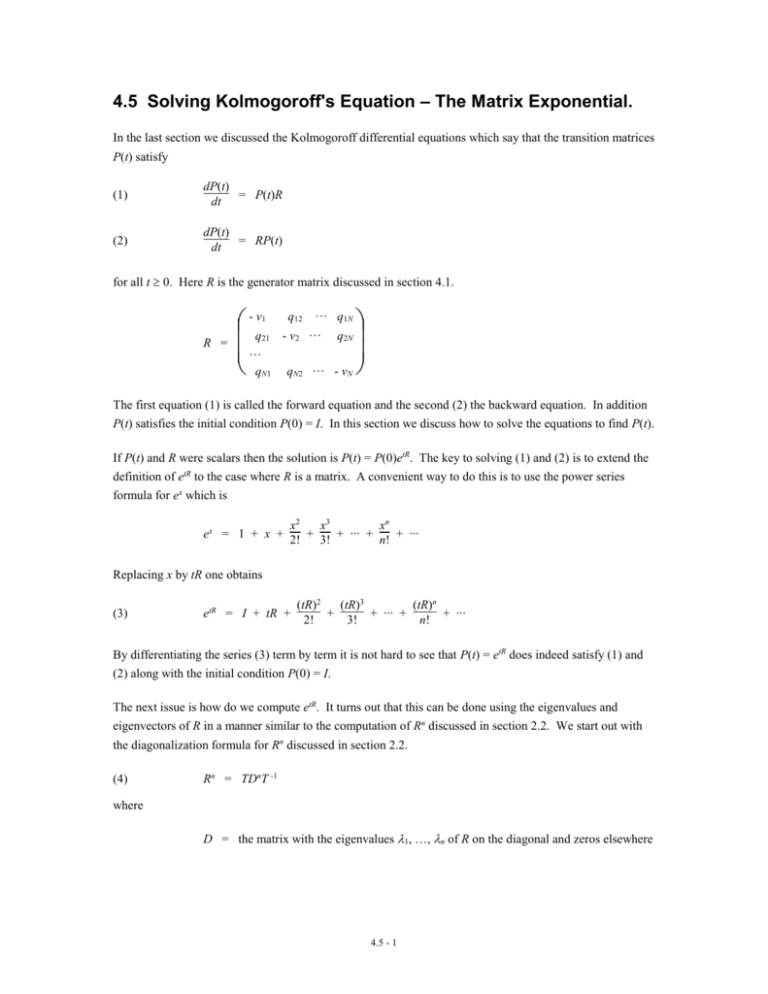

4.5 Solving Kolmogoroff's Equation – The Matrix Exponential.

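In the last section we discussed the Kolmogoroff differential equations, which say that the transition matrices $P(t)$ satisfy

$$\frac{dP(t)}{dt} = P(t)R \tag{1}$$

$$\frac{dP(t)}{dt} = RP(t) \tag{2}$$

for all $t \ge 0$. Here $R$ is the generator matrix discussed in section 4.1:

$$R = \begin{pmatrix} -v_1 & q_{12} & \cdots & q_{1N} \\ q_{21} & -v_2 & \cdots & q_{2N} \\ \vdots & \vdots & \ddots & \vdots \\ q_{N1} & q_{N2} & \cdots & -v_N \end{pmatrix}$$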

The first equation (1) is called the forward equation and the second (2) the backward equation. In addition, $P(t)$ satisfies the initial condition $P(0) = I$. In this section we discuss how to solve these equations to find $P(t)$.

If $P(t)$ and $R$ were scalars, the solution would be $P(t) = P(0)e^{tR}$. The key to solving (1) and (2) is to extend the definition of $e^{tR}$ to the case where $R$ is a matrix. A convenient way to do this is to use the power series for $e^x$, which is

$$e^x = 1 + x + \frac{x^2}{2!} + \frac{x^3}{3!} + \cdots + \frac{x^n}{n!} + \cdots$$

Replacing $x$ by $tR$ one obtains

$$e^{tR} = I + tR + \frac{(tR)^2}{2!} + \frac{(tR)^3}{3!} + \cdots + \frac{(tR)^n}{n!} + \cdots \tag{3}$$
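As a rough numerical illustration of (3), the sketch below sums the first terms of the series with NumPy. The helper name `expm_series` and the cutoff of 60 terms are arbitrary choices made for this illustration, and the matrix is the generator from Example 1 below. Summing the raw series is only dependable when $t$ times the size of the entries of $R$ is modest; for large $t$ the terms grow large before they shrink and round-off accumulates.

```python
import numpy as np

def expm_series(R, t, terms=60):
    """Approximate e^{tR} by the first `terms` terms of the power series (3)."""
    R = np.asarray(R, dtype=float)
    term = np.eye(R.shape[0])          # n = 0 term: the identity I
    total = term.copy()
    for n in range(1, terms):
        term = term @ (t * R) / n      # turns (tR)^(n-1)/(n-1)! into (tR)^n/n!
        total = total + term
    return total

# Generator of Example 1 (working = 1, broken = 2), rates per week.
R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])

P = expm_series(R, 0.1)
print(P)
print(P.sum(axis=1))   # rows of a transition matrix should sum to 1
```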

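By differentiating the series (3) term by term, it is not hard to see that $P(t) = e^{tR}$ does indeed satisfy (1) and (2) along with the initial condition $P(0) = I$. Indeed, the derivative of $t^nR^n/n!$ is $t^{n-1}R^n/(n-1)!$, so

$$\frac{d}{dt}e^{tR} = R + tR^2 + \frac{t^2R^3}{2!} + \cdots = e^{tR}R = Re^{tR},$$

where both factorizations hold because $R$ commutes with its own powers. Setting $t = 0$ in (3) leaves only the term $I$.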
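The term-by-term argument can be spot-checked numerically. The sketch below, assuming NumPy and a hypothetical truncated-series helper `expm_series`, approximates $dP/dt$ by a central difference and compares it with $P(t)R$ and $RP(t)$ for the generator of Example 1. The time `t` and step size `h` are arbitrary choices.

```python
import numpy as np

def expm_series(R, t, terms=60):
    """Truncated power series (3) for e^{tR} (illustrative helper)."""
    R = np.asarray(R, dtype=float)
    term = np.eye(R.shape[0])
    total = term.copy()
    for n in range(1, terms):
        term = term @ (t * R) / n
        total = total + term
    return total

R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])
t, h = 0.2, 1e-6

# Central-difference approximation of dP/dt at time t.
dP = (expm_series(R, t + h) - expm_series(R, t - h)) / (2 * h)
P = expm_series(R, t)

forward_err = np.max(np.abs(dP - P @ R))    # residual of the forward equation (1)
backward_err = np.max(np.abs(dP - R @ P))   # residual of the backward equation (2)
print(forward_err, backward_err)
```

Both residuals should be tiny, limited only by the finite-difference step.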

The next issue is how to compute $e^{tR}$. It turns out that this can be done using the eigenvalues and eigenvectors of $R$, in a manner similar to the computation of $R^n$ discussed in section 2.2. We start with the diagonalization formula for $R^n$ from section 2.2:

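$$R^n = TD^nT^{-1} \tag{4}$$

where (assuming $R$ has $N$ linearly independent eigenvectors)

$D$ = the matrix with the eigenvalues $\lambda_1, \ldots, \lambda_N$ of $R$ on the diagonal and zeros elsewhere,

$$D = \begin{pmatrix} \lambda_1 & 0 & \cdots & 0 \\ 0 & \lambda_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & \lambda_N \end{pmatrix}$$

$T$ = the matrix whose columns are the eigenvectors $v_1, \ldots, v_N$ of $R$,

$$v_1 = \begin{pmatrix} t_{11} \\ t_{21} \\ \vdots \\ t_{N1} \end{pmatrix}, \quad \ldots, \quad v_N = \begin{pmatrix} t_{1N} \\ t_{2N} \\ \vdots \\ t_{NN} \end{pmatrix}, \qquad T = \begin{pmatrix} t_{11} & t_{12} & \cdots & t_{1N} \\ t_{21} & t_{22} & \cdots & t_{2N} \\ \vdots & \vdots & \ddots & \vdots \\ t_{N1} & t_{N2} & \cdots & t_{NN} \end{pmatrix}$$

$D^n$ = the matrix with the $(\lambda_j)^n$ on the diagonal and zeros elsewhere,

$$D^n = \begin{pmatrix} (\lambda_1)^n & 0 & \cdots & 0 \\ 0 & (\lambda_2)^n & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & (\lambda_N)^n \end{pmatrix}$$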
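Formula (4) is easy to check numerically. This sketch uses NumPy's `np.linalg.eig`, which returns the eigenvalues and a matrix whose columns are eigenvectors. NumPy scales each eigenvector to unit length, so its $T$ differs from a hand-picked one by column scalings, which does not change $TD^nT^{-1}$. The generator of Example 1 and the power $n = 5$ are illustrative choices.

```python
import numpy as np

R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])
lam, T = np.linalg.eig(R)      # eigenvalues, and eigenvectors as columns of T
T_inv = np.linalg.inv(T)

n = 5
Rn_diag = T @ np.diag(lam ** n) @ T_inv      # T D^n T^{-1}
Rn_direct = np.linalg.matrix_power(R, n)     # R multiplied by itself n times
print(np.allclose(Rn_diag, Rn_direct))
```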

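If we substitute (4) into (3), using $(tR)^n = t^nR^n = Tt^nD^nT^{-1}$ and $I = TT^{-1}$, and factor out $T$ on the left and $T^{-1}$ on the right, we get

$$e^{tR} = T\left[I + tD + \frac{t^2D^2}{2!} + \frac{t^3D^3}{3!} + \cdots + \frac{t^nD^n}{n!} + \cdots\right]T^{-1}$$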

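It is not hard to see that

$$I + tD + \frac{t^2D^2}{2!} + \frac{t^3D^3}{3!} + \cdots + \frac{t^nD^n}{n!} + \cdots = \begin{pmatrix} s_1 & 0 & \cdots & 0 \\ 0 & s_2 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & s_N \end{pmatrix}$$

since each $D^n$ is diagonal with diagonal entries $(\lambda_j)^n$, so the series can be summed one diagonal entry at a time. Here

$$s_j = 1 + t\lambda_j + \frac{(t\lambda_j)^2}{2!} + \frac{(t\lambda_j)^3}{3!} + \cdots + \frac{(t\lambda_j)^n}{n!} + \cdots = e^{t\lambda_j}.$$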

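So

$$I + tD + \frac{(tD)^2}{2!} + \frac{(tD)^3}{3!} + \cdots + \frac{(tD)^n}{n!} + \cdots = e^{tD} = \begin{pmatrix} e^{t\lambda_1} & 0 & \cdots & 0 \\ 0 & e^{t\lambda_2} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & e^{t\lambda_N} \end{pmatrix}$$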
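A quick check of this step with NumPy: summing the series for a diagonal matrix gives the diagonal matrix of exponentials, and the off-diagonal entries stay exactly zero. The diagonal entries below are taken from Example 1 for concreteness, and the 60-term cutoff is an arbitrary choice.

```python
import numpy as np

lam = np.array([0.0, -11.0])   # diagonal entries lambda_j of D
t = 0.1
D = np.diag(lam)

term = np.eye(2)
series = np.eye(2)
for n in range(1, 60):
    term = term @ (t * D) / n   # (tD)^n / n!, still a diagonal matrix
    series = series + term

expected = np.diag(np.exp(t * lam))   # diag(e^{t lambda_1}, e^{t lambda_2})
print(np.allclose(series, expected))
```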

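So if $D$ is a diagonal matrix, then $e^{tD}$ is the diagonal matrix whose diagonal entries are the exponentials $e^{t\lambda_j}$ of the diagonal entries $\lambda_j$ of $D$. So

$$e^{tR} = Te^{tD}T^{-1} = T\begin{pmatrix} e^{t\lambda_1} & 0 & \cdots & 0 \\ 0 & e^{t\lambda_2} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & e^{t\lambda_N} \end{pmatrix}T^{-1}$$

This gives us a way to compute $e^{tR}$.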
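The recipe $e^{tR} = Te^{tD}T^{-1}$ translates almost line for line into NumPy. The sketch below assumes $R$ is diagonalizable (true for the examples in this section); `expm_eig` is a name chosen here for illustration. If $R$ has complex eigenvalues, the product is real up to round-off, so the sketch keeps only the real part. For general use, a library routine such as SciPy's `scipy.linalg.expm`, which does not rely on diagonalization, is the safer choice.

```python
import numpy as np

def expm_eig(R, t):
    """e^{tR} = T e^{tD} T^{-1}, assuming R has a full set of eigenvectors."""
    lam, T = np.linalg.eig(np.asarray(R, dtype=float))  # columns of T are eigenvectors
    etD = np.diag(np.exp(t * lam))                      # e^{tD}: exponentiate the diagonal
    return (T @ etD @ np.linalg.inv(T)).real            # drop round-off imaginary parts

R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])
print(expm_eig(R, 0.5))
```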

Example 1. We have a copier which at any time can be in either of two states, working = 1 or broken = 2. Let $X(t)$ be the random variable corresponding to the state of the copier at time $t$. Suppose the times between transitions from working to broken are exponential random variables with mean 1/2 week, so $q_{12} = 2$, and the times between transitions from broken to working are exponential random variables with mean 1/9 week, so $q_{21} = 9$. Suppose all these random variables along with $X(0)$ are independent, so that $X(t)$ is a Markov process. The generator matrix is

$$R = \begin{pmatrix} -2 & 2 \\ 9 & -9 \end{pmatrix}.$$

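To get the eigenvalues of $R$ we solve

$$0 = \det(R - \lambda I) = \begin{vmatrix} -2-\lambda & 2 \\ 9 & -9-\lambda \end{vmatrix} = (-2-\lambda)(-9-\lambda) - (2)(9) = \lambda^2 + 11\lambda = \lambda(\lambda + 11).$$

So the eigenvalues are

$$\lambda_1 = 0 \quad \text{and} \quad \lambda_2 = -11.$$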
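The characteristic polynomial and its roots can be checked with NumPy: `np.poly` applied to a square matrix returns the coefficients of $\det(\lambda I - R)$, highest power first, and `np.roots` finds its zeros. For a $2 \times 2$ matrix, $\det(\lambda I - R) = \det(R - \lambda I)$, so this is the same polynomial as above.

```python
import numpy as np

R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])
coeffs = np.poly(R)                     # approximately [1, 11, 0]: lambda^2 + 11 lambda
eigs = np.sort(np.roots(coeffs).real)   # approximately -11 and 0
print(coeffs, eigs)
```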

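In general, if $R$ is the generator matrix of a Markov process, then at least one eigenvalue is zero. This is because the rows of $R$ sum to zero, which implies

$$R\begin{pmatrix} 1 \\ 1 \end{pmatrix} = \begin{pmatrix} 0 \\ 0 \end{pmatrix},$$

so $v_1 = \begin{pmatrix} 1 \\ 1 \end{pmatrix}$ is an eigenvector for the eigenvalue $\lambda_1 = 0$ (for an $N \times N$ generator, the column of $N$ ones plays the same role). It turns out that the non-zero eigenvalues of a generator matrix are either negative or have negative real part. This is illustrated in this example by the fact that $\lambda_2 = -11$ is negative.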
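Both general facts can be spot-checked for particular generators. The sketch below (NumPy assumed; `check_generator` is an illustrative name) verifies that $R$ times the column of ones is zero and that no eigenvalue has positive real part, for the generator of this example and for the $3 \times 3$ generator of Example 2 below. A check on two matrices is an illustration, not a proof.

```python
import numpy as np

def check_generator(R):
    """Return (R @ ones is zero, eigenvalues of R) for a generator matrix R."""
    R = np.asarray(R, dtype=float)
    ones = np.ones(R.shape[0])
    return np.allclose(R @ ones, 0.0), np.linalg.eigvals(R)

examples = {
    "Example 1": [[-2.0, 2.0], [9.0, -9.0]],
    "Example 2": [[-0.2, 0.05, 0.15], [0.02, -0.5, 0.48], [0.48, 0.12, -0.6]],
}
results = {}
for name, R in examples.items():
    rows_ok, lam = check_generator(R)
    results[name] = (rows_ok, lam)
    print(name, rows_ok, np.round(lam.real, 6))
```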

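Thus we automatically have the eigenvector $v_1 = \begin{pmatrix} 1 \\ 1 \end{pmatrix}$ for the first eigenvalue $\lambda_1 = 0$. To get the eigenvector $v_2 = \begin{pmatrix} x \\ y \end{pmatrix}$ for the second eigenvalue $\lambda_2 = -11$ we solve

$$\begin{pmatrix} 0 \\ 0 \end{pmatrix} = (R - \lambda_2 I)v_2 = \begin{pmatrix} 9 & 2 \\ 9 & 2 \end{pmatrix}\begin{pmatrix} x \\ y \end{pmatrix} = \begin{pmatrix} 9x + 2y \\ 9x + 2y \end{pmatrix}$$

that is, $0 = 9x + 2y$. This equation is equivalent to $y = -9x/2$. So an eigenvector for $\lambda_2 = -11$ is any vector $\begin{pmatrix} x \\ y \end{pmatrix}$ with $y = -9x/2$, i.e. any multiple of the vector $\begin{pmatrix} 2 \\ -9 \end{pmatrix}$. So we can take $v_2 = \begin{pmatrix} 2 \\ -9 \end{pmatrix}$.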
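A direct check that $v_1$ and $v_2$ are eigenvectors: $Rv_1$ should be the zero vector and $Rv_2$ should equal $\lambda_2 v_2 = -11v_2$. Any nonzero multiple of $v_2$ passes the same test. NumPy is assumed.

```python
import numpy as np

R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])
v1 = np.array([1.0, 1.0])
v2 = np.array([2.0, -9.0])

print(R @ v1)   # zero vector, so v1 goes with eigenvalue 0
print(R @ v2)   # equals -11 * v2
```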

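So

$$P(t) = e^{tR} = \begin{pmatrix} 1 & 2 \\ 1 & -9 \end{pmatrix}\begin{pmatrix} e^{(0)t} & 0 \\ 0 & e^{-11t} \end{pmatrix}\begin{pmatrix} 1 & 2 \\ 1 & -9 \end{pmatrix}^{-1}$$

$$= \begin{pmatrix} 1 & 2 \\ 1 & -9 \end{pmatrix}\begin{pmatrix} 1 & 0 \\ 0 & e^{-11t} \end{pmatrix}\frac{1}{-11}\begin{pmatrix} -9 & -2 \\ -1 & 1 \end{pmatrix} = \frac{1}{11}\begin{pmatrix} 1 & 2e^{-11t} \\ 1 & -9e^{-11t} \end{pmatrix}\begin{pmatrix} 9 & 2 \\ 1 & -1 \end{pmatrix}$$

$$= \frac{1}{11}\begin{pmatrix} 9 + 2e^{-11t} & 2 - 2e^{-11t} \\ 9 - 9e^{-11t} & 2 + 9e^{-11t} \end{pmatrix}$$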
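The matrix algebra above can be checked numerically: with $T$ built from $v_1$ and $v_2$, and `np.linalg.inv` for $T^{-1}$, the product $T\,\mathrm{diag}(1, e^{-11t})\,T^{-1}$ should reproduce the closed-form $P(t)$ at any $t$. The helper names `P_diag` and `P_closed` and the sample times are illustrative choices.

```python
import numpy as np

T = np.array([[1.0, 2.0],
              [1.0, -9.0]])       # columns v1 = (1, 1) and v2 = (2, -9)
T_inv = np.linalg.inv(T)          # should equal (1/11) [[9, 2], [1, -1]]

def P_diag(t):
    """T diag(e^{0 t}, e^{-11 t}) T^{-1}."""
    return T @ np.diag([1.0, np.exp(-11 * t)]) @ T_inv

def P_closed(t):
    """Closed-form P(t) derived above."""
    e = np.exp(-11 * t)
    return np.array([[9 + 2 * e, 2 - 2 * e],
                     [9 - 9 * e, 2 + 9 * e]]) / 11

for t in (0.0, 0.05, 0.3):
    print(t, np.allclose(P_diag(t), P_closed(t)))
```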

Therefore, with $t$ measured in weeks,

Pr{working $t$ weeks from now | working now} = $\frac{9}{11} + \frac{2}{11}e^{-11t}$

Pr{broken $t$ weeks from now | working now} = $\frac{2}{11} - \frac{2}{11}e^{-11t}$

Pr{working $t$ weeks from now | broken now} = $\frac{9}{11} - \frac{9}{11}e^{-11t}$

Pr{broken $t$ weeks from now | broken now} = $\frac{2}{11} + \frac{9}{11}e^{-11t}$

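In general (when $R$ can be diagonalized), the entries of $P(t) = e^{tR}$ will be a constant plus a sum of multiples of $e^{t\lambda_j}$, where the $\lambda_j$ are the non-zero eigenvalues of the generator matrix $R$. The constant comes from the eigenvalue $\lambda_1 = 0$, since $e^{0t} = 1$.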
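For Example 1 the constant term is also the limit of $P(t)$ as $t \to \infty$, because $e^{-11t} \to 0$. The sketch below (NumPy assumed) checks that both rows approach $(9/11, 2/11)$ and that this row vector, called `pi` here for illustration, satisfies $\pi R = 0$. The sample time $t = 2$ weeks is an arbitrary choice.

```python
import numpy as np

R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])

def P_closed(t):
    """Closed-form P(t) for Example 1, t in weeks."""
    e = np.exp(-11 * t)
    return np.array([[9 + 2 * e, 2 - 2 * e],
                     [9 - 9 * e, 2 + 9 * e]]) / 11

pi = np.array([9 / 11, 2 / 11])   # the constant part of each row of P(t)
print(P_closed(2.0))              # e^{-22} is negligible, so both rows are close to pi
print(pi @ R)                     # approximately the zero row vector
```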

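To be more specific, suppose we want to know the probabilities two days from now. This is $t = 2/7$ weeks, so we put $t = 2/7$ in the formula for $P(t)$ above. We have

$$P\left(\frac{2}{7}\right) = \frac{1}{11}\begin{pmatrix} 9 + 2e^{-22/7} & 2 - 2e^{-22/7} \\ 9 - 9e^{-22/7} & 2 + 9e^{-22/7} \end{pmatrix} = \frac{1}{11}\begin{pmatrix} 9 + (2)(0.043) & 2 - (2)(0.043) \\ 9 - (9)(0.043) & 2 + (9)(0.043) \end{pmatrix} = \begin{pmatrix} 0.826 & 0.174 \\ 0.783 & 0.217 \end{pmatrix}$$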

For example, the probability that the copier will be broken two days from now if it is working now is 0.174.
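The arithmetic for $t = 2/7$ weeks can be reproduced in a few lines of NumPy; rounding to three decimals recovers the matrix above.

```python
import numpy as np

t = 2 / 7                    # two days, measured in weeks
e = np.exp(-11 * t)          # e^{-22/7}, about 0.043
P = np.array([[9 + 2 * e, 2 - 2 * e],
              [9 - 9 * e, 2 + 9 * e]]) / 11
print(np.round(P, 3))        # approximately [[0.826, 0.174], [0.783, 0.217]]
```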

Example 2. (See Example 1 of section 4.1.) The condition of an office copier at any time $t$ is either good = 1, poor = 2 or broken = 3. Suppose the generator matrix is

$$R = \begin{pmatrix} -0.2 & 0.05 & 0.15 \\ 0.02 & -0.5 & 0.48 \\ 0.48 & 0.12 & -0.6 \end{pmatrix}.$$

After some computation one obtains the characteristic equation

$$0 = \lambda^3 + 1.3\lambda^2 + 0.3894\lambda = \lambda(\lambda^2 + 1.3\lambda + 0.3894).$$
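The computation of the characteristic polynomial can be delegated to NumPy: `np.poly` returns the coefficients of $\det(\lambda I - R)$, highest power first. For a $3 \times 3$ matrix this is $-\det(R - \lambda I)$, which has the same roots, so the coefficients should be approximately $1, 1.3, 0.3894, 0$.

```python
import numpy as np

# Generator of Example 2 (states good, poor, broken in that order).
R = np.array([[-0.2, 0.05, 0.15],
              [0.02, -0.5, 0.48],
              [0.48, 0.12, -0.6]])
coeffs = np.poly(R)   # lambda^3 + 1.3 lambda^2 + 0.3894 lambda + (about 0)
print(np.round(coeffs, 6))
```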

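The solutions are $\lambda = 0$ and

$$\lambda = \frac{-1.3 \pm \sqrt{(1.3)^2 - (4)(1)(0.3894)}}{(2)(1)} = \frac{-1.3 \pm \sqrt{1.69 - 1.5576}}{2} = \frac{-1.3 \pm \sqrt{0.1324}}{2} = \frac{-1.3 \pm 0.363868}{2}$$

$$= \frac{-0.936132}{2} \ \text{and} \ \frac{-1.663868}{2} = -0.468066 \ \text{and} \ -0.831934.$$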

So the eigenvalues are

$$\lambda_1 = 0, \quad \lambda_2 = -0.468066 \quad \text{and} \quad \lambda_3 = -0.831934.$$
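The quadratic-formula arithmetic can be reproduced directly and compared with the eigenvalues NumPy computes from $R$ itself via `np.linalg.eigvals`.

```python
import numpy as np

b, c = 1.3, 0.3894                  # lambda^2 + b lambda + c = 0
disc = np.sqrt(b ** 2 - 4 * c)      # sqrt(0.1324), about 0.363868
lam2 = (-b + disc) / 2              # about -0.468066
lam3 = (-b - disc) / 2              # about -0.831934

R = np.array([[-0.2, 0.05, 0.15],
              [0.02, -0.5, 0.48],
              [0.48, 0.12, -0.6]])
numeric = np.sort(np.linalg.eigvals(R).real)   # ascending: lambda_3, lambda_2, about 0
print(lam2, lam3, numeric)
```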

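$v_1 = \begin{pmatrix} 1 \\ 1 \\ 1 \end{pmatrix}$ is automatically the eigenvector for the first eigenvalue $\lambda_1 = 0$. To get the eigenvector $v_2 = \begin{pmatrix} x \\ y \\ z \end{pmatrix}$ for the second eigenvalue $\lambda_2 = -0.468066$ we solve

$$\begin{pmatrix} 0 \\ 0 \\ 0 \end{pmatrix} = (R - \lambda_2 I)v_2 = \begin{pmatrix} 0.268066 & 0.05 & 0.15 \\ 0.02 & -0.0319341 & 0.48 \\ 0.48 & 0.12 & -0.131934 \end{pmatrix}\begin{pmatrix} x \\ y \\ z \end{pmatrix} = \begin{pmatrix} 0.268066x + 0.05y + 0.15z \\ 0.02x - 0.0319341y + 0.48z \\ 0.48x + 0.12y - 0.131934z \end{pmatrix}$$

that is,

$$\begin{aligned} 0.268066x + 0.05y + 0.15z &= 0 \\ 0.02x - 0.0319341y + 0.48z &= 0 \\ 0.48x + 0.12y - 0.131934z &= 0 \end{aligned}$$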

We know the three equations are dependent and only determine $x$, $y$ and $z$ up to a constant multiple. If we solve the first two equations for $y$ and $z$ in terms of $x$ we get

$$y = -4.3651x, \qquad z = -0.332074x.$$

So we can take $v_2 = \begin{pmatrix} 1 \\ -4.3651 \\ -0.332074 \end{pmatrix}$ as an eigenvector for $\lambda_2 = -0.468066$.

In a similar fashion we find that $v_3 = \begin{pmatrix} 1 \\ 11.6451 \\ -8.09459 \end{pmatrix}$ is an eigenvector for $\lambda_3 = -0.831934$.
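Fixing $x = 1$ turns the first two equations into a $2 \times 2$ linear system for $y$ and $z$. The sketch below does exactly that with `np.linalg.solve`; the helper name `eigvec_x1` is made up for this illustration, and it assumes that $2 \times 2$ system is nonsingular (which holds here).

```python
import numpy as np

R = np.array([[-0.2, 0.05, 0.15],
              [0.02, -0.5, 0.48],
              [0.48, 0.12, -0.6]])

def eigvec_x1(R, lam):
    """Eigenvector (1, y, z) for eigenvalue lam, from the first two rows of (R - lam I)v = 0."""
    A = R - lam * np.eye(3)
    # Move the x = 1 terms to the right-hand side and solve for (y, z).
    y, z = np.linalg.solve(A[:2, 1:], -A[:2, 0])
    return np.array([1.0, y, z])

disc = np.sqrt(1.3 ** 2 - 4 * 0.3894)
lam2, lam3 = (-1.3 + disc) / 2, (-1.3 - disc) / 2
v2 = eigvec_x1(R, lam2)   # about (1, -4.3651, -0.332074)
v3 = eigvec_x1(R, lam3)   # about (1, 11.6451, -8.09459)
print(v2, v3)
```

The third equation is not used, but because the equations are dependent it is satisfied automatically, which the check $Rv = \lambda v$ confirms.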

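So if we round off a bit one has

$$P(t) = e^{tR} = \begin{pmatrix} 1 & 1 & 1 \\ 1 & -4.37 & 11.65 \\ 1 & -0.33 & -8.09 \end{pmatrix}\begin{pmatrix} 1 & 0 & 0 \\ 0 & e^{-0.47t} & 0 \\ 0 & 0 & e^{-0.83t} \end{pmatrix}\begin{pmatrix} 1 & 1 & 1 \\ 1 & -4.37 & 11.65 \\ 1 & -0.33 & -8.09 \end{pmatrix}^{-1}$$

$$= \begin{pmatrix} 0.62 + 0.31e^{-0.47t} + 0.06e^{-0.83t} & 0.12 - 0.14e^{-0.47t} + 0.02e^{-0.83t} & 0.25 - 0.17e^{-0.47t} - 0.09e^{-0.83t} \\ 0.62 - 1.37e^{-0.47t} + 0.75e^{-0.83t} & 0.12 + 0.63e^{-0.47t} + 0.25e^{-0.83t} & 0.25 + 0.74e^{-0.47t} - 0.99e^{-0.83t} \\ 0.62 - 0.1e^{-0.47t} - 0.52e^{-0.83t} & 0.12 + 0.05e^{-0.47t} - 0.17e^{-0.83t} & 0.25 + 0.06e^{-0.47t} + 0.69e^{-0.83t} \end{pmatrix}$$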
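Each entry of $P(t)$ has the form constant $+\, a\,e^{\lambda_2 t} + b\,e^{\lambda_3 t}$, and the coefficient matrices are the outer products of column $k$ of $T$ with row $k$ of $T^{-1}$. The sketch below (NumPy assumed; `coef` and `P` are illustrative names) computes these matrices; rounded to two decimals they should match the entries above up to the rounding already present there. The outer products do not depend on how the eigenvectors are scaled, so NumPy's unit-length eigenvectors give the same result as the hand-picked ones.

```python
import numpy as np

R = np.array([[-0.2, 0.05, 0.15],
              [0.02, -0.5, 0.48],
              [0.48, 0.12, -0.6]])

lam, T = np.linalg.eig(R)
order = np.argsort(-lam.real)              # 0 first, then about -0.47, then about -0.83
lam, T = lam[order].real, T[:, order].real
T_inv = np.linalg.inv(T)

# P(t) = sum over k of e^{lam_k t} * outer(column k of T, row k of T^{-1})
coef = [np.outer(T[:, k], T_inv[k, :]) for k in range(3)]

def P(t):
    return sum(np.exp(lam[k] * t) * coef[k] for k in range(3))

for k in range(3):
    print(round(lam[k], 2), np.round(coef[k], 2), sep="\n")
```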

For example, the probability that the copier is broken 1.5 days from now given that it is in good condition now is $P(1.5)_{13} = 0.14601$.
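Using the eigenvalue method, sketched here with NumPy (`expm_eig` is an illustrative helper, not a library function), the value $P(1.5)_{13}$ can be recomputed. Python indexes from 0, so entry $(1,3)$ is `[0, 2]`.

```python
import numpy as np

def expm_eig(R, t):
    """e^{tR} = T e^{tD} T^{-1}, assuming R is diagonalizable."""
    lam, T = np.linalg.eig(R)
    return (T @ np.diag(np.exp(t * lam)) @ np.linalg.inv(T)).real

R = np.array([[-0.2, 0.05, 0.15],
              [0.02, -0.5, 0.48],
              [0.48, 0.12, -0.6]])
p13 = expm_eig(R, 1.5)[0, 2]   # good now -> broken 1.5 days from now
print(round(p13, 5))           # about 0.14601
```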

As an example of a more complicated question, what is the probability that the copier will be in poor condition in half a day and broken in two days, given that it is in good condition now? By the Markov property, the second factor below covers the remaining 1.5 days, so this is

$$P(0.5)_{12}\,P(1.5)_{23} = 0.00768491.$$
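The two-step probability is just a product of two entries of $P(t)$ at different times. A NumPy sketch with the same illustrative `expm_eig` helper (0-based indices, so good = 0, poor = 1, broken = 2):

```python
import numpy as np

def expm_eig(R, t):
    """e^{tR} = T e^{tD} T^{-1}, assuming R is diagonalizable."""
    lam, T = np.linalg.eig(R)
    return (T @ np.diag(np.exp(t * lam)) @ np.linalg.inv(T)).real

R = np.array([[-0.2, 0.05, 0.15],
              [0.02, -0.5, 0.48],
              [0.48, 0.12, -0.6]])
good, poor, broken = 0, 1, 2

# good -> poor over the first 0.5 day, then poor -> broken over the next 1.5 days
prob = expm_eig(R, 0.5)[good, poor] * expm_eig(R, 1.5)[poor, broken]
print(prob)   # about 0.00768
```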
