Steady State Probabilities

advertisement

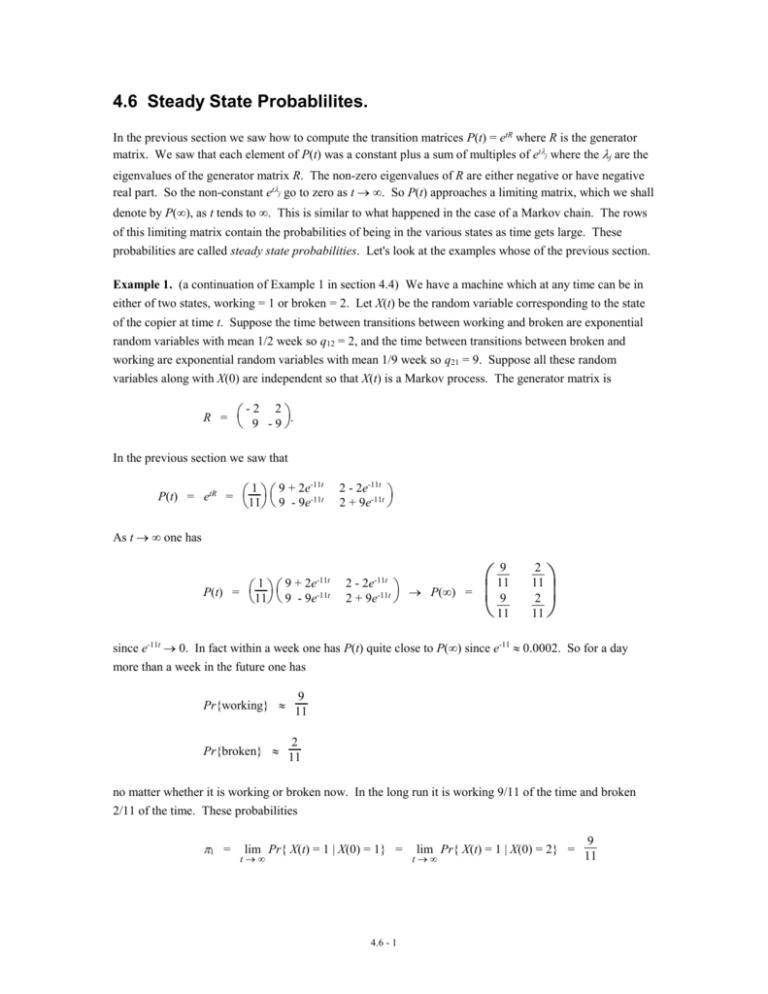

4.6 Steady State Probabilities

In the previous section we saw how to compute the transition matrices $P(t) = e^{tR}$, where $R$ is the generator matrix. We saw that each element of $P(t)$ is a constant plus a sum of multiples of $e^{t\lambda_j}$, where the $\lambda_j$ are the eigenvalues of the generator matrix $R$. The non-zero eigenvalues of $R$ are either negative or have negative real part, so the non-constant terms $e^{t\lambda_j}$ go to zero as $t \to \infty$. Hence $P(t)$ approaches a limiting matrix, which we shall denote by $P(\infty)$, as $t$ tends to $\infty$. This is similar to what happened in the case of a Markov chain. The rows of this limiting matrix contain the probabilities of being in the various states as time gets large. These probabilities are called steady state probabilities. Let's look at the examples of the previous section.

Example 1. (a continuation of Example 1 in section 4.4) We have a machine which at any time can be in either of two states, working = 1 or broken = 2. Let $X(t)$ be the random variable corresponding to the state of the machine at time $t$. Suppose the times from working until the transition to broken are exponential random variables with mean 1/2 week, so $q_{12} = 2$, and the times from broken until the transition to working are exponential random variables with mean 1/9 week, so $q_{21} = 9$. Suppose all these random variables along with $X(0)$ are independent, so that $X(t)$ is a Markov process. The generator matrix is

$$R = \begin{pmatrix} -2 & 2 \\ 9 & -9 \end{pmatrix}.$$

In the previous section we saw that

$$P(t) = e^{tR} = \frac{1}{11}\begin{pmatrix} 9 + 2e^{-11t} & 2 - 2e^{-11t} \\ 9 - 9e^{-11t} & 2 + 9e^{-11t} \end{pmatrix}.$$

As $t \to \infty$ one has

$$P(t) \to P(\infty) = \begin{pmatrix} 9/11 & 2/11 \\ 9/11 & 2/11 \end{pmatrix}$$

since $e^{-11t} \to 0$. In fact within a week $P(t)$ is quite close to $P(\infty)$, since $e^{-11} \approx 0.00002$. So for a day more than a week in the future one has

$$\Pr\{\text{working}\} \approx \frac{9}{11}, \qquad \Pr\{\text{broken}\} \approx \frac{2}{11}$$

no matter whether it is working or broken now. In the long run it is working 9/11 of the time and broken 2/11 of the time. These probabilities

$$\pi_1 = \lim_{t \to \infty} \Pr\{X(t) = 1 \mid X(0) = 1\} = \lim_{t \to \infty} \Pr\{X(t) = 1 \mid X(0) = 2\} = \frac{9}{11}$$

$$\pi_2 = \lim_{t \to \infty} \Pr\{X(t) = 2 \mid X(0) = 1\} = \lim_{t \to \infty} \Pr\{X(t) = 2 \mid X(0) = 2\} = \frac{2}{11}$$

are called the steady state probabilities of being in states 1 and 2. In many applications of Markov processes the steady state probabilities are the main items of interest, since one is interested in the long run behavior of the system. For the time being we shall assume the following about the generator matrix $R$.

(1) The eigenvalue zero is not repeated.

(2) The other eigenvalues are negative or have negative real part.
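
As a quick numerical sanity check, assumptions (1) and (2) can be tested for a given generator matrix by computing its eigenvalues. The sketch below does this with NumPy for the matrix $R$ of Example 1. The helper name `check_assumptions` and the tolerance `tol` are illustrative choices, not part of the text.

```python
import numpy as np

def check_assumptions(R, tol=1e-9):
    """Return (zero_not_repeated, others_negative) for a generator matrix R.

    tol is an assumed threshold below which an eigenvalue counts as zero.
    """
    eigvals = np.linalg.eigvals(np.asarray(R, dtype=float))
    is_zero = np.abs(eigvals) < tol
    # Assumption (1): exactly one eigenvalue equals zero.
    zero_not_repeated = int(is_zero.sum()) == 1
    # Assumption (2): every other eigenvalue has negative real part.
    others_negative = bool(np.all(eigvals[~is_zero].real < 0))
    return zero_not_repeated, others_negative

# Generator matrix of Example 1 (working = 1, broken = 2).
R1 = np.array([[-2.0, 2.0],
               [9.0, -9.0]])

print("eigenvalues:", np.sort(np.linalg.eigvals(R1).real))
print("assumptions (1), (2) hold:", check_assumptions(R1))
```

For Example 1 the eigenvalues are 0 and $-11$, so both assumptions hold.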

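Under these assumptions,

$$P(t) = e^{tR} = Te^{tD}T^{-1} = T\begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & e^{t\lambda_2} & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & e^{t\lambda_n} \end{pmatrix}T^{-1}$$

approaches, as $t \to \infty$,

$$P(\infty) = T\begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}T^{-1} = \begin{pmatrix} 1 & t_{12} & \cdots & t_{1n} \\ 1 & t_{22} & \cdots & t_{2n} \\ \vdots & \vdots & & \vdots \\ 1 & t_{n2} & \cdots & t_{nn} \end{pmatrix}\begin{pmatrix} 1 & 0 & \cdots & 0 \\ 0 & 0 & \cdots & 0 \\ \vdots & \vdots & \ddots & \vdots \\ 0 & 0 & \cdots & 0 \end{pmatrix}\begin{pmatrix} \pi_1 & \pi_2 & \cdots & \pi_n \\ \sigma_{21} & \sigma_{22} & \cdots & \sigma_{2n} \\ \vdots & \vdots & & \vdots \\ \sigma_{n1} & \sigma_{n2} & \cdots & \sigma_{nn} \end{pmatrix}$$

$$= \begin{pmatrix} 1 & 0 & \cdots & 0 \\ 1 & 0 & \cdots & 0 \\ \vdots & \vdots & & \vdots \\ 1 & 0 & \cdots & 0 \end{pmatrix}\begin{pmatrix} \pi_1 & \pi_2 & \cdots & \pi_n \\ \sigma_{21} & \sigma_{22} & \cdots & \sigma_{2n} \\ \vdots & \vdots & & \vdots \\ \sigma_{n1} & \sigma_{n2} & \cdots & \sigma_{nn} \end{pmatrix}$$

where we let

$$T^{-1} = \begin{pmatrix} \pi_1 & \pi_2 & \cdots & \pi_n \\ \sigma_{21} & \sigma_{22} & \cdots & \sigma_{2n} \\ \vdots & \vdots & & \vdots \\ \sigma_{n1} & \sigma_{n2} & \cdots & \sigma_{nn} \end{pmatrix}.$$

Here the first column of $T$ is the eigenvector of $R$ for the eigenvalue 0, which can be taken to be the vector of all 1's because each row of $R$ sums to zero. So

$$P(\infty) = \begin{pmatrix} \pi_1 & \pi_2 & \cdots & \pi_n \\ \pi_1 & \pi_2 & \cdots & \pi_n \\ \vdots & \vdots & & \vdots \\ \pi_1 & \pi_2 & \cdots & \pi_n \end{pmatrix}.$$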

So the rows of $P(\infty)$ are all equal to the vector $\pi$, which is the first row of $T^{-1}$ and hence a left eigenvector of $R$ with eigenvalue 0. In other words

(3) $\pi R = 0$

where

$$\pi = (\pi_1, \pi_2, \ldots, \pi_n).$$

So the rows of $P(\infty)$ are vectors $\pi$ which are solutions of the equation $\pi R = 0$ and which are probability vectors, i.e. the sum of the entries of $\pi$ equals 1. The entries of $\pi$ are called steady state probabilities.
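
The factorization $P(t) = Te^{tD}T^{-1}$ can be tried out numerically. The following is a minimal sketch using NumPy's `numpy.linalg.eig`, assuming $R$ is diagonalizable and satisfies assumptions (1) and (2). The function names `transition_matrix` and `limiting_matrix` and the tolerance `tol` are hypothetical names chosen for this illustration. NumPy scales the eigenvectors differently from the text (the first column of $T$ need not be all 1's), but the product $T \cdot \mathrm{diag} \cdot T^{-1}$ does not depend on that scaling.

```python
import numpy as np

def transition_matrix(R, t):
    """P(t) = T exp(tD) T^{-1}, assuming R is diagonalizable."""
    lam, T = np.linalg.eig(np.asarray(R, dtype=float))
    P = T @ np.diag(np.exp(t * lam)) @ np.linalg.inv(T)
    return P.real  # imaginary parts cancel for a real matrix R

def limiting_matrix(R, tol=1e-9):
    """P(infinity): keep the factor 1 for eigenvalue 0, replace the others by 0."""
    lam, T = np.linalg.eig(np.asarray(R, dtype=float))
    D_inf = np.diag((np.abs(lam) < tol).astype(float))
    return (T @ D_inf @ np.linalg.inv(T)).real

# Generator matrix of Example 1.
R1 = np.array([[-2.0, 2.0],
               [9.0, -9.0]])

P_inf = limiting_matrix(R1)
print("P(infinity) =")
print(P_inf)
for t in (0.1, 0.5, 1.0):
    gap = np.max(np.abs(transition_matrix(R1, t) - P_inf))
    print(f"t = {t}: largest entry of |P(t) - P(infinity)| = {gap:.2e}")
```

Both rows of the computed limit agree with $(9/11, 2/11)$ from Example 1, and the gap shrinks like $e^{-11t}$.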

Another way to see that the rows of $P(\infty)$ satisfy (3) is to start with the Kolmogoroff equation

$$\frac{dP(t)}{dt} = P(t)R$$

and let $t \to \infty$. Since $P(t) \to P(\infty)$ one has $\frac{dP(t)}{dt} \to P(\infty)R$. However, the only way this can be consistent with $P(t)$ converging is for $\frac{dP(t)}{dt} \to 0$. So $P(\infty)R = 0$. Since the $i$th row of $P(\infty)R$ is the $i$th row of $P(\infty)$ times $R$, we see that the rows of $P(\infty)$ satisfy (3).
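
The argument can be illustrated with the closed form of $P(t)$ from Example 1. The sketch below, which assumes that closed form and uses illustrative helper names (`P`, `derivative_size`, `finite_difference`), compares a finite-difference estimate of $dP/dt$ with $P(t)R$ and shows the size of $P(t)R$ shrinking as $t$ grows.

```python
import numpy as np

# Generator matrix of Example 1.
R = np.array([[-2.0, 2.0],
              [9.0, -9.0]])

def P(t):
    """Closed form of P(t) = e^{tR} for Example 1."""
    e = np.exp(-11.0 * t)
    return np.array([[9 + 2 * e, 2 - 2 * e],
                     [9 - 9 * e, 2 + 9 * e]]) / 11.0

def derivative_size(t):
    """Largest entry (in absolute value) of P(t) R, i.e. of dP/dt."""
    return float(np.max(np.abs(P(t) @ R)))

def finite_difference(t, h=1e-6):
    """Central-difference estimate of dP/dt (h is an assumed step size)."""
    return (P(t + h) - P(t - h)) / (2 * h)

# The Kolmogoroff equation dP/dt = P(t) R, checked at one time point.
print(np.allclose(finite_difference(0.3), P(0.3) @ R, atol=1e-6))

# dP/dt = P(t) R tends to 0, which forces P(infinity) R = 0.
for t in (0.0, 0.25, 0.5, 1.0, 2.0):
    print(f"t = {t:4.2f}   max |P(t) R| = {derivative_size(t):.2e}")
```

For this example $P(t)R = e^{-11t}R$, so the largest entry of the derivative is $9e^{-11t}$.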

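Example 1 (continued). Find the steady state vector $\pi = (\pi_1, \pi_2)$ in Example 1.

Since $\pi R = 0$ we get

$$(\pi_1, \pi_2)\begin{pmatrix} -2 & 2 \\ 9 & -9 \end{pmatrix} = (0, 0).$$

Therefore

$$-2\pi_1 + 9\pi_2 = 0$$

$$2\pi_1 - 9\pi_2 = 0$$

Both equations say the same thing, namely $\pi_2 = 2\pi_1/9$. In order to find $\pi_1$ and $\pi_2$ we need to use the fact that $\pi_1 + \pi_2 = 1$. Combining this with $\pi_2 = 2\pi_1/9$ gives $\pi_1 + 2\pi_1/9 = 1$, or $11\pi_1/9 = 1$, or $\pi_1 = 9/11$ and $\pi_2 = 2/11$.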
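
The same calculation can be done numerically by replacing one of the dependent equations in $\pi R = 0$ with the normalization $\pi_1 + \cdots + \pi_n = 1$. The sketch below is one way to set this up with NumPy; the function name `steady_state` is an illustrative choice, and the approach assumes the eigenvalue zero of $R$ is not repeated, so that the resulting linear system has a unique solution.

```python
import numpy as np

def steady_state(R):
    """Solve pi R = 0 together with sum(pi) = 1.

    Assumes the eigenvalue 0 of R is not repeated, so the system below
    is non-singular.
    """
    R = np.asarray(R, dtype=float)
    n = R.shape[0]
    A = R.T.copy()        # pi R = 0 is the same as R^T pi^T = 0
    A[-1, :] = 1.0        # replace the last (redundant) equation by sum(pi) = 1
    b = np.zeros(n)
    b[-1] = 1.0
    return np.linalg.solve(A, b)

# Generator matrix of Example 1.
R1 = np.array([[-2.0, 2.0],
               [9.0, -9.0]])

pi = steady_state(R1)
print("pi =", pi)
print("pi R =", pi @ R1)   # should be (numerically) the zero vector
```

For Example 1 this solves $-2\pi_1 + 9\pi_2 = 0$ together with $\pi_1 + \pi_2 = 1$, which is exactly the hand calculation above.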

Example 2. (see Example 1 of section 4.1) The condition of an office copier at any time $t$ is either good = 1, poor = 2 or broken = 3. Suppose the generator matrix is

$$R = \begin{pmatrix} -0.2 & 0.05 & 0.15 \\ 0.02 & -0.5 & 0.48 \\ 0.48 & 0.12 & -0.6 \end{pmatrix}.$$

The equation $\pi R = 0$ is

$$-0.2\pi_1 + 0.02\pi_2 + 0.48\pi_3 = 0$$

$$0.05\pi_1 - 0.5\pi_2 + 0.12\pi_3 = 0$$

$$0.15\pi_1 + 0.48\pi_2 - 0.6\pi_3 = 0$$

These equations are dependent, and when we solve them we get $\pi_1 = 404\pi_3/165$ and $\pi_2 = 16\pi_3/33$. Using $\pi_1 + \pi_2 + \pi_3 = 1$ we get $404\pi_3/165 + 16\pi_3/33 + \pi_3 = 1$. Thus $649\pi_3/165 = 1$, or $\pi_3 = 165/649 \approx 0.254$, and $\pi_1 = 404/649 \approx 0.622$ and $\pi_2 = 80/649 \approx 0.123$.
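
To reproduce the exact fractions in Example 2, the equations can be solved in rational arithmetic. The following sketch uses Python's standard `fractions.Fraction` and a small Gauss-Jordan elimination; the function name `steady_state_exact` is hypothetical, and the code assumes the system has a unique solution (eigenvalue zero not repeated). The matrix entries are passed as strings so that decimals such as 0.05 are converted exactly.

```python
from fractions import Fraction

def steady_state_exact(R):
    """Solve pi R = 0, sum(pi) = 1 exactly by Gauss-Jordan elimination."""
    n = len(R)
    # Rows 0..n-2: the equations of pi R = 0 coming from columns 0..n-2 of R.
    # The equation from the last column is dependent on these and is dropped.
    A = [[Fraction(R[i][j]) for i in range(n)] + [Fraction(0)]
         for j in range(n - 1)]
    # Last row: the normalization pi_1 + ... + pi_n = 1.
    A.append([Fraction(1)] * n + [Fraction(1)])
    for col in range(n):
        # Find a row with a non-zero entry in this column and move it up.
        pivot = next(r for r in range(col, n) if A[r][col] != 0)
        A[col], A[pivot] = A[pivot], A[col]
        # Scale the pivot row so the pivot entry becomes 1.
        p = A[col][col]
        A[col] = [x / p for x in A[col]]
        # Eliminate this column from every other row.
        for r in range(n):
            if r != col and A[r][col] != 0:
                f = A[r][col]
                A[r] = [x - f * y for x, y in zip(A[r], A[col])]
    return [A[i][n] for i in range(n)]

# Generator matrix of Example 2 (good = 1, poor = 2, broken = 3).
R2 = [["-0.2", "0.05", "0.15"],
      ["0.02", "-0.5", "0.48"],
      ["0.48", "0.12", "-0.6"]]

pi = steady_state_exact(R2)
print([str(p) for p in pi])
print([round(float(p), 3) for p in pi])
```

`Fraction` reduces to lowest terms, so $\pi_3$ is reported as 15/59, which equals 165/649.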
