kunaltech

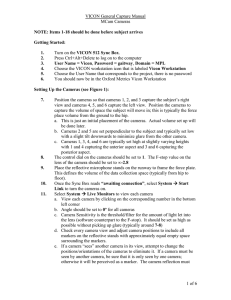


Kunal Amarnani
L02 – Dr. Smith
Helicopter Group

Use of Motion Capture in an Autonomous Aerial Vehicle

Introduction

Motion capture "involves measuring an object's position and orientation in physical space, then recording that information in a computer-usable form" [1]. In practice, several cameras record the movement of objects, and the objects' motion is translated into a 3D virtual environment. This paper focuses on the use of motion capture cameras to record the movement of an aerial vehicle in a large room. The data received can then be used to control the movement of the vehicle.

Commercial Applications of Autonomous Vehicles

The demand for autonomous aerial vehicles (such as helicopters) has increased over the past few years, as they have become an important part of military exercises. The use of Unmanned Aerial Vehicles (UAVs) reduces the risk to human life, especially during scouting missions. UAVs have been used successfully in the Iraq war and in the Katrina rescue effort. However, implementing and coordinating multiple UAVs at the same time remains difficult. Using a motion capture system to control UAVs in a controlled environment will enable researchers to explore multivehicle coordination much further [2].

Technology behind Motion Capture Cameras

In order to capture the movement of the vehicle, it must first be outfitted with reflective dots. The cameras can then follow these dots and triangulate the position of each dot in the virtual 3D environment. Each camera consists of a video camera, a strobe head unit, a lens, and an optical filter. The strobe head unit emits a distinct wavelength of light that is reflected by the surface dots located on the vehicle. The light is emitted as a bright flash that is timed to coincide with the opening of the camera's shutter. This reduces the interference from outside sources of light.
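The triangulation step mentioned above can be illustrated with a minimal sketch. It assumes that each camera has already been calibrated, so that a detected dot can be expressed as a ray from the camera's position toward the dot; the function name `triangulate_midpoint` and its parameters are hypothetical and are not part of any commercial system's software. With two such rays, one simple estimate of the dot's position is the midpoint of the shortest segment joining them.

```python
from typing import Sequence, Tuple

Vec3 = Tuple[float, float, float]


def _sub(a: Sequence[float], b: Sequence[float]) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def _dot(a: Sequence[float], b: Sequence[float]) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def _along(origin: Sequence[float], direction: Sequence[float], t: float) -> Vec3:
    # Point reached by travelling t units of `direction` from `origin`.
    return (origin[0] + t * direction[0],
            origin[1] + t * direction[1],
            origin[2] + t * direction[2])


def triangulate_midpoint(origin_a: Vec3, dir_a: Vec3,
                         origin_b: Vec3, dir_b: Vec3) -> Vec3:
    """Estimate a dot's 3D position from two camera rays (hypothetical helper).

    Each ray starts at a camera position and points toward the dot.
    The result is the midpoint of the shortest segment between the rays.
    """
    w = _sub(origin_a, origin_b)
    a = _dot(dir_a, dir_a)
    b = _dot(dir_a, dir_b)
    c = _dot(dir_b, dir_b)
    d = _dot(dir_a, w)
    e = _dot(dir_b, w)
    denom = a * c - b * b
    if abs(denom) < 1e-12:
        # Parallel rays never converge, so no position can be recovered.
        raise ValueError("rays are parallel; the dot cannot be triangulated")
    t_a = (b * e - c * d) / denom  # distance parameter along ray A
    t_b = (a * e - b * d) / denom  # distance parameter along ray B
    p_a = _along(origin_a, dir_a, t_a)
    p_b = _along(origin_b, dir_b, t_b)
    return ((p_a[0] + p_b[0]) / 2, (p_a[1] + p_b[1]) / 2, (p_a[2] + p_b[2]) / 2)


# Two cameras 4 m apart, both looking at a dot located at (2, 1, 3).
estimate = triangulate_midpoint((0.0, 0.0, 0.0), (2.0, 1.0, 3.0),
                                (4.0, 0.0, 0.0), (-2.0, 1.0, 3.0))
```

With real measurements the two rays rarely intersect exactly, which is why the midpoint is used here rather than an exact intersection; a system with more than two cameras would typically combine all rays in a least-squares fit instead.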
The lens captures the light reflected off the surface dots and forms a focused image on the camera's sensor plane. To remove wavelengths of light other than those emitted by the LEDs in the strobe head unit, each camera has an optical filter. The filter passes only the band of wavelengths emitted by the strobe head unit (e.g. near-infrared or infrared light). In order to obtain an accurate representation of the vehicle in a 3D environment, several cameras are needed to triangulate the relative position of each dot in the room. These cameras must be evenly spaced around the room in order to maximize their efficiency.

Implementing the Motion Capture System

The system is very hard to build from scratch, as it requires a high-resolution camera with a very fast shutter speed as well as several infrared-emitting diodes that are precisely synchronized with the shutter. However, several commercial systems are readily available for purchase, including the VICON MX. That system comes with several high-resolution cameras, all the hardware controllers required to link the cameras to the computer, and the software required to analyze the data. Once the motion capture system has been set up and the relative position of the vehicle in the room has been found, the computer can move the vehicle autonomously using its current position and its required destination.

[1] Scott Dyer, Jeff Martin, John Zulauf, "Motion Capture White Paper," [Online Document], 12 Dec 1995, Available: http://reality.sgi.com/employees/jamsb/mocap/MoCapWP_v2.0.html
[2] J. How, "Raven: Testbed for autonomous UAVs," Aero-Astro, No. 4, 2006-2007
[3] Maureen Furniss, "Motion Capture," [Online Document], 19 Dec 1999, Available: http://web.mit.edu/comm-forum/papers/furniss.html
[4] Vicon MX Hardware System Reference, Rev 1.6, Vicon, Oxford, UK, 2007
[5] Vicon Products Glossary for Vicon MX and V-series systems, Vicon, Oxford, UK, 2007
[6] Vicon MX [Online]. Available: http://www.vicon.com/products/viconmx.html
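
As an illustration of the final step described under Implementing the Motion Capture System, the following minimal sketch shows one way a computer could turn the vehicle's current position and its required destination into a movement command. The paper does not specify a control law; a simple proportional rule with a speed limit is assumed here, and the function name `velocity_command` and all parameter values are hypothetical.

```python
import math
from typing import Tuple

Vec3 = Tuple[float, float, float]


def velocity_command(current: Vec3, target: Vec3,
                     gain: float = 0.8,
                     max_speed: float = 1.0,
                     tolerance: float = 0.05) -> Vec3:
    """Return a velocity (m/s) that steers the vehicle toward the target.

    Assumed proportional rule: speed grows with distance to the target,
    is capped at max_speed, and drops to zero inside the tolerance radius.
    """
    error = (target[0] - current[0],
             target[1] - current[1],
             target[2] - current[2])
    distance = math.sqrt(sum(e * e for e in error))
    if distance <= tolerance:
        return (0.0, 0.0, 0.0)  # close enough: hover in place
    speed = min(gain * distance, max_speed)
    # Scale the unit direction vector by the chosen speed.
    return (speed * error[0] / distance,
            speed * error[1] / distance,
            speed * error[2] / distance)


# Position reported by the motion capture system, and the desired destination.
command = velocity_command(current=(0.0, 0.0, 1.0), target=(3.0, 4.0, 1.0))
```

In a running system this function would be called every time the motion capture software reports a new position, so that the command is continually corrected as the vehicle moves.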