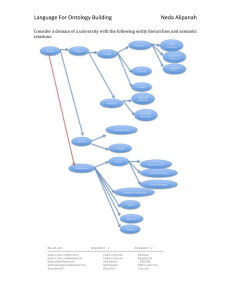

HY-566 Semantic Web

Ontology Learning

Μπαλάφα Κασσιανή
Πλασταρά Κατερίνα

Table of contents
1. Introduction
2. Data sources for ontology learning
3. Ontology Learning Process
4. Architecture
5. Methods for learning ontologies
6. Ontology learning tools
7. Uses/applications of ontology learning
8. Conclusion
9. References

1. Introduction

1.1 Ontologies

Ontologies serve as a means for establishing a conceptually concise basis for communicating knowledge for many purposes. In recent years, we have seen a surge of interest in the discovery and automatic creation of complex, multi-relational knowledge structures.

Unlike knowledge bases, ontologies have “all in one”:
o formal or machine-readable representation
o full and explicitly described vocabulary
o full model of some domain
o consensus knowledge: common understanding of a domain
o easy to share and reuse

1.2 Ontology learning

General

The main task of ontology learning is to learn complicated domain ontologies automatically; this task is usually carried out by humans alone. Ontology learning applies knowledge discovery techniques to different data sources (HTML, documents, dictionaries, free text, legacy ontologies etc.) in order to support the task of engineering and maintaining ontologies. In other words, it is the machine learning of ontologies.

Technical description

The manual building of ontologies is a tedious task, which can easily result in a knowledge acquisition bottleneck. In addition, modeling by hand by a human expert is biased, error-prone and expensive. Fully automatic machine knowledge acquisition remains in the distant future. Most systems are semi-automatic and require human (expert) intervention and balanced cooperative modeling for constructing ontologies.

Semantic Integration

The conceptual structures that define an underlying ontology provide the key to machine-processable data on the Semantic Web.
Ontologies serve as metadata schemas, providing a controlled vocabulary of concepts, each with explicitly defined and machine-processable semantics. Hence, the Semantic Web’s success and proliferation depend on constructing domain-specific ontologies quickly and cheaply. Although ontology-engineering tools have matured over the last decade, manual ontology acquisition remains a tedious, cumbersome task that can easily result in a knowledge acquisition bottleneck. Intelligent support tools for an ontology engineer take on a different meaning than the integration architectures for more conventional knowledge acquisition. The figures below show how ontology learning is involved in semantic integration.

Figure: Semantic Information Integration
Figure: Ontology Alignment and Transformations
Figure: Ontology Engineering

2. Data sources for ontology learning

2.1 Natural languages

Natural language texts exhibit morphological, syntactic, semantic, pragmatic and conceptual constraints that interact in order to convey a particular meaning to the reader. Thus, the text transports information to the reader, and the reader embeds this information into his background knowledge. Through the understanding of the text, data is associated with conceptual structures, and new conceptual structures are learned from the interacting constraints given through language. Tools that learn ontologies from natural language exploit the interacting constraints on the various language levels (from morphology to pragmatics and background knowledge) in order to discover new concepts and stipulate relationships between concepts.

2.1.2 Example

An example of extracting semantic information from natural text in the form of an ontology is a methodology developed at Leipzig University, Germany. This approach focuses on applying statistical analysis of large corpora to the problem of extracting semantic relations from unstructured text.
It is a viable method for generating input for the construction of ontologies, as ontologies use well-defined semantic relations as building blocks. The method’s purpose is to create classes of terms (collocation sets) and to postprocess these statistically generated collocation sets in order to extract named relations. In addition, for different types of relations, such as cohyponyms or instance-of relations, different extraction methods as well as additional sources of information can be applied to the basic collocation sets in order to verify the existence of a specific type of semantic relation for a given set of terms.

The first step of this approach is to collect large amounts of unstructured text, which will be processed in the following steps.

The next step is to create the collocation sets, i.e. the classes of similar terms. The occurrence of two or more words within a well-defined unit of information (sentence, document) is called a collocation. For the selection of meaningful and significant collocations, an adequate collocation measure is defined based on probabilistic similarity metrics. To calculate the collocation measure for any reasonable pair of terms, the joint occurrences of each pair are counted. This problem is complex both in time and storage. Nevertheless, the collocation measure is calculated for any pair with a total frequency of at least 3 for each component. The counting is based on extensible ternary search trees, where a count can be associated with a pair of word numbers. The memory overhead of the original implementation could be reduced by allocating the space for chunks of 100,000 nodes at once. Even when using this technique on a large-memory computer, more than one run through the corpus may be necessary, taking care that every pair is counted only once. The resulting word pairs above a threshold of significance are put into a database where they can be accessed and grouped in many different ways.
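The counting step described above can be sketched as follows. This is a minimal illustration, not the Leipzig implementation: it uses a plain dictionary instead of ternary search trees, treats each sentence as the unit of information, and scores pairs with pointwise mutual information (PMI), which is only an assumed stand-in for the collocation measure that the text leaves unspecified. The function name and the `min_freq` parameter are hypothetical.

```python
import math
from collections import Counter
from itertools import combinations


def collocation_scores(sentences, min_freq=3):
    """Score word pairs that co-occur within a sentence.

    sentences: list of token lists, one list per sentence.
    min_freq: mirrors the cut-off of 3 per component mentioned in the text.
    The score is PMI, an assumption made for illustration only.
    """
    word_freq = Counter()
    pair_freq = Counter()
    for tokens in sentences:
        unique = sorted(set(tokens))               # each word counts once per sentence
        word_freq.update(unique)
        pair_freq.update(combinations(unique, 2))  # each pair counts once per sentence

    n = len(sentences)
    scores = {}
    for (a, b), joint in pair_freq.items():
        # Skip pairs in which either word is too rare to give a stable estimate.
        if word_freq[a] < min_freq or word_freq[b] < min_freq:
            continue
        scores[(a, b)] = math.log2(joint * n / (word_freq[a] * word_freq[b]))
    return scores
```

Pairs are stored with their words in alphabetical order, so each pair has a single key. Keeping only the pairs whose score exceeds a chosen significance threshold would give the collocation sets.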
Apart from the textual output of collocation sets, they can also be visualized as graphs. The procedure is as follows: a word is chosen and its collocates are arranged in the plane so that collocations between collocates are taken into account. This results in graphs that show homogeneity where words are interconnected and separation where collocates have little in common. Polysemy is made visible (see figure below). Line thickness represents the significance of the collocation. All words in the graph are linked to the central word; the rest of the picture is automatically computed, but represents semantic connectedness as well. The relations between the words are only presented, not yet named. The figure shows the collocation graph for space. Three different meaning contexts can be recognized in the graph:
o real estate,
o computer hardware, and
o astronautics.
The connection between address and memory results from the fact that address is another polysemous concept.

Figure: Collocation graph for space

The final step is to identify the relations between terms or collocation sets. The collocation sets are searched, and some semantic relations appear more often than others. The following basic types of relations can be identified:
o cohyponymy,
o top-level syntactic relations, which translate to semantic ‘actor-verb’ relations and frequently used properties of a noun,
o instance-of,
o special relations given by multiwords (A prep/det/conj B), and
o unstructured sets of words describing some subject area.
These types of relations may be classified according to the properties symmetry, anti-symmetry, and transitivity.

Additional relations between collocation sets can be identified with the user’s contribution, such as:
o Pattern-based extraction (user defined), e.g. (profession) ? (last name) implies that ? is in fact a first name.
o Compound nouns. A semantic relation between the parts of a compound word can be found in most cases.
Term properties are derived in similar ways. A combination of the results of each of the steps described above forms the ontology of terms included in the original text. The output of this approach may be used for the automatic generation of semantic relations between terms in order to fill and expand ontology hierarchies.

2.2 Ontology Learning from Semi-structured Data

With the success of new standards for document publishing on the web, there will be a proliferation of semi-structured data and of formal descriptions of semi-structured data, freely and widely available. HTML data, XML data, XML Document Type Definitions (DTDs), XML Schemata and the like add more or less expressive semantic information to documents. A number of approaches understand ontologies as a common generalizing level that may mediate between the various data types and data descriptions. Ontologies play a major role in allowing semantic access to these vast resources of semi-structured data. Though only a few approaches exist yet, we believe that learning ontologies from these data and data descriptions may considerably leverage the application of ontologies and, thus, facilitate access to these data.

2.2.1 Example

An example of learning ontologies from both unstructured and semi-structured text is the DODDLE system. This approach, which was implemented at Shizuoka University, Japan, describes how to construct domain ontologies with taxonomic and non-taxonomic conceptual relationships by exploiting a machine-readable dictionary and domain-specific texts. The taxonomic relationships come from WordNet (an online lexical database for the English language) in interaction with a domain expert, using the following two strategies: match result analysis and trimmed result analysis. The non-taxonomic relationships come from domain-specific texts through the analysis of lexical co-occurrence statistics.
The DODDLE (Domain Ontology Rapid Development Environment) system consists of two components: the taxonomic relationship acquisition module, which uses WordNet, and the non-taxonomic relationship learning module, which uses domain-specific texts. An overview of the system and its components is depicted in Figure 1.

Figure 1: DODDLE overview

Taxonomic relationship acquisition module

The taxonomic relationship acquisition module performs a spell match between the input domain terms and WordNet. The spell match links these terms to WordNet. Thus the initial model derived from the spell match results is a hierarchically structured set of all the nodes on the paths from these terms to the root of WordNet. However, the initial model contains unnecessary internal terms (nodes) that do not contribute to keeping the topological relationships among matched nodes, such as the parent-child relationship and the sibling relationship. These unnecessary internal nodes can therefore be trimmed from the initial model, yielding a trimmed model, as shown in the process of Figure 2.

Figure 2: Trimming process

In order to refine the trimmed model, two strategies are applied in interaction with a user: match result analysis and trimmed result analysis.

o Match result analysis: Looking at the trimmed model, it turns out that it is divided into PABs (PAths including only Best spell-matched nodes) and STMs (Sub-Trees that include best spell-matched nodes and other nodes and so should be Moved), based on the distribution of best-matched nodes. On the one hand, a PAB is a path that includes only best-matched nodes that make sense for the given domain. Because all nodes in PABs have already been adjusted to the domain, PABs can stay where they are in the trimmed model. On the other hand, an STM is a sub-tree whose root is an internal node and whose subordinates are only best-matched nodes. Because internal nodes have not been confirmed to make sense for the given domain, an STM can be moved within the trimmed model.
Thus DODDLE identifies PABs and STMs in the trimmed model automatically and then supports a user in constructing a conceptual hierarchy by moving STMs. Figure 3 illustrates the match result analysis.

Figure 3: Match Result Analysis

o Trimmed result analysis: In order to refine the trimmed model, DODDLE uses trimmed result analysis as well as match result analysis. For sibling nodes with the same parent node, the number of nodes trimmed between each of them and the parent may differ considerably. When such a big difference appears in a sub-tree of the trimmed model, it may be better to change the structure of the sub-tree. The system asks the user whether the sub-tree should be reconstructed or not. Figure 4 illustrates the trimmed result analysis.

Figure 4: Trimmed Result Analysis

Finally, DODDLE II completes the taxonomic relationships of the input domain terms with additional manual modifications from the user.

Non-taxonomic relationship learning module

Non-taxonomic relationship learning is based mostly on WordSpace, a multi-dimensional vector space (a set of vectors) that derives lexical co-occurrence information from a large text corpus. The inner product between two word vectors serves as the measure of their semantic relatedness. When the inner product of two words exceeds some upper bound, they are candidates for having some non-taxonomic relationship between them. WordSpace is constructed as shown in Figure 5.

Figure 5: Construction Flow of WordSpace

The main steps of the WordSpace construction process are: extraction of high-frequency 4-grams, construction of the collocation matrix, construction of context vectors, construction of word vectors and construction of vector representations of all concepts.

After these two main, parallel modules have finished, all the resulting concepts are compared for similarity.
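The relatedness test based on the inner product can be sketched as follows, assuming word vectors are already available as plain lists of numbers. The sample vectors, the function names and the `threshold` value are illustrative assumptions and are not taken from DODDLE or WordSpace.

```python
from itertools import combinations


def inner_product(u, v):
    """Inner product of two equal-length vectors."""
    return sum(x * y for x, y in zip(u, v))


def related_pairs(word_vectors, threshold):
    """Return word pairs whose inner product exceeds the threshold.

    word_vectors: dict mapping a word to its vector (hypothetical input format).
    """
    pairs = []
    # Sorting the items gives a deterministic, alphabetical pair order.
    for (w1, v1), (w2, v2) in combinations(sorted(word_vectors.items()), 2):
        if inner_product(v1, v2) > threshold:
            pairs.append((w1, w2))
    return pairs


# Toy vectors: "buyer" and "seller" share contexts, "goods" does not.
vectors = {"buyer": [1, 0, 1], "seller": [1, 0, 1], "goods": [0, 1, 0]}
candidates = related_pairs(vectors, threshold=1)
```

With these toy vectors only the pair ("buyer", "seller") passes the threshold; in practice the bound would have to be tuned to the corpus.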
The user defines a certain threshold for this similarity, and a concept pair whose similarity exceeds it is extracted as a similar concept pair. A set of similar concept pairs becomes a concept specification template. Both kinds of concept pairs, those whose meaning is similar (with a taxonomic relation) and those that are relevant to each other (with a non-taxonomic relation), are extracted together as concept pairs with context similarity. However, by combining taxonomic information from the taxonomic relationship acquisition (TRA) module with co-occurrence information, DODDLE marks the concept pairs that are hierarchically closer to each other than the other pairs as TAXONOMY. A user constructs a domain ontology by considering the relation of each concept pair in the concept specification templates and by deleting unnecessary concept pairs. Figure 6 shows the ontology editor (left window) and the concept graph editor (right window).

Figure 6: The ontology editor

In order to evaluate how DODDLE performs in practice, case studies have been carried out on a particular law, the Contracts for the International Sale of Goods (CISG). Although this case study was small in scale, the results were encouraging.

2.3 Ontology Learning from Structured Data

Ontologies have been firmly established as a means for mediating between different databases. Nevertheless, the manual creation of a mediating ontology is again a tedious, often extremely difficult task that may be facilitated through learning methods. The negotiation of a common ontology from a set of data and the evolution of ontologies through the observation of data are active research topics. The same applies to the learning of ontologies from metadata, such as database schemata, in order to derive a common high-level abstraction of the underlying data descriptions, an important precondition for data warehousing or intelligent information agents.

3. Ontology Learning Process

A general framework of the ontology learning process is shown in the figure below.

Figure: The ontology learning process

The basic steps in the engineering cycle are:
o Merging existing structures or defining mapping rules between these structures allows importing and reusing existing ontologies. (For instance, Cyc’s ontological structures have been used to construct a domain-specific ontology.)
o Ontology extraction models major parts of the target ontology, with learning support fed from Web documents.
o The target ontology’s rough outline, which results from import, reuse, and extraction, is pruned to better fit the ontology to its primary purpose.
o Ontology refinement profits from the pruned ontology but completes the ontology at a fine granularity (in contrast to extraction).
o The target application serves as a measure for validating the resulting ontology.
Finally, the ontology engineer can begin this cycle again, for example to include new domains in the constructed ontology or to maintain and update its scope.

3.1 Ontology learning process example

A variation of the ontology learning process described in the previous section was implemented in a user-centered system for ontology construction called Adaptiva, developed at the University of Sheffield (UK). In this approach, the user selects a corpus of texts and sketches a preliminary ontology (or selects an existing one) for a domain, with a preliminary vocabulary associated with the elements in the ontology (lexicalisations). Examples of sentences involving such lexicalisations (e.g. of the ISA relation) in the corpus are automatically retrieved by the system. Retrieved examples are then validated by the user and used by an adaptive Information Extraction system to generate patterns that discover other lexicalisations of the same objects in the ontology, possibly identifying new concepts or relations. New instances are added to the existing ontology or used to tune it.
This process is repeated until a satisfactory ontology is obtained. The process consists of three stages: bootstrapping, pattern learning and user validation, and cleanup.

o Bootstrapping. The bootstrapping process involves the user specifying a corpus of texts and a seed ontology. The draft ontology must be associated with a small thesaurus of words, i.e. the user must indicate at least one term that lexicalises each concept in the hierarchy.

o Pattern Learning & User Validation. Words in the thesaurus are used by the system to retrieve a first set of examples of the lexicalisation of the relations among concepts in the corpus. These are then presented to the user for validation. The learner then uses the positive examples to induce generic patterns able to discriminate between them and the negative ones. Patterns are generalised in order to find new (positive) examples of the same relation in the corpus. These are presented to the user for validation, and user feedback is used to refine the patterns or to derive additional ones. The process terminates when the user feels that the system has learned to spot the target relations correctly. The final patterns are then applied to the whole corpus, and the ontology is presented to the user for cleanup.

o Cleanup. This step helps the user make the ontology developed by the system coherent. First, users can visualize the results and edit the ontologies directly. They may want to collapse nodes, establish that two nodes are not separate concepts but synonyms, split nodes or change the hierarchical position of nodes with respect to each other. Also, the user may wish to 1) add further relations to a specific node; 2) ask the learner to find all relations between two given nodes; 3) refine or label relations discovered between given nodes. Corrections are returned to the IE system for retraining.
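The first retrieval pass that links bootstrapping to pattern learning can be sketched as follows. This is only an illustration under simple assumptions: the thesaurus is a dictionary from concept names to single-word seed terms, matching is a case-insensitive whole-word lookup, and a sentence counts as a candidate example when it mentions a seed term of both concepts. All names are hypothetical and do not come from Adaptiva.

```python
import re


def candidate_examples(sentences, thesaurus, concept_a, concept_b):
    """Return the sentences that mention a seed term of both concepts.

    sentences: list of raw sentence strings from the corpus.
    thesaurus: dict mapping a concept name to its single-word seed terms.
    The returned sentences would then be shown to the user for validation.
    """
    def mentions(sentence, terms):
        words = set(re.findall(r"\w+", sentence.lower()))
        return any(term.lower() in words for term in terms)

    return [
        s for s in sentences
        if mentions(s, thesaurus[concept_a]) and mentions(s, thesaurus[concept_b])
    ]


corpus = [
    "A sparrow is a small bird.",
    "The bird flew away.",
    "Sparrows are common.",
]
seed_thesaurus = {"Sparrow": ["sparrow"], "Bird": ["bird"]}
examples = candidate_examples(corpus, seed_thesaurus, "Sparrow", "Bird")
```

Exact word matching misses inflected forms such as "Sparrows"; a real system would presumably normalise words first, and the validated examples would then feed the pattern learner.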
This methodology focuses the expensive user activity on sketching the initial ontology and on validating textual examples and the final ontology, while the system performs the tedious activity of searching a large corpus for knowledge discovery. Moreover, the output of the process is not only an ontology, but also a system trained to rebuild and eventually retune the ontology, as the learner adapts by means of the user feedback. This simplifies ontology maintenance, a major problem in ontology-based methodologies.

4. Architecture

The general architecture of the ontology learning process is shown in the following figure.

Figure: Ontology learning architecture for the Semantic Web

The ontology engineer interacts only via the graphical interfaces, which comprise two of the four components: the Ontology Engineering Workbench and the Management Component. Resource Processing and the Algorithm Library are the architecture’s remaining components. These components are described below.

Ontology Engineering Workbench

This component is a sophisticated means for manual modeling and refining of the final ontology. The ontology engineer can browse the ontology resulting from the ontology learning process and decide to follow, delete or modify the proposals as the task requires.

Management component graphical user interface

The ontology engineer uses the management component to select input data, that is, relevant resources such as HTML and XML documents, DTDs, databases, or existing ontologies that the discovery process can further exploit. Then, using the management component, the engineer chooses from a set of resource-processing methods available in the resource-processing component and from a set of algorithms available in the algorithm library. The management component also supports the engineer in discovering task-relevant legacy data; for example, an ontology-based crawler gathers HTML documents that are relevant to a given core ontology.
Resource processing

Depending on the available input data, the engineer can choose various strategies for resource processing:
o Index and reduce HTML documents to free text.
o Transform semistructured documents, such as dictionaries, into a predefined relational structure.
o Handle semistructured and structured schema data (such as DTDs, structured database schemata, and existing ontologies) by following different strategies for import, as described later in this article.
o Process free natural text.
After first preprocessing data according to one of these or similar strategies, the resource-processing module transforms the data into an algorithm-specific relational representation.

Algorithm library

An ontology can be described by a number of sets of concepts, relations, lexical entries, and links between these entities. An existing ontology definition can be acquired using various algorithms that work on this definition and the preprocessed input data. Although specific algorithms can vary greatly from one type of input to the next, a considerable overlap exists for underlying learning approaches such as association rules, formal concept analysis, or clustering. Hence, algorithms can be reused from the library for acquiring different parts of the ontology definition.

5. Methods for learning ontologies

Some methodologies used in the ontology learning process are described in the following sections.

5.1 Association Rules

A basic method that is used in many ontology learning systems is the use of association rules for ontology extraction. Association-rule-learning algorithms are used for prototypical applications of data mining and for finding associations that occur between items in order to construct ontologies (extraction stage). ‘Classes’ are expressed by the expert as a free text conclusion to a rule.
Relations between these ‘classes’ may be discovered from existing knowledge bases, and a model of the classes (an ontology) is constructed based on user-selected patterns in the class relations. This approach is useful for solving classification problems by creating classification taxonomies (ontologies) from rules.

A classification knowledge-based system using this method, with experimental results based on medical data, was implemented at the University of New South Wales, Australia. In this approach, Ripple Down Rules (RDR) were used to describe classes and their attributes. The form of RDR rules is shown in the following figure, which represents some rules for the class "Satisfactory lipid profile previous raised LDL noted". The first rule contains the condition Max(LDL) > 3.4 and the second rule contains the condition Max(LDL) is HIGH, where HIGH is a range between two real numbers.

Figure: An example of a class which is a disjunction of two rules

The conclusions of the rules form the classes of the classification ontology. When the system makes an error, the expert using this methodology can specify the correct conclusion and identify the attributes and values that justify this conclusion.

The method applied in this approach includes three basic steps:
o The first step is to discover class relations between rules. In this stage, three basic relations are taken into account:
1. Subsumption/intersection: a class A subsumes/intersects with a class B if class A always occurs when class B occurs, but not the other way around.
2. Mutual exclusivity: two classes are mutually exclusive if they never occur together.
3. Similarity: two classes are similar if they have similar conditions in the rules they come from.
Based on these relations, the first classes of rule conclusions are formed.
o The second step is to specify some compound relations that appear interesting, using the three basic relations. This step is performed in interaction with the expert.
o The final step is to extract instances of these compound relations or patterns and assemble them into a class model (ontology).

The key idea in this technique is that it seems reasonable to use heuristic quantitative measures to group classes and class relations. This then enables possible ontologies to be explored on a reasonable scale.

5.2 Clustering

Learning semantic classes

In the context of learning semantic classes, learning from syntactic contexts exploits syntactic relations among words to derive semantic relations, following Harris’ hypothesis. According to this hypothesis, the study of syntactic regularities within a specialized corpus makes it possible to identify syntactic schemata made out of combinations of word classes reflecting specific domain knowledge. Using specialized corpora eases the learning task, given that we have to deal with a limited vocabulary with reduced polysemy and limited syntactic variability.

In syntactic approaches, learning results can be of different types, depending on the method employed. They can be distances that reflect the degree of similarity among terms, distance-based term classes elaborated with the help of nearest-neighbor methods, degrees of membership in term classes, class hierarchies formed by conceptual clustering, or predicative schemata that use concepts to constrain selection. The notion of distance is fundamental in all cases, as it allows calculating the degree of proximity between two objects (terms, in this case) as a function of the degree of similarity between the syntactic contexts in which they appear. Classes built by aggregation of near terms can afterwards be used for different applications, such as syntactic disambiguation or document retrieval. Distances are, however, calculated using the same similarity notion in all cases, and our model relies on these studies regardless of the application task.
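As an illustration of a distance between terms derived from shared syntactic contexts, the sketch below represents each term by counts of the (verb, role) contexts in which it occurs and compares terms with cosine similarity. Cosine is an assumed choice made for illustration, since the text does not name a specific measure, and the sample counts are invented.

```python
import math
from collections import Counter


def cosine_similarity(contexts_a, contexts_b):
    """Cosine similarity between two mappings from context to count."""
    shared = set(contexts_a) & set(contexts_b)
    dot = sum(contexts_a[c] * contexts_b[c] for c in shared)
    norm_a = math.sqrt(sum(v * v for v in contexts_a.values()))
    norm_b = math.sqrt(sum(v * v for v in contexts_b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def context_distance(contexts_a, contexts_b):
    """Distance between two terms: small when their contexts overlap."""
    return 1.0 - cosine_similarity(contexts_a, contexts_b)


# Invented counts of (verb, syntactic role) contexts for three terms.
carrot = Counter({("cook", "object"): 2, ("eat", "object"): 1})
potato = Counter({("cook", "object"): 2, ("eat", "object"): 1})
car = Counter({("drive", "object"): 3})
```

Here `context_distance(carrot, potato)` is close to zero because the two terms share all their contexts, while `context_distance(carrot, car)` is 1.0 because they share none.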
Conceptual clustering

Ontologies are organized as multiple hierarchies that form an acyclic graph where nodes are term categories described by intention, and links represent inclusion. Learning through hierarchical classification of a set of objects can be performed in two main ways: top-down, by incremental specialization of classes, and bottom-up, by incremental generalization. The bottom-up approach is preferable because of its smaller algorithmic complexity and its understandability to the user in view of an interactive validation task.

The Mo’K workbench

The Mo’K workbench supports the development of conceptual clustering methods for the (semi-)automatic construction of ontologies of the conceptual hierarchy type from parsed corpora. The learning model it proposes takes parsed corpora as input. No additional (terminological or semantic) knowledge is used for labeling the input, guiding learning or validating the learning results. Preliminary experiments showed that the quality of learning decreases with the generality of the corpus. This makes the use of general ontologies for guiding such learning somewhat unrealistic, as they seem too incomplete and polysemous to allow for efficient learning in specific domains.

5.3 Ontology Learning with Information Extraction Rules

The figure below illustrates the overall idea of building ontologies with learned information extraction rules. We start with:
1. An initial, hand-crafted seed ontology of reasonable quality which already contains the relevant types of relationships between ontology concepts in the given domain.
2. An initial set of documents which exemplarily represent (informally) substantial parts of the knowledge represented formally in the seed ontology.

We then take the pairs of (ontological statement, one or more textual representations) as positive examples of the way in which specific ontological statements can be reflected in texts.
There are two possibilities to extract such examples:
o Based on the seed ontology, the system looks up the signature of a certain relation R, searches for all occurrences of instances of the concept classes Disease and Cure, respectively, within a certain maximum distance, and regards these co-occurrences as positive examples for relationship R. This approach presupposes that the seed documents have some “definitional” character, like domain-specific lexica or textbooks.
o The user goes through the seed documents with a marker and manually highlights all interesting passages as instances of some relationship. This approach is more work-intensive, but promises faster learning and more precise results. We have already employed this approach successfully in an industrial information extraction project.

Next, a pattern learning algorithm is employed to automatically construct information extraction rules which abstract from the specific examples, thus creating general statements about which text patterns are evidence for a certain ontological relationship. In order to learn such information extraction rules, we need some prerequisites:
(a) A sufficiently detailed representation of documents (in particular, including word positions, which is not usual in conventional, vector-based learning algorithms, WordNet synsets, and part-of-speech tagging).
(b) A sufficiently powerful representation formalism for extraction patterns.
(c) A learning algorithm which has direct access to background knowledge sources, like the already available seed ontology containing statements about known concept instances, or like the WordNet database of lexical knowledge linking words to their synonym sets, giving access to sub- and superclasses of synonym sets, etc.

Finally, these learned information extraction rules are applied to other, new text documents to discover new or not yet formalized instances of relationship R in the given application domain.
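The first, automatic way of collecting positive examples can be sketched as follows. The sketch assumes a tokenised document, sets of known instances for the two concept classes in the signature of the relation, and a `max_distance` measured in tokens. All names and the sample data are hypothetical and are not taken from the FRODO project.

```python
def positive_examples(tokens, instances_a, instances_b, max_distance):
    """Find co-occurrences of instances of two concept classes.

    tokens: list of words of one document.
    instances_a, instances_b: lowercase instance names of the two classes.
    Returns (position_a, position_b) pairs that lie within max_distance tokens.
    """
    positions_a = [i for i, t in enumerate(tokens) if t.lower() in instances_a]
    positions_b = [i for i, t in enumerate(tokens) if t.lower() in instances_b]
    return [
        (i, j)
        for i in positions_a
        for j in positions_b
        if abs(i - j) <= max_distance
    ]


text = "Aspirin relieves headache . Doctors often prescribe insulin for diabetes"
doc_tokens = text.split()
diseases = {"headache", "diabetes"}   # assumed instances of class Disease
cures = {"aspirin", "insulin"}        # assumed instances of class Cure
found = positive_examples(doc_tokens, diseases, cures, max_distance=2)
```

With a window of two tokens, the sketch pairs "headache" with "Aspirin" and "diabetes" with "insulin" and ignores the distant combinations. A wider window would also return unrelated pairs, which is why this approach works best on documents with a definitional character.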
Compared to other ontology learning approaches, this technique is not restricted to learning taxonomic relationships, but can learn arbitrary relationships in an application domain.

A project that uses this technique is the FRODO ("A Framework for Distributed Organizational Memories") project, which is about methods and tools for building and maintaining distributed Organizational Memories in a real-world enterprise environment. It is funded by the German National Ministry for Research and Education and started with five scientific researchers in January 2000.

6. Ontology learning tools

6.1 TEXT-TO-ONTO

TEXT-TO-ONTO supports semi-automatic ontology learning from text. It tries to overcome the knowledge acquisition bottleneck. It is based on a general architecture for discovering conceptual structures and engineering ontologies from text.

Architecture

The process of semi-automatic ontology learning from text is embedded in an architecture that comprises several core features described as a kind of pipeline. The main components of the architecture are the following.

Text & Processing Management Component

The ontology engineer uses this component to select the domain texts exploited in the further discovery process. The engineer can choose among a set of text (pre)processing methods available on the Text Processing Server and among a set of algorithms available in the Learning & Discovering component. The former module returns text annotated with XML, and this XML-tagged text is fed to the Learning & Discovering component.

Text Processing Server

It contains a shallow text processor based on the core system SMES (Saarbrücken Message Extraction System). SMES is a system that performs syntactic analysis on natural language documents. It is organized in modules, such as a tokenizer, morphological and lexical processing, and chunk parsing, that use lexical resources to produce mixed syntactic/semantic information. The results of text processing are stored in annotations using XML-tagged text.
Lexical DB & Domain Lexicon
SMES accesses a lexical database with more than 120,000 stem entries and more than 12,000 subcategorization frames that are used for lexical analysis and chunk parsing. The domain-specific part of the lexicon associates word stems with concepts available in the concept taxonomy and links syntactic information with semantic knowledge that may be further refined in the ontology.

Learning & Discovering component
It applies various discovery methods to the annotated texts, e.g. term extraction methods for concept acquisition.

Ontology Engineering Environment (ONTOEDIT)
It supports the ontology engineer in semi-automatically adding newly discovered conceptual structures to the ontology. Internally, it stores modeled ontologies using an XML serialization.

6.2 ASIUM

ASIUM overview
ASIUM is an acronym for “Acquisition of Semantic knowledge Using Machine learning method”. The main aim of ASIUM is to help the expert acquire semantic knowledge from texts and generalize the knowledge of the corpus. It also provides the expert with a user interface that includes tools and functionality for exploring the texts and then learning knowledge that is not explicitly stated in the texts.
During the learning step, ASIUM helps the expert acquire semantic knowledge from the texts, namely subcategorization frames and an ontology. The ontology is represented as an acyclic graph of the concepts of the studied domain. The subcategorization frames represent the use of the verbs in these texts. For example, starting from cooking recipe texts, ASIUM should learn an ontology with the concepts "Recipients" (i.e. containers), "Vegetables" and "Meat". In parallel, it can also learn the subcategorization frame of the verb "to cook", which can be:

    to cook:
        Object: Vegetable or Meat
        in: Recipients

Methodology
The overall methodology implemented by ASIUM is depicted in the following figure. The input to ASIUM consists of syntactically parsed texts from a specific domain.
ASIUM then extracts triplets of the following form: verb, preposition (or syntactic function if there is no preposition), lemmatized head noun of the complement. Next, using factorization, ASIUM groups together all the head nouns occurring with the same couple (verb, preposition/function). These lists of nouns are called basic clusters. They are linked to the couples (verb, preposition/function) they come from. ASIUM then computes the similarity between all pairs of basic clusters. The nearest ones are aggregated, and this aggregation is suggested to the expert for creating a new concept. The expert defines a minimum threshold for gathering classes into concepts. The distance computation alone is not enough to learn the concepts of a domain. The help of the expert is necessary because any learned concept can contain noise (mistakes in the parsing, for example), some sub-concepts may not be identified, or over-generalization may occur due to aggregations. Once similarity has been computed between all basic clusters, the expert validates the list of classes learned by ASIUM. After this, ASIUM has learned the first level of the ontology. Similarity is then computed again, this time among all the clusters, both the old and the new ones, in order to learn the next level of the ontology.
The advantages of this method are twofold. First, the similarity measure identifies all concepts of the domain, and the expert can validate or split them. The learning process is then partly based on these new concepts and suggests more relevant and more general concepts. Second, the similarity measure offers the expert aggregations between already validated concepts and new basic clusters in order to get more knowledge from the corpus.
The cooperative process runs until no more aggregations are possible. The outputs of the process are the subcategorization frames and the ontology schema.

Figure: The ASIUM methodology

SYLEX

The preprocessing of the free text is performed by Sylex.
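Looking back at the clustering steps of the methodology, the factorization into basic clusters and one round of aggregation suggestions can be sketched in Python as follows. This is a simplified illustration under stated assumptions: Jaccard overlap is used as a stand-in for ASIUM's own similarity measure, the triplets are invented, and expert validation is not modeled.

```python
from collections import defaultdict
from itertools import combinations


def basic_clusters(triplets):
    """Factorization: group head nouns by their (verb, preposition/function) couple."""
    clusters = defaultdict(set)
    for verb, slot, noun in triplets:
        clusters[(verb, slot)].add(noun)
    return dict(clusters)


def jaccard(a, b):
    """Share of nouns two clusters have in common (stand-in similarity measure)."""
    return len(a & b) / len(a | b)


def suggest_aggregations(clusters, threshold):
    """Propose cluster pairs whose similarity reaches the expert-defined threshold."""
    suggestions = []
    for (key1, nouns1), (key2, nouns2) in combinations(clusters.items(), 2):
        score = jaccard(nouns1, nouns2)
        if score >= threshold:
            # The union of both noun lists is the candidate concept.
            suggestions.append((key1, key2, nouns1 | nouns2, score))
    # Nearest (most similar) pairs first.
    return sorted(suggestions, key=lambda s: s[3], reverse=True)


# Hypothetical triplets: (verb, preposition or function, lemmatized head noun).
TRIPLETS = [
    ("cook", "object", "carrot"), ("cook", "object", "beef"),
    ("cook", "in", "pan"), ("cook", "in", "pot"),
    ("peel", "object", "carrot"), ("peel", "object", "potato"),
    ("fry", "object", "beef"), ("fry", "object", "carrot"),
    ("fry", "in", "pan"),
]
CLUSTERS = basic_clusters(TRIPLETS)
SUGGESTIONS = suggest_aggregations(CLUSTERS, threshold=0.5)
for key1, key2, nouns, score in SUGGESTIONS:
    print(key1, key2, sorted(nouns), round(score, 2))
```

In the cooperative process, each suggestion would be shown to the expert, who accepts, rejects, or splits it before the next level is computed over old and new clusters together.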
Sylex, the syntactic parser of the French company Ingénia, is used to parse source texts in French or English. This parser is a toolbox of about 700 functions that have to be combined in order to produce results. In ASIUM, the attachments between the head nouns of complements and the verbs, as well as the bounds, are retrieved from the full syntactic parsing performed by Sylex. The file format that ASIUM uses to read the parse is the following:

    ---(Sentence of the original text)
    Verbe: (the verb)
    kind of complement: (head noun of the complement)
    Bornes_mot: (bounds of the noun in the sentence)
    Bornes_GN: (bounds of the noun phrase in the sentence)
    Prep: (optional) (the preposition)

Here the kind of complement is one of Sujet (subject), COD (object), COI (indirect object), or CC(position, manière, but, provenance, direction) (adjunct of position, manner, aim, provenance, direction).
The resulting parsed text is then provided to ASIUM for further processing.

The user interface
The user interface of ASIUM allows the user to manipulate and view the ontology at every stage of the learning process. The following figures show some of the basic windows of the interface.
This window allows the expert to validate the concepts learned by ASIUM.
This window displays the list of all the examples covered by the learned concept.
This window displays the ontology as it currently is in memory, i.e. the learned concepts and the concepts to be proposed for this level. Each blue circle represents a class, which may or may not be labeled.
This window allows the expert to split a class into several sub-concepts. The left list contains the nouns the expert wants to split into sub-concepts. The right list contains all the nouns of one sub-concept.

Uses of ASIUM
The kind of semantic knowledge produced by ASIUM can be very useful in many applications. Some of them are mentioned below:
Information Retrieval: Verb subcategorization frames can be used to tag texts. Most of the nouns occurring in the texts will then be tagged with their concept. The search for the right text is then based on a query using domain concepts instead of words. For example, a user interested in movie stars would not search for the noun "star" but for the concept "Movie_stars", which is clearly distinct from "Space_Stars".
Information Extraction: Such subcategorization frames, together with an ontology, allow the expert to write "semantic" extraction rules.
Text indexing: After the ontology for a domain has been learned, the texts can be enriched with the concepts. The ontology can then be used for indexing the texts.
Text filtering: As with information extraction, filtering can use rules based on concepts and on the verbs used in the texts. This semantic knowledge should improve the filtering quality.
Abstracts of texts: Subcategorization frames and ontology concepts allow the texts to be tagged, which should be a valuable help for extracting abstracts from texts.
Automatic translation: Creating the ontologies and the subcategorization frames in both languages, and then applying a method to match the concepts of the verb frames across the two languages, should improve translation systems.
Syntactic parsing improvement: Subcategorization frames and the concepts of a domain should improve a syntactic parser by letting it choose the right verb attachment with respect to the ontology, and thus avoid many ambiguities.

7. Uses/applications of ontology learning

The ontology learning process and methods described in the previous sections can be used and applied in many domains concerning knowledge and information extraction. Some uses and applications are described in this section.

7.1 Knowledge sharing in multi agent systems

Discovering related concepts in a multi-agent system among agents with diverse ontologies is difficult using existing knowledge representation languages and approaches.
This section describes an approach for identifying candidate relations between expressive, diverse ontologies using concept cluster integration. In order to facilitate knowledge sharing between a group of interacting information agents (i.e. a multi-agent system), a common ontology should be shared. However, agents do not always commit a priori to a common, predefined global ontology. This research investigates approaches that allow agents with diverse ontologies to share knowledge through automated learning methods and agent communication strategies. The goal is for agents that do not know how their concepts relate to one another to be able to teach each other these relationships. If the agents are able to discover these concept relations, this will help them as a group to share knowledge even though they have diverse ontologies. Information agents acting on behalf of a diverse group of users need a way of discovering relationships between the individualized ontologies of users. These agents can use the discovered relationships to help their users find information related to their topic, or concept, of interest.
In this approach, semantic concepts are represented in each agent as concept vectors of terms. The agents use supervised inductive learning to learn their individual ontologies. The output of this ontology learning is a set of semantic concept descriptions (SCD) in the form of interpretation rules. This concept representation and learning is shown in the following figure.

Figure: Supervised inductive learning produces ontology rules

The process of knowledge sharing between two agents, the Q (querying) agent and the R (responding) agent, begins when the Q agent sends a concept-based query. The R agent interprets this query and, if related concepts are found, sends a response to the Q agent. After that, the Q agent takes the following steps to perform the concept cluster integration:
1. From the R agent's response, determine the names of the concepts to cluster.
2. Create a new compound concept using the above names.
3. Create a new ontology category by combining the instances associated with the compound concept.
4. Re-learn the ontology rules.
5. Re-interpret the concept-based query using the new ontology rules, including the new concept cluster description rules.
6. If the concept is verified, store the new concept relation rule.
In this way, an agent learns from the knowledge provided by another agent.
This methodology was implemented in the DOGGIE (Distributed Ontology Gathering Group Integration Environment) system, which was developed at the University of Iowa.

7.2 Ontology based Interest Matching

Designing a general algorithm for interest matching is a major challenge in building online communities and agent-based communication networks. This section presents an information theoretic concept-matching approach to measuring degrees of similarity among users. A distance metric is used as a measure of similarity between users, each represented by a concept hierarchy. Preliminary sensitivity analysis shows that this distance metric has more interesting properties and is more noise tolerant than keyword-overlap approaches.
With the emergence of online communities on the Internet, software-mediated social interactions are becoming an important field of research. Within an online community, the history of a user’s online behavior can be analyzed and matched against that of other users to provide collaborative sanctioning and recommendation services that tailor and enhance the online experience. In this approach, the process of finding similar users based on data from logged behavior is called interest matching.
Ontologies may take many forms. In the described method, an ontology is expressed as a tree hierarchy of concepts. In general, tree representations of ontologies are polytrees. However, for the purpose of simplicity, the tree is assumed here to be singly connected, and all child nodes of a node are assumed to be mutually exclusive.
Concepts in the hierarchy represent the subject areas that the user is interested in. To facilitate ontology exchange between agents, an ontology can be encoded in the DARPA Agent Markup Language (DAML). The figure below visualizes a sample ontology.

Figure: An example of an ontology used

The root of the tree represents the interests of the user. Subsequent sub-trees represent classifications of the user's interests. Each parent node is related to a set of child nodes. A directed edge from a parent node to a child node represents a (possibly exclusive) sub-concept. For example, in the figure, Seafood and Poultry are both subcategories of the more general concept Food. However, if every user is to adopt the same standard ontology, there must be a way to personalize the ontology to describe each user. For each user, each node has a weight attribute that represents the importance of the concept. In this ontology, given the context of Food, the user tends to be more interested in Seafood than in Poultry. The weights in the ontology are determined by observing the behavior of the user. The history of the user’s online reading and explicit relevance feedback are excellent sources for determining the values of the weights.
In this approach, a standard ontology is used to categorize the interests of users. Using the standard ontology, the websites the user visits can be classified and entered into the standard ontology to personalize it. A form of weight can then be derived for each category: if a user frequents websites in a category, or an instance of that class, it can be assumed that the user will also be interested in other instances of the class. With the weights, the distance metric can be used to compare the interests of different users and finally to categorize them. The effectiveness of the ontology matching algorithm remains to be determined by deploying it in various online communities.
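As a rough illustration of how weights derived from browsing behavior can feed a comparison between users, consider the following Python sketch. It makes strong simplifying assumptions: the hierarchy is flattened to its leaf categories, weights are plain visit frequencies, and the Jensen-Shannon divergence is used as a stand-in dissimilarity. It is not the distance metric of the approach described above, and all names and data are hypothetical.

```python
import math
from collections import Counter


def category_weights(visited_categories):
    """Turn a log of visited categories into normalized weights that sum to 1."""
    counts = Counter(visited_categories)
    total = sum(counts.values())
    return {category: n / total for category, n in counts.items()}


def js_divergence(p, q):
    """Jensen-Shannon divergence (base 2) between two weight dictionaries.

    Returns 0 for identical profiles and at most 1 for disjoint ones.
    """
    keys = set(p) | set(q)
    mix = {k: 0.5 * (p.get(k, 0.0) + q.get(k, 0.0)) for k in keys}

    def kl(a):
        # Kullback-Leibler divergence of `a` from the mixture distribution.
        return sum(a[k] * math.log2(a[k] / mix[k]) for k in a if a[k] > 0)

    return 0.5 * kl(p) + 0.5 * kl(q)


# Hypothetical logged behavior: the category of each visited website.
WEIGHTS_ALICE = category_weights(["Seafood", "Seafood", "Seafood", "Poultry"])
WEIGHTS_DAVE = category_weights(["Seafood", "Seafood", "Poultry", "Poultry"])
WEIGHTS_CAROL = category_weights(["Poultry"] * 4)

D_SAME = js_divergence(WEIGHTS_ALICE, WEIGHTS_ALICE)
D_NEAR = js_divergence(WEIGHTS_ALICE, WEIGHTS_DAVE)
D_FAR = js_divergence(WEIGHTS_ALICE, WEIGHTS_CAROL)
print(D_SAME, D_NEAR, D_FAR)
```

A version closer to the tree model would keep one weight per node, conditioned on its parent, and compare users level by level rather than only at the leaves.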
7.3 Ontology learning for Web Directory Classification

Ontologies and ontology learning can also be used to create information extraction tools that collect general information from the free text of web pages and classify the pages into categories. The goal is to collect indicator terms from the web pages that may assist the classification process. These terms can be derived from the directory headings of a web page as well as from its content. The indicator terms, along with a collection of interpretation rules, can result in a hierarchy (ontology) of web pages. In this way, the Information Extraction and Ontology Learning process can be applied to large web directories both for information storage and for knowledge mining.

7.4 E-mail classification

KMi Planet
“KMi Planet” is a web-based news server for communicating stories between members of the Knowledge Media Institute. Its main goals are to classify an incoming story, to obtain the relevant objects within the story and deduce the relationships between them, and to populate the ontology with minimal help from the user. To this end, a template-driven information extraction engine is integrated with an ontology engine that supplies the necessary semantic content. The two primary components are the story library and the ontology library. The Story Library contains the text of the stories that have been provided to Planet by the journalists. In the case of KMi Planet, it contains stories that are relevant to the institute. The Ontology Library contains several existing ontologies, in particular the KMi ontology. PlanetOnto augmented the basic publish/find scenario supported by KMi Planet, and supports the following activities:
1. Story submission. A journalist submits a story to KMi Planet as e-mail text. The story is then formatted and stored.
2. Story reading. A Planet reader browses through the latest stories using a standard Web browser.
3. Story annotation. Either a journalist or a knowledge engineer manually annotates the story using Knote (the Planet knowledge editor).
4. Provision of customized alerts. An agent called Newsboy builds user profiles from patterns of access to PlanetOnto and then uses these profiles to alert readers about relevant new stories.
5. Ontology editing. A tool called WebOnto provides Web-based visualisation, browsing and editing support for the ontology. The “Operational Conceptual Modelling Language” (OCML) is a language designed for knowledge modeling. WebOnto uses OCML and allows the creation of classes and instances in the ontology, along with easier development and maintenance of the knowledge models. This is the point at which ontology learning comes into play.
6. Story soliciting. An agent called Newshound periodically solicits stories from the journalists.
7. Story retrieval and query answering. The Lois interface supports integrated access to the story archive.
Two other tools have been integrated in the architecture:
MyPlanet: An extension to Newsboy that helps story readers read only the stories that are of interest to them instead of reading all the stories in the archive. It uses a manually predefined set of cue-phrases for each of the research areas defined in the ontology. For example, for genetic algorithms one cue-phrase is “evolutionary algorithms”. Consider the example of someone interested in the research area Genetic Algorithms. A search engine will return all the stories that talk about that research area. In contrast, MyPlanet (by using the ontological relations) will also find all Projects that have the research area Genetic Algorithms and then search for stories that talk about these projects, returning them to the reader even if the story text itself does not contain the phrase “genetic algorithms”.
An IE tool: A tool that extracts information from e-mail text and connects with WebOnto to prove theorems using the KMi Planet ontology.

8. Conclusion

Ontology learning could add significant leverage to the Semantic Web because it propels the construction of domain ontologies, which the Semantic Web needs to succeed. We have presented a collection of approaches and methodologies for ontology learning that crosses the boundaries of single disciplines, touching on a number of challenges. All these methods are still experimental and await further improvement and analysis. So far, the results are rather discouraging compared to the final goal, which is fully automated, intelligent knowledge-learning systems. The good news is, however, that perfect or optimal support for cooperative ontology modeling is not yet needed. Cheap methods in an integrated environment can tremendously help the ontology engineer.
While a number of problems remain within individual disciplines, additional challenges arise that specifically pertain to applying ontology learning to the Semantic Web. With the use of XML-based namespace mechanisms, the notion of an ontology with well-defined boundaries (for example, only definitions that are in one file) will disappear. Rather, the Semantic Web might yield a primitive structure regarding ontology boundaries because ontologies refer to and import each other. However, what the semantics of these structures will look like is not yet known. In light of these facts, the importance of methods such as ontology pruning and crawling will increase drastically, and further approaches are yet to come.

9. References

[1] M. Sintek, M. Junker, L. van Est, A. Abecker, Using Information Extraction Rules for Extending Domain Ontologies, German Research Center for Artificial Intelligence (DFKI)
[2] M. Vargas-Vera, J. Domingue, Y. Kalfoglou, E. Motta, S. Buckingham Shum, Template-Driven Information Extraction for Populating Ontologies, Knowledge Media Institute (UK)
[3] G. Bisson, C. Nedellec, Designing clustering methods for ontology building, University of Paris
[4] A. Maedche, S. Staab, The TEXT-TO-ONTO Ontology Learning Environment, University of Karlsruhe
[5] A. Maedche, S. Staab, Ontology Learning for the Semantic Web, University of Karlsruhe
[6] H. Suryanto, P. Compton, Learning classification taxonomies from a classification knowledge based system, University of New South Wales (Australia)
[7] Proceedings of the First Workshop on Ontology Learning OL'2000, Berlin, Germany, August 25, 2000
[8] Proceedings of the Second Workshop on Ontology Learning OL'2001, Seattle, USA, August 4, 2001
[9] ASIUM web page: http://www.lri.fr/~faure/Demonstration.UK/Presentation_Demo.html
[10] T. Yamaguchi, Acquiring Conceptual Relationships from domain specific texts, Shizuoka University, Japan
[11] G. Heyer, M. Lauter, Learning Relations using Collocations, Leipzig University, Germany
[12] C. Brewster, F. Ciravegna, Y. Wilks, User-centered ontology learning for knowledge management, University of Sheffield, UK
[13] A. Williams, C. Tsatsoulis, An instance based approach for identifying candidate ontology relations within a multi agent system, University of Iowa
[14] W. Koh, L. Mui, An information theoretic approach to ontology based interest matching, MIT
[15] M. Kavalec, V. Svatek, Information extraction and ontology learning guided by web directory, University of Prague
[16] C. Brewster, F. Ciravegna, Y. Wilks, Knowledge acquisition for knowledge management, University of Sheffield, UK