MPEG-4 Based Multimedia Information System

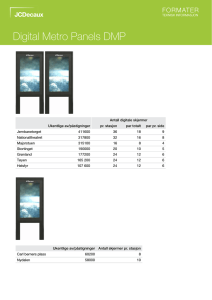

Chapter 7: MPEG-4 by Zhang, Digital Signal Processing Handbook, CRC Press, 1999

Ya-Qin Zhang
Microsoft Research, China
5F, Beijing Sigma Center
No.49, Zhichun Road, Haidian District
Beijing 10080, PRC
yzhang@microsoft.com

ABSTRACT

The recent creation and finalization of the MPEG-4 international standard has provided a common platform and unified framework for multimedia information representation. In addition to providing highly efficient compression of both natural and synthetic audiovisual (AV) content such as video, audio, sound, texture maps, graphics, still images, MIDI, and animated structures, MPEG-4 enables greater capabilities for manipulating AV content in the compressed domain through its object-based representation. MPEG-4 is a natural outcome of the technological convergence of several fields: digital television, computer graphics, interactive multimedia, and the Internet. This tutorial chapter briefly discusses some example features and applications enabled by the MPEG-4 standard.

Key words: Multimedia, MPEG-4, Digital Video, World Wide Web

INTRODUCTION

During the last decade, a spectrum of standards in digital video and multimedia has emerged for different applications. These standards include the ISO JPEG standard for still images [JPEG-90]; ITU-T H.261 for video conferencing from 64 kilobits per second (kbps) to 2 megabits per second (Mbps) [H261-91]; ITU-T H.263 for PSTN-based video telephony [H263-95]; ISO MPEG-1 for CD-ROM and storage at VHS quality [MPEG1-92]; the ISO MPEG-2 standard for digital TV [MPEG2-94]; and the recently completed ISO/MPEG-4 international standard for multimedia representation and integration [MPEG4-98]. Two new ISO standards are under development to address next-generation still image coding (JPEG 2000) and content-based multimedia information description (MPEG-7).
Several special issues of IEEE journals have been devoted to summarizing recent advances in digital image and video compression and advanced TV in terms of standards, algorithms, implementations, and applications [IEEE-95-2, IEEE-95-7, IEEE-97-2, IEEE-98-11].

The successful convergence and implementation of MPEG-1 and MPEG-2 have become a catalyst for new digital consumer markets such as Video CD, digital TV, DVD, and DBS. While the MPEG-1 and MPEG-2 standards were primarily targeted at providing high compression efficiency for storage and transmission of pixel-based video and audio, MPEG-4 aims to support a wide variety of multimedia applications and new functionalities for object-based audio-visual (AV) content. The recent completion of MPEG-4 version 1 is expected to stimulate emerging multimedia applications in wireless networks, the Internet, and content creation.

The MPEG-4 effort was originally conceived in late 1992 to address very low bit rate (VLBR) video applications at below 64 kbps, such as PSTN-based videophone, video email, security applications, and video over cellular networks. The main motivations for focusing MPEG-4 on VLBR applications were:

- Applications such as PSTN videophone and remote monitoring were important, but not adequately addressed by established or emerging standards. In fact, new products were introduced to the market with proprietary schemes. The need for a standard at rates below 64 kbps was imminent.
- Research activities had intensified in VLBR video coding, some of which had gone beyond the boundary of the traditional statistics-based and pixel-oriented methodology.
- It was felt that a new breakthrough in video compression was possible within a five-year time window. This "quantum leap" would likely make compressed video quality at below 64 kbps adequate for many applications such as videophone.

Based on the above assumptions, a workplan was generated to have the MPEG-4 Committee Draft (CD) completed in 1997, providing a generic audiovisual coding standard at very low bit rates. Several MPEG-4 seminars were held in parallel with the WG11 meetings, many workshops and special sessions were organized, and several journal special issues were devoted to these topics. However, as of the July 1994 WG11 meeting in Norway, there was still no clear evidence that a "quantum leap" in compression technology was going to happen within the MPEG-4 timeframe. Meanwhile, ITU-T had embarked on an effort to define the H.263 standard for videophone applications in PSTN and mobile networks. The need for defining a pure compression standard at very low bit rates was, therefore, not entirely justified.

In light of this situation, a change of direction was called for, refocusing the effort on new or improved functionalities and applications that are not addressed by existing and emerging standards. Examples include object-oriented features for content-based multimedia databases, error-robust communications in wireless networks, and hybrid natural and synthetic image authoring and rendering. With the technological convergence of digital video, computer graphics, and the Internet, MPEG-4 aims at providing an audiovisual coding standard allowing for interactivity, high compression, and/or universal accessibility, with a high degree of flexibility and extensibility.

In particular, MPEG-4 intends to establish a flexible content-based audio-visual environment that can be customized for specific applications and that can be adapted in the future to take advantage of new technological advances.
It is foreseen that this environment will be capable of addressing new application areas ranging from conventional storage and transmission of audio and video to truly interactive AV services requiring content-based AV database access, e.g. video games or AV content creation. Efficient coding, manipulation, and delivery of AV information over the Internet will be key features of the standard.

MPEG-4 MULTIMEDIA SYSTEM

Figure 1 shows an architectural overview of MPEG-4. The standard defines a syntax for representing individual audiovisual objects, with both natural and synthetic content. These objects are first encoded independently into their own elementary streams. Scene description information is provided separately; it defines the location of these objects in space and time and how they are composed into the final scene presented to the user. This representation includes support for user interaction and manipulation. The scene description uses a tree-based structure, following the Virtual Reality Modeling Language (VRML) design. Moving far beyond the capabilities of VRML, MPEG-4 scene descriptions can be dynamically constructed and updated, enabling much higher levels of interactivity. Object descriptors associate scene description components, such as those that refer to digital video, with the actual elementary streams that contain the corresponding coded data. As shown in Figure 1, these components are encoded separately and transmitted to the receiver. The receiving terminal then has the responsibility of composing the individual objects for presentation and managing user interaction.

[Figure 1 diagram; block labels: Display and User Interaction; Audiovisual Interactive Scene; Composition and Rendering; Object Descriptor; Scene Description Information; Return Channel Coding; Primitive AV Objects; Elementary Streams]

Figure 1. MPEG-4 overview.
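
The relationship between the scene description tree, object descriptors, and elementary streams described above can be illustrated with a small sketch. This is a toy model only: the class names (`ElementaryStream`, `ObjectDescriptor`, `SceneNode`), the `compose` function, and the integer offsets are hypothetical names chosen for illustration and do not reproduce the syntax defined by the standard.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional, Tuple


@dataclass
class ElementaryStream:
    """Independently coded data for one object (hypothetical stand-in)."""
    es_id: int
    media_type: str  # e.g. "video", "audio", "synthetic"


@dataclass
class ObjectDescriptor:
    """Links a scene node to the elementary streams that carry its data."""
    od_id: int
    es_ids: List[int]


@dataclass
class SceneNode:
    """One node of the tree-structured scene description."""
    name: str
    offset: Tuple[int, int] = (0, 0)   # position relative to the parent node
    od_id: Optional[int] = None        # None for pure grouping nodes
    children: List["SceneNode"] = field(default_factory=list)


def compose(node: SceneNode,
            descriptors: Dict[int, ObjectDescriptor],
            streams: Dict[int, ElementaryStream],
            origin: Tuple[int, int] = (0, 0)) -> list:
    """Walk the tree and list (name, absolute position, media types)."""
    x, y = origin[0] + node.offset[0], origin[1] + node.offset[1]
    placed = []
    if node.od_id is not None:
        es_ids = descriptors[node.od_id].es_ids
        placed.append((node.name, (x, y), [streams[i].media_type for i in es_ids]))
    for child in node.children:
        placed.extend(compose(child, descriptors, streams, (x, y)))
    return placed


streams = {
    1: ElementaryStream(1, "video"),
    2: ElementaryStream(2, "audio"),
    3: ElementaryStream(3, "synthetic"),
}
descriptors = {
    10: ObjectDescriptor(10, [1, 2]),  # a speaker: video plus audio
    11: ObjectDescriptor(11, [3]),     # a computer-generated background
}
speaker = SceneNode("speaker", offset=(40, 20), od_id=10)
scene = SceneNode("root", children=[
    SceneNode("background", od_id=11),
    SceneNode("foreground", offset=(100, 50), children=[speaker]),
])

before = compose(scene, descriptors, streams)
speaker.offset = (0, 0)  # a scene update, e.g. triggered by user interaction
after = compose(scene, descriptors, streams)
print(before)
print(after)
```

The point of the sketch is the separation of concerns: moving the speaker changes only the scene description, while the coded streams and the descriptors that reference them are left untouched.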
Audio-visual objects, natural audio, and synthetic media are independently coded and then combined according to the scene description information (figure courtesy of the ISO/MPEG-4 committee).

The following eight MPEG-4 functionalities, clustered into three classes, are defined [MPEG4-REQ]:

- Content-based interactivity
  - Content-based manipulation and bit stream editing
  - Content-based multimedia data access tools
  - Hybrid natural and synthetic data coding
  - Improved temporal access
- Compression
  - Improved coding efficiency
  - Coding of multiple concurrent data streams
- Universal access
  - Robustness in error-prone environments
  - Content-based scalability

Some of the applications enabled by these functionalities include:

- Video streaming over the Internet
- Multimedia authoring and presentations
- Viewing video content at different resolutions, speeds, angles, and quality levels
- Storage and retrieval of multimedia databases over mobile links with high error rates and low channel capacity (e.g. Personal Digital Assistant)
- Multipoint teleconferencing with selective transmission, decoding, and display of "interesting" parties
- Interactive home shopping with customers' selection from a video catalogue
- Stereo-vision and multiview of video content, e.g. sports
- "Virtual" conference and classroom
- Video email, agents, and answering machines

AN EXAMPLE

Figure 2 shows an example of an object-based authoring tool for MPEG-4 AV content, recently developed by the Multimedia Technology Laboratory at Sarnoff Corporation in Princeton, New Jersey.
This tool has the following features:

- compression/decompression of different visual objects into MPEG-4-compliant bitstreams
- drag-and-drop of video objects into a window while resizing the objects or adapting them to different frame rates, speeds, transparencies, and layers
- substitution of different backgrounds
- mixing natural image and video objects with computer-generated synthetic texture and animated objects
- creating metadata information for each visual object

Figure 2. An example multimedia authoring system using MPEG-4 tools and functionalities (courtesy of Sarnoff Corporation).

This set of authoring tools can be used for interactive Web design, digital studio work, and multimedia presentation. It empowers users to compose and interact with digital video on a higher semantic level.

REFERENCES

[JPEG-90] ISO/IEC 10918-1, "JPEG Still Image Coding Standard," 1990.

[H261-91] CCITT Recommendation H.261, "Video Codec for Audiovisual Services at 64 to 1920 kbps," 1990.

[H263-95] ITU-T/SG15/LBC, "Recommendation H.263P Video Coding for Narrow Telecommunication Channels at below 64 kbps," May 1995.

[MPEG1-92] ISO/IEC 11172, "Coding of Moving Pictures and Associated Audio for Digital Storage Media at up to about 1.5 Mbps," 1992.

[MPEG2-94] ISO/IEC 13818, "Generic Coding of Moving Pictures and Associated Audio," 1994.

[IEEE-95-2] Y.-Q. Zhang, W. Li, and M. Liou, Eds., "Advances in Digital Image and Video Compression," Special Issue, Proceedings of the IEEE, Feb. 1995.

[IEEE-95-7] M. Kunt, Ed., "Digital Television," Special Issue, Proceedings of the IEEE, July 1995.

[IEEE-97-2] Y.-Q. Zhang, F. Pereira, T. Sikora, and C. Reader, Eds., "MPEG-4," Special Issue, IEEE Transactions on Circuits and Systems for Video Technology, Feb. 1997.

[IEEE-98-3] T. Chen, R. Liu, and A. Tekalp, Eds., "Multimedia Signal Processing," Special Issue, Proceedings of the IEEE, May 1998.

[IEEE-98-11] M. T. Sun, K. Ngan, T. Sikora, and S. Panchanathan, Eds., "Representation and Coding of Images and Video," IEEE Transactions on Circuits and Systems for Video Technology, November 1998.

[MPEG4-REQ] MPEG-4 Requirements Ad-Hoc Group, "MPEG-4 Requirements," ISO/IEC JTC1/SC29/WG11/MPEG4, Maceio, Nov. 1996.

[MPEG4-98] ISO/IEC JTC1/SC29/WG11, "MPEG-4 Draft International Standard," October 1998.