
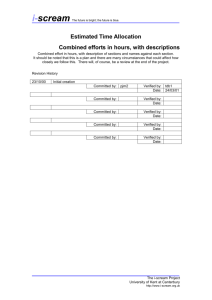


Prosiect Adnabod Lleferydd Sylfaenol: Initial findings and recommendations

Ivan Uemlianin, Ad. Lle. Syl.

Project Materials

Here I list all the project materials I have found to date.

Locations:

* N:\egymraeg\Lleferydd\
* N:\rhys\
* http://bedwyrvm.bangor.ac.uk/svn/adnabodlleferydd/

Found:

* recordings of 45 speakers (on bedwyrvm)
* recording wordlists
* various admin documents
* planning documents:
  * RJJ: the initial 8 step plan; the August Speech recognition recordings report.
  * BJW: more recent plan sketch (speechrectasks)

My understanding of the work breakdown

1. Write Overview Documents

An overview document should specify explicitly what kind of application is envisaged and how the user is expected to use it. I have not found any such documentation in the project materials. The nearest is the list of words recorded.

Here are some provisional suggestions. Once launched, the speech recognition application runs in the background providing the following services:

* application launcher for a clearly defined number of applications (list apps);
* speech interface for a clearly defined subset of generic commands (e.g., open, close, cut, copy, paste, etc.);
* comprehensive speech interface for a clearly defined number of applications (e.g., calculator).

A functional requirements document should specify a mapping between speech input commands and system commands.

2. Immediate Tasks

These tasks have no prerequisites other than the functional requirements mapping.

2.1. Build Acoustic Model

Building the Acoustic Model (AM) is the most significant part of the project. The first step is to collect a corpus of training data. Data have been collected from 45 speakers. We also have at our disposal speech data from the WISPR and Lexicelt projects. Most (perhaps almost all) of this data is unlabelled. Training an AM from this data can be done iteratively.
This is an outline of the process:

* initialisation:
  * human experts to label a subset of the data
  * machine to "fake label" the rest of the data
* cycle:
  * machine to train an AM from the data
  * machine to use the AM to auto-label the data
  * human experts to correct a subset of the labelling

When AM errors are below some threshold the AM can be slotted into the recogniser.

* Size of task: long
* Champion: IAU
* Consultant: BJW

2.2. Build Language Model

The Language Model (LM) is a relatively simple component of the recogniser. It specifies the expected speech input (or equivalently, the textual output) of the recogniser. The main technical challenge here is that the Sphinx LM builder is available only under a noncommercial license, so some creativity might have to be involved.

* Size of task: 10 days
* Champion: IAU

2.3. Build User Interface

As the recogniser is a completely separate module, the user interface (UI) can be designed and built independently, using a dummy recogniser (e.g., whatever the user says, the recogniser emits the signal, "Launch Firefox").

Here are some provisional suggestions. For the graphical user interface (GUI) all that is required is an on/off switch, and a list of what commands are recognised. The voice user interface (VUI) must do the following:

* stream speech to the recogniser;
* receive signals from the recogniser;
* run system commands depending on received signals;
* provide visual response (e.g., display command or 'not recognised' in GUI).

The recogniser used by the user interface should be updated regularly as part of the AM building iteration outlined above. For example, every Sunday night a new version of the UI is compiled with the current recogniser.

* Size of task: 10 days
* Champion: DBJ
* Consultant: IAU (interface with recogniser)

3. Build Recogniser

Prerequisites: AM, LM

The recogniser component of the application includes the Sphinx-based speech recognition code and provides an interface between that and the user interface (UI) component of the application. The recogniser must do the following:

* receive a speech stream from the UI;
* perform speech recognition on the speech stream;
* send signals to the UI.

* Size of task: 10 days
* Champion: IAU
* Consultant: DBJ (interface with UI)

4. Build Final Release

Prerequisites: user interface, recogniser

Ensure that a version of the UI is available for outside release with the best recogniser.

* Size of task: 2 days
* Champion: DBJ

Proposals for the next six months

I suggest we commence work on all the Immediate Tasks in parallel, with the aim of having a usable application (even if with very poor recognition accuracy) as soon as possible. The Build Recogniser task requires a working AM, but it too should be started as soon as possible: we should not wait until the AM is finished.

| Task | Oct | Nov | Dec | Jan | Feb | Mar |
|---|---|---|---|---|---|---|
| Write overview document | # | | | | | |
| Write requirements document | # | | | | | |
| Review documents | # | | | | | |
| Build Acoustic Model | > | > | / | > | > | # |
| Build Language Model | > | > | # | | | |
| Build User Interface | > | > | # | | | |
| Build Recogniser | | | | > | # | |
| Build Final Release | | | | | | # |

Once we have a usable application, we can start giving demonstrations, press releases, etc.

Questions for meeting

* What are the important project dates? For example, what are the deadlines for progress reports?
* Is there anything anywhere else not covered in Project Materials?
* Can we confirm task assignments:

| Who? | What? | When? | OK? |
|---|---|---|---|
| IAU | Write overview document | 2008/10/06 Mon | |
| IAU | Write requirements document | 2008/10/06 Mon | |
| ALL | Review documents | 2008/10/13 Mon | |
| IAU/BJW | Build Acoustic Model | Dec., Mar. | |
| IAU | Build Language Model | Dec. | |
| DBJ/IAU | Build User Interface | Dec. | |
| IAU/DBJ | Build Recogniser | Feb. | |
| DBJ | Build Final Release | Mar. | |
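
Illustrative sketch

As a starting point for discussion, the dummy recogniser suggested in 2.3 and the speech-to-system command mapping mentioned in section 1 might be prototyped roughly as below. This is only a sketch under stated assumptions: the names `COMMAND_MAP`, `DummyRecogniser` and `handle_utterance`, the signal strings and the command lines are all hypothetical, and the real mapping belongs in the functional requirements document. No Sphinx code is involved; the point is that the UI side can be exercised before any AM exists.

```python
from typing import Callable, Dict, List

# Hypothetical mapping from recogniser signals to system command lines.
# The real list would come from the functional requirements document.
COMMAND_MAP: Dict[str, List[str]] = {
    "Launch Firefox": ["firefox"],
    "Launch Calculator": ["calc"],
}


class DummyRecogniser:
    """Stand-in recogniser: ignores the audio and always emits one signal."""

    def __init__(self, signal: str = "Launch Firefox") -> None:
        self.signal = signal

    def recognise(self, audio: bytes) -> str:
        # A real recogniser would decode `audio`; the dummy does not.
        return self.signal


def handle_utterance(
    audio: bytes,
    recogniser: DummyRecogniser,
    run: Callable[[List[str]], object],
    show: Callable[[str], object],
) -> bool:
    """Pass audio to the recogniser, then act on the signal it returns.

    `run` executes a system command and `show` gives visual feedback;
    both are passed in so the UI can be tested without launching anything.
    Returns True if the signal mapped to a known command.
    """
    signal = recogniser.recognise(audio)
    command = COMMAND_MAP.get(signal)
    if command is None:
        show("not recognised")
        return False
    show(signal)
    run(command)
    return True


if __name__ == "__main__":
    # Demonstration only: print instead of launching a real application.
    handle_utterance(b"", DummyRecogniser(), run=print, show=print)
```

In a real UI, `run` could be something like `subprocess.Popen` and `show` a GUI label update. Keeping both as parameters means the dummy can later be swapped for the Sphinx-based recogniser without changing the dispatch code, provided it exposes the same (assumed) `recognise` method.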