

Chapter 5

A comprehensive bilingual word alignment system and its application to disparate languages: Hebrew and English

Yaacov Choueka, Ehud S. Conley and Ido Dagan

The Institute for Information Retrieval & Computational Linguistics, Mathematics & Computer

Science Department, Bar-Ilan University, 52 900 Ramat-Gan, Israel1

Key words: parallel texts, translation, bilingual alignment, Hebrew

Abstract:

This chapter describes a general, comprehensive and robust

word-alignment system and its application to the Hebrew-English language pair. A major goal of the system architecture

is to assume as little as possible about its input and about the

relative nature of the two languages, while allowing the use of

(minimal) specific monolingual pre-processing resources when

required. The system thus receives as input a pair of raw

parallel texts and requires only a tokeniser (and possibly a

lemmatiser) for each language. After tokenisation (and

lemmatisation if necessary), a rough initial alignment is

obtained for the texts using a version of Fung and McKeown’s

DK-vec algorithm (Fung and McKeown, 1997; Fung, this

volume). The initial alignment is given as input to a version of

the word_align algorithm (Dagan, Church and Gale, 1993), an

extension of Model 2 in the IBM statistical translation model.

Word_align produces a word level alignment for the texts and a

probabilistic bilingual dictionary. The chapter describes the

details of the system architecture, the algorithms implemented

(emphasising implementation details), the issues regarding

their application to Hebrew and similar Semitic languages, and

some experimental results.

1 Email addresses: {choueka,konli,dagan}@cs.biu.ac.il

1. INTRODUCTION

Bilingual alignment is the task of identifying the correspondence between

locations of fragments in a text and its translation. Word alignment is the

task of identifying such correspondences between source and target word

occurrences. Word-level alignment was shown to be a useful resource for

multilingual tasks, in particular within (semi-) automatic construction of

bilingual lexicons (Dagan & Church, 1994; Dagan & Church, 1997; Fung,

1995; Kupiec, 1993; Smadja, 1992; Wu and Xia, 1995), statistical machine

translation (Brown et al., 1993) and other translation aids (Kay, 1997;

Isabelle, 1992; Klavans and Tzoukermann, 1990; Picchi et al., 1992).

There has been quite a large body of work on alignment in general and

word alignment in particular (Brown et al., 1991; Church et al., 1993;

Church, 1993; Dagan et al., 1993; Fung and Church, 1994; Fung and

McKeown, 1997; Gale and Church, 1991a; Gale and Church, 1991b; Kay

and Roscheisen, 1993; Melamed, 1997a; Melamed, 1997b; Shemtov, 1993;

Simard et al., 1992; Wu, 1994). This chapter describes a word alignment

system that was designed to assume as little as possible about its input and

about the relative nature of the two languages being aligned. A specific goal

set for the system is to align successfully Hebrew and English texts, two

disparate languages that differ substantially from each other, in their

alphabet, morphology and syntax. As a consequence, various assumptions

that were used in some alignment programs do not hold for such disparate

languages, due to differences in total text length, partitioning to words and

sentences, part-of-speech usage, word order and letters used to transliterate

cognates. The high complexity of Hebrew morphology requires complex

monolingual processing prior to alignment, converting the raw texts to a

stream of lemmatised tokens.

Another motivation is to handle relatively short "real-world" text pairs, as

are typically available in practical settings. Unlike some carefully edited

bilingual corpora, two given texts may provide only an approximate

translation of each other, either because some text fragments appear in one

of the texts but not in the other (deletions) or because the translation is not

literal.

Examining the alignment problem and the literature addressing it reveals

that the alignment task is naturally divided into two subtasks. The first one is

identifying a rough correspondence between regions of the two given texts.

This task has to track “natural” deviations in the lengths of corresponding

portions of the text, and also to identify where some text fragments appear in

one text but not in the other. Imagine a two-dimensional plot of the

alignment “path” (see Figure 1 in Section 4.5 for an example), where each

axis corresponds to positions in one of the texts and each point on the path


corresponds to matching positions. If the two texts were a word-by-word

literal translation of each other then the alignment path would have been

exactly the diagonal of the plot. The rough alignment task can be viewed as

identifying an approximation of the path that tracks how it deviates from the

diagonal. Slight deviations correspond to natural differences in length

between a text segment and its translation, while sharp deviations (horizontal

or vertical) correspond to segments that appear only in one of the texts.

Given a rough correspondence between text regions, the second task

would be refining it to an accurate and detailed word level alignment. This

task has to trace mainly local word order variations and mismatches due to

non-literal translations. While it may be possible to address the two

alignment tasks simultaneously, by the same algorithm, it seems that their

different goals call for a sequential and modular treatment. It is easier to

obtain only a rough alignment given the raw texts, while detailed word-level

alignment is more easily obtained if the algorithm is directed to a relatively

small environment when aligning each individual word.

Our project addresses the two alignment tasks by applying and

integrating two algorithms that we found suitable for each of the tasks. The

DK-vec algorithm (Fung and McKeown, 1997; Fung, this volume),

previously applied to disparate language pairs such as English and Chinese,

was chosen to produce the rough alignment path, given only the two

(tokenised or lemmatised) texts as input. Other alternatives that produce a

rough alignment make assumptions that may be incompatible with some of

the target settings of our system. Sentence alignment (Brown et al., 1991;

Gale and Church, 1991) assumes that sentence boundaries can be reliably

detected and that sentence partitioning is similar in the two languages. The

latter assumption, for example, often does not hold in Hebrew-English

translation2. Character-based alignment (Church, 1993) assumes that the two

languages use the same alphabet and share a substantial number of similarly

spelled cognates, which is not the case for many language pairs. DK-vec, on

the other hand, does not require sentence correspondence and does not rely

on cognates. It makes only the reasonable assumption that there is a

substantial number of pairs of corresponding source and target words whose

positions distribute rather similarly throughout the two texts. Therefore we

found this algorithm most suitable for our system, aiming to make it

applicable for the widest range of settings. It should be noted, though, that

other algorithms might perform better than DK-vec in specific settings that

satisfy their particular assumptions.

2

This was found by our own experience and by Alon Itai from the Technion – Israel

Institute of Technology (private communication).


The word_align algorithm (Dagan et al., 1993), an extension of Model 2

in the IBM statistical translation model (Brown et al., 1993), was chosen to

produce a detailed word-level alignment. This algorithm was shown to work

robustly on noisy texts using the output of a character-based rough alignment

(Church, 1993). The algorithm makes only the very reasonable assumption

that source and target words that translate each other would appear in

corresponding regions of the rough alignment, and is able to handle cases

where a word has more than one translation in the other text. Combined

together, the two algorithms rely on the seemingly universal property of

translated text-pairs, namely that words and their (reasonably consistent)

translations appear in corresponding positions in the two texts.

The chapter reports evaluations of the system for a pair of Hebrew-English texts consisting of contracts for the Ben-Guryon 2000 airport

construction project, containing about 16,000 words in each language. While

system accuracy is still far from perfect, it does provide useful results for

typical applications of word alignment. The system was also tested on a pair

of English-French texts extracted from the ACL ECI CD-ROM I

multilingual corpus, yielding comparable results (not reported here) and

demonstrating the high portability of the method.

The paper is organised as follows. Section 2 discusses linguistic

considerations of Hebrew relevant for alignment tasks. Section 3 presents the

monolingual-processing phase that converts the raw input texts into two

lemmatised streams of tokens suitable for word alignment. This tokenisation

(or lemmatisation) phase is regarded as a pre-requisite for the alignment

system and is the only language-specific component in our alignment

architecture. Sections 4 and 5 describe the two phases of the alignment

process, producing the rough and the detailed alignments. These sections

provide concise descriptions of our implemented versions of DK-vec and

word_align, emphasising implementation details that may be helpful in

replicating these methods. Section 6 concludes and outlines topics for future

research.

2. HEBREW-ENGLISH ALIGNMENT: LINGUISTIC CONSIDERATIONS

2.1 Basic Facts

Hebrew and English are disparate languages, i.e. languages with

markedly different linguistic structures and grammatical frameworks,

Hebrew being part of the family of Semitic Languages, English belonging to


the Indo-European one. Ours is one of the very first attempts to try and align

such disparate languages, and it is our belief that the insights and results

achieved by this experiment can be successfully applied to the alignment of

similar pairs of texts, such as, say, Arabic and Italian.

In order to fully appreciate the context of some of the design principles of

our project, especially those detailed below for the pre-processing stage, we

give here a brief sketch of the most salient characteristics of Hebrew (most

of which are valid also, with varying degrees, for Arabic) that are relevant to

the purpose at hand.

The Hebrew alphabet contains 22 letters (in fact, consonants), five of

which have different graphical shapes when occurring as the last letter of the

word, but this is irrelevant to our purposes. Words are separated by blanks,

and the usual punctuation marks (period, comma, colon and semicolon,

interrogation and exclamation marks, etc.) are used regularly and with their

standard meaning. Paragraphs, sentences, clauses and phrases are well-defined textual entities, but their automatic recognition and delineation may

suffer from ambiguity problems quite similar in nature (but somewhat more

complex) to those encountered in other languages. Only one case is available

for the written characters (no different upper- and lower- cases), and there is

thus no special marking for the beginning of a sentence or for the first letter

of a name. The stream of characters is written in a right-to-left (rather than

left-to-right) fashion; besides causing some technical difficulties in displaying

or printing Hebrew, however, this is quite irrelevant to the alignment task.

No word agglutination (as in German) is possible (except for the very

small number of prepositions and pronouns, as explained below).

2.2 Morphology, inflection and derivation

As a Semitic language, Hebrew is a highly inflected language, based on a

system of roots and patterns, with a rich morphology and a richly textured

set of generation and derivation patterns. A few numbers presented in Table

1 clearly delineate the difference between the two languages in this respect.

While the total number of entries (lemmas) in a modern and comprehensive

Hebrew dictionary such as Rav-Milim (Choueka, 1997) does not exceed

35,000 entries (including foreign loan-words such as electronic, bank,

technology etc.) derivable from some 3,500 roots, the total number of

(meaningful and linguistically correct) inflected forms in the language is

estimated to be in the order of 70 million forms (words). The corresponding

numbers for English, on the other hand, are about 150,000 dictionary entries

(in a good collegiate dictionary) derivable from some 40,000 stems and

generating a total number of one million inflected forms.

6

Chapter 5

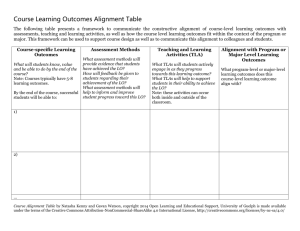

Table 1. Numbers of distinct elements within each morphological level in Hebrew and English

| Morphological level | Hebrew | English |
|---|---|---|
| Roots/Stems | 3,500 | 40,000 |
| Lemmas (dictionary entries) | 35,000 | 150,000 |
| Valid (inflected) forms | 70,000,000 | 1,000,000 |
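
As a rough illustration of the gap, the figures of Table 1 can be turned into an average number of inflected forms per lemma. This is only a back-of-the-envelope average computed from the table, not a figure quoted elsewhere in the chapter:

$$
\frac{70{,}000{,}000}{35{,}000} = 2{,}000 \ \text{(Hebrew)}, \qquad \frac{1{,}000{,}000}{150{,}000} \approx 6.7 \ \text{(English)}
$$

On these numbers a Hebrew lemma has, on average, about 300 times as many surface forms as an English one, which is why frequency statistics computed over raw Hebrew tokens are so sparse.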

Verbs in Hebrew are usually related to three-letter (more rarely four-letter) roots, and nouns and adjectives are generated from these roots using a

few dozens of well-defined linguistic patterns. Verbs can be conjugated in

seven modes (binyanim), four tenses (past, present, future and imperative),

and twelve persons. The mode, tense and person (including gender and

number) are in most cases explicitly marked in the conjugated form. Nouns

and adjectives usually have different forms for different genders

(masculine/feminine), for different numbers (singular/plural; sometimes also

a third form for dual [pairs]: two eyes, two years, etc.), as well as for

different construct/non-construct states (a construct state being somewhat

similar to the English possessive ’s). About thirty prepositions or, rather,

pre-modifiers such as the, in, from and combinations of prepositions (and in

the, and since, from the,...) can be prefixed to verbs, nouns and adjectives.

Possessive pronouns (my, your,...) can be suffixed to nouns, and

accusative pronouns (me, you,...) to verbs. Thus, each of the expressions my-books, you-saw-me, they-hit-him is expressed in Hebrew in just one word.

The number of potential morphological variants of a noun (computer) can

thus reach the hundreds, and for a verbal root (to see) even the thousands.

Two additional important points should be noted here. First, in the

derivation process described above, the original form (lemma) can undergo

quite a radical metamorphosis, with addition, deletion, and permutation of

prefixes, suffixes and infixes. Second, whole phrasal sequences of words in

English may have one-word equivalents in Hebrew. Thus, the phrase and

since I saw him is given by the Hebrew word VKSRAITIV3 (ukhshereitiv)

which has only two letters in common with the lemma to see (RAH);

similarly the phrase and to their daughters is given by VLBNVTIHM

(velivnoteihem) which has only one letter in common with the lemma BT

(bat, daughter).

All of the above point to the fact that no statistical procedures in Hebrew-English alignment systems can achieve a reasonable success rate without

some normalising pre-processing, i.e. lemmatisation, of the words in the text.

An English text may contain the noun computer or the verb to supervise

dozens of times, while the Hebrew counterparts would appear in dozens of

formally different variants, occurring once or twice each, skewing by this the

3

For the transliteration table see Appendix A.


relevant statistics. This is clearly shown in Appendix B, which presents a few examples from our texts of an English term and its several Hebrew counterparts (morphological variants) that were successfully matched by the alignment algorithms, together with their frequencies. To pick one such example at random, the term document occurs 19 times in the English text, and is matched by the 12 different Hebrew variants detailed in Table 2 along with their frequencies.

Table 2. Morphological variants of the Hebrew word MSMK (Mismakh) successfully matched to the English equivalent ‘document’, and their frequencies (Fr.) in the Hebrew-English corpus

| Meaning | Fr. | Meaning | Fr. | Meaning | Fr. |
|---|---|---|---|---|---|
| the-documents | 4 | in-the-documents-of | 2 | (a) document | 2 |
| and-the-documents | 2 | from-the-documents | 1 | the-documents-of | 1 |
| from-his-documents | 1 | and-in-the-documents-of | 1 | in-the-document | 1 |
| documents | 1 | from-the-documents-of | 1 | in-the-documents | 1 |

Thus, while lemmatisation procedures are not commonly used, or indeed

looked upon as necessary or helpful in English-French systems, they are, in

the light of our experiments, a must in any alignment task that involves a

Semitic language. This is by all evidence true not only for alignment

systems, but for any advanced text-handling system, such as full-text

document retrieval systems, as was shown already in (Attar, 1977) and

(Choueka, 1983) and, more recently, in (Choueka, 1990).

2.3 Non-vocalisation

Another important feature of Hebrew is that it is basically a non-vocalised language, in the sense that the written form of a spoken word

consists usually of the word’s consonantal part, its actual (intended)

pronunciation being left to the understanding (and intelligence) of the reader.

This situation raises a complex problem of morphological ambiguity in

which a written word may have several different readings (and therefore

several different meanings), the intended one to be derived from the context

and from “general knowledge of the world”. To give the reader a flavour of

that problem, suppose that English was a non-vocalised language, and

consider the following sentence in which only one word has been

devocalised:

The brd was flying high in the skies.

Is it bird, beard, bread, broad, bored or bred?

In order to cope with this problem, classical Hebrew devised an elaborate

system of diacritical marks that occur under, above and inside letters to


guide the reader to the intended reading of the word. These marks, however,

are rarely used today; instead, three specific letters (Vav, Yod, Aleph) are

used as vowel markers (for O/U, I/Y/E and A, resp.) and inserted when

appropriate in the word. Although strict rules were devised

for this procedure long ago by the Academy of Hebrew Language,

these rules are not commonly applied, and writers insert these letters as they

see fit. This of course adds another dimension to the number of variants that

can be related to the same basic lemma, and reinforces the need explained

above of attaching normalised base forms to the words of a running text in

Hebrew.

2.4 Word Order

Finally, we mention a feature of Hebrew (and Semitic languages) which

might reflect, indirectly at least, on text-alignment systems with Hebrew

texts, and this is its almost free word order, especially in simple sentences.

While the usual order of a simple sentence in English is that of Subject,

Verb, Object, in Hebrew any permutation of these might be equally valid

(although there might be some slight differences of emphasis in the different

variants). Thus the English sentence

The boy ate the apple

may be equally rendered in Hebrew in any one of the 6 possible

permutations of the Hebrew forms of the-boy, ate, and the-apple. In cases of

morphological ambiguity, no syntactic clues can therefore be deduced (in

general) from the specific word order in the sentence.

3. PROCESSING RAW-TEXT FOR ROUGH ALIGNMENT

Any text-processing task, whether simple or sophisticated, monolingual

or bilingual, has to start necessarily with a first step of tokenisation, i.e. of

splitting the continuous stream of characters in the text into words (tokens),

sentences, and, in some cases at least, paragraphs. Naturally, in order to get

reasonable results in an alignment system, be it word-, sentence- or

paragraph-oriented, it is important to apply the same set of tokenisation

algorithms on both languages, so as to reduce both texts to comparable

sequences of linguistic items.

When aligning texts of two closely related languages from the same

family, such as English and French, well-tokenised texts can be a good input


to the alignment system. For the case of Hebrew-English alignment,

however, a lemmatisation pre-processing step is necessary for Hebrew, and,

recalling the principle of “equal treatment” for both languages as formulated

above, the same should be applied to the English text too.

By lemmatisation we mean here reducing every word (token) in the text

to its basic form, i.e. to its lemma (usually this would be its corresponding

dictionary entry) and tagging it with the appropriate part-of-speech. True

enough, alignment systems do not make use in general of the part-of-speech

tags directly. These tags however are essential in choosing the correct lemma

of an ambiguous form in a given context (as in saw, for example, whose

lemma is see if it is a verb and saw if a noun).

In Hebrew, the lemma of a nominal form is its masculine/singular/non-construct form, and for a verbal form, the third-person/singular/masculine/past-tense (in the given mode) variant, the forms

being stripped in both cases from any attached prepositions or pronouns, and

spelled in a unique adopted spelling. An appropriately modified definition

applies to English too.

For Hebrew lemmatisation we used two modules from Rav-Milim, an

ambitious large-scope project for building a broad, multi-module, integrated,

computerised infrastructure for the intelligent processing of modern Hebrew,

developed in the years 1988-1996 by the first author at the Centre for

Educational Technology in Tel-Aviv, with the co-operation of Yoni

Ne’eman and a large team of programmers, lexicographers and

computational linguists. The first module, Milim, is a complete, accurate,

comprehensive, robust and portable morphological analyser and lemmatiser

for modern Hebrew, available today (with some restrictions) for research and

industry-based applications. Milim takes as input any string of characters

(without context) in Hebrew and outputs the set of all its (linguistically

correct) lemmas and corresponding grammatical analyses (including part-of-speech tags). Milim recognises all common modes of Hebrew spelling, and

can deal also with some extra-linguistic items such as acronyms (abundant in

Hebrew), abbreviations and frequent proper nouns (of persons, places and

products).

Note that Milim processes words in a context-free framework, and

because of non-vocalisation and other ambiguity phenomena discussed

above, it is quite common for that module to output several possible lemmas

and corresponding analyses (three to four on average, sometimes as many

as ten or even more) of the given input word.

A second component of Rav-Milim, Nakdan-Text (in an earlier version

named Nat’han-Text) takes as input a whole sentence, applies Milim to every

word in it, then, using morphological, syntactical, probabilistic and statistical


procedures, chooses for any ambiguous token in the sentence the lemma and

part-of-speech most suited to its context, with an estimated 95% accuracy.4

Because of some technical reasons, the lemmas produced by Milim are

given through numerical codes, rather than as strings of characters, but this

is irrelevant to the alignment context.

The corresponding parallel pre-processing for English was done using

Morph, an integrated package developed by Yuval Krymolowski, a Ph.D.

student, member of our team. Sentencing is achieved using Mark Liberman’s

sgmlsent, and after performing tokenisation on the sentenced text, it is piped

into Brill’s rule-based part-of-speech tagger (Brill, 1992). Finally, Morph

extracts lemmas from the WordNet (Miller, 1990) files.

From this point on all the words in the texts are replaced by their base

forms (lemmas for English and lemmas’ numerical codes for Hebrew). Thus,

unless otherwise stated, any further use of “word”, “token”, “text” etc. will

refer to the base form representation of these items.

One could try to move the normalisation process one step up, from lemmas to roots/stems, in effect unifying families of words and reducing each family, for the purposes of the alignment process, to a single term; for example, treating the words (lemmas) computer, computation, compute, computability, computerise, computerisation, etc. as one term. It is not

entirely clear, however, whether this procedure will indeed improve the

performance of the algorithms, or, on the contrary, degrade it, and

experimentation with this option has still to be conducted.

Finally, as is common with many text-handling systems, two stop-word

lists of the most common function words (lemmas for Hebrew) in these two

languages have been compiled, and the corresponding items have been

eliminated from the text. Not only do these items occur very frequently

without contributing to the alignment goals, they very often disturb and

negatively affect the frequency and positional statistical analyses so critical

in alignment systems.
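
The stop-word elimination step can be sketched in a few lines. The sketch below is only an illustration: the function name `remove_stop_words` and the tiny stop-word set are hypothetical, and the actual lists compiled for the system are not reproduced in this chapter.

```python
def remove_stop_words(lemmas, stop_words):
    """Return the lemma stream with every stop-word occurrence dropped."""
    return [lemma for lemma in lemmas if lemma not in stop_words]


# Hypothetical English stop-word list (lemmas); the real list is an assumption.
ENGLISH_STOP_WORDS = {"the", "be", "for", "of", "and"}

lemmatised = ["the", "contractor", "be", "responsible", "for", "the", "airport"]
print(remove_stop_words(lemmatised, ENGLISH_STOP_WORDS))
# ['contractor', 'responsible', 'airport']
```

Since Hebrew lemmas are represented by numerical codes, the same function would apply unchanged to a stream of integers and a set of integer codes.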

4

In fact Nakdan-Text does more than that; it actually vocalises the words in the sentence,

respecting the very complex vocalisation rules of Hebrew, disambiguating and

choosing the correct reading on the fly, with the precision noted above. Note that this is

an essential step in any text-to-speech system for Hebrew. A first version of the system

won the 1992 Israel Information Processing Association Annual Prize for Best

Achievement in Scientific and Technological Applications of Computing, and the

revised version was the recipient of the first Israel Prime Minister Award for Software

in 1997.


4. ROUGH ALIGNMENT

4.1 Outline

An alignment is a set of connections, of the form <i,j>, each of them

denoting that position i in one text corresponds to position j in the other. For

convenience, we use the terms source (S) and target (T) texts to denote the

two texts, yet the role of the two texts is symmetric in the alignment

problem.

The DK-vec algorithm (Fung and McKeown, 1997; Fung, this volume)

identifies pairs of corresponding source and target words that are mostly

used to translate each other in the given text pair. The algorithm relies on the

fact that the positions of such word pairs are distributed very similarly

throughout the two texts. To identify this similarity each word is represented

by a vector. Each entry in the vector corresponds to one occurrence of the

word, and its value is the distance between that word occurrence and the

preceding one. Representing occurrences by relative distances rather than by

absolute positions from the beginning of the text makes the method robust to

deletions of segments in one of the texts. Such deletions would affect all

absolute positions of the word occurrences following a deletion but not their

relative distances (apart from occurrences within or immediately following a

deleted segment).

The algorithm computes the similarity between all word pairs, first by

some crude methods and then by applying a dynamic programming

algorithm that computes the “matching cost” of candidate pairs of vectors.

For the best-matched word pairs, referred to as the core bilingual lexicon, the

algorithm identifies some of their matching positions that are used as

“anchor point” connections within the initial rough alignment. The

alignment is made complete through linear interpolation, providing the

necessary input for directing the subsequent detailed alignment step.
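
To make the interpolation idea concrete, the following sketch completes a sparse set of anchor connections into a full path by straight-line interpolation. It is an assumption-laden illustration rather than the system's actual procedure: the function name `interpolate_alignment`, the 1-based positions, and the choice to pin the path to the beginning and the end of both texts are all hypothetical.

```python
def interpolate_alignment(anchors, source_len, target_len):
    """Estimate a target position for every source position 1..source_len.

    anchors: (i, j) connections, assumed increasing in both i and j.
    The path is assumed to start at (0, 0) and end at (source_len, target_len).
    """
    points = [(0, 0)] + sorted(anchors) + [(source_len, target_len)]
    path = {}
    for (i0, j0), (i1, j1) in zip(points, points[1:]):
        # Fill the positions between two neighbouring points on a straight line.
        for i in range(i0 + 1, i1 + 1):
            path[i] = j0 + (j1 - j0) * (i - i0) / (i1 - i0)
    return path


path = interpolate_alignment([(4, 2), (8, 8)], source_len=10, target_len=9)
print(path[4], path[6], path[10])  # 2.0 5.0 9.0
```

Between the anchors <4,2> and <8,8> the estimated path climbs more steeply than the diagonal, which is the kind of deviation the rough alignment is meant to track.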

4.2 Distance vectors

The DK-vec algorithm receives as input the two tokenised texts, where,

in our case, each token is represented by its lemma, as described in the

previous section. For each word w in these texts the algorithm produces a

distance vector, $D_w = \langle d_1^w, \ldots, d_n^w \rangle$, which represents the relative distances between subsequent occurrences of w. $d_i^w$, the value in the i'th position of $D_w$, is the relative distance, in word tokens, between the i'th occurrence of w and the preceding one. $d_1^w$ is the distance between w’s first occurrence and the beginning of the file.
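
A minimal sketch of this construction is given below. The function name `distance_vectors` is hypothetical, and counting positions from 1 (so that the first entry equals the position of the first occurrence) is an assumption about how the distance from the beginning of the file is measured.

```python
from collections import defaultdict


def distance_vectors(tokens):
    """Map each word to the list of gaps between its consecutive occurrences."""
    last_position = {}
    vectors = defaultdict(list)
    for position, word in enumerate(tokens, start=1):
        # For a first occurrence the gap is measured from the start of the text.
        vectors[word].append(position - last_position.get(word, 0))
        last_position[word] = position
    return dict(vectors)


full_text = "x a b c x d e x f g h x".split()
cut_text = "x a b c x x f g h x".split()  # the segment "d e" has been deleted

print(distance_vectors(full_text)["x"])  # [1, 4, 3, 4]
print(distance_vectors(cut_text)["x"])   # [1, 4, 1, 4]
```

The toy example also shows the robustness to deletions: removing the segment changes only the one entry that spans the deletion, whereas all later absolute positions of x would have shifted.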

4.2.1 Language Proportion Coefficient

Distance vectors are intended to capture, in a manner robust for deletions,

the similar distribution of corresponding source and target words throughout

the two parallel texts. The DK-vec method, as described below, assumes that

corresponding text fragments will be of similar length, and accordingly

vector entries of corresponding source and target positions will have similar

values. However, this assumption does not hold well for pairs of languages

that differ in their typical text length. For some language pairs parallel text

segments can have a significantly different number of tokens. Fung and

McKeown have already remarked that the English part of their text was

about 1.3 times larger than its Japanese translation. This difference exists

also for Hebrew-English parallel texts and was found to deteriorate the

quality of DK-vec output.

To overcome this problem, we normalised the distance values within the

vectors of one language by a Language Proportion Coefficient (LPC), the

typical proportion between the lengths of parallel segments in the two

languages. For our type of Hebrew-English texts, the length of Hebrew

segments is approximately 0.75 times that of their English equivalents.

Developing an automatic method for tuning the LPC for any given parallel

text may be an interesting issue for further research.
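
The normalisation amounts to a simple rescaling of one language's distance vectors, as in the sketch below. The function name `normalise_by_lpc` and the choice to scale the English vectors down to the Hebrew scale (rather than the Hebrew vectors up) are assumptions; only the value 0.75 comes from the text.

```python
LPC_HEBREW_OVER_ENGLISH = 0.75  # typical length ratio reported for these texts


def normalise_by_lpc(vector, lpc):
    """Rescale the distances of one language so they are comparable to the other's."""
    return [distance * lpc for distance in vector]


english_vector = [40, 120, 8]
print(normalise_by_lpc(english_vector, LPC_HEBREW_OVER_ENGLISH))
# [30.0, 90.0, 6.0]
```

After rescaling, an English gap of 40 tokens is compared with Hebrew gaps of about 30 tokens, which is the length a parallel Hebrew segment would typically have.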

4.3 Generating a Core Bilingual Lexicon

4.3.1 Candidate translation pairs

DK-vec evaluates the potential degree of correspondence between source

and target words by a dynamic programming algorithm that matches their

distance vectors. This is a rather costly process, which, to save computation

time, is applied only to candidate pairs of source and target words that are

likely to yield good matches. Candidate pairs are determined by applying

two simple and easy-to-compute tests to each pair of source and target

words, s and t.

The first filter requires that the ratio between the frequencies of the two

words will not exceed a threshold, which was set in our experiments to 2.

Applying this filter is suitable since the vector-matching algorithm yields

high scores for pairs of words that are used to translate each other in most of


their occurrences. The filter also requires that each frequency is greater than

2, since matching low frequency words is unreliable.

The second filter tests the likelihood that the values contained in the two

vectors are similar, using the following score:

$$
\varepsilon(s,t) = \sqrt{(m_{D_s} - m_{D_t})^2 + (\sigma_{D_s} - \sigma_{D_t})^2}
$$

where $m$ and $\sigma$ denote the mean and standard deviation of each vector.

In their paper, Fung and McKeown (1997) suggest that vector pairs

whose distance exceeds 200 should be filtered. We found this threshold

appropriate and used it also in our experiments.
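
The two filters can be sketched as follows. Several points are assumptions rather than details given in the text: the score is taken to be the Euclidean distance between the (mean, standard deviation) pairs of the two vectors, the population standard deviation is used, a word's frequency is taken to be the length of its distance vector, and all function and constant names are hypothetical.

```python
from math import sqrt
from statistics import mean, pstdev

MAX_FREQUENCY_RATIO = 2  # threshold used in the experiments
MIN_FREQUENCY = 3        # each frequency must be greater than 2
MAX_DISTANCE = 200       # threshold suggested by Fung and McKeown (1997)


def passes_frequency_filter(ds, dt):
    """First filter: both words frequent enough and of comparable frequency."""
    fs, ft = len(ds), len(dt)  # one vector entry per occurrence
    if fs < MIN_FREQUENCY or ft < MIN_FREQUENCY:
        return False
    return max(fs, ft) / min(fs, ft) <= MAX_FREQUENCY_RATIO


def vector_distance(ds, dt):
    """Second filter's score: distance between (mean, std. deviation) pairs."""
    return sqrt((mean(ds) - mean(dt)) ** 2 + (pstdev(ds) - pstdev(dt)) ** 2)


def is_candidate(ds, dt):
    """A pair goes on to dynamic-programming matching only if both filters pass."""
    return passes_frequency_filter(ds, dt) and vector_distance(ds, dt) <= MAX_DISTANCE


print(is_candidate([10, 20, 30], [12, 18, 33]))        # True
print(is_candidate([1000, 2000, 3000], [10, 20, 30]))  # False
```

Both tests need only the vector length, mean and standard deviation, so they can be evaluated for every source-target pair before the much costlier vector matching is attempted.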

4.3.2 Distance vectors matching cost

DK-vec finds the correspondence between a source word s and its

translation, a target word t, by identifying the high similarity of their

corresponding distance vectors Ds and Dt . The algorithm is likely to identify

such correspondence when the two words are consistently translated by each

other, that is, most occurrences of s are translated by t, and vice versa. In this

case we expect a similar distribution of their occurrences in the text, leading

to high similarity of their distance vectors.

The two distance vectors are considered similar if it is possible to match

(pair-wise) many of their positions, such that the distance values in matching

positions are similar. To justify this reasoning, suppose that two consecutive

occurrences of s, i-1 and i, are translated by the consecutive occurrences j-1

and j of t. The corresponding distance values, d_i^s and d_j^t, measure the lengths
of the corresponding source and target fragments between the two
consecutive occurrences of s and t. These two corresponding fragments are
expected to be of similar length, hence we expect the values d_i^s and d_j^t to be
similar (|d_i^s − d_j^t| is small).

When many consecutive occurrences of s correspond to consecutive

occurrences of t, there are many corresponding pairs in the two distance

vectors that can be matched with each other. Non matching positions are

likely to represent occurrences of s that were not translated by t, and vice

versa. A cost function is defined to quantify the best possible matching

between the two vectors. The cost function is defined recursively, where the

notation C(i,j) represents the cost of matching the first i positions in Ds with

the first j positions in Dt:5

5

This type of cost function is typical for dynamic programming problems. Refer to

(Cormen et al., 1990, chapter 16) for a good introduction to dynamic programming.

Moreover, this cost function, and the matching problem it induces, are analogous to the


\[ C(0,0) = 0, \qquad d^s_0 = 0, \qquad d^t_0 = 0 \]

\[ C(i,j) = |d^s_i - d^t_j| + \min \left\{ \begin{array}{ll} \text{(i)} & C(i-1,\, j-1) \\ \text{(ii)} & C(i-1,\, j) \\ \text{(iii)} & C(i,\, j-1) \end{array} \right. \]

Case (i) corresponds to matching the i'th occurrence of s with the j'th occurrence of t. The cost of matching these occurrences is defined as the difference between the corresponding distance values, so that occurrences whose distance values are as similar as possible are preferred. To this cost we add, recursively, the cost of matching all preceding occurrences of s and t.

Case (ii) corresponds to deciding not to match the i'th occurrence of s with any occurrence of t. The cost of this decision is defined to be the same as if we were matching the two occurrences; otherwise the cost function would end up preferring to avoid matches altogether (finding an optimal cost for this case might be an issue for further investigation). This time, however, we have to recursively add the cost of matching the preceding occurrences of s with all the first j occurrences of t.

Case (iii) is symmetric to case (ii), corresponding to a decision not to match the j'th occurrence of t. Notice that any of the cases (i)–(iii) becomes irrelevant if it yields a negative argument for C. Notice also that the method yields only monotonic matching, not allowing matches to “cross” each other.

4.3.3

Computing matching cost

The dynamic programming procedure, MATCH(Ds,Dt), computes the

(minimal) cost of matching the two distance vectors. It maintains a matrix C,

whose dimensions are (Ls + 1) × (Lt + 1), where Ls and Lt are the lengths of

the two input vectors. During computation, each cell (i,j) of the matrix is

assigned with the minimal cost of matching the first i occurrences of s with

the first j occurrences of t, which is computed according to the matching cost

function. The additional row and column represent the pseudo-position 0 in

both vectors. By the end of the computation, the desired optimal cost is

found in C(Ls, Lt).

The algorithm maintains another matrix of the same size, denoted by A.

Each cell in A is assigned with one of the values (i), (ii) or (iii), which

represents the matching decision (action) made for the corresponding cell in

problem of finding the minimal edit distance between two strings (Ukkonen, 1983), in

which the charged cost is typically 0 for a match operation and 1 otherwise.


C (as defined above for the three cases of the cost function). This matrix is

later used to reconstruct the optimal matching decisions, which specify the

pairs of matched occurrences of s and t.

MATCH(Ds, Dt)
    C(0, 0) ← 0; d^s_0 ← 0; d^t_0 ← 0
    for i ← 0 to Ls do
        for j ← 0 to Lt do
            if (i = 0 AND j = 0) continue      (cell (0, 0) is already initialised)
            min_cost ← ∞
            if (i > 0 AND j > 0)
                min_cost ← C(i – 1, j – 1)
                A(i, j) ← (i)
            if (i > 0 AND C(i – 1, j) < min_cost)
                min_cost ← C(i – 1, j)
                A(i, j) ← (ii)
            if (j > 0 AND C(i, j – 1) < min_cost)
                min_cost ← C(i, j – 1)
                A(i, j) ← (iii)
            C(i, j) ← min_cost + | d^s_i – d^t_j |
    return C(Ls, Lt) / (Ls + Lt)

The inner loop of the procedure computes the minimal matching cost for

each matrix cell. Notice that a cell for which A(i, j) = (i) represents a pair of

occurrences that were matched by the algorithm.

The accumulative matching cost is normalised by the sum of the lengths

of the two vectors, which equals the sum of the corresponding word

frequencies. This is done to enable a comparison between the costs of

matching pairs of words with different frequencies.
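The procedure can be rendered in Python roughly as follows. This is a sketch rather than the original implementation: the names are hypothetical, the action labels are plain strings, both vectors are assumed to be non-empty, and cell (0, 0) is skipped inside the loop so that its initial value is preserved.

```python
import math

def match(d_s, d_t):
    """Return (normalised matching cost, action matrix) for two distance vectors."""
    ls, lt = len(d_s), len(d_t)
    ds = [0] + list(d_s)          # index 0 is the pseudo-position with distance 0
    dt = [0] + list(d_t)
    cost = [[math.inf] * (lt + 1) for _ in range(ls + 1)]
    action = [[None] * (lt + 1) for _ in range(ls + 1)]
    cost[0][0] = 0
    for i in range(ls + 1):
        for j in range(lt + 1):
            if i == 0 and j == 0:
                continue          # already initialised
            best = math.inf
            if i > 0 and j > 0:                      # case (i): match i with j
                best, action[i][j] = cost[i - 1][j - 1], "i"
            if i > 0 and cost[i - 1][j] < best:      # case (ii): leave i unmatched
                best, action[i][j] = cost[i - 1][j], "ii"
            if j > 0 and cost[i][j - 1] < best:      # case (iii): leave j unmatched
                best, action[i][j] = cost[i][j - 1], "iii"
            cost[i][j] = best + abs(ds[i] - dt[j])
    return cost[ls][lt] / (ls + lt), action
```

Two identical vectors yield a cost of 0. Matching [10, 20, 30] against [10, 25, 30] yields an accumulated cost of 5, that is, 5/6 after normalisation by the sum of the vector lengths.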

Normalised matching costs are computed for all candidate translation

pairs. For each source word s a corresponding target word t is chosen for

which the matching cost with s is minimal. The two words are added to the

core bilingual lexicon if their normalised matching cost is lower than a

threshold, which was set to 60 in our experiments.
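The selection step can be sketched as below, assuming the normalised costs have already been computed and stored in a dictionary keyed by (source word, target word). The function name and the example words are hypothetical.

```python
def build_core_lexicon(costs, threshold=60.0):
    """Pick, for each source word, the target word with minimal cost.

    costs maps (s, t) candidate pairs to their normalised matching cost.
    A pair enters the lexicon only if its cost is lower than the threshold.
    """
    best = {}
    for (s, t), cost in costs.items():
        if s not in best or cost < best[s][1]:
            best[s] = (t, cost)
    return {s: t for s, (t, cost) in best.items() if cost < threshold}
```

Given the costs {("bayit", "house"): 12.0, ("bayit", "home"): 30.0, ("sefer", "book"): 75.0}, only the pair ("bayit", "house") is kept: "home" loses to a cheaper competitor and "book" exceeds the threshold.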

4.4

Generating the Rough Alignment

4.4.1

Recovering connections for lexicon word pairs

The rough alignment is based on the set of all pairs of matching positions

for the core lexicon words. These matching positions are encoded in the


matrix A for each word pair during matching cost computation. The

following procedure, applied to each word pair s and t in the core lexicon,

recovers these matching pairs of positions and adds them as connections to

the rough alignment:

RECOVERMATCHES(s, t, A)
    i ← Ls; j ← Lt
    while (i > 0 AND j > 0) do
        case A(i, j) of
            (i):   Add a connection <i,j> to the initial rough alignment
                   i ← i – 1
                   j ← j – 1
            (ii):  i ← i – 1
            (iii): j ← j – 1
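A Python sketch of the backtracking step is given below. It assumes that the action matrix stores the strings "i", "ii" and "iii", and that the occurrence indices are mapped to text positions through two lists of occurrence positions (pos_s and pos_t); these names and this mapping are assumptions made for the illustration.

```python
def recover_matches(action, pos_s, pos_t):
    """Trace the action matrix back from its last cell and collect matched positions.

    pos_s[k] and pos_t[k] are the text positions of the (k+1)-th occurrences
    of the source and target word.
    """
    i, j = len(pos_s), len(pos_t)
    connections = []
    while i > 0 and j > 0:
        if action[i][j] == "i":       # occurrences i and j were matched
            connections.append((pos_s[i - 1], pos_t[j - 1]))
            i -= 1
            j -= 1
        elif action[i][j] == "ii":    # occurrence i of s left unmatched
            i -= 1
        else:                          # occurrence j of t left unmatched
            j -= 1
    connections.reverse()              # return in ascending text order
    return connections
```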

4.4.2

Filtering and interpolating the rough alignment

After applying RECOVERMATCHES for all word pairs in the core lexicon,

the rough alignment contains a large set of connections, which were

computed independently for each word pair. At this stage it is necessary to

filter out some of the connections, to obtain a coherent alignment path. Two

types of filters are applied, ensuring that the alignment path is monotonic (no

crossing connections) and reasonably smooth.

First, the list of all connections, each of them having the form <i,j>

where i and j are matched positions in the source and target texts, is sorted

by source position (ascending i's).6 Monotonicity is obtained by scanning the

list and eliminating every connection <i,j> for which there is some

preceding connection, <i',j’>, such that j < j’ (the two connections are

“crossing”). While in principle crossing connections are possible, they would

typically occur only within a small distance from each other, due to

differences in word order between the two texts. Identifying crossing

connections of this type requires a much higher level of detail and accuracy

than available by the rough alignment, in which crossing connections are

usually due to erroneous connections.
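The monotonicity filter can be sketched as follows. One detail is an assumption: a connection is compared only with connections that have already been kept, so that a single erroneous connection with a very large target position does not eliminate everything after it.

```python
def enforce_monotonicity(connections):
    """Drop connections that cross an earlier kept connection.

    connections is an iterable of (i, j) pairs of absolute source and
    target positions; the result is sorted by source position.
    """
    kept = []
    max_j = float("-inf")
    for i, j in sorted(connections):
        if j < max_j:
            continue                  # crosses a preceding connection
        kept.append((i, j))
        max_j = j
    return kept
```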

Aggregating the connections that were obtained from all lexicon word

pairs enables smoothing the rough alignment path by eliminating

connections that do not fit well with other neighbouring connections. Fung

and McKeown (1997) suggest a difference-based filtering method that

6

Notice that here i and j denote absolute source and target positions in the two texts,

unlike their role in the preceding subsections.


eliminates a connection <i, j>, immediately preceded by the connection

<i’, j’>, if

|(i – i’ ) – (j – j’)| > MAX_DIFF,

where MAX_DIFF is a relatively small threshold, say 500. A large difference

exceeding the threshold is assumed to indicate an erroneous connection.

We used a somewhat modified method, which was inspired by the method

in (Melamed, 1997a). Melamed observed that if we graphically depict the

alignment path then parallel text segments that do not contain large deletions

in one of the texts have more-or-less constant slopes, which are similar to the

average slope of the entire alignment path (the “diagonal”). Large local

deviations from that slope appear when there are deletions of large text

segments. Based on this observation, Melamed developed an algorithm that

tracks these slopes and aims to cope with such deletions. While we have not

yet tried Melamed’s algorithm, we currently apply a simple filter that

eliminates <i,j> if

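\[ \frac{|\mathit{SegSlope} - \mathit{DiagSlope}|}{\mathit{DiagSlope}} > \mathit{MAX\_DEV}, \]

where

\[ \mathit{SegSlope} = \frac{i - i'}{j - j'}, \qquad \mathit{DiagSlope} = \frac{L_S}{L_T}. \]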

This filter assumes that a large deviation of the slope of the line

connecting <i’, j’> and <i, j> from the slope of the main diagonal

corresponds to an erroneous connection. Notice that both filters above do not

cope well with large deletions, which might be better addressed by

Melamed’s method.7
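The slope-based filter might be implemented along the following lines. The value of MAX_DEV is not given in the text, so max_dev is left as a parameter. Two further assumptions are made: each connection is compared with the last connection that was kept, and a connection with the same target position as its predecessor (an undefined slope) is dropped.

```python
def slope_filter(connections, len_source, len_target, max_dev):
    """Drop connections whose local slope deviates too much from the diagonal.

    connections must already be sorted by source position.
    """
    diag_slope = len_source / len_target
    kept = []
    for i, j in connections:
        if kept:
            prev_i, prev_j = kept[-1]
            if j == prev_j:
                continue              # slope undefined (assumed to be an error)
            seg_slope = (i - prev_i) / (j - prev_j)
            if abs(seg_slope - diag_slope) / diag_slope > max_dev:
                continue
        kept.append((i, j))
    return kept
```

For two texts of equal length and max_dev = 0.5, the connection (20, 60) following (10, 10) has a segment slope of 0.2 and is removed, while (30, 30) is then compared with (10, 10) and kept.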

Applying the filters above yields a smooth and monotonic alignment

path, whose connections, considered as “anchor points”, cover a subset of

the occurrences of the core lexicon words. To make the rough alignment

complete, as required by the next step of the process, a connection is

produced for each source position i that is not yet covered by the set of

anchor points. The resulting connection, <i,j>, is obtained by linear

interpolation between the two nearest anchor points surrounding i. This

7

In fact, our corpus contained a couple of large segments, of total length of about 1800

words, which were deleted in one of the texts. None of the filtering methods we tried so

far was able to cope with these deletions, so we had to manually remove the non-translated segments from the corpus. The results reported in this chapter refer to the

modified corpus. It is interesting to note that it was easy to manually identify the

deleted segments when observing the DK-vec alignment path graph, as in these areas

the alignment path was very noisy. This observation may be exploited by future work

for automatic detection of such deletions.


yields the initial rough alignment I, which is used as input by the detailed

word alignment method of the next section. In the following we use the

notation I(j) to denote the source position i that is aligned with j by the initial

rough alignment.
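The interpolation step can be sketched as below. The handling of source positions before the first and after the last anchor point is not described in the text; here the corners of the text pair are added as extra anchors, which is an assumption, as are the rounding to the nearest integer and the function name. The sketch produces a target position for every source position; deriving the lookup I(j) from these connections is not shown.

```python
def interpolate_alignment(anchors, n_source, n_target):
    """Return a list whose entry i is the target position connected to source position i.

    anchors is a monotonic list of (i, j) anchor points (0-based positions).
    """
    points = sorted(anchors)
    if not points or points[0][0] > 0:
        points.insert(0, (0, 0))                          # assumed start corner
    if points[-1][0] < n_source - 1:
        points.append((n_source - 1, n_target - 1))       # assumed end corner
    target_of = [0] * n_source
    for (i0, j0), (i1, j1) in zip(points, points[1:]):
        for i in range(i0, i1 + 1):
            frac = (i - i0) / (i1 - i0) if i1 > i0 else 0.0
            target_of[i] = round(j0 + frac * (j1 - j0))
    return target_of
```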

4.5

Evaluation

The quality of the initial rough alignment was evaluated on a random

sample of 424 manually aligned source positions. Figure 1 shows a segment

of the rough alignment path produced by the DK-vec algorithm and the

manual alignment for the sample points falling in this segment. It can be

seen that most sample points fall very close to the obtained alignment path.

Figure 2 presents the distribution of offsets (distances) between the target

positions for the rough alignment connections and the correct target

positions, for the sample points. Notice that 19.1% of the sample points

coincide with the connections of the rough alignment (0 offset). Table 3

gives the details of the offset distribution, presenting the accumulative offset

count and percentage for each offset range. It shows that about 90% (89.9%) of the sample
points are within a distance of ±50 tokens from the rough alignment, which
provides a plausible input for the detailed word alignment program.

5.

DETAILED WORD-LEVEL ALIGNMENT

The detailed word alignment phase includes two steps (Dagan et al.,

1993). In the first, a probabilistic bilingual lexicon is created for the

particular pair of texts, based on a probabilistic model that is a modification

of Model 2 in (Brown et al., 1993). The parameters of the model are

estimated in an iterative manner by the Expectation-Maximisation (EM)

algorithm. In the second step, an optimal detailed alignment is found, using a

dynamic programming approach that takes into account the dependency

between adjacent connections.

[Figure 1: plot of target position (vertical axis) against source position (horizontal axis), showing the anchor points path and the sample points.]

Figure 1. Rough alignment path (solid line) and correct alignments for sample points (source
positions 4000–6000)

[Figure 2: histogram of the percentage of sample points (vertical axis) per offset (horizontal axis).]

Figure 2. Distribution of offsets between correct and rough alignment for all sample points


Table 3. Accumulative offset distribution for sample points

Maximal offset    Count    %
0                 81       19.1
±10               279      65.8
±20               325      76.7
±30               350      82.5
±40               373      88.0
±50               381      89.9
±60               395      93.2
±70               397      93.6
±80               400      94.3
±90               412      97.2
±100              422      99.5
±110              424      100.0

5.1

Constructing a Detailed Probabilistic Bilingual

Lexicon

Given the initial rough alignment I between the source and target texts as

input, the next step is to construct a detailed probabilistic bilingual lexicon

that corresponds to the pair of texts.

The lexicon construction method is based on a probabilistic model, which

represents the probability of the target text given the source text based on the

notion of alignment.8 An alignment, a, is defined as a set of connections,

where a connection <i,j> denotes that position i in the source text is aligned

with position j in the target text. The assumed model is directional, in that it

seeks to align each target position j (unless filtered out, see below) with

exactly one source position. Several target positions, though, may be aligned

with the same source position, and some source positions may be left

unaligned, since no target position was aligned with them. According to the

model, the probability of the target text T given the source text S is

represented by

\[ \Pr(T \mid S) = \sum_{a} \Pr(T, a \mid S), \]

where a ranges over all possible alignments.

The model further assumes that all connections within an alignment a are

independent of each other, and that the probability of a connection <i,j>

8

We describe the probabilistic model rather briefly here, at the level needed to motivate

and present the procedure for constructing the probabilistic lexicon. For further details

refer to (Brown et al., 1993) and (Dagan et al., 1993).


depends only on two types of parameters, which refer to the identity of the

two aligned words and to their corresponding positions in the text:

1. A translation probability, pT(t|s), specifying the probability that a

particular word t of the target language would be aligned with the

word s of the source language. This parameter captures the

likelihood that these two particular words will be used to translate

each other in the given texts.

2. An offset probability, pO(k), where k=i-I(j). I(j) is the source

position that was aligned with the target position j according to the

initial rough alignment I. k is thus the offset between the source

position aligned with j according to the hypothesised alignment a

and the source position determined by the initial rough alignment I.

This parameter captures the assumption that the input rough

alignment I provides a good approximation for the “true” alignment.

Thus, it is expected that pO(k) will get smaller as k increases. As an

input “windowing” constraint, the model assumes that pO(k)=0 for

|k|>w, where w is a window-size parameter. This constraint imposes

that the source position aligned with j must fall within a distance of

w words from I(j). Typical values of w might range between 20 and

50 words, depending on the quality and granularity of the initial

rough alignment. In the experiments reported in this chapter we used

w=50.

According to the probabilistic model, the probability of a connection

<i,j>, which equals the sum of the probabilities of all alignments that

contain this connection, is represented by

\[ \Pr(\langle i,j \rangle) = \frac{W(\langle i,j \rangle)}{\sum_{i'} W(\langle i',j \rangle)}, \qquad \text{where } W(\langle i,j \rangle) = p_T(t_j \mid s_i) \cdot p_O(i - I(j)) \]

and tj and si are the target and source words in positions j and i,

correspondingly. i' ranges over all source positions (in the allowed window).

The equation above stems from the independence of connections within

alignments and from the symmetrical structure of the model. It states that the

probability of the connection aligning the target position j with the source

position i is the ratio between the probabilistic “weight” of this connection,
W(<i,j>), and the total “weight” of all possible connections for j (recall that

only connections that fall within the window of ±w words from I(j) are

considered possible). This “weight” is simply the product of the two


parameters which are related to the connection, reflecting the assumed

independence between them.
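As an illustration, the connection probabilities for given parameter values can be computed as follows. The data layout is an assumption: p_t maps (target word, source word) pairs to translation probabilities, p_o maps offsets to offset probabilities, and rough[j] holds I(j).

```python
def connection_probabilities(source, target, rough, p_t, p_o, w):
    """Return {(i, j): Pr(<i,j>)} for all connections inside the window of each j."""
    probs = {}
    for j, t in enumerate(target):
        weights = {}
        lo = max(0, rough[j] - w)
        hi = min(len(source), rough[j] + w + 1)
        for i in range(lo, hi):
            # W(<i,j>) = pT(t_j | s_i) * pO(i - I(j))
            weight = p_t.get((t, source[i]), 0.0) * p_o.get(i - rough[j], 0.0)
            if weight > 0.0:
                weights[i] = weight
        total = sum(weights.values())
        for i, weight in weights.items():
            probs[(i, j)] = weight / total
    return probs
```

By construction, the probabilities of all connections that share the same target position j sum to 1.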

The Maximum Likelihood Estimator (MLE) is used to estimate the

values of the model parameters, by applying the Expectation-Maximisation

(EM) algorithm (Baum, 1972; Dempster et al., 1977). The maximum

likelihood estimates of the model parameters satisfy the following equations:

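\[ p_T(t \mid s) = \frac{\sum_{i,j:\; t_j = t,\; s_i = s} \Pr(\langle i,j \rangle)}{\sum_{i,j:\; s_i = s} \Pr(\langle i,j \rangle)} \]

\[ p_O(k) = \frac{\sum_{i,j:\; i - I(j) = k} \Pr(\langle i,j \rangle)}{\sum_{i,j} \Pr(\langle i,j \rangle)} \]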

Both equations estimate the parameter values as relative “probabilistic”

counts. The first estimate is the ratio between the probability sum for all

connections aligning s with t and the probability sum for all connections

aligning s with any word. The second estimate is the ratio between the

probability sum for all connections with offset k and the probability sum for

all possible connections.

Parameter values are estimated in an iterative manner using the EM

(Expectation-Maximisation) algorithm (Baum, 1972; Dempster et al., 1977).

First, uniform values are assigned to all possible parameters. The windowing

constraint is applied by setting pO(k)=0 for |k|>w and setting a uniform value

for the legitimate k’s. Then, in the first step of each iteration, we compute

Pr(<i,j>) for all possible connections (within the allowed windows). Having

probability values for the connections, the second step of each iteration

computes new estimates for all parameters pT(t|s) and pO(k).

The iterations are repeated until the estimates converge, or until a prespecified maximum number of iterations is reached. In the experiments

reported here a fixed number of 10 iterations was used. Notice that the EM

algorithm does not guarantee convergence to the “true” maximum likelihood

estimates, but rather to estimates corresponding to a local maximum of the

likelihood function. Yet, empirically the algorithm obtains useful parameter

values, which represent reasonable translation and offset probabilities (as

known to happen in many other empirical application of the EM algorithm).
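The iteration can be sketched compactly in Python. This is an illustrative reading of the procedure, not the original implementation: the names are hypothetical, the uniform initial translation values are represented by the constant 1.0 (the normalisation in the first step makes the actual constant irrelevant), and no filtering is applied.

```python
from collections import defaultdict

def em_lexicon(source, target, rough, w, iterations=10):
    """Estimate pT(t|s) and pO(k); rough[j] is the source position I(j)."""
    p_t = None                                   # None stands for the uniform start
    p_o = {k: 1.0 / (2 * w + 1) for k in range(-w, w + 1)}
    for _ in range(iterations):
        count_t = defaultdict(float)             # probabilistic counts per (t, s)
        total_s = defaultdict(float)             # probabilistic counts per s
        count_o = defaultdict(float)             # probabilistic counts per offset k
        total = 0.0
        # Step 1: compute Pr(<i,j>) for every connection inside the window.
        for j, t in enumerate(target):
            lo = max(0, rough[j] - w)
            hi = min(len(source), rough[j] + w + 1)
            weights = {}
            for i in range(lo, hi):
                trans = 1.0 if p_t is None else p_t.get((t, source[i]), 0.0)
                weights[i] = trans * p_o.get(i - rough[j], 0.0)
            norm = sum(weights.values())
            if norm == 0.0:
                continue
            for i, weight in weights.items():
                pr = weight / norm
                if pr > 0.0:
                    count_t[(t, source[i])] += pr
                    total_s[source[i]] += pr
                    count_o[i - rough[j]] += pr
                    total += pr
        if total == 0.0:
            break
        # Step 2: re-estimate both parameter sets as relative counts.
        p_t = {pair: c / total_s[pair[1]] for pair, c in count_t.items()}
        p_o = {k: c / total for k, c in count_o.items()}
    return p_t or {}, p_o
```

After every iteration the translation probabilities of each source word sum to 1, as do the offset probabilities.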

A few filters were applied to facilitate parameter estimation. At

initialisation, we applied the same frequency-based filter used for the DK-vec algorithm: requiring that the ratio between the frequencies of the two

words will not exceed the threshold 2, and that each individual frequency is

greater than 2. PT(t|s) for pairs not satisfying the threshold was set to 0. In

addition, after each iteration, all estimates PT(t|s) which were smaller than a

threshold (0.01) were set to 0. After the last iteration, estimates PT(t|s)< 0.1


were also set to 0. The output probabilistic bilingual lexicon thus contains all

pairs of source and target words, s and t, with positive PT(t|s).
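The threshold filters amount to a simple pruning of the translation table. In the sketch below, a pair that is set to 0 is simply removed from the dictionary; whether the remaining estimates are renormalised is not stated in the text, so no renormalisation is done here.

```python
def prune_translations(p_t, threshold):
    """Remove (t, s) pairs whose estimated probability is below the threshold."""
    return {pair: p for pair, p in p_t.items() if p >= threshold}
```

Under this reading, the function would be called with threshold 0.01 after each iteration and with threshold 0.1 after the last one.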

Table 4 shows a sample of Hebrew words and their most likely

translations in the probabilistic bilingual lexicon, as obtained for our

Hebrew-English text pair. Note that the correct translations are usually near

the front of the list, though there is a tendency for the program to be

confused by words that appear frequently in the context of the correct

translation.

The probabilistic bilingual lexicon is an intermediate resource in our

system, which is used as input for the final detailed word alignment step, as

described below. As such, we have not conducted any formal objective

evaluation of its quality. However, this lexicon may also be a useful resource

on its own, for example in semi-automatic construction of bilingual

dictionaries. Optimising and evaluating the bilingual lexicon quality, as a

standalone resource, may be an interesting issue for further research.

Table 4. An example of the probabilistic dictionary. Hebrew words are transcribed to Latin
letters. Probability of each translation is given in parentheses

Hebrew lemma (s)                           Translations (t) & probabilities (PT(t|s))
SMVS (Shimmush, use (noun))                use (0.6), take (0.24)
SMYRH (Shemira, guarding (noun))           safety (0.49), product (0.27), per (0.13)
LBVA (Lavo, to-come)                       execution (0.37), accord (0.27), replace (0.25)
LHYVT MVBA (Lihyot Muva, to-be-brought)    bring (0.51), serve (0.27)
BHYNH (Behina, examination / test)         Examination (0.67), works. (0.12) (see footnote 9)
NDRS (Nidrash, required (adj.))            require (0.61), construct (0.25)
NZQ (Nezeq, damage (noun))                 damage (0.33), entitle (0.15), claim (0.14), right (0.13)

5.2

Finding the Optimal Alignment

The EM algorithm produces two sets of maximum likelihood probability

estimates, translation probabilities and offset probabilities. One possibility

for selecting the preferred alignment would be simply to choose the most

probable alignment according to these maximum likelihood probabilities,

with respect to the assumed probabilistic model. This corresponds to

selecting the alignment a that maximises the product:

9

This token is a result of a tokenisation error. Two tokens should have been identified —

“works” and “.”.


\[ \prod_{\langle i,j \rangle \in a} W(\langle i,j \rangle) \]

Computing the weights of each connection by this formula is independent

of all other connections. Thus, it is easy to construct the optimal alignment

by independently selecting for each target position j the source position i

which falls within the window I(j) ± w and maximises W(<i,j>).
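This independent selection can be sketched as follows, under the same assumed data layout as before (p_t keyed by (target word, source word), p_o keyed by offset, rough[j] holding I(j)). Target positions with no connection of positive weight are left out of the result.

```python
def independent_alignment(source, target, rough, p_t, p_o, w):
    """For each target position j, pick the source position i maximising W(<i,j>)."""
    alignment = {}
    for j, t in enumerate(target):
        best_i, best_weight = None, 0.0
        lo = max(0, rough[j] - w)
        hi = min(len(source), rough[j] + w + 1)
        for i in range(lo, hi):
            weight = p_t.get((t, source[i]), 0.0) * p_o.get(i - rough[j], 0.0)
            if weight > best_weight:
                best_i, best_weight = i, weight
        if best_i is not None:
            alignment[j] = best_i
    return alignment
```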

Unfortunately, this method does not model the dependence between

connections for target words that are near one another. For example, the fact

that the target position j was connected to the source position i will not

increase the probability that j+1 will be connected to a source position near

i. Instead, position j+1 will be aligned independently, where offsets are

considered relative to the initial alignment rather than to the detailed

alignment that is being constructed. The absence of such dependence can

easily confuse the program, mainly in aligning adjacent occurrences of the

same word, which are common in technical texts. The method described

below does capture such dependencies, based on dynamically determined

relative offsets.

To model the dependency between connections in an alignment, it is

assumed that the expected source position of a connection is determined

relative to the preceding connection in a, instead of relative to the initial

alignment.10 For this purpose, a’(j), the expected source position to be

aligned with j, is defined as a linear extrapolation from the preceding

connection in a:

\[ a'(j) = a(j_{prev}) + (j - j_{prev}) \frac{N_s}{N_t} \]

where jprev is the last target position before j which is aligned by a (in the

preceding connection in a), a(jprev) is the source position aligned with jprev by

a, and Ns and Nt are the lengths of the source and target texts. a'(j) thus

predicts that the distance between the source positions of two consecutive

connections in a is proportional to the distance between the two

corresponding target positions, where the proportion is defined by the

general proportion of the lengths of the two texts.
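The extrapolation is a one-line computation. The function name and the numbers in the example are hypothetical.

```python
def expected_source_position(prev_i, prev_j, j, n_source, n_target):
    """Linear extrapolation a'(j) from the preceding connection <prev_i, prev_j>."""
    return prev_i + (j - prev_j) * n_source / n_target
```

For instance, with texts of 750 source tokens and 1000 target tokens, if target position 120 is connected to source position 100, then the expected source position for target position 124 is 100 + 4 × 0.75 = 103.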

10

As described below, some of the target positions may be skipped and left unaligned,
yielding a partial alignment that contains connections only for a subset of the target
positions. Therefore, the connection preceding <i,j> in a may not have the target
position j-1 but rather a smaller position. The order of connections in a refers to their
target position.


Based on this modified model, the most probable alignment is the one

that maximises the following “weight”:

\[ W(a) = \prod_{\langle i,j \rangle \in a} W'(\langle i,j \rangle), \qquad \text{where } W'(\langle i,j \rangle) = p_T(t_j \mid s_i) \cdot p_O(i - a'(j)). \]

The offset probabilities for this model are approximated by using the same

estimates that were obtained by the EM algorithm for the previous model (as

computed relative to the initial alignment).

Finding the optimal alignment in the modified model is more difficult,

since computing W’(<i,j>) requires knowing the alignment point for jprev.

Due to the dependency between connections, however, it is not possible to

know the optimal alignment point for each j before identifying all

connections of the alignment.

To solve this problem a dynamic programming algorithm is applied. The

algorithm uses a matrix in which each cell corresponds to a possible

connection <i,j>. The cell is assigned with the optimal (maximal) “weight”

of an alignment for a prefix of the target text, up to position j, whose last

connection is <i,j>.11

A couple of extensions are required to make the algorithm practical. In

order to avoid connections with very low probability we require that

W’(<i,j>) exceeds a pre-specified threshold, T, which was set to 0.001. If the

threshold is not exceeded then the connection is not considered. If, due to

this threshold, no valid connection can be obtained for the target position j

then this position is dropped from the alignment.12

Because of the dynamic nature of the algorithm it risks “running away”

from the initial rough alignment, following an incorrect alignment path. To

avoid this risk, a’(j) is set to I(j) in two cases in which it is likely to be

unreliable:

11

As an implementation detail, notice that the actual matrix size maintained by the

algorithm can be Nt × k (rather than Nt × Ns), where k is a small constant corresponding to

the maximal number of viable (non-filtered) connections that may be found for a single

target position (see the filtering criterion below). In this setting, each cell encodes also

the source position to which it corresponds.

12 Because of this filter we found it easier to implement a “forward-looking” version of the

dynamic programming algorithm, rather than the more common “backward-looking”

version (as in the implementation of DK-vec). For a given cell in the matrix

corresponding to <i,jprev >, the program searches forward for a following target

position j with a viable connection, skipping positions for which no connection within

the allowed window passes the threshold.


1. a’(j) gets too far from the initial alignment: | a’(j) – I(j) | >w.

2. The distance between j and jprev is larger than w, due to failure to

align the preceding w words.
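The dynamic programming search, including the threshold and the two fallback cases above, might be sketched as follows. This is a simplified illustration under several assumptions that are not taken from the text: weights are accumulated as logarithms, a'(j) is rounded to an integer, candidate source positions are taken within ±w of a'(j), the texts are non-empty, and among all paths that reach the end of the target text the one with the largest weight is returned. The names are hypothetical.

```python
import math

def dp_alignment(source, target, rough, p_t, p_o, w, threshold=0.001):
    """Forward-looking dynamic programming over viable connections (sketch)."""
    n_s, n_t = len(source), len(target)
    ratio = n_s / n_t
    cells = [dict() for _ in range(n_t)]   # cells[j][i] = (log weight, previous cell)
    terminal = []                          # (log weight, last cell) of complete paths

    def extend(score, prev):
        """From cell prev, find the next target position with a viable connection."""
        start = 0 if prev is None else prev[0] + 1
        for j in range(start, n_t):
            expected = rough[j]            # fall back to I(j) unless a'(j) is reliable
            if prev is not None and j - prev[0] <= w:
                extrapolated = round(prev[1] + (j - prev[0]) * ratio)
                if abs(extrapolated - rough[j]) <= w:
                    expected = extrapolated
            found = False
            for i in range(max(0, expected - w), min(n_s, expected + w + 1)):
                weight = p_t.get((target[j], source[i]), 0.0) * p_o.get(i - expected, 0.0)
                if weight > threshold:
                    found = True
                    candidate = score + math.log(weight)
                    if i not in cells[j] or candidate > cells[j][i][0]:
                        cells[j][i] = (candidate, prev)
            if found:
                return                     # position j is aligned; later cells continue
        if prev is not None:
            terminal.append((score, prev)) # no further viable connection: path ends

    extend(0.0, None)
    for j in range(n_t):
        for i in list(cells[j]):
            extend(cells[j][i][0], (j, i))
    if not terminal:
        return {}
    _, cell = max(terminal)
    alignment = {}
    while cell is not None:                # follow the back-pointers
        alignment[cell[0]] = cell[1]
        cell = cells[cell[0]][cell[1]][1]
    return alignment
```

Target positions for which no connection passes the threshold do not appear in the returned mapping, which corresponds to dropping them from the alignment.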

An example of the output of the word alignment program is given in

Figure 3.

French: Le comité soumet à l'attention de la Commission d'experts pour l'application des conventions et recommandations les aspects législatifs des cas suivants :

English: The Committee draws the legislative aspect of the following cases to the attention of the Committee of Experts on the Application of Conventions and Recommendations :

[Figure 3: the arrows connecting the aligned words of the two sentences are not reproduced here.]

Figure 3. An example output of the detailed alignment program (for a French-English text

pair). Dashed arrows indicate the correct alignment where the output is wrong.

5.3

Evaluation

The detailed word alignment program was evaluated on the Hebrew-English text pair, using the same sample as for DK-vec (424 sample points).

The output alignment included connections for 70% of the sample target

positions, while for 30% of the target positions no viable connection was

obtained.

Figure 4 presents the distribution of offsets (distances) between the

source positions of the produced connections and the correct source


positions. Almost 52% of the output connections are exact hits.13 Table 5

gives the details of the offset distribution, presenting the accumulative offset

count and percentage for each offset range. It shows that about two-thirds of

the connections are within a distance ±10 tokens from the correct alignment.

The detailed alignment step depends to a certain extent on the accuracy of

the input rough alignment, and is unlikely to recover the correct alignment

when the input offsets are too large. It is clear, however, that the detailed

alignment succeeds in reducing the input offset significantly for a large

proportion of the positions.

6.

CONCLUSIONS AND FUTURE WORK

The project described in this chapter demonstrates the feasibility of a

practical comprehensive word alignment system for disparate language pairs

in general, and for Hebrew and English in particular. To achieve this goal, it

was necessary to avoid almost any assumption about the input texts and

language pair. It was also necessary to convert the texts to a stream of input

lemmas, a difficult task for Hebrew that was performed sufficiently well

using available Hebrew analysis tools. The project also demonstrates the

success of a hybrid architecture, combining two different algorithms to

obtain first an initial rough alignment and then a detailed word level

alignment. While system accuracy is still limited, it is good enough to be

useful for a variety of dictionary construction and translation assistance

tools.

Various issues remain open for future research. An obvious goal is to

improve system accuracy. In particular, it is desired to improve first the

rough alignment algorithm and its filtering criteria. Any improvement at this

stage will lead to improving the final detailed alignment, which relies

heavily on the rough alignment input. Such improvements are also necessary

in order to better handle large deletions in one of the texts. Another goal is to

extend the framework for aligning multi-word terms and phrases rather than

individual words only.

The current system does not use any input other than the given pair of

texts, aligning each input text pair independently. It would be useful to

extend this framework to work in an incremental manner, where the lexicons

13

Notice that the result figures in this evaluation should not be compared directly with

those for DK-vec. Here the evaluation refers to the connections produced, which cover

70% of the sample points, while the evaluation for DK-vec refers to an interpolated

output, which covers 100% of the sample points (in sync with the role it plays in our

system). The difference in quality between the two outputs is clear, though.


(and other parameters) obtained for some text pairs can be used when

aligning similar texts later. A related goal is to use information from external

lexicons and integrate it with the information that is acquired by the

alignment system.

[Figure 4 plot not reproduced; horizontal axis: Offset (-40 to 40); vertical axis: % (0 to 60)]

Figure 4. Distribution of offsets between correct and output alignment

Table 5. Accumulative offset distribution for obtained connections

Maximal offset   Count   %
0                125     51.9
±10              155     64.3
±20              182     75.5
±30              198     82.2
±40              216     89.6
±50              227     94.2
±60              231     95.9
±70              234     97.1
±80              236     97.9
±90              237     98.3
±100             238     98.8
±110             239     99.2
±120             240     99.6
±130             241     100.0
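The percentage column of Table 5 follows directly from the cumulative counts: each count is divided by the total of 241 connections. The following is a minimal sketch of that arithmetic; the function and variable names are illustrative and are not part of the system described in this chapter.

```python
# Cumulative connection counts from Table 5, for maximal offsets 0, ±10, ..., ±130.
max_offsets = list(range(0, 140, 10))
cumulative_counts = [125, 155, 182, 198, 216, 227, 231,
                     234, 236, 237, 238, 239, 240, 241]


def cumulative_percentages(counts):
    """Express each cumulative count as a percentage of the final total."""
    total = counts[-1]
    return [round(100.0 * c / total, 1) for c in counts]


def per_band_counts(counts):
    """Recover how many connections fall into each successive offset band."""
    return [counts[0]] + [b - a for a, b in zip(counts, counts[1:])]


percentages = cumulative_percentages(cumulative_counts)
bands = per_band_counts(cumulative_counts)

for offset, count, pct in zip(max_offsets, cumulative_counts, percentages):
    print(f"{offset:>4} {count:>4} {pct:>6.1f}")
```

The per-band differences make the concentration visible: 125 of the 241 connections have offset 0, and only 14 lie beyond an offset of ±50.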

The successful implementation of alignment systems calls for further

development of methods that utilise the data they produce. Most notably,

there is room for further research on creating and evaluating lexicons that are

based both on the actual alignment output and on the lexicons created during

the process.

ACKNOWLEDGEMENTS

We thank the Ben Gurion 2000 project and Hod Ami Publishers for

providing us with parallel Hebrew-English text pairs. We thank Yuval

Krymolowski and other members of the Information Retrieval and

Computational Linguistics Laboratory at Bar-Ilan University for fruitful

discussions and tool development. Some of the material in this chapter was

presented in tutorials by the third author at ACL and COLING 1996, and

was informally and briefly described by the first author at the first

SENSEVAL meeting in 1998. This research was supported in part by grant

498/95-1 from the Israel Science Foundation.

APPENDIX A: TRANSLITERATION

In order to give the reader a picture as faithful as possible of the issues involved, we

represent Hebrew words in up to three forms: a letter-by-letter transliteration (in capital letters)

as per the following table, the word’s pronunciation in italics, and its translation into English.

Table 6. Hebrew letters' transliteration

Name    Tr.   Name    Tr.   Name     Tr.   Name     Tr.   Name   Tr.
Aleph   A     Vav     V     Kaf      K     Ayin     A     Shin   S
Bet     B     Zayin   Z     Lamed    L     Pe       P     Tav    T
Gimel   G     Het     H     Mem      M     Tsadiq   C
Dalet   D     Tet     T     Nun      N     Qof      Q
He      H     Yod     Y     Samekh   S     Resh     R
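The transliteration table can be applied mechanically, one letter at a time. The sketch below is illustrative only: the names `TRANSLIT` and `transliterate` are hypothetical, and the treatment of the five word-final letter forms (mapped to the same symbol as the regular letter) is an assumption, although it is consistent with forms such as YVM and MSMK in Appendix B.

```python
# Latin symbols for the Unicode range U+05D0 (Aleph) .. U+05EA (Tav), in
# Unicode order. Unicode interleaves the five word-final forms (final Kaf,
# Mem, Nun, Pe, Tsadiq) with the regular letters; giving each final form the
# symbol of its regular letter is an assumption of this sketch.
_SYMBOLS = "ABGDHVZHTYKKLMMNNSAPPCCQRST"
TRANSLIT = {chr(0x05D0 + i): symbol for i, symbol in enumerate(_SYMBOLS)}


def transliterate(word: str) -> str:
    """Map each Hebrew letter to its Latin symbol; leave other characters as-is."""
    return "".join(TRANSLIT.get(ch, ch) for ch in word)


# Ayin, Bet, Vav, Dalet, He: the lemma glossed as "work" in Appendix B.
print(transliterate("\u05E2\u05D1\u05D5\u05D3\u05D4"))
```

The mapping is not reversible: Aleph and Ayin both map to A, He and Het to H, Tet and Tav to T, and Samekh and Shin to S, so a transliterated string does not always determine the Hebrew spelling.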

APPENDIX B: MORPHOLOGICAL VARIANTS

The following are examples of Hebrew/English corresponding lemmas and the

morphological variants of the Hebrew lemma which exist in the Hebrew/English corpus. The

frequency of each form is given in parentheses.

8066—ABVDH (Avoda, work) (410) work (367)

the-work (264), the-works (49), (a)-work (36), from-the-work (16), in-works / in-the-works /
in-the-works-of (10), in-(a)-work / in-the-work (9), works / works-of (8), to-(a)-work /
to-the-work (3), to-works / to-the-works / to-the-works-of (3), from-the-works (2),
that-the-works (2), and-the-work (2), that-(a)-work (1), and-the-works (1), that-the-work (1),
from-(a)-work (1), the-work-of (1), and-works / and-works-of (1)

17447—HVRAH (Horaà, (an)-instruction) (95) instruction (76)

instructions (29), (an)-instruction (15), the-instruction (10), that-in-the-instruction-of (7),
to-the-instructions / to-the-instructions-of (7), the-instructions (3), his-instructions (3),
and-to-the-instructions / and-to-the-instructions-of (3), and-in-the-instructions /
and-in-the-instructions-of (2), and-instructions / and-the-instructions-of (2), in-instructions /
in-the-instructions / in-the-instructions-of (2), in-(an)-instruction / in-the-instruction (2),
and-to-his-instructions (2), to-his-instructions (2), the-instruction-of (2), from-instructions /
from-the-instructions-of (1), and-the-instruction (1), to-(an)-instruction / to-the-instruction (1),
his-instruction (1)

632—YVM (Yom, (a)-day) (61) day (45)

(a)-day / the-day-of (24), from-(a)-day / from-the-day-of (14), days (12), in-(a)-day /
in-the-day-of (3), the-days (2), the-days-of (2), in-his-day (1), and-in-(a)-day /
and-in-the-day-of / and-in-the-day (1), and-in-(a)-day / and-in-the-day-of / and-in-the-day (1),
the-day (sometimes means today) (1)

18555—MSMK (Mismakh, (a)-document) (18) document (19)

the-documents (4), in-the-documents-of (2), (a)-document (2), and-the-documents (2),
from-the-documents (1), the-documents-of (1), the-documents-of (1), and-in-the-documents-of (1),
in-(a)-document / in-the-document-of / in-the-document (1), documents (1),
from-the-documents-of (1), in-documents / in-the-documents (1)
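In each entry above, the frequency given for the Hebrew lemma equals the sum of the frequencies of its listed variants, which is what lemmatisation achieves: many sparse surface forms are pooled into one countable unit. The sketch below checks this for the lemma MSMK; the variable and function names are illustrative only.

```python
# Variant glosses and frequencies for the lemma MSMK, copied from the entry
# above. A list of pairs is used rather than a dictionary because the gloss
# "the-documents-of" is listed twice (presumably for two distinct Hebrew forms).
msmk_variants = [
    ("the-documents", 4),
    ("in-the-documents-of", 2),
    ("(a)-document", 2),
    ("and-the-documents", 2),
    ("from-the-documents", 1),
    ("the-documents-of", 1),
    ("the-documents-of", 1),
    ("and-in-the-documents-of", 1),
    ("in-(a)-document / in-the-document-of / in-the-document", 1),
    ("documents", 1),
    ("from-the-documents-of", 1),
    ("in-documents / in-the-documents", 1),
]


def lemma_frequency(variants):
    """Pool the frequencies of all surface forms of one lemma."""
    return sum(count for _, count in variants)


print(lemma_frequency(msmk_variants))  # matches the lemma frequency (18) in the entry
```

Without lemmatisation, the most frequent single form of this lemma would occur only four times.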

REFERENCES

Attar, R., Choueka, Y., Dershowitz, N., & Fraenkel, A. S. (1978). KEDMA - Linguistic

tools in retrieval systems. Journal of the ACM, 25, 52–66.

Baum, L. E. (1972). An inequality and an associated maximization technique in statistical

estimation of probabilistic functions of a Markov process. Inequalities, 3, 1–8.

Brill, E. (1992). A simple rule-based part of speech tagger. Proc. of the DARPA

Workshop on Speech and Natural Language.

Brown, P., Lai, J., & Mercer, R. (1991). Aligning sentences in parallel corpora. Proc. of

the 29th Annual Meeting of the ACL.

Brown, P., Della Pietra, S., Della Pietra, V., & Mercer, R. (1993). The mathematics of

statistical machine translation: parameter estimation. Computational Linguistics, 19

(2), 263–311.

Choueka, Y. (1983). Linguistic and word-manipulation components in textual information

systems. The applications of mini- and micro-computers in information, documentation

and libraries (Keren, C., & Perlmutter, L., Eds.), pp. 405–417. North-Holland:

Elsevier.

Choueka, Y. (1990). RESPONSA: A full-text retrieval system with linguistic components

for large corpora. Computational Lexicology and Lexicography, a volume in honor of

B. Quemada (Zampolli, A., Ed.). Pisa: Giardini Editions.

Choueka, Y. (1997). Rav-Milim: the Complete Dictionary of Modern Hebrew in 6 Vols.

Tel-Aviv: Steimatzki, Miskal and C.E.T.

Church, K., Dagan, I., Gale, W., Fung, P., Helfman, J., & Satish, B. (1993). Aligning

parallel texts: Do methods developed for English-French generalize to Asian

languages? Proc. of the Pacific Asia Conference on Formal and Computational

Linguistics.

Church, K. W. (1993). Char_align: A program for aligning parallel texts at the character

level. Proc. of the 31st Annual Meeting of the ACL.

Cormen, T. H., Leiserson, C. E., & Rivest, R. L. (1989). Dynamic programming.

Introduction to algorithms (Chapter 16), pp. 301–328. Cambridge, Massachusetts: The

MIT Press.

Dagan, I., & Church, K. (1994). Termight: Identifying and translating technical

terminology. Proc. of ACL Conference on Applied Natural Language Processing, pp.

34–40.

Dagan, I., & Church, K. (1997). Termight: Coordinating humans and machines in

bilingual terminology acquisition. Machine Translation, 12 (1–2), 89–107.

Dagan, I., Church, K., & Gale, W. (1993). Robust bilingual word alignment for machine

aided translation. Proc. of the Workshop on Very Large Corpora: Academic and

Industrial Perspectives, pp. 1–8. To appear also in Studies in Very Large Corpora

(Armstrong, S., Church, K. W., Isabelle, P., Tzoukermann, E., & Yarowsky, D.,

Eds.). Dordrecht: Kluwer Academic Publishers.

Dempster, A. P., Laird, N. M., & Rubin, D. B. (1977). Maximum likelihood from

incomplete data via the EM algorithm. Journal of the Royal Statistical Society, 39 (B),

1–38.

Fung, P. (1995). A pattern matching method for finding noun and proper noun

translations from noisy parallel corpora. Proc. of the 33rd Annual Meeting of the ACL.

Fung, P., & Church, K. (1994). K-vec: a new approach for aligning parallel texts.

COLING.

Fung, P., & McKeown, K. (1997). A technical word and term translation aid using noisy

parallel corpora across language groups. Machine Translation, 12 (1–2), 53–87.

Gale, W., & Church, K. (1991). Identifying word correspondence in parallel text. Proc. of

the DARPA Workshop on Speech and Natural Language.

Gale, W., & Church, K. (1991). A program for aligning sentences in bilingual corpora.

Proc. of the 29th Annual Meeting of the ACL.

Isabelle, P. (1992). Bi-textual aids for translators. Proc. of the Annual Conference of the

UW Center for the New OED and Text Research.

Kay, M., & Röscheisen, M. (1993). Text-translation alignment. Computational

Linguistics, 19 (1), 121–142.

Kay, M. (1997). The proper place of men and machines in language translation. Machine

Translation, 12 (1–2), 3–23.

Klavans, J., & Tzoukermann, E. (1990). The BICORD system. Proc. of COLING.