GrayWulf - Microsoft Research

advertisement

GrayWulf: Scalable Software Architecture for Data Intensive Computing

Yogesh Simmhan, Roger Barga,

Catharine van Ingen

Microsoft Research

{yoges, barga,

vaningen}@microsoft.com

Maria Nieto-Santisteban, Laszlo

Dobos, Nolan Li, Michael Shipway,

Alexander S. Szalay, Sue Werner

Johns Hopkins University

{nieto, nli, mshipway, szalay,

swerner}@pha.jhu.edu

Abstract

Big data presents new challenges to both cluster

infrastructure software and parallel application

design. We present a set of software services and

design principles for data intensive computing with

petabyte data sets, named GrayWulf†. These services

are intended for deployment on a cluster of

commodity servers similar to the well-known Beowulf

clusters. We use the Pan-STARRS system currently

under development as an example of the architecture

and principles in action.

1. Introduction

The nature of high performance computing is

changing. While a few years ago much of high-end

computing involved maximizing CPU cycles per

second allocated for a given problem, today it

revolves more and more around performing

computations over large data sets. The computing

must be moved to the data as, in the extreme, the data

cannot be moved [1].

The combination of inexpensive sensors,

satellites, and internet data accessibility is creating a

tsunami of data. Today, data sets are often in the tens

of terabytes and growing to petabytes. Satellite

imagery data sets have been in the petabyte range for

over 10 years [2]. High Energy Physics crossed the

petabyte boundary in about 2002 [3]. Astronomy will

cross that boundary in the next twelve months [4].

This data explosion is changing the science process

leading to eScience by blending in computer science

and informatics [5].

For data intensive applications the concept of

“cloud computing” is emerging where data and

computing are co-located at a large centralized

facility, and accessed as well-defined services. The

†

The GrayWulf name pays tribute to Jim Gray who has been

actively involved in the design principles.

Jim Heasley

University of Hawaii

heasley@ifa.hawaii.edu

data presented to the users of these services is often

an intermediate data product containing scienceready variables (“Level 2+”) and commonly used

summary statistics of those variables. The scienceready variables are derived by complex algorithm or

model requiring expert science knowledge and

computing skills not always shared by the users of

these services [6]. This offers many advantages over

the grid-based model, but it is still not clear how

willing scientists will be to use such remote clouds.

Recently Google and IBM have made such a facility

available for the academic community [23].

Data intensive cloud computing poses new

challenges for the cluster infrastructure software and

the application programmers for the intermediate data

products. There is no easy way to manage and

analyze these data today, not is there a simple system

design and deployment template. The requirements

include (i) scalability, (ii) the ability to grow and

evolve the system over a long period, (iii)

performance, (iv) end-user simplicity, and (v)

operational simplicity. All of these requirements are

to be met with sufficient system availability and

reliability at a relatively low cost not only at initial

deployment but throughout over the data lifetime.

2. Design Tenets for Data Intensive

Systems

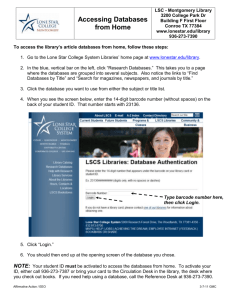

A high level systems view of a GrayWulf cluster

is shown in Figure 1. We separate the computing

farm into shared compute resources (the traditional

Beowulf [7]) and shared queryable data stores (the

Gray database addition).

Those resources are accessed by three different

groups:

Users who perform analyses on the shared

database.

Data valets who maintain the contents of the

shared databases on behalf of the users.

Figure 1. GrayWulf system overview.

Operators who maintain the compute and

storage resources on behalf of both the users and

data valets.

These groups have different rights and

responsibilities and the actions they perform on the

system have different consequences.

Users access the GrayWulf in the cloud from

their desktops. Data intensive operations happen in

the cloud; the (significantly smaller) results of those

operations can be retrieved from the cloud or shared,

like popular photos sharing services, with other cloud

computing collaborators. Specific challenges for a

GrayWulf beyond a Beowulf include:

Present a simple query model. Scientists

program as a means to an end rather than an end

in itself and are often not proficient

programmers. Our GrayWulf uses relational

databases although other models such as

MapReduce [8] could be chosen.

Minimize data movement. This is especially

the case over often poor internet connections to

the science desktop. GrayWulf systems include

user storage – a “MyDB” – which is collocated

with the larger shared databases. The MyDB or

its subset may be downloaded to the user’s

desktop.

Varying job sizes. Accommodate not only

analyses that run for periods of days but also

analyses that run on the order of a few minutes.

The workflow system and its scheduler that

handles the user analyses must accommodate

both.

Collaboration and privacy. Enable both data

privacy and data sharing between users. Having

the MyDB in the cloud enables users to

collaborate.

A powerful abstraction for the user cloud service

is a domain-specific workbench integrating the data,

common analysis tools and visualizations, but

running on standard GrayWulf hardware and

software platform.

The data valets operate behind the scenes to

present the shared databases to the users. They are

responsible for creating and maintaining the shared

database that contains the intermediate data product.

The data valets assemble the initial data, provide all

algorithms used to convert that data to science-ready

variables, design the database schema and storage

layout, and implement all necessary data update and

grooming activities. There are three key elements to

the GrayWulf approach:

“20 queries”. This is a set of English language

questions that captures the science interest used

as requirements for the deployed database [9,

10]. An example of a query might be “find all

quasars within a region and compute their

distance to surrounding galaxies”. The 20 queries

are the first driver for all database and algorithm

design.

“Divide and conquer”. Operating at petabyte

scales requires partitioning which enables

parallelization. Many scientific analyses are

driven by space or time. Partitioning along one

or both of those axes enables the data to be

distributed across physical resources. Once

partitioned, queries and/or data updates can be

run in parallel on each partition with or without a

reduction step [11].

Divide and conquer

approach is the second driver for all efficient

database and algorithm implementation.

”Faults happen”. Fault handling is designed

into all data valet workflows rather than being

added on afterwards. Success is simply a special

case of rapid fault repair and faults are detected

at the highest possible level in the system. Data

availability and data integrity are a concern at

petabyte scale, particularly when dealing with

commodity hardware. Traditional backup-restore

breaks down. Two copies protect against

catastrophic data loss; the surviving copy can be

used to generate the second copy. Three copies

are needed to protect against data corruption; the

surviving two copies can be compared against

known checksums and greatly reduce the

probability that both copies are corrupt.

The GrayWulf system services used by the data

valets have many of the same abstractions as the user

services. The difference is that the data valets

services must operate at petabyte scale with a much

higher need for logging and provenance tracking for

problem diagnosis.

Mediating between the users, data valets and

operators are the GrayWulf cluster management

services which provide configuration management,

fault management, and performance monitoring.

These services are often thin veneers on existing

services or extend those services to become databasecentric. For example, the GrayWulf configuration

manager encapsulates all knowledge to deploy a new

database using known database template onto a target

server.

To illustrate how the GrayWulf system

architecture and approach works in practice, the PanSTARRS system currently under development and

scheduled for deployment in early 2009 will be used.

Why we believe this system generalizes will be

explained as well as specific research challenges still

ahead.

3. The Pan-STARRS GrayWulf

3.1. A Telescope as a Data Generator

The Pan-STARRS project [12] will use a special

telescope in Hawaii with a 1.4 gigapixel camera to

sample the sky over a period of 3.5 years. The large

field of view and the relatively short exposures will

enable the telescope to cover three quarters of the sky

4 times per year, in 5 optical colors. This will result

in more than a petabyte of images per year. The

current telescope (PS1) is only a prototype of a much

larger system (PS4) consisting of 4 identical replicas

of PS1. PS1 saw first light in 2007 and is operating

out of the Haleakala Observatory at Maui, Hawaii.

The images are processed through an image

processing pipeline (IPP) that removes instrument

signatures from the raw data, extracts the individual

detections in each image, and matches them against

an existing catalog of objects. The IPP processes each

3 GB image in approximately 30 seconds. The

objects found in these images can exhibit a wide

range of variability: they can move on the sky (solar

system objects and nearby stars), or even if they are

stationary they can have transients in their light

(supernovae and novae) or can have regular

variations (variable stars). The IPP also computes the

more complicated statistical properties of each object.

Each night, the IPP processes approximately 2-3

terabytes of imagery to extract 100 million detections

on a LINUX compute farm of over 300 processors.

The detections are loaded into a shared database

for analysis. While the initial system and database

design was based on the experience gained from the

Sloan Digital Sky Survey (SDSS) SkyServer

experience [13], the Pan-STARRS system presents

two new challenges:

The data size is too large for a monolithic

database. The SDSS database after 5 years now

contains roughly 290 million objects and is 4

terabytes in size [14]. The Pan-STARRS

database will contain over 5 billion objects and,

at the end of the first year, will contain 70 billion

detections in 30 terabytes. At the end of PS1

project, the database will grow to 100 terabytes.

The updated data will become available to the

astronomers each week. SDSS has released

survey data on the order of every six months.

New detections will be loaded into the PanSTARRS database nightly and become available

to astronomers weekly. The object properties and

the detection-object associations are also updated

regularly.

At a high level, there are two different database

access patterns: queries that touch only a few objects

or detections and crawlers that perform long

sequential accesses to objects or detections. Crawlers

are typically partitioned into buckets to both return

data quickly and bound the redo time in the event of a

failure. User crawlers are read only to the shared

database; data valets crawlers are used to update

object properties or detection-object associations.

3.2. User Access – MyDB and CASJobs

User access to the shared database is through a

server-side workbench. Each user gets their own

database (MyDB) on the servers to store intermediate

results. Data can be analyzed or cross-correlated not

only inside MyDB but also against the shared

databases. Because both the MyDBs and the shared

databases are collocated in the cloud, the bandwidth

across machines can be high and the cross-machine

database queries efficient.

Users have full control over their own MyDBs

and have the full expressivity of SQL. Users may

create custom schemas to hold results or bind

additional code within their MyDB for specific

analyses. Data may be uploaded to or downloaded

from a MyDB and tables can be shared with other

users, thus creating a collaborative environment

where users can form groups to share results.

Access to MyDBs and the shared databases are

through the Catalog Archive Server Jobs System

(CASJobs) initially built for SDSS [15]. The

CASJobs web service authenticates users, schedules

queries to ensure fairness to both long and short

running queries, and includes tools for database

access such as a schema browser and execution query

plan viewer.

CASJobs logs each user action, and once the final

correct result is achieved, it is possible to recreate a

clean path that leads directly to the result, removing

all the blind alleys. This is particularly useful as

many scientific analyses begin in an exploratory

fashion and only then repeated at scale. The clean

path is often obscured by the exploration.

The combination of MyDB and CASJobs has

proved to be incredibly successful [16]. Today 1,600

astronomers – approximately 10 percent of the

world’s professional astronomy population – are

daily users. Some of these users are “power users”

extending the full capabilities of SQL. Others use

“baby SQL” much like a scripting language staring

from commonly used sample queries. The system

supports both ad hoc and production analyses and

balances both small queries as well as long bucketed

crawlers.

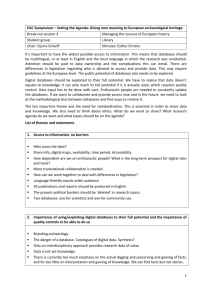

3.3. The PS1 Database

The PS1 database includes three head types of

tables: Detections, Objects, and Metadata. Detection

rows correspond to astronomical sources detected in

either single or stacked images. Object rows

correspond to unique astronomical sources (stars and

galaxies) and summarize statistics from both single

and stack detections which are stored in different sets

of columns respectively. Metadata refers mainly to

telescope and image information to track the

provenance of the detections. Figure 2 shows the

actual tables and their relationships.

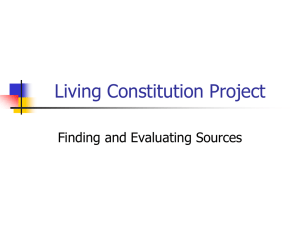

While the number of objects stays mostly

constant over the duration of the whole survey, about

14 billion new stack detections are inserted every six

months and about 120 million single image

detections every day. The gathered data generates an

83 terabyte monolithic database by the end of the

survey (Table 1). These numbers clearly show that to

reach a scalable solution we need to distribute the

data across a database cluster handled by the Gray

Figure 2. Pan-STARRS PS1 database schema.

Table 1. Pan-STARRS PS1 database

estimated size and yearly growth.

Table

Y1

Y2

Y3

Object

2.03

2.03

2.03

P2Detection

8.02

16.03 24.05

StackDetection

6.78

13.56 20.34

StackApFlx

0.62

1.24

1.86

StackModelFits

1.22

2.44

3.66

StackHighSigDelta 1.76

3.51

5.27

Other tables

1.78

2.07

2.37

Indexes (+20%)

4.44

8.18

11.20

26.65 49.07 71.5

Total [terabytes]

Y3 .5

2.03

28.06

23.73

2.17

4.27

6.15

2.52

13.78

82.71

part of the Wulf.

3.4. Layering and Partitioning for

Scalability

The Pan-STARRS database architecture was

informed by the GrayWulf design tenets.

The 20 queries suggest that many of the user

queries operate only on the objects without

touching the detections. There were relatively

few joins between the objects and detections.

Making a significantly smaller unified copy of

just the objects and dedicating servers to only

those queries would allow the server

configuration to be optimized for those queries

and, if necessary, additional copies could be

deployed.

Dividing the detections spatially enables

horizontal partitioning.

Separating the load associated with the data

updates from that due to the user queries enables

simpler balancing and configuration. Servers can

be optimized for one or the other role.

Treating the weekly data update as a special case

of a database or server loss designs the fault

handling in.

As shown in Figure 3, the database architecture is

hierarchal. The lower data layer, or slice databases,

contains vertical partitions of the objects, detections

and metadata. The data are divided spatially using

zones [17] and different pieces of the sky are

assigned to each slice database. Each slice contains

roughly the same number of objects along with their

detections and metadata. The top data layer, or head

databases, presents a unified view of the objects,

detections, and metadata to the users. Head databases

may be frozen or virtual. Frozen head databases

contain only the objects and their properties copied

from the slices. Virtual head databases “glue” the

slice contents together using Distributed Partitioned

Views (DPV) across slice databases.

Slice databases are one of hot, warm, or cold. The

hot and warm slices are used exclusively for

CASJobs user queries. The cold slices are used for all

data loading, validation, merging, indexing, and

reorganization. This division lets us optimize disk

and server configuration for the two different loads.

The three copies also provide fault tolerance and load

balancing. We can lose any slice at any time and

recover.

The head and slice databases are distributed

across head and slice servers. Head servers hold hot

Figure 3. Shared data store architecture.

and warm head databases; slice servers hold hot and

warm slice databases. Each slice server hosts four

databases – two hot and two warm. Each slice

database is about 2.5 terabytes to accommodate to the

commodity hardware. The architecture scales very

nicely by adding more slice blocks as the data grow.

The hot and warm databases are distributed such that

no server holds both the hot and warm from a given

partition; if one database or server fails, another

server can pick the workload while the appropriate

recovery processes take place.

The loader/merger servers hold the cold slice

databases and the load databases. Every night, new

detections from the IPP are ingested into load

databases where the data are validated and staged.

Every week, load databases are merged into the cold

databases. Once the merge has been successfully

completed, the cold databases are copied to an

appropriate slice server. The resulting “waiting

warm” databases are then to be flipped to replace the

old warm databases. This approach does require

copying each (large) cold slice database twice across

the internal network every week. That challenge

seems less than managing to reproduce the load and

merge on the hot and warm slices while still

providing good CASJobs user query performance as

well as fault tolerance and data consistency. We are

taking the “Xerox” path: do the work once and copy.

3.5. Data Releases: How to keep half your

users happy and the other half not

confused

PS1 will be the first astronomical survey

providing access to temporal series in a timely

matter. We expect a weekly delay between when the

IPP ships the new detections and scientists can query

them. However, once the new detections are into the

system, the question remains on how often we update

the object statistics. There are two issues consider

regarding updates: cost and repeatability.

Object updates can be expensive in terms of

computation, and I/O resources. Some statistics, such

as refining the astrometry (better positional

precision), require retrieving complex computations

using all the detections associated with an object.

Celestial objects are not visible to the Pan-STARRS

telescope all year. Objects are “done for this year” at

predictable and known times. Thus, object updates

can be computed in a rolling manner following the

telescope. This distributes the processing over time as

well as presents the new statistics in a timely manner.

The updates can be done as system crawlers.

Since statistics update can be a complex process,

we plan to divide the workload in small tasks and use

system crawlers that scan the data continuously and

compute new statistics. Although, the current PanSTARRS design does not include the “Beo” side of

the wulf, we might well use the force of the Beowulf

if the computational complexity of the statistics

grows. Additionally, updates may affect indexes and

produce costly logging activities that take time and

disk space. While the first issue can be solved by

throwing more or better hardware in the load/merge

machines, the repeatability issue is more subtle and

difficult to address.

Science relies on repeatability. Once the data has

been published, it must not change so results can be

reproduced. While half our users are eager to get to

the latest detections and updated statistics, the other

half prefers resubmitting the query from last week or

last month and getting the exact same results. Since

we cannot afford to have full copies of different

releases we need a more creative solution.

In the prior section we mentioned that the head

database presents a single logical view to the user

using distributed partitioned views or, in other words,

the head database is a virtual database with views on

the contents of the slice databases which are

distributed across slice servers. The virtual head

database has no physical content other than some

light weight metadata. When a new release is

published, we create a frozen instance of the object

statistics and define distributed partitioned views on

the detections using a release constraint. The release

overheads will make the database grow to over a 100

terabytes at the end of PS1 survey (3.5 years of

observation).

3.6. Cluster Management Services

Architecturally, GrayWulf is a collection of

services distributed across logical resources which

reside on abstracted physical resources. Systems

management is a well trod path. The data-intensive

nature and scale of a GrayWulf poses additional

challenges for simple touch-free, yet robust

operations. The GrayWulf approach is to co-exist

with other deployed management applications by

leveraging and layering.

The Cluster Configuration Manager acts as the

central repository and arbiter for GrayWulf services

and resources. Operator recorded changes and

changes by data valet workflows are tracked directly;

out-of-band changes are discovered by survey.

CASJobs and all data valet workflows interact with

the Configuration Manager. The Configuration

Manager also allocates resources between the data

valets and the users; resources may be used by only

valets, both valets and users, or only users at any

time.

The Configuration Manager captures the

following cluster entities and relations between them:

Services: Role definitions. Each machine role is

related to a set of infrastructure services that run

on it. These are servers that are deployed on the

machines and server instances of these that are

running. Database server instances are defined

in the logical view as specializations of server

instances.

Database instances: Templates and maps.

Each unique deployed database is a database

instance. Each instance is defined by a

description of the data it stores (such as the slice

zone), a database schema template, and the

mapping to the storage on which it resides.

Storage: Database to disk. Each database

instance is mapped to files. Files are located in

directories on software volumes. Software

volumes are constructed from RAID volume

extents; software volumes can span and/or subset

RAID volumes. RAID volumes are constructed

from locally attached physical disks.

Machines: Roles for processors, networks,

and disks. The cluster is composed of a number

of machines, and each machine contains physical

disks and network interconnects. Machines are

assigned specific roles, such as a web server or a

hot/warm database server or load/merge database

server. Roles help determine the software to be

installed or updated on a machine as well as

targeting data valet workflows. The knowledge

of the physical disks and networks is necessary

when balancing the applied load or repairing

faults.

The Configuration Manager exposes APIs for

performing consistent operations on the cluster. An

example is creation of a slice database instance using

the current version of that database schema and

mapping on a specific machine; the APIs update the

configuration database as the operation proceeds. All

data valet and operator actions use these APIs. The

operators have a web interface that uses the client

library to view the cluster configuration or modify

the configuration, such as when adding a new

machine.

GrayWulf factors in fault handling because faults

happen. New database deployment is treated as a case

of a failed database; loss of a database instance is

treated as a special case of the load/merge flip. The

fault recovery model is the simple yet effective:

reboot and replace. Failed disks that do not fail the

RAID volume are repaired by replacing the disk;

optionally, the query load on the affected databases

can be throttled. Soft machine failures such as service

crashes or hangs are repaired by reboot. All other

machine failure causes the database instances on that

machine to be migrated to other machines. In other

words when a machine holding a hot slice database is

lost, another copy of that hot slice database is

reconstituted on another machine from the

corresponding cold slice database. The failed

machine can be replaced at a future time; the sole

task requiring operator attention.

Health and fault monitoring tools detect failures

and launch fault recovery workflows. Whenever

possible, the monitoring tools operate at the highest

level first. In other words, if the monitor can query a

database instance, there is no need to ping the

machine. There are also survey validations that

periodically compare the configuration database to

the deployed resources.

Performance can be monitored in three ways.

CASJobs includes a user query log that can be

mined. A performance watchdog gathers non

intrusive, low granularity performance data as a

general operational check. An impact analysis

sandbox is provided to launch a suite of queries or

jobs on a machine and record detailed performance

logs.

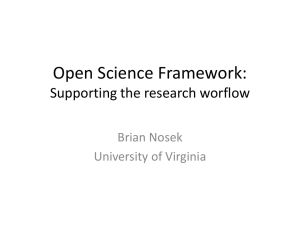

3.7. Data Valet Workflow Support

Services

Workflows are being commonly used to model

scientific experiments and applications for eScience

researchers. GrayWulf uses this same methodology

for the data valet actions. As shown in Figure 4, the

data valet workflow infrastructure includes a set of

services for workflow visual composition, automatic

provenance capture, scheduling, monitoring, and

fault handling. Our implementation uses the Trident

open source scientific workflow system [18] built on

top of the core runtime support Windows Workflow

Foundation [19].

Each data valet action becomes a workflow and is

composed of a set of activities. Workflows are

scheduled with knowledge of cluster configuration

information from the Configuration Manager and

policy information from the workflow registry.

During execution, a provenance service intercepts

each event to create an operation history.

Each activity performs a single action on a single

database or server. This divide and conquer approach

simplifies the subsequent fault handling and

monitoring as the state of the system and the steps to

recover can be simply determined from the

information captured by the provenance service.

Known activities and completed workflows are

tracked in a registry. This registry enables reuse of

activities or workflows in different workflows. For

example, in Pan-STARRS, the workflow for making

a copy of a slice the database on a remote machine

are used both by the workflow for copying updated

slice databases to the head machines, as well as to

make a new replica of a slice database in case of a

disk failure. This ability to reuse activities and

workflows encourages data valets to divide and

conquer when implementing workflows. A graphical

visual interface enables new workflow composition,

adding activities, as well as monitoring workflow

execution.

data valet workflows, this tracking should be

invaluable for complex system debugging.

The workflow infrastructure also maintains a

resource registry that records metadata about

activities, services, data products and workflows, and

their relationships. A resource may even be a data

valet or operator. This allows composition of

workflows that require a human step such as

replacing a disk or visual validation of a result. The

resource registry is also used to manage workflow

versioning or curate of workflows, services, and data

products.

3.8. Workflows as Data Management

Tools

Figure 4. Trident scientific workflow system

modules.

The scheduling service instantiates all data valet

workflows including load balancing and throttling.

The scheduler maintains a list of workflows that can

be executed on servers with specific roles; this

provides simple runtime protections such as avoiding

a copy of a cold slice database to a head server. The

scheduling service interacts with the Configuration

Manager to track database movements and server

configuration changes. At present, the scheduling

service is limited to scheduling one and only one

complete workflow on a specific node for simplicity;

we are evaluating methods to enable more

parallelism.

Workflow provenance refers to the derivation

history of a result, and is vital for the understanding,

verification, and reproduction of the results created

by a workflow [20]. As a workflow executes, the

provenance service intercepts each event to create a

log. Each record represents a change in operational

state and forms an accurate history of state changes

up to and including the “present” state of execution.

These records are sent to a provenance service that

“weaves” these records into a provenance trace

describing how the final result was created. The

provenance service also tracks the evolution of a

workflow as it changes from version to version. For

Applications using GrayWulf are by definition

data intensive and there is constant data movement

and churning taking place. Data is being streamed,

loaded, moved, load balanced, replicated, recovered

and crawled at any point in time by the data valet

tasks. Workflows provide the support required to

manage the complexity of the cluster configuration

and round the clock data updates in an autonomic

manner: reliably without administrator presence. If a

machine or a disk fails, the system recovers onto

existing machines by reconfiguring itself and

administrator’s primary task will be to eventually roll

out that machine and plug in a replacement and let

the system rebalance the load.

There are several types of data valet workflows

that are applicable to GrayWulf applications that are,

to some extent, analogous to Extract Load Transform

(ETL) steps performed in Data Warehouses.

Load workflow. Data streaming from sensors

and instruments need to be assimilated into

existing databases. The load workflow does the

initial data format conversion from the incoming

data format into the database schema. This may

involve data translation, verifying data structure

consistency, checking data quality, mapping the

incoming data structure to the database schema,

determining optimized placement of the “load

databases”, performing the actual load, and

running post load validations. For example, PanSTARRS load workflows take the incoming

CSV data files from the IPP in, validates and

loads them into load databases that are optimally

co-located with slice databases.

Merge workflow. The merge workflow ingests

the incoming data in the load databases into the

cold databases, and reorganizes the incremented

database in an efficient manner. If multiple

databases are to be loaded, as in the case of PanSTARRS, there may be additional scheduling

required to do the updates concurrently and

expose a consistent data release version to the

users.

Data replication workflow. The data replication

workflow creates copies of databases over the

network in an efficient manner. This is necessary

to ensure availability of adequate copies of the

databases, replicating databases upon updates or

failures. The workflow coordinates with the

CASJobs scheduler to acquire locks on hot and

warm databases, determines data placement,

makes optimal use of I/O and network

bandwidth and ensures the databases are not

corrupted during copy. In Pan-STARRS, these

workflows are typically run after a merge

workflow updates an offline copy of the database

to propagate the results, or if a fault causes the

loss of a database copy.

Crawler workflow. Complete scans of the

available data are required to check for silent

data corruption or, in Pan-STARRS, to update

object data statistics. These workflows need to

be designed as minimally intrusive background

tasks on live systems. A common pattern for

these crawlers is to “bucket” portions of the large

data space and apply the validation or statistic on

that bucket. This allows the tasks to be

performed incrementally, recover from faults at

the last processed bucket, balance the load on the

machine and enable parallelization.

Fault-recovery workflow. Recovering from

hardware or software faults is a key task. Fault

recovery requires complex coordination between

several software components and must be fully

automated. Fault and recovery can be modeled as

state diagrams that are implemented naturally as

workflows. These workflows may be associated

as fault handlers for other workflows or triggered

by the health monitor. Occasionally, a fault can

be recovered in a shorter time by allowing

existing tasks to proceed to completion; hence

look-ahead planning is required. In PanSTARRS, many of the fault workflows deal with

data loss and coordinate with the schedulers to

pause existing workflows, launch data

replication workflows and ensure the system

gracefully degrades and recovers, while

remaining in a deterministic state.

Data valet workflows are different from

traditional eScience workflows which are scientist

facing. They have a greater degree of fault resilience

due to their critical nature, the security model takes

into account writes performed on system databases,

and provenance collection needs to track attribution

and database states.

4. Future Visions and Conclusions

Data-intensive science has rapidly emerged as

the fourth pillar of science. The hardware and

software tools required for effective eScience need to

evolve beyond the high performance computational

science that is supported by Beowulf. GrayWulf is a

big step in this direction.

Big data cannot reside on the user’s desktop due

to the cost and capacity limitations of desktop storage

and networking. The alternate is to store data in the

cloud and move the computations to the data. Our

Graywulf uses SQL and databases to simply transport

user analyses to the data, store results near the data

and retrieve summary results to the desktop. Enabling

users to share results in the cloud also promotes

sharing and collaboration often central to multi

disciplinary science. Cloud data storage also

simplifies the preparation and grooming of shared

data products by controlling the location and access

to those data products – the location of the “truth” is

known at all times and provenance can be preserved.

The infrastructure services present in GrayWulf

provide essential tools for deploying a shared data

product in the tens of terabytes to petabyte scale. The

services support the initial configuration, fault

handling, and rolling upgrade of the cluster. Beyond

Beowulf, the GrayWulf approach supports

continuous data updates without sacrificing data set

query performance or availability. These tools are

designed to assist both the operators of the system in

routine system maintenance as well as the data valets

who are responsible for data ingestion or other

grooming..

GrayWulf is designed to be extensible and future

ready. This is aptly captured by its application to

Pan-STARRS which will see its science data size

grow linearly by 30 terabytes per year during the first

3.5 years and four times that when the telescope is

fully deployed. The infrastructure services are

modular to ensure longevity. While the relational

data access and programming model is used today,

we can equally well accommodate patterns like

Hadoop [20], Map Reduce or Dryad-LINQ [21]. The

use of workflow for the data valet operations makes

those tasks simpler and easier to evolve. While we

have focused on the Pan-STARRS system here, we

are also working on other GrayWulf application s

such as a turbulence study on gridded data and crosscorrelations for large astronomical catalogs.

The scalable and extensible software architecture

discussed in this paper addresses the unique

challenges posed by very large scientific data sets.

The architecture is intended to be deployed on the

GrayWulf hardware architecture based on commodity

servers with local storage and high bandwidth

networking. We believe the combination provides an

affordable and simply deployable approach for

systems for data intensive science analyses.

5. Acknowledgements

The authors would like to thank Jim Gray for

many years of intense collaboration and friendship.

Financial support for the GrayWulf cluster hardware

was provided by the Gordon and Betty Moore

Foundation, Microsoft Research and the PanSTARRS project. Microsoft’s SQL Server group, in

particular Michael Thomassy, Lubor Kollar and Jose

Blakeley for the enormous help in optimizing the

throughput of the database engine. We would like

thank Tony Hey, Dan Fay and David Heckerman for

their encouragement and support throughout the

development of this project.

6. References

[1] P. Watson, “Databases in Grid Applications: Locality

and Distribution”, Database: Enterprise, Skills and

Innovation. British National Conference on Databases, UK,

July 5-7, 2005. LNCS Vol. 3567 pp. 1-16.

[2] P. M. Caulk, “The Design of a Petabyte Archive and

Distribution System for the NASA ECS Project", Fourth

NASA Goddard Conference on Mass Storage Systems and

Technologies, College Park, Maryland, March 1995.

[3] J. Becla and D. Wang, “Lessons Learned from

Managing a Petabyte”, CIDR 2005 Conference, Asilomar,

2005.

[4] Panoramic Survey Telescope and Rapid Response

System, http://pan-starrs.ifa.hawaii.edu/

[5] A. S. Szalay and J. Gray, “Science in an Exponential

World”, Nature, 440, pp 23-24, 2006.

[6] J. Gray, D. Liu, M. Nieto-Santisteban, A. S. Szalay, D.

DeWitt and G. Heber, “Scientific data management in the

coming decade”, ACM SIGMOD, 34(4), pp 34-41, 2005.

[7] T. Sterling, J. Salmon, D. J. Becker and D. F. Savarese,

“How to Build a Beowulf: A Guide to the Implementation

and Application of PC Clusters”, MIT Press, Cambridge,

MA, 1999, also http://beowulf.org/.

[8] J. Dean and S. Ghemawat, “MapReduce: Simplified

Data Processing on Large Clusters”, 6th Symposium on

Operating System Design and Implementation, San

Francisco, 2004.

[9] J. Gray and A. S. Szalay, “Where the Rubber Meets the

Sky: Bridging the Gap between Databases and Science”,

MSR-TR-2004-110, October 2004, IEEE Data Engineering

Bulletin, December 2004, Vol. 27.4, pp. 3-11.

[10] M. Nieto-Santisteban, T. School, A. Szalay and A.

Kemper, “20 Spatial Queries for an Astronomer’s

Bench(mark)”, Proceedings of Astronomical Data Analysis

Software and Systems XVII, London, UK, 23rd - 26th

September 2007.

[11] M. A. Nieto-Santisteban, J. Gray, A. S. Szalay, J.

Annis, A. R. Thakar and W. J. O’Mullane, “When

Database Systems Meet the Grid”, Proceedings of ACM

CIDR 2005, Asilomar, CA, January 2005).11.

[12] D. Jewitt, “Project Pan-STARRS and the Outer Solar

System”, Earth, Moon and Planets 92: 465–476, 2003.

[13] A. R. Thakar, A. S. Szalay, P. Z. Kunszt and J. Gray,

“The Sloan Digital Sky Survey Science Archive: Migrating

a Multi-Terabyte Astronomical Archive from Object to

Relational DBMS”, Computing in Science and

Engineering, V5.5, Sept 2003, IEEE Press. pp. 16-29, 2003.

[14] J. Adelman-McCarthy et al., “The Sixth Data Release

of the Sloan Digital Sky Survey”, The Astrophysical

Journal Supplement Series, Volume 175, Issue 2, pp. 297313, 2007.

[15] W. O’Mullane, N. Li, M. Nieto-Santisteban, A. S.

Szalay and A. R. Thakar, “Batch is Back: CASJobs,

Serving Multi-TB Data on the Web”, International

conference on Web Services 2005: 33-40.

[16] V. Singh, J. Gray, A. R. Thakar, A. S. Szalay, J.

Raddick, W. Boroski, S. Lebedeva and B. Yanny,

“SkyServer Traffic Report – The First Five Years”,

Microsoft Technical Report, MSR-TR-2006-190, 2006.

[17] M. A. Nieto-Santisteban, A. Thakar, A. Szalay and J.

Gray, “Large-Scale Query and XMatch, Entering the

Parallel Zone,” Astronomical Data Analysis Software and

Systems XV ASP Conference Series, Vol 351, Proceedings

of the Conference Held 2-5 October 2005 in San Lorenzo

de El Escorial, Spain. Edited by Carlos Gabriel, Christophe

Arviset, Daniel Ponz, and Enrique Solano. San Francisco:

Astronomical Society of the Pacific, 2006. p.493.

[18] Barga, R.S., Jackson, J., Araujo, N., Guo, D., Gautam,

N., Grochow, K. and Lazowska, E., “Trident: Scientific

Workflow Workbench for Oceanography”, International

Workshop on Scientific Workflows (SWF 2008).

[19] P. Andrews, “Presenting Windows Workflow

Foundation”, Sams Publishing, 2005.

[20] Y. Simmhan, B. Plale and D. Gannon, “A Survey of

Data Provenance for eScience”. SIGMOD Record, Vol. 34,

No. 3, 2005.

[21] http://hadoop.apache.org/

[22] M. Isard, M. Budiu, Y. Yu, A. Birrell, and D. Fetterly,

“Dryad: Distributed Data-Parallel Programs from

Sequential Building Blocks”, EuroSys, 2007.

[23] S. Lohr, “Google and I.B.M. Join in ‘Cloud

Computing’ Research”, New York Times article, October

8, 2007.