Science Report KS2/3 Pilot WJEC

Key Stages 2/3 Cluster Group External Moderation, Pilot 2010

Chief Moderator’s Report – SCIENCE

Section 1: Main messages for school clusters/local authorities (written in a style suitable for sharing with the pilot audiences)

Range of evidence received by moderators against expectations (i.e. based on WJEC’s guidance):

The majority of profiles included a sufficient number of enquiries. However, a significant number did not provide sufficient evidence of the breadth of the Skills section of the programme of study. This was often due to limited use of different types of enquiry, e.g. the enquiries used were mainly fair tests. A small number of clusters based their evidence on a large number of short tasks, each focusing on an individual skill. Although opportunities to develop individual skills are an important part of the learning process, such tasks tend not to be set in interesting, open-ended contexts that allow learners to demonstrate the full range of enquiry skills.

The skills within Planning were generally addressed appropriately, with the exception of ‘determine success criteria’. Many of the success criteria identified related to the steps within the enquiry rather than to what success in the task might look like.

Modelling success is essential to support learners in developing this skill.

The skills most frequently missing were ‘review success’, ‘evaluate learning’ and ‘link learning’ within Reflecting.

The majority of enquiries were set within the context of the appropriate programme of study. However, a small number of KS3 profiles used contexts from the KS4 programme of study, e.g. radiation and aspects of electricity. Similarly, at KS2, some enquiries were more suited to the Foundation Phase because of their limited demand, while others were more suited to the KS3 programme of study, e.g. enquiries based on photosynthesis or food webs.

In general, the contexts used for enquiries were traditional and the work did not reflect a significant change in methodology. Assessment for learning strategies were not clearly evident. However, there were a few examples of the use of tools such as diamond ranking, KWL grids, mind mapping and Venn diagrams, and of strategies such as hot seating, skimming and scanning. These gave learners an opportunity to construct their own thinking and learning.

Just over half of the 20 clusters involved in the pilot selected profiles representing best-fit attainment at either the lower or the top end of the relevant level, as requested. Most of the remainder presented profiles that moderators assumed to be securely within the level; for the purpose of the pilot, it was decided that these would be moderated. A small number of clusters did not have evidence for the levels requested and either sent evidence for other levels as a replacement or sent no evidence at all. One cluster presented a ‘mock’ profile, compiled from the work of a number of learners, from which it determined a best-fit judgement. This was moderated as part of the pilot exercise, although an end of key stage learner profile should be compiled from a range of work by an individual learner.

The profiles from the vast majority of clusters showed attainment against Curriculum 2008. However, the annotation on some enquiries indicated that the work had been carried out before 2008, which suggested that these profiles might not reflect end of key stage assessment.

The vast majority of profiles were presented as hard copy. The remainder were presented electronically, although these did not include examples of video or audio material, which could provide useful additional evidence. No models or artefacts were received. Some profiles included digital photographs of learners carrying out tasks, and ICT was also used for PowerPoint presentations, word processing and communicating data as charts and graphs.

Most learner profiles were a compilation of samples of a learner’s work. A small number presented learners’ exercise books. In principle, exercise books can provide useful evidence, but they need to be carefully referenced if the profiles are to serve as useful reference sources for the cluster in future and to aid the moderation process.

Main findings from the pilot in terms of cluster groups’ understanding of national standards.

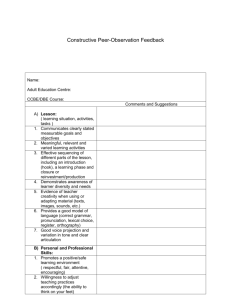

The commentaries on individual enquiries were presented in a number of ways:

(i) as continuous prose describing the learner’s achievement; the quality of these commentaries varied from very good to insufficient. Those that were very good described clearly the contexts in which the enquiries were set and the ways in which they were undertaken. They referred accurately to the level characteristics displayed in the learner’s work, and cross-referencing between the two was clear and easy to follow. They provided an effective resource for future use by the cluster. Where the commentaries were insufficient, there was little or no information about the contexts in which the enquiries were set or how they had been carried out, nor was there information to demonstrate the cluster’s understanding of the level descriptions. In a small number of cases, particularly where learners’ books were used as profiles, it was difficult to cross-reference between the commentary and the tasks within the books.

(ii) as annotation on the learner’s work; this proved to be an effective way of locating the evidence within the learner’s profile.

(iii) by highlighting aspects of the level descriptions or characteristics of levels from the DCELLS ‘strands in progression table’; this method alone, unsupported by further commentary, did not provide sufficient evidence about the contexts of the enquiries and, in most cases, did not pinpoint the evidence in the individual enquiries.

There were several examples of clusters identifying level characteristics that were not evident in the learner’s work, with no further supporting evidence provided in the commentary. For example, one commentary stated that the learner had made a prediction but did not state what the prediction was; in another, the cluster stated that the learner had recorded their observations in a table but gave no information on whether this had been done independently, nor any detail of the data collected. In both examples the outcome could have displayed characteristics of a range of levels.

The level characteristics identified for the majority of skills were generally accurate to within one level. However, there were a number of examples in which clusters decided that if a learner’s work did not meet a particular level characteristic, it would automatically be assigned the adjacent level characteristic (either above or below) without considering the description of that adjacent level.

Some clusters incorrectly made best-fit judgements for individual enquiries, rather than for the learner profile as a whole.

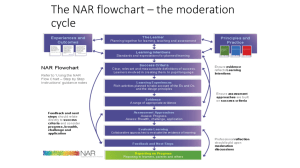

The clusters’ best-fit summary commentaries were presented in one of two ways:

(i) continuous prose that described the learner’s attainment. The quality of these commentaries varied from very good to insufficient. Those that were very good described clearly why the best-fit judgement had been made. The judgement had been checked against adjacent level descriptions to ensure that the level chosen was the closest overall match to the learner’s performance, and it was based on sufficient evidence. In some cases, although the commentary took account of the available evidence, that evidence was insufficient to support a valid judgement.

(ii) by highlighting aspects of the level descriptions or characteristics from the DCELLS ‘strands in progression table’. Although this type of summary commentary went some way towards explaining the cluster’s best-fit judgement, it did not fully describe the rationale on which the judgement was based.

Identification of cluster group good practice/areas for development

The following aspects of good practice were noted:

A common format was used by both KS2 and KS3 teachers in a small number of clusters. This suggested that the process of cluster moderation had been jointly planned.

One cluster produced a checklist for moderation which was attached to each profile. This was extremely useful in ensuring that all the requirements for moderation had been met.

The learner profiles from two clusters contained an introductory paragraph describing the learner in terms of his/her preferred learning style, ability to work independently and interdependently, and any additional needs where appropriate. This usefully placed the profile in context.

Summary of key messages for clusters on identifying future learner profiles and commentary.

1. Statutory requirements for moderation

Clusters are reminded of the statutory requirement for primary and secondary schools to have in place effective arrangements for cluster group moderation. Within each cluster group, all schools should therefore play an active part in:

identifying learner profiles to use as a source of evidence;

attending cluster group meetings to moderate selected learner profiles;

agreeing the moderated outcomes and adopting the cluster’s moderated learner profiles as benchmarks within their individual schools; and

applying these benchmarks to future teacher assessment and, in particular, to end of KS2/3 teacher assessment.

Profiles provided for external moderation need to reflect this practice.

2. What might good practice look like in a science learner profile?

A useful learner profile should be based on a number of enquiries which:

provide evidence for Communication, Planning, Developing and Reflecting from the Skills section of the Programme of Study;

show sufficient coverage of the breadth of the individual skills within the above Skill areas; and

are set in the context of the Range of the relevant programme of study.

Using open-ended ‘big questions’ as contexts for enquiries allows learners to demonstrate their skills, knowledge and understanding effectively, and would therefore be an appropriate way to achieve the above. Such questions also allow for the use of ‘thinking tools and strategies’ to support Planning, Developing and Reflecting.

The individual enquiries in the profile need to be annotated clearly so that the source of the evidence can be identified easily, and the annotation for each enquiry should identify the level characteristics displayed within the work.

Each profile should be accompanied by a summary commentary that sets out the rationale for the ‘best-fit’ judgement.

Summary of possible implications for teaching (i.e. task setting) and assessment.

Learners should be given the opportunity to carry out different types of enquiry so that they can develop a wide range of enquiry skills within the Skill areas of Communication, Planning, Developing and Reflecting.

Contexts for enquiries need to be motivating and relevant to learners and allow them a degree of independence in the way that they work. Using open-ended ‘big’ questions is likely to engage more learners and improve their achievements. When working with ‘big questions’, learners will need to plan carefully and, initially, will need support in the form of probing questions from the teacher.

Learners need a repertoire of thinking tools and strategies to organise their thoughts and ideas so that the planning, developing and reflection processes are clear and meaningful.

Learners need support in identifying success criteria. Initially, they tend to think that completing a task indicates success and do not consider the quality of the outcomes. At first, questioning is important in generating a set of success criteria that the teacher and learners agree on. As learners become more confident, they will gradually be able to identify their own success criteria and eventually be able to select and justify a set of specific success criteria that can be used to judge the outcome of a task.

Teachers should allow time for learners to reflect on how they have learned as well as what they have learned. Another feature of the reflection process is the evaluation and refinement of success criteria. To begin with, learners need support to link outcomes with success criteria. Gradually, they become more able to recognise their achievements and difficulties independently when evaluating their learning. As this develops, learners use the success criteria to analyse their learning (through metacognitive questions), and eventually they are able to refine their success criteria and apply the refinements when developing success criteria for other activities.

Learners need support in linking their learning. This can be done through the use of focused questioning.