2014-2015 Assessment Activities Report


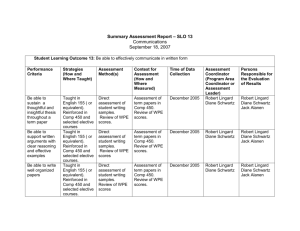

Report of the Office of Assessment and Program Review on 2014-15 Pilot Assessments of the University Undergraduate Core Competencies

In 2014-15, the Office of Assessment and Program Review (OAPR) undertook its own direct assessments of student learning through two pilot projects, conducted in cooperation with the administrators of the university Writing Proficiency Exam (WPE) and with the College of Humanities. These projects were meant to give the university a multilayered system of assessments that satisfies new WASC requirements to assess, in a uniform manner, the five Core Competencies of Written Communication, Critical Thinking, Quantitative Literacy, Oral Communication, and Information Literacy at both the entry and the exit levels of student learning. Consistent with the university’s commitment to diversity in all its manifestations, the OAPR’s pilot projects were designed to supplement, not replace, existing programmatic teaching and assessment of the Core Competencies. This preserves the diverse understandings of and approaches to the competencies practiced across a wide range of departments while also offering a university-wide measure in accordance with WASC requirements. The pilot projects were also designed to demonstrate the essential connections between departmental and university-wide commitments to the Competencies by directly involving faculty from a variety of departments in the administration, design, and scoring of the assessment instruments. They thus exemplify the kind of integrative approach to which the university’s Mission and Values statements commit us, and which the upcoming university Meaning, Quality, and Integrity of Degree (MQID) statement will require. The first of these projects was conceived by the Director of the OAPR in cooperation with the Vice Provost and the Associate Vice President for Undergraduate Affairs.
The essence of the pilot was the realization that the Writing Proficiency Exam was originally conceived as, and has always essentially been, an assessment instrument. It was designed to assess the written communication capabilities of every CSUN student, graduate as well as undergraduate, even though it has never been formally designated as an assessment instrument. Generally taken in the junior year (for undergraduates), the WPE stands at a near-exit point for our students and can therefore serve as a WASC-compliant assessment instrument. It has the added advantages that it already exists, is fully funded, and is taken very seriously by students. It also places no additional burden on faculty, because it already draws on a pool of experienced CSUN scorers whose extra compensation is funded by existing test-taking fees. What is new in the pilot project is a redesign of the WPE that extends its scope. In addition to the traditional pass/fail proficiency scoring the exam requires, it now includes Core Competency assessments, which were carefully designed not to affect whether a student passes or fails the WPE. The Competencies assessed were Critical Thinking, Quantitative Literacy, and Information Literacy. (Since Oral Communication cannot be assessed through a written exam, that Competency is receiving special attention through other OAPR projects described later in this report.) The Director of the OAPR, in cooperation with WPE staff, accordingly redesigned the WPE as an assessment instrument, choosing readings and creating prompts and new scoring guides.
The readings were specially chosen to involve topics that our students are likely to encounter not only in their current daily lives but also in their future working lives. These topics call for critical thinking skills, an ability to understand and work with numbers, charts, and quantities, and an ability to describe how one would gather further information before drawing any conclusions about the topic. The selected readings provided all of the information needed for a two-hour timed exam essay. A text on the “Got Milk?” advertising campaign was chosen for the first pilot, and a text on the recent rise in sales of flavored sparkling water beverages was chosen for the second. Separate prompts were written for each exam. The pilots were administered on April 25 and on May 25, 26, 27, and 29, and were scored by the same faculty who read the traditional WPE. A total of 340 student volunteers took the pilot exams. They were informed that their exam differed from the one most students were taking. Unlike the traditional paper-based WPE, the pilot exam was administered and scored by computer. The Director of the OAPR was present at both scoring sessions to explain the details of the pilot version and to answer any questions. Students were also given an opportunity to provide feedback. The results of the pilots are listed below.

WPE pilot #1

On a scale of 2 to 12, with 8 being the minimum passing score for the WPE portion of the assessment, the average score (N=172) was 8.116. Average Competency scores (N=172) were:

Critical Thinking (scale of 2 to 12): 8.069
Quantitative Literacy (scale of 2 to 12): 7.697
Information Literacy (scale of 2 to 12): 7.232

The overall pass rate for pilot WPE #1 was 72%, compared with a 71% pass rate for the traditional WPE administered in the same period.
The native speaker pass rate was 80%, compared with 78% for the traditional WPE administered in the same period. The ESL pass rate was 57%, compared with 62% for the traditional WPE administered in the same period. Student feedback was uniformly favorable, and the scorers remarked that they had read some of the best student writing they had ever seen on the WPE.

WPE pilot #2

On a scale of 6 to 10 (a 3-4-5 scoring system was used this time to reduce scorer fatigue), with 8 being the minimum passing score for the WPE portion of the assessment, the average score (N=168) was 7.964. Average Competency scores (N=168) were:

Critical Thinking (scale of 6 to 10): 7.887
Quantitative Literacy (scale of 6 to 10): 7.458
Information Literacy (scale of 2 to 12): 7.857

The overall pass rate for pilot WPE #2 was 78%. The native speaker pass rate was 85%, and the ESL pass rate was 62.18%. Student feedback was favorable for both tests, and the scorers again remarked that they had read some of the best student writing they had ever seen on the WPE.

The pilots were intended more to test the practicability of the project than to provide an actionable measure of student learning. Even so, the results indicate a continuing gap between native speaker and English learner pass rates on the WPE: 80% versus 57% in pilot #1, and 85% versus 62.18% in pilot #2. The international student population is growing, and this result is consistent with the results of the traditional WPE, so it will require loop-closing attention in the future. The Director of the OAPR has accordingly begun consultations with the appropriate administrators. In every other respect the pilots succeeded, and the project is being continued in 2015-16 with new readings, new prompts, and a fine-tuned rubric that responds to scorers’ experiences and comments.
The assessment measures from the pilots are not yet extensive enough to support any loop-closing plans. Even so, particular attention to Information Literacy seems warranted, and the Director of the OAPR is consulting with the Library faculty to address this. Raising the average scores in all categories to 8 or somewhat above would also be desirable.

College of Humanities Written Communication Assessment

The second project, a partnership with the College of Humanities (COH), was designed to assess students’ Written Communication skills (and only Written Communication) at the entry level. It used Stretch Composition classes as sites for administering a specially designed assessment instrument. Faculty volunteers were solicited from every program that teaches Stretch writing. Eight faculty from the English Department, Central American Studies, Chicana & Chicano Studies, and Queer Studies participated. In cooperation with the Director of the OAPR, they composed a writing task, a prompt, and a scoring rubric. Faculty administered the writing task in their classes in late April. (An attempt to use the EAS failed when students were unable to upload their papers to the system, so the papers were printed and read as hard copies.) In a scoring session shortly afterward, eight readers assessed 154 papers, each reader scoring two sets of papers. Scoring was normed, and there was no third reading to adjudicate disagreements: the readers’ scores were simply added together and averaged. Six sections of 113 B (N=118 students) and two sections of 114 B (N=36 students) were scored. A rubric with four criteria was used: 1. Responds to Topic; 2. Effectiveness of Expression; 3. Organization; 4. General Grammar. Three scores were possible: Exceeds Expectations (3); Meets Expectations (2); Does Not Meet Expectations (1).
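The following Python sketch makes the scoring arithmetic concrete under one reading of the procedure described above. It assumes three things. First, each paper’s score on a criterion is the mean of its two readers’ 1-3 scores. Second, an aggregate figure is the mean over all papers. Third, that aggregate equals the course-level averages weighted by the number of students in each course. This is an assumption, since the report does not spell out how the aggregates were computed, and the function names `paper_score` and `weighted_mean` are hypothetical. Applied to the Responds to Topic averages reported in the results that follow (113 B: 2.09, N=118; 114 B: 2.36, N=36), the weighted mean rounds to 2.15, which matches the reported aggregate. The other three criteria agree to within about 0.01, which is consistent with rounding of the course-level figures.

```python
# Hypothetical sketch (not the OAPR's actual procedure): combine two readers'
# rubric scores per paper, then combine course-level averages into an aggregate.

def paper_score(reader_a: int, reader_b: int) -> float:
    """Average two readers' scores on the 1-3 rubric (no third reading)."""
    for s in (reader_a, reader_b):
        if s not in (1, 2, 3):
            raise ValueError(f"rubric score must be 1, 2, or 3, got {s}")
    return (reader_a + reader_b) / 2


def weighted_mean(groups: list[tuple[float, int]]) -> float:
    """Mean of group averages, each weighted by its group size N.

    Assumption: this equals the mean over all individual papers.
    """
    total_n = sum(n for _, n in groups)
    return sum(avg * n for avg, n in groups) / total_n


if __name__ == "__main__":
    # Reported "Responds to Topic" averages: 113 B (N=118), 114 B (N=36).
    responds = weighted_mean([(2.09, 118), (2.36, 36)])
    print(round(responds, 2))  # 2.15
```

Weighting by N matters here because the two courses differ greatly in size. An unweighted mean of the two course averages (2.225) would overstate the contribution of the 36 students in 114 B.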
Aggregate scores (averaged):

Responds to Topic: 2.15
Effectiveness of Expression: 1.88
Organization: 1.74
General Grammar: 1.86

Disaggregated scores (averaged) by course number:

114 B:
Responds to Topic: 2.36
Effectiveness of Expression: 2.14
Organization: 2.01
General Grammar: 2.10

113 B:
Responds to Topic: 2.09
Effectiveness of Expression: 1.80
Organization: 1.65
General Grammar: 1.79

The numbers confirmed the readers’ qualitative judgment, formed after the reading, that there was a substantial difference between the 113 B and 114 B papers. The 114 B papers (aggregated and averaged) scored between Meets Expectations and Exceeds Expectations on all four criteria. The 113 B papers (aggregated and averaged) scored between Meets Expectations and Exceeds Expectations for Responds to Topic, but below Meets Expectations in the other three assessed categories. For both 113 B and 114 B, the weakest assessed categories were Organization and General Grammar. This was most pronounced in 113 B, where over a third of the papers (37%) scored Does Not Meet Expectations in both Organization and Grammar. Although the data set is limited, the pilot provides some evidence that the goal is not being met. That goal is for students in the last few weeks of 113 B and 114 B to reach rough parity in writing ability. This result has been brought to the attention of the appropriate administrators. The project is being continued into Spring 2016 to gather more data and determine whether loop-closing measures are needed.

Oral Communication

As noted above, Oral Communication cannot be assessed through a written exam.
Individual departments already assess this competency in their annual assessment activities. The Director of the OAPR nevertheless decided on a complementary approach, after confirming its suitability with WASC executives: asking the preceptors of CSUN interns to assess our students’ Oral Communication skills. A pilot project was accordingly launched in partnership with the Department of Health Sciences. The faculty of Health Sciences and the OAPR Director jointly constructed a rubric. It was incorporated into the rubric that preceptors already use to assess CSUN Health Sciences interns. The preceptors rated CSUN interns at an average score of 4.26 on a 1-to-5 scale. The success of this pilot has led Health Sciences to continue the assessment in 2015-16, and plans are underway to extend it to Service Learning courses.

Plans for 2015-16

In addition to continuing the WPE and COH pilot assessments, the OAPR will conduct a pilot assessment of Critical Thinking. GE Paths courses in Sustainability, Social Justice, and Global Studies will serve as the assessment sites. Volunteer faculty teaching those classes will work with the OAPR Director to design a special test, to be administered to their students online (via Moodle). The test will assess students’ ability to think critically about a real-world phenomenon that involves issues central to the three Paths.