A fundamental misunderstanding


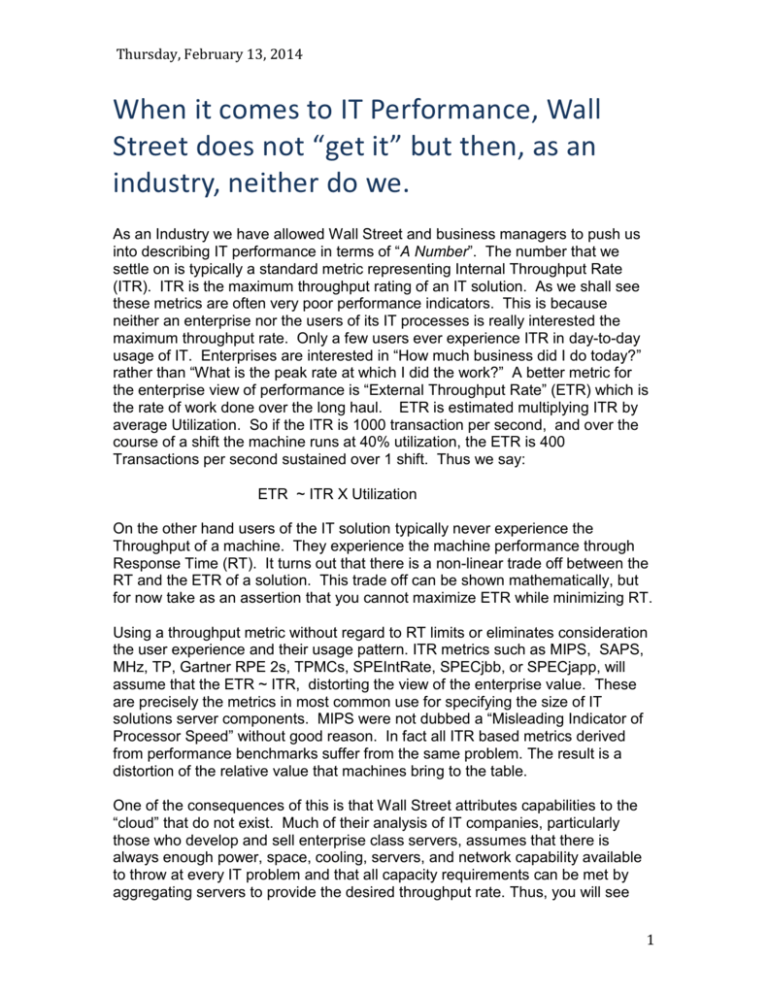

Thursday, February 13, 2014

When it comes to IT performance, Wall Street does not “get it”, but then, as an industry, neither do we. As an industry we have allowed Wall Street and business managers to push us into describing IT performance in terms of “A Number”. The number that we settle on is typically a standard metric representing Internal Throughput Rate (ITR). ITR is the maximum throughput rating of an IT solution. As we shall see, these metrics are often very poor performance indicators. This is because neither an enterprise nor the users of its IT processes are really interested in the maximum throughput rate. Only a few users ever experience ITR in day-to-day usage of IT.

Enterprises are interested in “How much business did I do today?” rather than “What is the peak rate at which I did the work?” A better metric for the enterprise view of performance is “External Throughput Rate” (ETR), which is the rate of work done over the long haul. ETR is estimated by multiplying ITR by average utilization. So if the ITR is 1000 transactions per second, and over the course of a shift the machine runs at 40% utilization, the ETR is 400 transactions per second sustained over one shift. Thus we say:

ETR ~ ITR × Utilization

On the other hand, users of the IT solution typically never experience the throughput of a machine. They experience the machine's performance through Response Time (RT). It turns out that there is a non-linear trade-off between the RT and the ETR of a solution. This trade-off can be shown mathematically, but for now take it as an assertion that you cannot maximize ETR while minimizing RT. Using a throughput metric without regard to RT limits or eliminates consideration of the users' experience and their usage pattern.

ITR metrics such as MIPS, SAPS, MHz, TP, Gartner RPE2, tpmC, SPECint_rate, SPECjbb, or SPECjAppServer assume that ETR ~ ITR, distorting the view of the enterprise value.
These are precisely the metrics in most common use for specifying the size of the server components of IT solutions. MIPS were not dubbed a “Misleading Indicator of Processor Speed” without good reason. In fact, all ITR-based metrics derived from performance benchmarks suffer from the same problem. The result is a distortion of the relative value that machines bring to the table.

One of the consequences of this is that Wall Street attributes capabilities to the “cloud” that do not exist. Much of their analysis of IT companies, particularly those who develop and sell enterprise-class servers, assumes that there is always enough power, space, cooling, servers, and network capability available to throw at every IT problem, and that all capacity requirements can be met by aggregating servers to provide the desired throughput rate. Thus, you will see analyses that say that HP, IBM, and Oracle are under pressure from “The Cloud” and assertions that these long-time industry leaders “cannot keep up”. Even Intel is attacked because ARM processors look better on pure ITR metrics, particularly when price and “Servers per square meter” are used as the key cost and capacity metrics. This is what my grandmother called “Donkey Dust”. My dad would have referred to a horse rather than a donkey.

Enterprise servers have been attacked by wave after wave of parallel processing schemes during the four decades that I have been in the industry. Every one of the distributed solution alternatives evolved into a more Enterprise Server-like solution over time. Cloud is no different. The problem is that the resulting solutions always carry some baggage from the original distributed source designs. Now even classically distributed servers, such as those based on Intel, are under attack by HyperScaled Computing using ARM processors. If you look closely at the arguments you will see an assumption that “compute power” or performance is based on ITR metrics.
This is the same “Donkey Dust” in a new shovel. Like I said, Wall Street and many in our industry don’t “get it”.

On the other hand, we have no one to blame but ourselves. We cave to the pressure of “Just give me the number”, or “what is the ratio”, or “tell me the (fill in the ITR metric of your own choice) for the machine you are talking about.” We use ITR numbers and ratios for marketing, sales, pricing, design sizing, and (God bless us) even capacity planning. We do this even though they are only vaguely related to what users experience and what enterprises value about machines.

In order to improve the situation we need some view of RT to represent the users’ point of view and some notion of utilization to understand the business point of view. Without these, the ITR values hang in a vacuum of misunderstanding and don’t really tell us what we need to know. Fortunately, there are metrics and models that can help us here.

The first model relates utilization to service level and workload usage pattern. This is expressed as “Rogers’ Equation”:

Average Utilization = 1/(1 + kc)

Since the ETR is estimated by multiplying ITR by average utilization, we get:

ETR ~ ITR/(1 + kc)

Since Rogers’ equation was derived from the Central Limit Theorem of statistics, k and c have statistical definitions. Suffice it to say that a larger k implies a tighter service level and a larger c means a more variable load. We don’t have to use the statistics to gain insight here. Note that average utilization is the peak load divided by the Peak to Average Ratio. If we assume that the peak short-term utilization is 100%, the denominator (1 + kc) is the Peak to Average Ratio of the workload usage pattern. This means that:

ETR ~ ITR / (Peak to Average Ratio of the Load)

This says the ETR approaches the ITR only if the Peak to Average Ratio approaches 1. That is, it only happens when there is a loose service level or low variability in the load.
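
The relationships above can be checked with a few lines of Python. This is a minimal sketch rather than part of the original model: the function names are hypothetical, and the values k = 1.5 and c = 1.0 are assumptions chosen only so that the result lines up with the 40% utilization example used earlier.

```python
def average_utilization(k: float, c: float) -> float:
    """Rogers' equation as stated in the text: 1 / (1 + k*c)."""
    if k < 0 or c < 0:
        raise ValueError("k and c must be non-negative")
    return 1.0 / (1.0 + k * c)


def peak_to_average_ratio(k: float, c: float) -> float:
    """With peak short-term utilization taken as 100%, the ratio is 1 + k*c."""
    if k < 0 or c < 0:
        raise ValueError("k and c must be non-negative")
    return 1.0 + k * c


def estimated_etr(itr: float, k: float, c: float) -> float:
    """ETR ~ ITR / (1 + k*c), i.e. ITR scaled by average utilization."""
    return itr * average_utilization(k, c)


if __name__ == "__main__":
    itr = 1000.0      # transactions per second, the figure used in the text
    k, c = 1.5, 1.0   # assumed values, for illustration only
    print(f"peak/average ratio:  {peak_to_average_ratio(k, c):.2f}")
    print(f"average utilization: {average_utilization(k, c):.0%}")
    print(f"estimated ETR:       {estimated_etr(itr, k, c):.0f} tps")
```

With these assumed inputs the Peak to Average Ratio is 2.5, which gives an average utilization of 40% and an estimated ETR of about 400 transactions per second. Raising either k or c pushes the ratio up and the ETR further below the ITR.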

Figure: ETR and Peak to Average Ratio. ETR/ITR (vertical axis, 0 to 1) plotted against Peak to Average Ratio (horizontal axis, 0 to 10).

There are five cases when the Peak to Average Ratio approaches one:

1. When the nature of the workload results in low variability over the long haul (think supercomputers, analytics, long structured queries, etc.).
2. When the variability is absorbed by a batching process (load levelers, queue-building solution tiers like MQSeries, etc.) to create such a load.
3. When loads are generated for benchmarks that measure ITR.
4. When consolidation reduces the variability of random loads.
5. When the service level is disregarded to maximize throughput.

Now return to the original equation. The Peak to Average Ratio approaches one only if k or c approaches zero. The first three cases are examples of situations for which c approaches zero simply because the work being done by a single workload has low variability. The fourth case is the major reason that we have virtualization. The last case is when the enterprise's desire for high throughput outweighs the users’ need for reasonable response time.

For high ETR we need high average utilization, but individual application loads show high variability. Consolidation reduces the variability, raising the average utilization and with it the ETR and the associated business value. It can be shown that Rogers’ equation for a group of n workloads of equal size and parameter c becomes:

Average Utilization = 1/(1 + kc/SQRT(n))

That is:

consolidated c = distributed c/SQRT(n)

This implies that:

distributed c = consolidated c × SQRT(n)

This is the main reason that distributed systems tend to have low utilization when driven by random business activity. Since low utilization means low ETR, distribution leads to a reduction of business value, which is corrected by virtualization. Of course we could let k go to zero, but that is essentially saying that we don’t care about the service level.
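
The consolidation effect can be illustrated numerically. The following is a minimal sketch under stated assumptions: the function name is hypothetical, and k = 2.0 and c = 1.0 are arbitrary illustrative values, not figures from the text. It simply evaluates the consolidated form of Rogers' equation for different numbers of equal-sized workloads.

```python
import math


def consolidated_utilization(k: float, c: float, n: int) -> float:
    """Rogers' equation for n equal workloads sharing one machine:
    average utilization = 1 / (1 + k*c / sqrt(n))."""
    if n < 1:
        raise ValueError("n must be at least 1")
    if k < 0 or c < 0:
        raise ValueError("k and c must be non-negative")
    return 1.0 / (1.0 + k * c / math.sqrt(n))


if __name__ == "__main__":
    k, c = 2.0, 1.0  # assumed values, for illustration only
    for n in (1, 4, 16, 64):
        u = consolidated_utilization(k, c, n)
        print(f"n = {n:3d} workloads -> average utilization {u:.1%}")
```

With these assumed values the estimated average utilization rises from about 33% for a single workload to 80% when 64 like workloads share one machine. Setting k to zero in the sketch returns 100% utilization for any n, which corresponds to disregarding the service level altogether.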
This is where the model links ETR to RT and describes the trade-off between the ETR, represented by utilization, and RT, represented by kc.

This was fairly technical and complex, but the key message is that the business value of an IT resource is related to its average utilization, which is traded off against the service level and varies inversely with the variability of the load. Distributed loads have higher variability than consolidated loads, but some distribution may be required to meet the service level RT at reduced utilization, which affects the ETR. There is no getting around this. It is baked into the system science and the resulting numbers. While distributed matrices of small machines can deliver high ITR at low cost, they will not necessarily deliver ETR at the response time required. Using ITR as a metric will almost always favor distributed solutions, even when the ETR suffers as the result of low utilization or the response time suffers due to high utilization and reduced service level.

Rogers’ model delivers insight, but we would still like to approach this more directly from the point of view of response time. The TPI metric from TeamQuest can fill this role. It is especially useful for assessing whether the operating point of a machine is at a reasonable level of trade-off between ETR and RT. To do this, TeamQuest assumes that the machine is delivering the desired ETR and then determines a value called TPI:

TPI = 100 × Service Time/Response Time

We know that:

Response Time = Service Time + Wait Time

So at zero wait time TPI = 100, and when Wait Time >> Service Time, TPI approaches 0. Likewise, when the machine is idle TPI = 100, and when the machine is at 100% utilization TPI = 0.

Different workloads take different trajectories between 100 and zero as utilization increases. When the parameter c = 1, the trajectory is a straight line and TPI = 50 at 50% utilization.
For higher c the trajectory is a curve which initially approaches zero rapidly, and TPI = 50 is reached at less than 50% utilization. For lower c the curve is initially flat, and TPI = 50 is reached at greater than 50% utilization.

It follows that Enterprise Servers have more favorable TPI curves because they can consolidate more high-c workloads than distributed solutions. This reduces the effective value of c below 1, flattening the curve and driving up the utilization of the TPI = 50 point. The low c of HPC applications does the same thing. This is why supercomputers are massively parallel. Unfortunately this also happens in benchmarks yielding ITR metrics, leading to a distortion of machine value. However, when individual load components have high variability, distribution of the load across multiple servers drives c above one, resulting in TPI = 50 at low utilization.

The difference between massively parallel “Hyper Scale Computing” and Enterprise computing is that Enterprise computing can deliver ETR and RT for randomly varying loads via massive consolidation, and the distributed solutions cannot. You have to be able to tolerate a low utilization level when you limit consolidation. Each generation of parallel computing has been propelled forward as “cost effective” until the cost of sprawl caused a need to improve utilization. ARM processors are less expensive and low power, but they will also run at low utilization, and the latency due to distribution is still much greater than the latency within an SMP or NUMA design with larger server elements.

TeamQuest uses measurements and a sophisticated queuing model to gather the values needed to generate a TPI for a system in operation. Depending on the ETR and service level requirements at hand, the tools will flag TPI dipping below 50 as cause for concern.
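
The shape of the TPI trajectories can be reproduced with a textbook queuing formula. The sketch below is an illustration under loudly stated assumptions and is not TeamQuest's model, which the text does not specify: it assumes an M/G/1 queue (Pollaczek-Khinchine mean wait) and treats c as the coefficient of variation of service time, which may differ from the statistical definition of c in Rogers' equation. All function names are hypothetical.

```python
def tpi(service_time: float, wait_time: float) -> float:
    """TPI = 100 * service time / response time, where
    response time = service time + wait time (as defined in the text)."""
    if service_time <= 0 or wait_time < 0:
        raise ValueError("service_time must be > 0 and wait_time >= 0")
    return 100.0 * service_time / (service_time + wait_time)


def mg1_wait_time(service_time: float, utilization: float, c: float) -> float:
    """Mean queueing delay for an M/G/1 queue (Pollaczek-Khinchine).
    ASSUMPTION: c is the coefficient of variation of service time."""
    if not 0.0 <= utilization < 1.0:
        raise ValueError("utilization must be in [0, 1)")
    return service_time * utilization * (1.0 + c * c) / (2.0 * (1.0 - utilization))


def utilization_at_tpi_50(c: float) -> float:
    """Under the same M/G/1 assumption, TPI = 50 when wait equals service
    time, which solves to utilization = 2 / (3 + c^2)."""
    return 2.0 / (3.0 + c * c)


if __name__ == "__main__":
    service = 1.0  # arbitrary time unit; TPI does not depend on the unit
    for c in (0.5, 1.0, 2.0):
        wait = mg1_wait_time(service, 0.5, c)
        print(f"c = {c}: TPI at 50% utilization = {tpi(service, wait):.1f}, "
              f"TPI = 50 reached at {utilization_at_tpi_50(c):.1%} utilization")
```

Under this assumed model, c = 1 gives the straight line TPI = 100 × (1 - utilization), so TPI = 50 falls at exactly 50% utilization. Higher c moves the TPI = 50 point to lower utilization and lower c moves it higher, which matches the qualitative behavior described above.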

At Low Country North Shore Consulting we have a model based on Rogers’ equation and use a simple queuing model for RT to generate an estimated TPI during the design of IT solutions. We relate these to ITR either by estimating from the machine specification or by including actual ITR results of interest in the model, or both. The model also includes information about the ability to stack work on individual threads without large increases in response time due to cache misses. We call the resulting body of work “performance architecture” because it can be applied to get better performance assumptions into IT designs very early in the design cycle.

For more information, go to www.lc-ns.com. Please use the contact us page to request information or performance architecture services, or to make comments.

Joe Temple