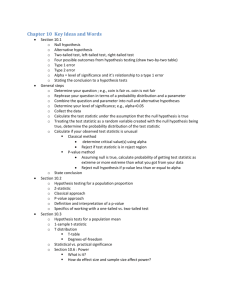

Power Analysis


My Environmental Education Evaluation Resource Assistant

Power Analysis, Statistical Significance, & Effect Size

If you plan to use inferential statistics (e.g., t-tests or ANOVA) to analyze your evaluation results, you should first conduct a power analysis to determine what size sample you will need. This page describes what power is and what you will need to calculate it.

Table of Contents
- What is power?
- How do I use power calculations to determine my sample size?
- What is statistical significance?
- What is effect size?

What is power?

To understand power, it is helpful to review what inferential statistics test. When you conduct an inferential statistical test, you are often comparing two hypotheses:

- The null hypothesis: This hypothesis predicts that your program will not have an effect on your variable of interest. For example, if you are measuring students’ level of concern for the environment before and after a field trip, the null hypothesis is that their level of concern will remain the same.
- The alternative hypothesis: This hypothesis predicts that you will find a difference between groups. Using the example above, the alternative hypothesis is that students’ post-trip level of concern for the environment will differ from their pre-trip level of concern.

Statistical tests look for evidence that you can reject the null hypothesis and conclude that your program had an effect. With any statistical test, however, there is always the possibility that you will find a difference between groups when one does not actually exist. This is called a Type I error. Likewise, it is possible that when a difference does exist, the test will not be able to identify it. This type of mistake is called a Type II error.
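To make the two error types concrete, here is a minimal simulation sketch. It is an illustration under assumed settings, not an evaluation method: it assumes two independent groups of 30 participants drawn from normal populations with a known standard deviation of 1, compared with a simple two-sided z-test at a significance cutoff (alpha) of .05. The field-trip example above is a pre/post design, so treat the independent-groups setup as a simplification. The helper names `two_sample_z_test` and `rejection_rate` are hypothetical. When the true difference is zero, every rejection is a Type I error; when the true difference is half a standard deviation, every failure to reject is a Type II error.

```python
import random
from statistics import NormalDist, fmean

def two_sample_z_test(group_a, group_b, sigma=1.0, alpha=0.05):
    """Return True if a two-sided z-test rejects the null hypothesis of no difference.

    Simplifying assumption: both populations share the same, known standard deviation sigma.
    """
    standard_error = sigma * (1 / len(group_a) + 1 / len(group_b)) ** 0.5
    z = (fmean(group_b) - fmean(group_a)) / standard_error
    critical_value = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = .05
    return abs(z) > critical_value

def rejection_rate(true_difference, n_per_group=30, trials=5000, seed=1):
    """Share of simulated evaluations in which the test reports a significant difference."""
    rng = random.Random(seed)  # fixed seed so the results are reproducible
    rejections = 0
    for _ in range(trials):
        control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
        program = [rng.gauss(true_difference, 1.0) for _ in range(n_per_group)]
        if two_sample_z_test(control, program):
            rejections += 1
    return rejections / trials

# No real difference: every rejection is a Type I error.
type_i_rate = rejection_rate(true_difference=0.0)
# A real difference of half a standard deviation: every non-rejection is a Type II error.
type_ii_rate = 1 - rejection_rate(true_difference=0.5)
print(f"Type I error rate:  {type_i_rate:.3f}")
print(f"Type II error rate: {type_ii_rate:.3f}")
```

With these settings the Type I rate should land near 0.05, while the Type II rate is roughly one half, a sign that 30 participants per group is too few to reliably detect a difference of that size.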
Power refers to the probability that your test will find a statistically significant difference when such a difference actually exists. In other words, power is the probability that you will reject the null hypothesis when you should (and thus avoid a Type II error). It is generally accepted that power should be 0.8 or greater; that is, you should have an 80% or greater chance of finding a statistically significant difference when there is one.

How do I use power calculations to determine my sample size?

Generally speaking, as your sample size increases, so does the power of your test. This makes intuitive sense: a larger sample means that you have collected more information, which makes it easier to correctly reject the null hypothesis when you should.

To ensure that your sample size is big enough, you will need to conduct a power analysis. Unfortunately, these calculations are not easy to do by hand, so unless you are a statistics whiz, you will want the help of a software program. Several free programs are available on the Internet; they are described below.

For any power calculation, you will need to know:
- The type of test you plan to use (e.g., independent t-test, paired t-test, ANOVA, or regression; see Step 6 if you are not familiar with these tests)
- The alpha value, or significance level, you are using (usually 0.01 or 0.05; see the next section of this page for more information)
- The expected effect size (see the last section of this page for more information)
- The sample size you are planning to use

When these values are entered, the program reports a power value between 0 and 1. If the power is less than 0.8, you will need to increase your sample size.

Increase your sample size to be on the safe side! Power analysis calculations assume that your evaluation will go as planned. It is quite likely, however, that you will not be able to use some of the data in your sample, which will decrease the power of your test.
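The calculation that power analysis software performs can be approximated in a few lines. The sketch below is a simplified stand-in, not how any particular package works internally: it assumes two independent groups of equal size, a two-sided test, and the normal approximation to the t-test. The function names are hypothetical. `approximate_power` takes the inputs listed above (alpha, expected effect size, and sample size per group) and returns power; `approximate_n_per_group` inverts the calculation to find the smallest sample that reaches a target power; and `inflate_for_attrition` builds in a cushion for lost data by dividing by the expected retention rate, which is one conservative way (an assumption here) to act on the warning about unusable data.

```python
import math
from statistics import NormalDist

STANDARD_NORMAL = NormalDist()

def approximate_power(effect_size, n_per_group, alpha=0.05):
    """Approximate power of a two-sided comparison of two equal-sized independent groups.

    Uses the normal approximation to the t-test and ignores the tiny chance
    of rejecting in the wrong direction.
    """
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    shift = effect_size * math.sqrt(n_per_group / 2)  # expected z statistic under the alternative
    return STANDARD_NORMAL.cdf(shift - z_crit)

def approximate_n_per_group(effect_size, power=0.8, alpha=0.05):
    """Smallest per-group sample size that reaches the target power (normal approximation)."""
    z_crit = STANDARD_NORMAL.inv_cdf(1 - alpha / 2)
    z_power = STANDARD_NORMAL.inv_cdf(power)
    return math.ceil(2 * ((z_crit + z_power) / effect_size) ** 2)

def inflate_for_attrition(n_needed, expected_dropout):
    """Number to recruit so that about n_needed remain after the expected dropout rate."""
    return math.ceil(n_needed / (1 - expected_dropout))

n = approximate_n_per_group(effect_size=0.5)
print(n)  # 63 per group
print(f"{approximate_power(0.5, n):.2f}")
print(inflate_for_attrition(n, expected_dropout=0.2))  # 79 per group
```

Because of the normal approximation, this sketch slightly understates the sample size; an exact t-test calculation for the same inputs (effect size 0.5, alpha .05, power 0.8) gives 64 per group. Use dedicated software for the numbers you actually plan with.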
You may lose data, for example, when participants complete a pretest but drop out of the program before completing the posttest. Likewise, you may decide to exclude some data from your analysis because it is incomplete (e.g., when a participant answers only a few questions on a survey). To ensure that you have enough power in the end, estimate how many individuals you may lose from your sample and add that many to the sample size suggested by the power calculation.

The following resources provide more information on power analysis and how to use it:

- Getting the Sample Size Right: A Brief Introduction to Power Analysis (Jeremy Miles). Level: Intermediate, Advanced. This page, which is easier to understand if you have some basic statistics knowledge, provides a solid introduction to power analysis, explaining what it is and how to conduct the two most common types of power analysis, a priori and post hoc. Appendices 1 and 2 provide further reading about power analysis as well as links to several free power analysis software programs.
- G*Power software. Level: Intermediate, Advanced. G*Power is a free power analysis program. It can perform power analyses for the most common statistical tests in behavioral research, including those most often used in EE. If you want to avoid finding a sufficient sample size by trial and error, G*Power lets you enter the desired power (e.g., 0.8) along with your statistical test type, alpha value, and expected effect size, and it reports the minimum sample size needed.
- Optimal Design software. Level: Intermediate, Advanced. Optimal Design is another free software tool for conducting power analyses for regressions, hierarchical linear models, and complex designs.

What is statistical significance?

There is always some likelihood that the changes you observe in your participants’ knowledge, attitudes, and behaviors are due to chance rather than to the program.
Testing for statistical significance helps you judge whether these changes could plausibly have occurred by chance alone rather than reflecting a real difference due to the program. To learn whether a difference is statistically significant, compare the probability value your test produces (the p-value) to the critical probability value you chose ahead of time (the alpha level). If the p-value is less than the alpha level, you can conclude that the difference you observed is statistically significant.

- P-value: the probability of observing a difference at least as large as the one you found if the program actually had no effect (that is, if the null hypothesis were true). P-values range from 0 to 1. The lower the p-value, the stronger the evidence against the null hypothesis.
- Alpha (α) level: the error rate that you are willing to accept. Alpha is often set at .05 or .01. The alpha level is also known as the Type I error rate. An alpha of .05 means that you are willing to accept a 5% chance of concluding that your program made a difference when it actually did not.

What alpha value should I use to calculate power?

An alpha level of .05 is accepted in most social science fields as the threshold for statistical significance, and it is the most common alpha level used in EE evaluations.

The following resources provide more information on statistical significance:

- Statistical Significance. Creative Research Systems (2000). Level: Beginner. This page introduces what statistical significance means in easy-to-understand language, including descriptions and examples of p-values and alpha values and several common errors in statistical significance testing. Part 2 provides a more advanced discussion of the meaning of statistical significance numbers.
- Statistical Significance. Statpac (2005). Level: Beginner. This page introduces statistical significance and explains the difference between one-tailed and two-tailed significance tests. The site also describes the procedure used to test for significance (including the p-value).
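The decision rule described in this section (compare the p-value with an alpha level fixed in advance) can be sketched as follows. The sketch assumes the test produces a z statistic, as a large-sample comparison of two means would; the z values and the helper names `two_sided_p_value` and `is_significant` are hypothetical, chosen only for illustration. For a t-test, ANOVA, or other test, software computes the p-value from that test's own distribution, but the comparison with alpha works the same way.

```python
from statistics import NormalDist

def two_sided_p_value(z):
    """Chance of a z statistic at least this far from 0 if the null hypothesis is true."""
    return 2 * (1 - NormalDist().cdf(abs(z)))

def is_significant(p_value, alpha=0.05):
    """Decision rule: significant when the p-value is below the alpha chosen in advance."""
    return p_value < alpha

# Hypothetical test statistics from three evaluations.
for z in (1.5, 2.1, 2.7):
    p = two_sided_p_value(z)
    print(f"z = {z}: p = {p:.3f}; "
          f"significant at .05? {is_significant(p)}; "
          f"at .01? {is_significant(p, alpha=0.01)}")
```

Here z = 2.1 is significant at .05 but not at .01, which is why the alpha level should be fixed before you look at the results.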
What is effect size?

A statistically significant difference is not necessarily big, important, or helpful in decision-making. Significance simply means that the difference is unlikely to be due to chance alone. Let’s say, for example, that you evaluate the effect of an EE activity on student knowledge using pre- and posttests. The mean score on the pretest was 83 out of 100, and the mean score on the posttest was 84. Although the difference in scores is statistically significant (because of a large sample size), it is very slight, suggesting that the program did not lead to a meaningful increase in student knowledge.

To know whether an observed difference is not only statistically significant but also important or meaningful, you will need to calculate its effect size. Rather than reporting the difference in raw units, such as the number of points earned on a test or the number of pounds of recycling collected, effect size is standardized. In other words, all effect sizes are calculated on a common scale, which allows you to compare the effectiveness of different programs on the same outcome.

How do I calculate effect size?

There are different ways to calculate effect size depending on the evaluation design you use. Generally, effect size is calculated by taking the difference between two groups (e.g., the mean of the treatment group minus the mean of the control group) and dividing it by a standard deviation, such as that of one of the groups or the pooled standard deviation of both groups. For example, in an evaluation with a treatment group and a control group, effect size can be calculated as the difference in means between the two groups divided by the standard deviation of the control group.
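Here is a minimal sketch of the calculation just described, using made-up scores for a treatment group and a control group (the scores and function names are hypothetical). `effect_size_control_sd` divides the difference in means by the control group's standard deviation, as in the example above; `effect_size_pooled_sd` shows the common alternative of dividing by the pooled standard deviation of both groups, which is how Cohen's d is usually computed. Both use sample standard deviations (n - 1 in the denominator).

```python
from statistics import fmean, stdev, variance

def effect_size_control_sd(treatment, control):
    """Difference in means divided by the control group's sample standard deviation."""
    return (fmean(treatment) - fmean(control)) / stdev(control)

def effect_size_pooled_sd(treatment, control):
    """Difference in means divided by the pooled sample standard deviation of both groups."""
    n_t, n_c = len(treatment), len(control)
    pooled_variance = ((n_t - 1) * variance(treatment) + (n_c - 1) * variance(control)) / (n_t + n_c - 2)
    return (fmean(treatment) - fmean(control)) / pooled_variance ** 0.5

# Hypothetical posttest scores, invented for illustration only.
treatment_scores = [78, 85, 82, 90, 74, 88, 81, 86]  # mean 83.0
control_scores = [72, 80, 75, 83, 70, 79, 77, 76]    # mean 76.5

print(f"Using control SD: {effect_size_control_sd(treatment_scores, control_scores):.2f}")
print(f"Using pooled SD:  {effect_size_pooled_sd(treatment_scores, control_scores):.2f}")
```

Because the treatment group varies more than the control group in these made-up data, the pooled version gives a somewhat smaller effect size. When you report an effect size, state which standard deviation you used.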
$$\text{effect size} = \frac{\text{mean of treatment group} - \text{mean of control group}}{\text{standard deviation of control group}}$$

To interpret the resulting number, most social scientists use the general benchmarks proposed by Cohen (1988) for standardized mean differences like this one:

- about 0.2 = small effect
- about 0.5 = medium (moderate) effect
- about 0.8 = large effect

How do I estimate effect size for calculating power?

Because effect size can be calculated only after you collect data from program participants, you will have to use an estimate for the power analysis. Common practice is to use a value of 0.5, which corresponds to a medium (moderate) effect.

For more information on effect size, see:

- Effect Size Resources. Coe, R. (2000). Curriculum, Evaluation, and Management Center. Level: Intermediate, Advanced. This page offers three useful resources on effect size: 1) a brief introduction to the concept, 2) a more thorough guide to effect size, which explains how to interpret effect sizes, discusses the relationship between significance and effect size, and discusses the factors that influence effect size, and 3) an effect size calculator with an accompanying user's guide.
- Effect Size (ES). Becker, L. (2000). Level: Intermediate, Advanced. This website provides an overview of what effect size is (including Cohen’s definition of effect size). It also discusses how to measure effect size for two independent groups, for two dependent groups, and when conducting analysis of variance. Several effect size calculators are also provided.

References:

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). New Jersey: Lawrence Erlbaum.
Smith, M. (2004). Is it the sample size of the sample as a fraction of the population that matters? Journal of Statistics Education, 12(2). Retrieved September 14, 2006, from http://www.amstat.org/publications/jse/v12n2/smith.html
Patton, M. Q. (1990). Qualitative research and evaluation methods. London: Sage Publications.