DiscreteRVs01

advertisement

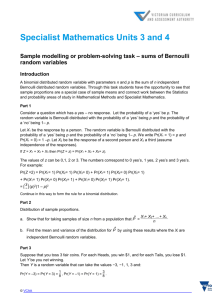

Discrete Random Variables Real-Valued “Random” variables: X , Y , , , , Events, Propositions: X 3 , Y a , b , a b , 3 , , a, b Probability: Same as the general case Discrete Real-valued Random Variables: PMF and CDF Expectation, Expected Value Terminology Expectation and of a linear function Expectation of linear combinations Variance Terminology Variance of a linear function Variance of linear combinations of independent random variables Indicator random variable, a.k.a. Bernoulli random variable Def: Let A U , be any event or proposition of interest, and define a random variable I on U by 1, u A I u 0, u A (1) Notes: A is often called a “success”, so A success and A failure . I is often called an indicator random variable, or the indicator of the event A, and denoted by IA. I is often called a Bernoulli random variable, and its distribution, (1), is called the Bernoulli distribution. Document1 1 2/9/2016 PDF f y | P I y | y 1 , 1 y y 1 , y 0, 1 f y | 1 , y 0 0, elsewhere 0, elsewhere (2) Interpretation Freq and rel freq of event A in one observation or trial Provides quantification of qualitative variables, e.g., A Outcome success I 1 (3) In (3), Outcome is a qualitative random variable and I is a quantitative random variable. Expectation and variance are not defined for qualitative random variables, but they are for quantitative random variables. Expectation E I A P A (4) The probability of the proposition or event of interest, i.e., the population proportion (if there is a population), is the expectation or population mean of the indicator, i.e., proportion has properties of mean Variance Var I A P A P A 1 (5) 0.3 0.25 pi(1 - pi) 0.2 0.15 0.1 0.05 0 0 0.2 0.4 0.6 0.8 1 pi Document1 2 2/9/2016 Graph of variance, 1 , as a function of reveals Max variance = 0.25 at 12 , i.e., when 1 , i.e., when P A P A Min variance = 0 at P(A) = 0 and 1 The variance of the indicator “measures” the uncertainty about A. Binomial Random Variable Def Let I1 , I 2 , , In (6) be a sequence of independent, identically distributed (iid) Bernoulli random variables. A random variable Y defined by n Y Ij (7) j 1 is called a binomial random variable, and its distribution is called the binomial distribution with parameters n and , where is the common (identical) Bernoulli probability P I j 1 , j 1, 2, , n. (8) The sequence of Bernoulli random variables implies the existence of a sequence of events or propositions A1 , A2 , , An (9) such that I j I Aj , j 1, 2, ,n (10) and Aj I j 1 , j 1, 2, (11) ,n and, of course, P Aj P I j 1 , j 1, 2, ,n (12) The independence of the sequence of random variables (6) implies the independence of the sequence of events (or propositions) (9). Document1 3 2/9/2016 A sequence of iid Bernoulli random variables is a model of independent, identical trials of an experiment that has a dichotomous categorical result, e.g., success or failure, e.g., A or A . Likewise, a sequence of iid Bernoulli random variables is a model of the results of sampling with replacement from a population, U. The three assumption connoted by “iid Bernoulli” are the necessary and sufficient conditions for a binomial random variable (and a binomial distribution): 1. dichotomous outcomes (Bernoulli): Aj , Aj , j 1, 2, ,n 2. independent trials: P Ai Aj P Ai P Aj , i j 3. identical trials: P Ai P Aj , i, j Given the assumptions and the connotation of Aj as a “success”, we can summarize the whole model for such an experiment by saying Y is the number, or frequency, of successes in n iid trials. Likewise, in the context of sampling with replacement, Y is the sample sum of the indicators. It follows that the random variable (Y/n) is the sample proportion, or relative frequency of success, and the sample mean of the indicators, i.e., Y 1 n Ij I n n j 1 (13) Moreover, we have the identity of events or propositions regarding the frequency and relative frequency of success, or equivalently, regarding the sample sum and the sample mean of the indicators: y Y y I Y y n n n (14) for any number y. Thus, also for any number y, y Y y P I P P Y y n n n Document1 4 (15) 2/9/2016 This, i.e., (14) and (15) means that knowledge of the distribution of the number of success Y gives us complete knowledge of the distribution of the proportion of successes Y/n. Finally, the fact that the frequency and relative frequency of success are sample sums and sample means of iid quantitative random variables (the indicators) implies that the Central Limit Theorem applies: The frequency of success Y and the relative frequency of success (Y/n) are approximately Normally distributed for large samples. PDF Formula Derivation (Pascal’s Triangle) Each path to y successes has probability π y(1 – π)(n – y) Number of paths to y is the binomial coefficient, n n n! Cy y y ! n y ! n n 1 n y 1 y y 1 1 n n 1 n 2 y y 1 y 2 y 1 2, , n n C0 1 0 n n C y 0, y 0, 1, y n y 1 , 1 (16) n , n (17) Properties of binomial coefficient Symmetry Document1 n n y n y (18) n n 1 0 n (19) 5 2/9/2016 n n n 1 n 1 (20) Recursion n 1 n n y 1 y y 1 (21) Sumx(f(x)) = 1 CDF Mean(Y) = n π Var(Y) = n π (1 – π) Sample proportion of success = Y /n = Sum(I)/n = Sample Mean(I) = Ibar E(Y /n) = E(Y)/n = n π / n = π = E(I) = E(Ibar) Var(Y /n) = π (1- π) / n = Var(Ibar) = Var(I)/n Bernoulli(π) = Binomial(1, π) Hypergeometric Poisson Document1 6 2/9/2016 Distribution Bernoulli (π) PDF y 1 , f y | 1 , y 0 0, elsewhere y 1 1 y , y 0, 1 elsewhere 0, Mean (i.e., Expected Value) Variance E Y | Var Y | 1 E Y | Var Y | f y | Binomial (n, π) n y n y , y 0, 1, , n 1 y 0, elsewhere n n 1 f y | N , R, n Hypergeometric (N, R, n) R N R y n y , y 0, 1, , n N n 0, elsewhere E Y | N , R, n Var Y | N , R, n R n N R N n R n 1 N N N 1 f y | Poisson (μ) Document1 y , y 0, 1, e y! 0, elsewhere 7 E Y | Var Y | 2/9/2016