Section 5 – Expectation and Other Distribution Parameters

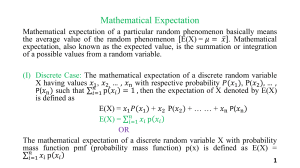

Expected Value (mean)

• As the number of trials increases, the average outcome tends toward $E[X]$, the mean
• Expectation: $E[X] = \mu_X$
  – Discrete: $E[X] = \sum_x x\,p(x) = x_1 p(x_1) + x_2 p(x_2) + \cdots$
  – Continuous: $E[X] = \int_{-\infty}^{\infty} x\,f(x)\,dx$

Expectation of h(X)

• Discrete

$$E[X] = \sum_x x\,p(x) = x_1 p(x_1) + x_2 p(x_2) + \cdots$$

$$E[h(X)] = \sum_x h(x)\,p(x) = h(x_1)\,p(x_1) + h(x_2)\,p(x_2) + \cdots$$

• Continuous

$$E[X] = \int_{-\infty}^{\infty} x\,f(x)\,dx$$

$$E[h(X)] = \int_{-\infty}^{\infty} h(x)\,f(x)\,dx$$

Moments of a Random Variable

• $n$: positive integer
• $n$-th moment of $X$: $E[X^n]$
  – So $h(x) = x^n$
  – Use the $E[h(X)]$ formula from the previous slide
• $n$-th central moment of $X$ (about the mean): $E[(X - \mu_X)^n]$
  – Not as important to know

Variance of X

• Notation: $Var[X]$, $V[X]$, $\sigma_X^2$, $\sigma^2$
• Definition:

$$Var[X] = E[(X - \mu_X)^2] = E[X^2] - (E[X])^2 = E[X^2] - \mu_X^2$$

Important Terminology

• Standard deviation of $X$: $\sigma_X = \sqrt{\sigma_X^2} = \sqrt{Var[X]}$
• Coefficient of variation: $\sigma_X / \mu_X$
  – Trap: "Coefficient of variation" uses the standard deviation, not the variance.

Moment Generating Function (MGF)

• Moment generating function of a random variable $X$: $M_X(t) = E[e^{tX}]$
  – Discrete: $M_X(t) = \sum_x e^{tx}\,p(x)$
  – Continuous: $M_X(t) = \int_{-\infty}^{\infty} e^{tx}\,f(x)\,dx$

Properties of MGFs

$$M_X(0) = 1$$

$$M_X'(0) = E[X]$$

$$M_X''(0) = E[X^2]$$

$$M_X^{(n)}(0) = E[X^n]$$

$$\frac{d^2}{dt^2} \ln[M_X(t)] \Big|_{t=0} = Var[X]$$

Two Ways to Find Moments

1. $E[e^{tX}]$
2. Derivatives of the MGF

Characteristics of a Distribution

• Percentile: the value $c$ of $X$ such that $p\%$ of the distribution falls to the left of $c$
  – Median: the 50th percentile of the distribution ($p = 50$); set the CDF, i.e. the integral of the PDF up to $c$, equal to .5
• What if (in a discrete distribution) the median is between two numbers? Then technically any number between the two is a median. We typically just take the average of the two, though.
• Mode: most common value of $x$
  – The PMF $p(x)$ or PDF $f(x)$ is maximized at the mode
• Skewness: positive is skewed right / negative is skewed left

$$\frac{E[(X - \mu_X)^3]}{\sigma_X^3}$$

  – I've never seen the interpretation on test questions, but the formula might be covered to test central moments and variance at the same time

Expectation & Variance of Functions

• Expectation: constant terms out, coefficients out

$$E[a_1 h_1(X) + a_2 h_2(X) + b] = a_1 E[h_1(X)] + a_2 E[h_2(X)] + b$$

$$E[aX + b] = a\,E[X] + b$$

• Variance: constant terms gone, coefficients out as squares

$$Var[aX + b] = a^2\,Var[X]$$

Mixture of Distributions

• Collection of RVs $X_1, X_2, \ldots, X_k$
  – With probability functions $f_1(x), f_2(x), \ldots, f_k(x)$
  – These functions have weights $\alpha_1, \alpha_2, \ldots, \alpha_k$ that sum to 1
• In a "mixture of distributions" these distributions are mixed together by their weights
  – It's a weighted average of the other distributions

$$f(x) = \alpha_1 f_1(x) + \alpha_2 f_2(x) + \cdots + \alpha_k f_k(x)$$

Parameters of Mixtures of Distributions

$$E[X^n] = \alpha_1 E[X_1^n] + \alpha_2 E[X_2^n] + \cdots + \alpha_k E[X_k^n]$$

$$M_X(t) = \alpha_1 M_{X_1}(t) + \alpha_2 M_{X_2}(t) + \cdots + \alpha_k M_{X_k}(t)$$

• Trap: The variance is NOT a weighted average of the variances
• You need to find $E[X^2] - (E[X])^2$ by finding each term for the mixture separately
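The mixture variance trap is easier to see with numbers. The sketch below is illustrative only: the function name `mixture_moments`, the two-component setup, and the weights, means, and variances are made up for this example and do not come from the slides. It builds each component's second moment from $E[X_i^2] = Var[X_i] + (E[X_i])^2$, takes the weighted average of the first and second moments, and only then forms $E[X^2] - (E[X])^2$.

```python
def mixture_moments(weights, means, variances):
    """Return (mean, variance) of a mixture from its components' means and variances."""
    if abs(sum(weights) - 1.0) > 1e-12:
        raise ValueError("weights must sum to 1")
    # Second moment of each component: E[X_i^2] = Var[X_i] + (E[X_i])^2
    second_moments = [v + m * m for m, v in zip(means, variances)]
    # Moments of the mixture ARE weighted averages of the component moments
    mean = sum(a * m for a, m in zip(weights, means))
    second = sum(a * s for a, s in zip(weights, second_moments))
    # Variance of the mixture: E[X^2] - (E[X])^2
    return mean, second - mean ** 2


# Hypothetical two-component mixture (illustrative numbers only)
weights = [0.5, 0.5]
means = [0.0, 10.0]
variances = [1.0, 4.0]

mix_mean, mix_var = mixture_moments(weights, means, variances)

# The trap: a weighted average of the component variances
naive_var = sum(a * v for a, v in zip(weights, variances))

print(mix_mean, mix_var, naive_var)  # 5.0 27.5 2.5
```

In this example the correct variance (27.5) is much larger than the weighted average of the variances (2.5), because the weighted average ignores how far apart the component means are.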