Numerical Measures of Samples: Statistics


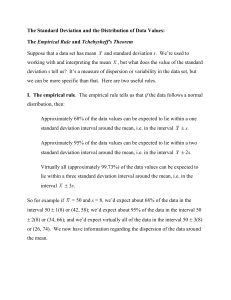

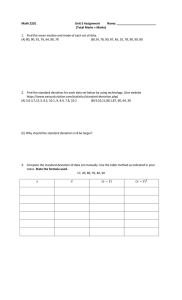

Measures of Central Tendency

The three measures of central tendency that we examined for a (finite) population have analogous measures for samples. In fact, although they are distinguished from the population parameters by notation, they are computed in exactly the same way. The measure that we wish to focus on is the sample mean. Let $n$ = sample size (number of data points in the sample) and $x_i$ = value of the $i$th sample point. Then

$$\bar{x} = \frac{\sum_{i=1}^{n} x_i}{n}$$

Example: We have taken a sample of size 5 and obtained 6, 3, 6, 1, 2. What is $\bar{x}$?

Notice that $\bar{x}$ might be different if we take another sample. Also recall that the population we are sampling from has some mean $\mu$. In general, $\bar{x} \neq \mu$. However, we might expect $\bar{x}$ to be "close" to $\mu$.

What is the median of the sample? What is the mode?

Measures of Dispersion

As before, we can compute the range of our sample. What is the range of the sample 6, 3, 6, 1, 2?

Another measure of dispersion is the sample variance, $s^2$, computed by

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1}$$

Recall that the population variance is

$$\sigma^2 = \frac{\sum_{i=1}^{N} (x_i - \mu)^2}{N}$$

In what ways are the measures similar?

An "easier" way to compute $s^2$ is to use the formulae

$$s^2 = \frac{\sum_{i=1}^{n} x_i^2 - n\bar{x}^2}{n - 1} = \frac{\sum_{i=1}^{n} x_i^2 - \dfrac{\left(\sum_{i=1}^{n} x_i\right)^2}{n}}{n - 1}$$

as

$$n\bar{x}^2 = \frac{\left(\sum_{i=1}^{n} x_i\right)^2}{n}$$

For our example, $\bar{x} = (1 + 2 + 3 + 6 + 6)/5 = 3.6$, and

| $x_i$ | $x_i - 3.6$ | $(x_i - 3.6)^2$ | $x_i^2$ |
|---|---|---|---|
| 1 | -2.6 | 6.76 | 1 |
| 2 | -1.6 | 2.56 | 4 |
| 3 | -0.6 | 0.36 | 9 |
| 6 | 2.4 | 5.76 | 36 |
| 6 | 2.4 | 5.76 | 36 |
| Sum: 18 | 0 | 21.2 | 86 |

Then

$$s^2 = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^2}{n - 1} = \frac{21.2}{5 - 1} = 5.3$$

or

$$s^2 = \frac{\sum_{i=1}^{n} x_i^2 - \dfrac{\left(\sum_{i=1}^{n} x_i\right)^2}{n}}{n - 1} = \frac{86 - \dfrac{(18)^2}{5}}{4} = 5.3$$

The sample standard deviation, $s$, is obtained by

$$s = \sqrt{s^2} = \sqrt{5.3} = 2.302$$

Chebysheff's Theorem

You might be wondering at this point what exactly $\mu$ and $\sigma$ tell us about a population, or what $\bar{x}$ and $s$ tell us about a sample. We will use this information to our advantage in multiple ways, but one easy answer to this question is given by Chebysheff's Theorem.

The proportion of observations in any sample (or population) that lie within $k$ standard deviations of the mean is at least

$$1 - \frac{1}{k^2} \quad \text{for } k > 1.$$

Just what is within $k$ standard deviations of the mean? For a sample, compute the interval $(\bar{x} - ks,\ \bar{x} + ks)$. For a population, compute the interval $(\mu - k\sigma,\ \mu + k\sigma)$.

To illustrate, let $k = 1.5$. For the sample 6, 3, 6, 1, 2 used above, we get

$$(\bar{x} - ks,\ \bar{x} + ks) = (3.6 - (1.5)(2.302),\ 3.6 + (1.5)(2.302)) = (0.147,\ 7.053)$$

This theorem guarantees that at least

$$1 - \frac{1}{k^2} = 1 - \frac{1}{1.5^2} = 1 - 0.44 = 0.56$$

or 56% of the sample will fall in this interval. What proportion of the sample does fall in this interval?

While this bound might seem weak, the point is that Chebysheff holds for all samples. In fact, you can find examples for which the bound is attained exactly, so no stronger general statement can be made.

The Empirical Rule

In the case that the histogram of the population is unimodal and symmetric (bell-shaped), a much stronger statement can be made using the empirical rule.

Approximately 68% of the observations fall within one standard deviation of the mean, $(\mu - \sigma,\ \mu + \sigma)$.

Approximately 95% of the observations fall within two standard deviations of the mean, $(\mu - 2\sigma,\ \mu + 2\sigma)$.

Approximately 99.7% of the observations fall within three standard deviations of the mean, $(\mu - 3\sigma,\ \mu + 3\sigma)$.

We will encounter the empirical rule in a formal way in the next few weeks.

There are a variety of other measures which are important in particular applications. For example, skewness measures the degree of asymmetry in a distribution. One possible way of measuring this asymmetry is by computing

$$\text{skewness} = \frac{\bar{x} - \text{median}}{s}$$

(in a population use $\mu$ and $\sigma$). Another possibility is

$$ws = \frac{\sum_{i=1}^{n} (x_i - \bar{x})^3}{n}$$

(in a population use $\mu$).
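The quantities in this section can be checked numerically. The following is a minimal Python sketch for the sample 6, 3, 6, 1, 2, assuming only the standard library; the variable names (`x_bar`, `s2_def`, `s2_short`, `bound`, and so on) are illustrative and do not come from the notes.

```python
import math
import statistics

sample = [6, 3, 6, 1, 2]
n = len(sample)

# Sample mean: sum of the observations divided by n.
x_bar = sum(sample) / n

# Sample variance from the definition (divide by n - 1).
s2_def = sum((x - x_bar) ** 2 for x in sample) / (n - 1)

# Sample variance from the shortcut formula:
# (sum of squares - (sum)^2 / n) / (n - 1).
s2_short = (sum(x * x for x in sample) - sum(sample) ** 2 / n) / (n - 1)

# Sample standard deviation.
s = math.sqrt(s2_def)

# Chebysheff: interval within k standard deviations of the mean,
# and the guaranteed lower bound on the proportion inside it.
k = 1.5
lower, upper = x_bar - k * s, x_bar + k * s
bound = 1 - 1 / k**2
inside = sum(lower < x < upper for x in sample) / n

# The two skewness measures from the notes.
skew_median = (x_bar - statistics.median(sample)) / s
skew_cubed = sum((x - x_bar) ** 3 for x in sample) / n

print(f"mean = {x_bar:.3f}, variance = {s2_def:.3f}, std dev = {s:.3f}")
print(f"interval = ({lower:.3f}, {upper:.3f})")
print(f"Chebysheff bound = {bound:.3f}, observed proportion = {inside:.3f}")
print(f"skewness (median form) = {skew_median:.3f}, cubed form = {skew_cubed:.3f}")
```

Computing the variance both ways is a useful check on hand arithmetic: the definition and the shortcut formula should agree up to rounding, and both should match `statistics.variance(sample)`, which also divides by $n - 1$.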