Transcript

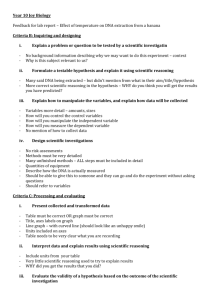

advertisement

We turn now to discuss the 2nd level of processing in the Joint Directors of Laboratories (JDL) data fusion

processing model.

The objectives of this topic are to introduce the JDL level 2 processing concept, survey and introduce

some methods for approximate reasoning such as the Bayes method, Dempster-Shafer’s method and

others, and describe some challenges and issues in automated reasoning. In this lesson, we will focus

on report-level fusion while the next lesson will introduce contextual reasoning methods such as rulebased systems and intelligent agents.

We refer again to the JDL processing model and put the level 2 process in perspective. We have

previously indicated that the JDL model is not sequential nor hierarchical. We don’t necessarily perform

level 1 fusion first, followed by level 2, etc. However, the level 2 fusion processes tend to operate on

the evolving products of level 1 fusion. Thus, in level 2 fusion, we are seeking to develop insight into an

evolving situation that involves entities such as targets, events, and activities. Level 2 fusion processing

seeks to understand the context of level-1 products, such as how entities, activities and events relate to

each other and to the environment.

The original specification of the JDL model identified the following types of sub-processes or functions

for level-2 processing. These include:

•

Object aggregation – Object aggregation involves looking a multiple entities or objects and

seeking to establish potential relationships such as geographical proximity, temporal

relationships (e.g., this object or event always appears after that object or event),

communications among entities and perhaps functional dependence (e.g., in order for this event

to occur, the following functions need to be performed).

•

Event/activity aggregation – Event and activity aggregation involves determining possible links

between events and activities and higher level interpretation. For example, girls wearing white

gowns, and multiple picture taking events, and a large dinner or banquet might indicate a

wedding event.

•

Contextual interpretation and fusion – Many events and activities are affected by (and must be

interpreted in light of) the environment, weather, doctrine and socio-political interpretations.

Doctrine refers to the formal or informal rules by which an activity occurs.

•

Multi-perspective assessment – Finally, for adversarial situations, we can use a red, blue and

white perspective assessment. Red refers to our opponents (regardless of their political or

organizational affiliation), blue refers to our own forces or players, and white refers to the

environment that affects both red and blue. Thus for military operations, the white view

would look at factors such as terrain and how that affects the ability for ground-based vehicles

to traverse from one point to another. This terrain affects both the red and the blue ground

vehicles.

While we had indicated that JDL processing is not necessarily hierarchical, there is a kind of hierarchy of

abstraction and associated processes. In this chart we show that reasoning and related data may

proceed from very physical and specific levels to very general and abstract levels.

So we may start with sensor data or human inputs to establish “what’s out there” – the existence and

observable features of an entity. At a more abstract level, we are interested in the identity, attributes

and location of observed entities. Proceeding to a higher level of abstraction, we might be interested in

the behavior of entities and the relationship of one entity to others. At a still higher level of

abstraction, we seek to understand the meaning of an evolving situation. And finally, we seek to

develop hypotheses about the future implications of the current situation.

Correspondingly the methods for reasoning about this hierarchy of abstraction range from signal and

image processing techniques (for determining the existence and features of an entity), to estimation

techniques such as Kalman Filters, cluster algorithms, neural networks, Bayesian Belief Nests and

Evidential reasoning, to finally more abstract reasoning techniques such as expert systems, use of

frames, templating, scripts, case-based reasoning and intelligent agents.

Some examples of data fusion inferences for different application domains are provided in this chart.

These include:

•

Military tactical situation assessment – involves basic inferences such as the location and

identification of low level entities and objects. Higher level inferences would seek to identify

complex entities, relationships among entities and a contextual interpretation of the meaning of

these entities. Types of reasoning would involve estimation, spatial and temporal reasoning,

establishing functional relationships, hierarchical reasoning and context-based analysis.

•

Threat or consequence assessment – for military or emergency management applications

involves many of the same types of reasoning as for tactical situation assessment. However, in

this case we are focuses on reasoning about the future and the consequences of the current

situation. Hence, reasoning methods are focused on the development of alternative

hypotheses, prediction of the consequences of anticipated actions, the development of

alternative scenarios, and cause-effect reasoning.

•

Complex equipment diagnosis – The proliferation of sensors and computers embedded into

complex mechanical systems such as automobiles and airplanes provides the opportunity to

monitor the health and potential future maintenance needs of the mechanical systems. The

basic inferences sought for this application includes determining the basic state of the

equipment by monitoring data such as temperature, pressure and vibration, locating and

identifying fault conditions and pre-cursors to fault conditions, and identification of abnormal

operating conditions. Higher level inferences involve establishment of cause-effect

relationships, analyses of processes, development of recommendations for diagnostic tests and

recommendations for maintenance actions. Some types of reasoning include failure-effect and

mode analysis (FEMA) methods.

•

Medical diagnosis – Medical diagnosis is a very complex domain that potentially involves

integrating the results of sensor measurements (e.g., X-rays, sonograms, biological tests) with

human observations by a paramedic, nurse or physician, and self-reports from a patient. We

seek to combine this information to assess symptoms, locate injuries (both external and

internal), determine abnormal conditions, and establish a diagnosis of the patient’s condition.

Types of reasoning include analysis of the relationships among symptoms, linking symptoms to

potential causes, recommending diagnostic tests, and identification of diseases.

•

Remote sensing – Finally, the chart shows inferences and reasoning associated with remote

sensing. This could be for understanding environmental damage and evolution, agricultural

applications, or dealing with crisis events. Basic inferences may include location and

identification of crops, vegetation, minerals or geographic features, the identification of features

of objects or areas of interest, and the identification of unusual phenomena. Types of

reasoning involve the determination of the relationships among geographical features,

interpretation of data, spatial and temporal reasoning, and context-based reasoning.

In general, reasoning for level-2 and level-3 processing involves context-based reasoning and high level

inferences. The techniques are generally probabilistic and entail representation of uncertainty in data

and inferential relationships. Often, the reasoning involves working at a semantic (word-based) level.

Examples of methods at the semantic level include; rule-based methods, graphical representations,

logical templates, cases, plan hierarchies, agents and others. In principle, we are trying to emulate the

types of reasoning that involves human sensing and cognition. As shown in the chart, basic techniques

and functions required include pattern matching, inference (reasoning) methods, search techniques and

knowledge representation. These are basic functions in the realm of artificial intelligence, which seeks

to develop methods to emulate functions ordinarily associated with humans and animals, e.g., vision

(computer vision), purposeful motion (robotics), learning (machine learning), reasoning (expert

systems), and speech and language understanding (natural language processing).

There are many challenges in developing computer methods to automate human reasoning or

inferencing. Humans have general sensing, reasoning and context-based situation awareness

capabilities. They tend to have continual access to multiple human senses, perform complex pattern

recognition such as recognizing visual objects, can perform reasoning with words, and have extensive

knowledge of “real-world” facts relationships and interactions. All of these must be developed

explicitly for computer systems. Also, humans tend to be able to perform rapid assessments and make

quick decisions based on rules of thumb and heuristics. Finally, humans can readily understand the

context of a situation, which affects the understanding of the sensory data and the decisions to be

made.

By contrast, computers lack real-world knowledge, are challenged by the difficulties of dealing with

English or other human languages, and require explicit methods to represent knowledge and to reason

about that knowledge. However, computers have some advantages over humans including the ability

to perform complex mathematical calculations and hence use physics-based models. They are

unaffected by fatigue, emotions or bias. In addition, computers can process huge data sets and use

machine learning techniques to obtain insights that would be difficult for a human.

There are numerous types of relationships that we may need to represent in order to perform situation

awareness or assessment. Types of relationships include:

•

Physical constituency – What are the components or elements of a system, event, activity or

entity? We may use representation techniques such as block diagrams, specification trees, or

physical models to represent physical constituency.

•

Functional constituency – What are the functions required for an event or activity. The

question seeks to determine, what does an event or activity involve, requires or provides.

Examples of representation methods include functional block diagrams, decomposition trees,

interpretive structural modeling and other methods.

•

Process constituency – What processes are involved in an unfolding situation? Does an entity

or group of entities perform one or more processes. We may use mathematical functions,

logical operations, rules or procedures to describe these processes.

•

Sequential dependency – Is there a sequence of events, activities or processes that define a

situation. What must come first? What are precursory steps required before something else

can happen? Examples of representation techniques include PERT (Program Evaluation Review

Technique) charts such as schedules or GANT charts, petri-nets, scripts and operational

sequence diagrams.

•

Temporal dependency – Related to sequential dependency is temporal dependency in which

events or activities need to be coordinated in time. These may be represented by event

sequences, timelines, scripts or operational sequence diagrams.

•

Finally, we may utilize techniques such as computer simulations, access to sample real-world

data, physical models, or other means to seek to represent and decompose a complex

environment into understandable and predictable components.

It is beyond the scope of this course to address all of the current methods used for automated

reasoning. We provide a brief list here of three major aspects of automated reasoning and types of

techniques used. The three main aspects include:

•

Knowledge representation – We need to be able to represent facts, relationships, and

interactions between entities and their environment. Common representation methods

include; rules, frames, scripts, semantic nets, parametric templates and analogical methods such

as physical models.

•

Uncertainty representation – along with representing knowledge, we need to represent

information about the uncertainty of this information. Examples of techniques include

confidence factors, probability, Demspter-shafer evidential intervals, fuzzy membership

functions, and other methods.

•

Reasoning methods and architectures – Given the ability to represent data, facts and

uncertainty, we seek to reason with or process that information in order to allow automated

generation of results such as new relationships and inferences. Methods include the following.

•

Implicit reasoning methods such as neural nets and cluster algorithms operate directly

on data without explicit guidance or semantic information;

•

Explicit reasoning methods seek to incorporate semantic knowledge such as rules or

other incorporation of human expert knowledge to guide the reasoning. Pattern

templates such as templating or case-based reasoning incorporate both parametric data

as well as semantic knowledge.

•

Process reasoning methods such as script interpreters or plan-based reasoning use

information about processes such as causal relationships, sequential requirements,

interaction among functions or actors, etc. to perform automated reasoning.

•

Deductive methods include decision trees, Bayesian belief nets and Dempster-shafer

belief nets.

•

Finally, hybrid architectures include intelligent software agents, blackboard systems and

hybrid symbolic and numerical systems.

We will review some of these methods in this and the next lesson.

In the previous lesson, we introduced pattern recognition methods such as cluster algorithms and neural

networks. We indicated that these methods could be applied to individual sensors or sources of

information to result in a “declaration of identity” based on each sensor or source alone. For example,

one might process data from a radar and use the radar cross section data as a function of time and

aspect-angle to classify the type of aircraft or object being observed. Similarly, one might use size and

shape information from a visual or infrared sensor in order to make another declaration of target type

or class.

We now turn to decision-level identity fusion. In this case, we want to fuse the identity or classification

declarations from the individual sensors and sources to arrive at a consensus – a fused declaration of

identity. This process represents a transition from Level-1 identity fusion to Level-2 fusion related to

complex entities, activities, events. The reasoning is performed at the semantic (report) level. The

concept is illustrated in this flow chart.

However, prior to introducing techniques such as voting methods, Bayesian inference, and DempsterShafer’s method, it is necessary for a brief review of some concepts from probability and logic.

Classical statistical inference was first introduced in the early 1700s and became formalized into modern

statistical inference in the 1920s by publications by Fisher and the 1930s by Neyman and Pearson.

We start by defining three types of probabilities.

i)

Classical probability involves statements about a situation in which we know all possible

outcomes of an “experiment”. For example if we flip a two sided coin with one side having a

“head” and one side having a “tail”. We can ask, what is the probability that the coin will show

a head when flipped once. If the coin is not biased, then the probability of see a Heads is

simply given ½ (that is, we could either see a H or a T). Suppose we flip the coin twice is

succession. What is the probability of seeing two head? In classic probability we consider all

the possible combinations of outcomes; that is, (H,H), (H,T), (T, H), and (T,T). Out of four

possible outcomes, showing two heads would occur once. We conclude that the probability of

seeing (H,H) is ¼. This concept can be extended to games of chance involving cards, roulette

and other forms of gambling. Indeed, the original development of probabalistic concepts arose

by French mathematicians seeking to help nobility win at games of chance.

ii)

Empirical probability – Suppose we cannot define all of the possible outcomes of an experiment

and hence cannot enumerate the possible outcomes. Empirical probability seeks to determine

probability based on observations of many experiments. For example, we seek to determine the

probability of having a flat tire on our automobile versus having a transmission failure. There

are many ways in which we could obtain a flat tire, and many ways in which a transmission

could fail. To determine these probabilities, we observe how often these events occur “in

nature” and use the relative number of occurrences (e.g., versus miles traveled) to estimate

probability. Similar means are used to determine the likelihood of being affected by a disease

or medical condition. Of course, these occurrences depend upon other conditions, but for the

moment we ignore those dependencies.

iii)

Finally, subjective probability involves a human assessment of the likelihood of the occurrence

of an event or activity. We often use subjective probabilities to determine how to live our lives

– should we take an umbrella today? Will there be heavy traffic on I-270? Subjective

probability is tempting to use, since we don’t actually have to enumerate outcomes or collect

data about underlying distributions of events or activities. Unfortunately, humans are

notoriously poor at estimating probabilities. In particular our estimate of the probability of an

event, A, (P(A)), and the probability that A does not happen, Not-A (P(Not-A)) do not add up to

one. In classic probability, the probability of event, P(A), plus the probability of event Not-A,

P(Not-A) should equal one. After all, an event either will happens or it will not. However,

when humans make these estimates (even experienced statisticians), they fail this condition.

Returning to statistical inference, we define a statistical hypothesis as a statement about a population,

which, based on information from a sample, one seeks to support or refute. For example, we

hypothesize that a coin being used by a gambling house is biased. To test this hypothesis, we would

observe the coin being flipped a number of times and seek to either refute the hypothesis (that is,

determine that the evidences does not support a biased coin), or affirm the hypothesis (state that the

coin is biased).

A statistical test is a set of rules whereby a decision on the hypothesis H is reached. A measure of the

test accuracy involves a probability statement regarding how accurate our statistical test is. Again

using the biased coin hypothesis, the measure of test accuracy would specify, for example, how many

times we would need to flip the coin to either affirm or refute the hypothesis.

The classical statistical inference proceeds as follows:

We start with a hypothesis, say H0, and it’s alternate, H1. Using the biased coin example, we assume a

hypothesis, H0, that the coin is fair or unbiased. The alternate hypothesis, H1, is that the coin is biased.

The test logic proceeds as follows: First, assume that the null hypothesis is true – assume H0, is true.

We collect data – in this case observations of the coin flips. Using the language of statistical inference,

this is known as “examining the consequences of H0, being true in the sampling distribution for the

statistic”. If the observations have a high probability of occurring, we can say that the “data do not

contradict, H0”. Thus, we do not declare that the hypothesis is true, we can only say that the data

collected does not tend to contradict the hypothesis being true. Conversely, if the observed data do

not support the hypothesis being true, we cannot say that it is false, only that the data contracts the

hypothesis. The level of significance of the test is the probability level that is considered to low to

warrant support of H0.

This may seem arcane and a stuffy mathematical set of statements, but the student is reminded that

such tests are used to determine things like, does a particular drug reduce the effects of a specific

condition or disease.

How can statistical inference be used for target or entity classification? Let’s consider an example of

emitter identification, e. g. for situation assessment related to a Department of Defense problem. In

particular, we have an electronic support measures (ESM) device on our aircraft and want to devise a

means of knowing whether or not we are being illuminated by an emitter of possible hostile intent.

Note that this example can be translated directly into other applications such as medical tests,

environmental monitoring, or monitoring complex machines. In fact, such an application is very similar

to the use of a “fuzz buster” to monitor the presence of a police speed trap.

Consider two types of emitters, emitter type E1, and emitter type, E2. Based on observing emission

characteristics such as pulse repetition frequency (PRF), and frequency of emission (F), we want to

determine whether we are being illuminated by an emitter of type, E1, or an emitter of type, E2. Why

would this make a difference? Suppose emitter type, E1, is a common weather radar, while emitter

type, E2, is associated with a ground to air aircraft missile defense unit. We want our electronic

support measure (ESM) device to provide a warning if we are being illuminated by a radar associated

with an anti-aircraft missile defense unit. How would statistical inference proceed?

Continuing this example, suppose assume that we have previously collected information about both

types of emitters and have the empirical distributions about each emitter based on pulse repetition

interval (PRI) - We will only use PRI at the moment, because it is easier to show than showing both PRI

and Frequency. The graph on the left shows two curves, one for each emitter. The curve is the

probability that an emitter of either type, E1 or type E2, would exhibit a specific value of PRI. So in the

figure, the cross-hatched area is the probability that an emitter of type, E2, would be observed emitting

with a pulse repetition interval between, PRIN and PRIN+1.

Note that the two curves overlap. Each emitter has a range of pulse repetition agility – they both exhibit

pulse repetition intervals over a wice range. But this range of PRIs overlap. If each emitter emitted

only in a narrow range of values of PRI) AND these ranges were not overlapping, then the inference

would be easy. The challenge comes when these ranges of PRI overlap as shown on the lower right

hand side of the figure.

In order to make a decision about identity, we select a critical value of PRI (call it PRIc) and decide to use

the following rule:

•

If the observed value of PRI is greater than PRIc, we declare that we have seen an emitter of

type, E2

•

Conversely, if the observed value of PRI is less than PRIc, we declare that we have seen an

emitter of type, E1.

Of course, even when we use this rule, we can still be wrong in our declaration. As shown on the right

hand side of the figure, if the observed value of PRI is greater that PRIc, there is still a probability that we

have NOT seen an emitter of type E2, but rather have seen an emitter of type E1. This is the crosshatched area under the distribution to the right of the vertical PRIc line. The similar argument holds for

declaring that we have seen an emitter of type, E1, if the observed value of PRI is less than PRIc. Each of

these errors in declaration are called a type 1 versus type 2 error, respectively.

How then do we choose the value of PRIc? Unfortunately the mathematics does not help us. This is a

design choice. If we are concerned about the threat of being illuminated by an emitter of type , E2,

because it is associated with a threatening anti-aircraft missile unit, we may elect to set the value of

PRIc, very low (move it to the left). This would reduce the false alarms in which we mistakenly declare

that we’re being illuminated by an emitter of type, E1, when in fact we are being illuminated by a radar

of type, E2. The problem is that we will get more false alarms of the other type and be constantly “on

alert” when it is not necessary. Under these conditions, a pilot might tend to ignore the alarms or even

turn off the ESM device.

These are challenging issues with no easy solutions. Think of alarm systems used in operating rooms by

anesthesiologists, or medical tests for dire diseases. False alarms can have fatal consequences.

One method to reduce issues related to false alarms is the use of Bayesian Inference. This is based on a

theorem developed by an English clergyman, Thomas Bayes, and published in 1763, two years after his

death.

Bayesian inference is an extension of classical statistical inference in several ways. First, rather than

considering only a null hypothesis, H0, and it’s alternate, H1, we consider a set of hypotheses, Hi, which

are mutually exclusive. That is, each one is separate from all other hypotheses, and exhaustive. This

collection of hypotheses represents every anticipated condition or “explanation” that could cause

observed data.

One form of the Bayes theorem is shown here. In words, the theorem says, “if E represents an event,

that has occurred, the probability that a hypothesis, Hi, is true, based on the observed event, is equal to

the a priori probability of the hypothesis, P(Hi), multiplied by the probability that if the hypothesis were

true we would have observed the event, E, P(E/Hi), divided by the sum of all the hypotheses, Hi, times

the corresponding probability that the event would have been seen or caused by each hypothesis”.

In simpler terms, the probability that an hypothesis, Hi, is true (even before we’ve seen any evidence of

it) times the probability that, if the hypothesis were true it would have caused the event of evidence to

be observed, normalized by the other ways in which the evidence could have been caused. So, Bayes

rule could also be written as; P(H|E) = P(E|H)P(H)/P(E); the probability of the hypothesis H, given that

we’ve observed E, is equal to the probability of H (prior to any evidence) times the probability that we

would have seen E given that H is true, divided by the probability that we would have observed E if any

hypothesis was true.

Returning to our electronic support measures (ESM), we can use Bayes rule to compute the probability

that we have seen (or been illuminated by) an emitter of type E (either E1 or E2), given the observation

of a value of PRI0 and Frequency, F0.

We could extend this to many different types of emitters that could be observed by our ESM system.

For each possible candidate emitter, we compute the joint probability that we have seen emitter of

type, X, given the observed values of PRI and Frequency. In each case we can provide a priori

information about that likelihood that the emitter would be observed or located in the area we are

flying. For example, if our hypothetical pilot is flying near Cedar Rapids, Iowa, it is unlikely that there is

an emitter associated with an anti-aircraft missile facility in the area. We can include information

about the relative numbers of different types of emitters, and estimate the probabilities of the evidence

given the evidence. Also we could use subjective probabilities if we do not have empirical probability

data.

Before proceeding to discuss, decision-level fusion, we introduce here the concept of a declaration

matrix. The idea is shown conceptually here for a radar observing an aircraft.

The radar output is an observed radar cross section (RCS) of the aircraft. Using techniques previously

described such as signal processing, feature extraction, pattern classification and identity declaration,

we could obtain an identity declaration matrix as shown on the figure. The rows of the matrix are

possible declarations made by the sensor. That is, the radar could declare that it has seen an object of

type 1 (e.g. perhaps a commercial aircraft), OR, it could declare that it has seen an object of type 2 (e.g.,

a weather balloon), etc.

For each of these possible declarations, D1, D2, etc. the matrix would provide an estimate of the

probability that the declaration of object identity or type actually matches reality. The columns of the

matrix are the actual types of objects or entities that could be observed. Each element of the matrix

represents a probability, P(Di|Oj). This is the probability that the sensor would have declared it has

seen an object of type, “I” (viz., Di ) , given that in fact it has actually observed an object of type “J” (Oj).

The diagonal of the matrix, containing the probabilities, P(Di|Oi), are the probabilities that for each type

of entity or object, “I”, the sensor will make the correct identification. All of the off diagonal terms are

the probabilities of false identity declarations.

We note that, in general, these probabilities would change as a function of observing conditions,

distance to the observed target, calibration of the sensor, and many other factors. How could we

determine these probabilities? The short answer is that we need to either; i) develop physics based

models of the sensor performance, ii) try to calibrate the sensor on a test range to observed these

probability distributions, or iii) use subjective probabilities. In general this is a difficult problem.

However, for the remaining part of our discussion, we will assume that we could obtain these values in

some way.

In principle, if we have a number of sensors observing an object or entity, we need to determine the

probabilities associated with the declaration matrix for each sensor or sensor type. In the previous

example, we implied that the declaration matrix was square – that the number of columns must equal

the number of rows.

In fact, this is not necessarily true. For example, we might have a sensor that is unable to identify some

entities. Instead of making a separate declaration for objects O1, O2, …, to ON, the sensor may only be

able to identify objects 1 through M and group everything else into a single declaration we’ll call “I don’t

know” (viz., Di don’t know). It is important to note here, that while it is acceptable to have a sensor declare,

“I don’t know” for a declaration, we must assume that we can account for every possible type of entity

that it might observe and result in an observation. Hence, a sensor can declare, “I don’t know”, but we

can’t create an “I don’t know” entity or set of entities. We’ll see later how this can be modified.

In order to fusion multiple sensor observations or declarations, we will use Bayesian inference. We can

process the declaration matrix of each sensor using Bayes' rule. Suppose sensor A declares that it has

“seen” an object of type 1 – viz., it makes a declaration, D1. We can compute a series of probabilities; i)

the probability that the sensor has actually seen object 1 given it’s declaration that it has seen object 1

(P(O1|D1), ii) the probability that the sensor has actually seen object 2 given that it has declared it has

seen an object of type 1, (P(O2|D1), etc. This can be done for each sensor.

Given the multiple observations or declaration, Bayes rule allows fusion of all of those declarations as

shown in the equation above. Hence, for each type of object, object type O1, O2, etc, we compute the

joint probability that the collection of sensors has observed each type of object. Finally, based on these

joint probabilities, we select which one is the most likely (viz., we choose the highest probability.

The concept of using Bayes rule is shown in this diagram for multiple sensors. Notice that Bayes

combination formula does not tell us what the “true” object is that has been jointly observed by the

suite of sensors – it merely computes a joint probability for each type of object. Now you may see why

we need to know all the possible types of objects (or entities) that could be observed. This is because

we need to know all the possible causes of the sensor observations. Again, while it is ok for a sensor to

declare “I don’t know”, it is not ok in Bayesian inference not to know all of the possible “causes” for the

observations.

So a brief summary about Bayesian inference. First, the good news. This approach allows

incorporation about prior information, such as the likelihood that we would see or encounter a

particular object, entity or situation. This is done via the use of the “prior” probabilities for each

hypothesis, P(Hi). It also allows the use of subjective probabilities – although the issues regarding

humans ability to estimate these probabilities still holds. The Bayesian approach also allows iterative

updates – we can “guess” at the prior probabilities, collect sensor information, update the probabilities

of these objects, events or activities and then use these updated probabilities as the new “priors” for yet

further observations and updates. Finally, this approach has a kind of intuitive formulation that “makes

sense”..

Now for the bad news (actually not bad news – just limitations and constraints). While we can use the

prior probabilities P(Hi) to provide improved information for the inference process, we may not always

know what these are. In this case, we resort to what mathematicians call, the “principle of

indifference”, a fancy way of saying we can set them all equal (viz., P(Hi) = 1/N, where N is the number

of hypotheses). This is convenient, but may significantly misrepresent reality.

The second issue is that we need to identify ALL of the hypotheses – the possible causes of our sensors

observing “something” (an object, event, or activity). If there is an unknown cause that could induce an

observation, this must be identified; otherwise our reasoning is flawed. For example, if a medical test

for a disease could be positive if the disease is present, and also positive if the patient has taken another

drug or uncommon food item, we would need to know that. Otherwise, our conclusions would be

biased.

A third issue involves dependent evidence. The formulae that we have shown thus far have assumed

that all of the sensor observations are independent – that one observation does not depend upon or is

influenced by another one. There are ways of accounting for such conditional dependencies, but the

formulae become complicated.

Finally, our reasoning could produce some unreasonable results if we do not consider evidential

dependencies. The classic example is observing wet grass and concluding that it must have rained. If

we knew that there was an automatic sprinkler system that came on in the early morning, our reasoning

would be flawed because we did not include these logical dependencies.

We turn now to an extension of Bayesian inference introduced by Arthur Dempster and Glen Shafer. In

1966, Arthur Dempster developed a basic theory of how to represent the uncertainties for expert

opinion using a concept of “upper” and “lower” probabilities. In 1976, Glen Shafer refined and

extended the concept to “upper probabilities” and “degrees of belief”, and in 1988 George Klir and Tina

Folger introduced the concepts of “degrees of belief” and “plausibility’. We will use these latter

concepts in our discussion of what is commonly termed “Dempster-Shafer” theory.

The basic concept involves modeling how humans observe evidence and distribute a sense of belief to

propositions about the possibilities in a domain. Conceptually we define a quantity called a “measure of

belief” that evidence supports a proposition, A. (e.g., m(A)). We can also assign a measure of belief to a

proposition, A, and it’s union with another hypothesis, B; e.g., m(AUB). A couple of comments are

needed here. First, a measure of belief is not (quite) a probability – I’ll explain momentarily. Second, a

proposition may contain multiple, perhaps conflicting hypotheses. And third, the Dempster-Shafer

theory becomes identical to Bayesian theory under some restricted assumptions.

In the Dempster-Shafer concept, we say that the probabilities for propositions, are “induced” by the

mass distribution according to the relationship shown above. That is, the Probability of Proposition, A,

P(A) is equal to the sum of all of the probability masses, m, that both directly relate to proposition, A,

and to propositions that contain A as a subset. If we assign probability masses ONLY to hypotheses

rather than to general propositions, then the relationships reduce to a Bayesian approach.

A key element of the Dempster-Shafer approach is that measures of belief can be assigned to

propositions that need not be mutually exclusive. This leads to the notion of an evidential interval

{Support (A), Plausibility (A)}. We define an interval that includes a measure that the belief supports the

proposition A directly, and a plausibility measure – a measure of the extent to which the assigned belief

supports the proposition A indirectly. The plausibility is a measure of the extent that the belief does

not directly refute the proposition, A.

Let’s turn to an example.

Consider the classic probability problem of throwing a die. There are six observable faces that could be

shown if a die is thrown; namely, “the number showing is “1”, the number showing is “two”, etc. In

classic probability and in Bayesian inference, we only assign evidence or probabilities to the

fundamental hypotheses; “hypothesis 1 – the die will show a 1”, “hypothesis 2 – the die will show a

“2”,” etc. However, in the Dempster-Shafer approach we can assign measures of belief not only to

these fundamental hypotheses, but also to propositions such as, “the number showing on the die is

even”, the number showing on the die is “odd”, and finally, the number showing on the die is either a 1

or 2 or 3 or 4 or 5 or 6. This latter proposition is equivalent to saying, “I don’t know” – it is a measure

of belief assigned to a general level of uncertainty.

In the Dempster-Shafer approach, we introduce two special sets – the set, Θ (theta), of the fundamental

hypotheses, and the set 2Θ, of general propositions. The set, Θ, is called the “frame of discernment”.

The classic rules of probability show us how to compute the probabilities of combinations of hypothesis;

the Bayesian rules of combination show us how to combine probabilities (including a priori information),

and the Dempster-Shafer rules of combination show is how to combine probability masses for

combinations of propositions. These are shown on the next two charts.

Here are the equations to define the support for a proposition, A, and the plausibility of proposition, A.

Support is the accumulation of measures of belief for elements in the set, Θ, AND the set, 2Θ, that

directly support the proposition, A. Similarly, the plausibility of A, is the sum of the evidence that

directly refutes proposition, A.

The support for A is the degree to which the evidence supports A, either directly or indirectly, while the

plausibility is the extent to which the evidence fails to refute the proposition, A. The support for A and

the plausibility for NOT-A must sum to a value of 1.

Consider the following two examples; i) First, suppose the support for A is 0 and the plausibility for A is

0. That means there is NO evidence that supports A (either directly or indirectly) and there IS evidence

that directly refutes A – hence the proposition must be false. ii) Second, suppose the support for A is

0.25 and the plausibility is 0.85. This means there is a 25% amount of evidence that supports A directly,

and 15% of evidence that directly refutes proposition A. The difference between the plausibility and

the support is called the evidential interval. In this case there is evidence that both supports AND

refutes proposition, A.

Clearly, if the support for proposition, A, was 1 (indicating 100% evidence supports proposition, A, AND

the plausibility of A was 1 (meaning there was 0 % evidence to refute A, then the proposition must be

true. The evidential interval provides a measure of the uncertainty between support and refutation for

a proposition.

Let’s consider an example of a threat warning system (equivalent to an Electronic Support Measures

(ESM) system that we previously introduced). This example is adopted from an article by Thomas

Greer. We suppose that the threat warning system (TWS) measures the pulse repetition frequency or

interval (PRF) and the radio frequency (RF) of radars that might be illuminating our flying aircraft. We

suppose that we’ve collected previous information or developed models, so we can understand the

relationship between the PRF, RF observations and the type of radar that emits or exhibits that PRF and

RF. What we’re especially concerned about are those radars that are associated with surface to air

missile (SAM) units. In addition, we have some evidence and models that allow us to determine what

mode of operation such a radar is in; for example in a target tracking (TTR) mode, or an acquisition mode

(ACQ).

For those who are unfamiliar with such a radar, I note that as an aircraft is flying towards a military unit

having a surface to air missile weapon, the SAM radar might transition from a general surveillance

mode, to a target tracking mode, to an acquisition mode, and finally to a mode in which the radar is

guiding a missile to shoot down our aircraft! Hence, we are concerned not only about what type of

radar is observing us, but what mode of operation it is in. This mode of operation would provide

evidence of whether or not we are a potential target of a surface to air missile.

In this example, we suppose that we have a special “Dempster-Shafer” sensor that observes RF and PRF,

and based on some model assigns probability masses (measures of belief) to what type of radar is being

observed and what mode of operation it is in. Sample values are shown here. The term, SAM-X,

means, “a surface to air missile radar of type X”. In the example, above, the warning system assigns,

measures of belief to; i) having seen a surface to air missile radar of type X (SAM-X = 0.3), ii) having seen

a surface to air missile radar of type X in the target tracking mode (SAM-X, TTR = 0.4), iii) having seen an

surface to air missile radar of type X in the acquisition mode (SAM-X, ACQ = 0.2) and finally, iv)

assignment of general uncertainty of a value of 0.1. Given this, the chart uses the previous DemspterShafer relations to compute the evidential intervals for various propositions.

Suppose we had two Dempster-Shafer sensors. How would the evidence be combined? This chart

shows a numerical example of two sources or sensors providing measures of belief about surface to air

missile radars. The example indicates the resulting credibility or evidential intervals for various

propositions. In turn, these can be combined using Dempster’s rules of combination to produce fused

or combined evidential intervals. These combined results are shown in the next slide.

For this example, this chart shows the fused measures of belief and evidential intervals based on the

combined evidence from the two Dempster-Shafer sensors. How did we get these results?

To get the fused results, we use Dempster’s rules of combination. These are summarized as follows;

•

The product of mass assignments to two propositions that are consistent leads to another

proposition contained within the original (e.g., m1(a1)m2(a1) = m(a1)).

•

Multiplying the mass assignment to uncertainty by the mass assignment to any other

proposition leads to a contribution to that proposition (e.g., m1()m2(a2) = m(a2)).

•

Multiplying uncertainty by uncertainty leads to a new assignment to uncertainty (e.g,

m1()m2() = m()).

•

When inconsistency occurs between knowledge sources, assign a measure of inconsistency

denoted k to their products (e.g., m1(a1)m2(a1) = k).

This chart, adapted from an example provided by Ed Waltz, shows a graphical flow of how multiple

sources of information are combined using the Dempster-Shafer approach. For each source or sensor,

we obtain the measures of belief for propositions and subsequently compute the evidential or

credability intervals. We compute the composite belief functions using Dempster’s rules of

combination and subsequently compute the joint or fused credibility intervals for the pooled evidence.

In our previous discussion of the Bayesian approach, we noted that the approach only provided us with

resulting probabilities for hypotheses, but that we still had to apply logic to select the actual hypothesis

we believed to be “true”. Similarly, the Dempster-Shafer approach only provides us with joint or fused

credibility intervals for various propositions, but does not select which proposition should be accepted

as being “true”. This final selection must be based on logic that we provide.

This chart is analogous to the chart shown for the Bayesian inference process. It shows the flow from

sensors, to declarations of probability masses for propositions, to combination of these declarations

using Dempster’s combination rules, to a final decision using decision logic.

As with Bayes method, we will highlight some “good news” and “bad news” about Dempster-Shafer

inference. First, the good news; i) The method allows incorporation of prior information about

hypotheses and propositions (just as Bayes method did using “prior probabilities”); ii) It allows use of

subjective evidence, iii) it allows assignment of a general level of uncertainty – that is, it allows

assignment of probability mass to the “I don’t know” proposition, and finally iv) it allows for iterative

updates – similar to the Bayesian approach.

Now for some issues – the “bad news”; i) As with Bayes method, it requires specification of the “prior

knowledge” – that is, if we’re going to use information about the prior likelihood of propositions, we

must provide that information, ii) this is not a particularly “intuitive” method – while it is aimed at trying

to model how humans assign evidence, it is not as intuitive as the use of probabilities, iii) this method is

computationally more complex and demanding that the Bayesian approach, iv) it can become complex

for dependent evidence, and finally, v) this may still produce anomalous results if we do not take into

account dependencies. This is similar to the issue with Bayesian inference.

A final note involves the use of a general level of uncertainty. A strength of this method is that we can

assign evidence to a general level of uncertainty. This would represent for example a way to

The final decision-level fusion technique to be introduced in this lesson is the use of voting. The idea is

simple: Each sensor or source of information provides a declaration about a hypothesis or proposition,

and we simply us a democratic voting method to combine the data. In the simplest version, each

source or sensor gets an equal vote. More sophisticated voting techniques can use weights to try to

account for the performance or reliability of each source, or other methods. As with each of the

previous methods introduced, we will need decision logic to determine what final result we will select as

being “true”. Thus, we might use logic such as, “the majority rules” (select the answer based on which

gets the most “votes”), or plurality or other logic. Voting techniques have the advantage of being both

intuitive and relatively simple to implement.

This slide simply summarizes some of the fusion techniques for voting, weighted decision methods, and

Bayesian Decision processing.

Young researchers in automated reasoning and artificial intelligence wax enthusiastically about the

power of computers and their potential to automate human-like reasoning processes (saying in effect

“aren’t computers wonderful!”)

Later in their careers these same researchers admit that it is a very difficult problem and believe they

could make significant progress with increased computer speed and memory

Still later, these researchers realize the complexities of the problem and praise human reasoning (saying

in effect, “aren’t humans wonderful!”). The message is that, while very sophisticated mathematical

techniques can be developed for decision-level fusion, humans still possess some remarkable

capabilities, especially in the use of contextual information to shape decisions.