

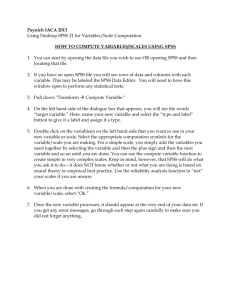

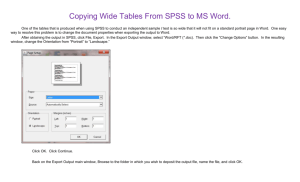

Downloading a Pew Dataset and Using SPSS to and for Analysis: Part Two

Once you have completed recoding your variables, you will want to begin doing some basic univariate analyses of your data. There are two main purposes for univariate analysis. The first is to summarize the “central tendency” of some quality that has been explored in a survey, e.g., “the mean income for survey respondents was $43.4.” Depending on what you are looking at, means, medians, and modes are the commonly reported measures of central tendency. The other main purpose of univariate statistics is to show how a given quality is distributed. For example, we could look at the standard deviation associated with our survey’s mean income to see whether most people’s incomes cluster around the sample average or are more broadly distributed. We could also look at a skewness statistic to see whether the distribution deviates from a bell curve (e.g., income is skewed toward the wealthy: the long tail of the distribution points toward high incomes, because lots of people are quite poor and comparatively few are rich). Since standard deviations and measures of skewness aren’t something most people are familiar with, we might instead summarize the distribution and skew of income in our sample by reporting means for people at each quartile, quintile, or decile.

Generating univariate statistics (and charts, if appropriate) is something you can do from the syntax window. If you are working with a previously saved syntax file, you will need to open it and run the whole thing to recreate your modified dataset (i.e., the one that has all of your recoded and computed variables). Then, do the following:

1. Staying on your syntax page, select “Analyze” -> “Descriptive Statistics” -> “Frequencies”.
2. Select the variable (or several, if you want) that you are interested in analyzing and click the arrow button to move it into the variable list.
3. To find out additional information on this variable, select “Statistics” and then check “mean,” “median,” or “mode,” depending on what makes the most sense for what you want to know. If you’ve created a “dummy variable” (coded 0-1) that identifies people who belong to a certain group, selecting the mean option will show you the proportion of respondents belonging to the category (multiply by 100 for the percentage). Click “Continue” when finished in that dialog box.
4. You may want to look at your data in a graph or chart; if so, select “Charts” and your preferred chart type, then click “Continue.” If you are dealing with a variable that has nominal categories, bar charts are usually the easiest to read. For variables that are continuous and have lots of values (age in years or income, for example), a line chart or histogram is your best option. Whatever chart you choose, it will be more readable if you check the option to display your data in percentages rather than counts (e.g., if comparing men and women, you want to see percentages rather than the number of respondents in each category).
5. Once you have finished selecting your various options, you can hit the “OK” button to run your statistics. If you would rather see the SPSS code for these procedures, click “Paste” instead; this pastes the command into the syntax file. Highlight the command and select “Run” -> “Selection”, and presto, you will have your chart and frequencies. In all cases, look carefully at the results in your output file to make sure that nothing looks really strange as a consequence of a variable coding mistake.
6. If, for some reason, you want to produce univariate stats for just a subgroup or to see stats for two groups, you can use the Split File command: Data -> Split File (checking the “compare groups” option).
This command would allow you to see various statistics for just “Republicans” (as well as for the “non-Republicans”) if you split your dataset on the dummy variable for partisanship that we created in the example above. After you are done with your subgroup analyses, you need to go back into the Split File dialog and tell SPSS to resume analyzing “all cases.” An important note: if you want to know whether there are statistically significant differences among groups (e.g., whether Republicans are different from Democrats), you can’t use this procedure. To compare the means of two or more groups, you need to use either a T-test or an ANOVA procedure as described below.
7. As you shut down SPSS, you may or may not want to save any syntax related to these procedures. On the one hand, you might want to save your code for these analyses somewhere so you can cut and paste it into your syntax sheet and quickly reuse it for other variables you want to analyze later on (when you will be doing pretty much the same thing with more than one variable, it is much quicker to cut and paste a command in the syntax file than to renegotiate a menu command and its various dialog windows). On the other hand, if you are going to make further modifications to the dataset based on what you found, it may make sense not to have this material cluttering up your syntax file.

ANALYZING DATA IN SPSS: BIVARIATE ANALYSES

Most of you will want to see what, if any, statistically significant relationship exists between two variables. What exactly do we mean by a statistically significant “relationship”? Regardless of which specific “test of association” you choose to use to generate a “coefficient of association,” you will ask SPSS to do four things. First, you will run some type of procedure to learn if two or more variables (that is, properties) in your dataset are associated, which is to say that they tend to vary together, as if they were connected in some way.
Second, if there appears to be some type of “covariance,” you want to know whether this seeming association could be due purely to chance. Put differently: if the two variables were actually unrelated in the population and you redid this survey 100 times at the same time, would an association this strong turn up in fewer than 5 of those surveys? If so, we call the association statistically significant. Third, you want to know how “strong” the association is; in other words, how consistently do the two variables co-vary? Finally, if the variables have some internal order (e.g., their values range from less to more), you want to know “the direction of the relationship,” by which we mean whether an increase in the value of one of the variables appears to co-vary positively or negatively with the value of the other variable.

Before you do anything below, the first quick step in bivariate analysis is to take a look at a correlation matrix that includes all of the variables you are interested in. To do this, go to: Analyze -> Correlate -> Bivariate. Check only the option for the Pearson correlation coefficient, then go to the left window and double-click on every variable in which you are interested, including both dependent and independent variables. You will get a quick and dirty view of which variables are significantly correlated (i.e., those starred by SPSS or that carry a significance statistic below .05). The Pearson coefficient also gives you some idea of how strong a negative or positive relationship is. If the coefficient is really small and insignificant, it is unlikely that the more precise tests described below will add much information to your story.

1. To get more precise with bivariate analysis, you need to determine what kind of variables you will be working with. A quick review of the basic variable types: Categorical (aka nominal) variables are indicators where different values represent different names or categories for something (e.g., gender, party identification, or religion).
A dummy variable is just a yes/no categorical variable that has been coded 0/1. Ordinal variables are indicators where different values represent a rank ordering of some sort (e.g., a question that asks respondents if they “strongly agree,” “agree,” … “strongly disagree”). Interval variables have ordered values where any one-unit increase in the indicator’s value is approximately equal to any other one-unit increase. Age is an example of this.
2. If you are looking to see if there is a relationship between two categorical/nominal variables, you will use the crosstabs command: Analyze -> Descriptive Statistics -> Crosstabs. The most appropriate statistical test for two variables of this type usually is lambda, so you will need to check this option by opening the statistics window. Lambda produces a statistic that ranges from zero (no relationship) to one (completely associated). Under certain circumstances, lambda returns a 0 when there actually is a relationship between the two variables. If you get a zero for association, use Cramer’s V instead. Keep in mind that the procedures for analyzing nominal variables do not provide any information about the direction of the relationship, because these variables’ coding (e.g., parties arbitrarily assigned the numbers 1, 2, or 3) reflects names (e.g., Repub., Dem., Libertarian) rather than some underlying, ranked order. Here’s a rough guide to use when analyzing the strength of categorical and ordinal coefficients of association: under .1 = very weak relationship; .1 to .2 = weak; .2 to .29 = moderate; anything above .4 = strongly correlated, unless we would expect the two variables to be more strongly linked.
3. If you are examining the relationship between a categorical and an ordinal variable, you still use cross-tabs, but the appropriate measure of association is Cramer’s V. It also ranges between zero and 1, and is interpreted like lambda.
4. If both of your variables are ordinal, you will still use cross-tabs, but the appropriate measure of association is usually Gamma (although researchers use several other measures interchangeably). If your variables both happen to have the same number of ordered categories, the best test of association is tau-b. These measures range from -1 to +1, and the sign shows whether the two variables have a negative or positive association (e.g., a positive association: people who agree that schools should be improved may also be more likely to agree that taxes should increase).
5. To test the association between a categorical and an interval variable, use crosstabs and request the eta measure.
6. Here’s an example of how to use cross-tabs. When using the cross-tabs dialog window, it is customary to set up your table so that the different values of your dependent variable are listed in the rows. Drawing from the example above, let’s say you want to see whether considering yourself to be a born-again Christian is related to being a Republican. In this example, the “cell display” options are checked for “observed” counts and “column” percentages. Before we look at the example, we need to recode the “born” variable (Do you consider yourself to be a born-again Christian?) to deal with “don’t know” and/or missing responses:

    RECODE born (9=SYSMIS) (2=0) (ELSE=COPY) INTO bornagain2.
    VARIABLE LABELS bornagain2 'Consider yourself born again Christian'.
    VALUE LABELS bornagain2 1 'Yes, born again' 0 'No'.
    EXECUTE.

7. Now we can use the cross-tab procedure. After pasting the syntax code into the syntax file and running that selection of code, here’s what we end up with on our output screen. First, we see that Republicans in the survey were more likely to say they are born-again. Our output also tells us that the relationship between the two variables is statistically significant (the Approx. Sig. is below .05, which in this case tells us that if being Republican and being born-again were unrelated in the population, an association this strong would almost never turn up by chance in a sample of this size). One of the cardinal rules of stats is that statistical significance is not the same as substantive importance. Here, the association between the two variables is not especially strong, especially in light of the popular perception that most born-again Christians are Republican. Specifically, the Cramer’s V statistic of .132 (we won’t rely on the lambda coefficient because this is one of those circumstances where it comes up zero) indicates a weak association: there are lots of Democrats who are born-again and lots of Republicans who are not.

Symmetric Measures

| | | Value | Approx. Sig. |
|---|---|---|---|
| Nominal by Nominal | Phi | .132 | .000 |
| | Cramer's V | .132 | .000 |
| N of Valid Cases | | 2775 | |

How is correlation different from the other measures of association?

In cases involving an interval variable and another variable whose coding has a logical rank order, we will need to examine whether there is a correlation between the two variables. Because of the number of response categories involved, we don’t want to use the cross-tabs procedure.

1. If you want to examine the direction and strength of the relationship between an ordinal and an interval variable, Spearman’s Rho is the appropriate test: Analyze -> Correlate -> Bivariate (check just the Spearman option). This test’s output will return a “correlation coefficient” that ranges from -1 to +1. It is positive when an increase in one variable’s value corresponds to an increase in the other, and negative when it corresponds to a decrease. SPSS also will provide a significance statistic for testing whether the Rho coefficient is statistically different from zero (a Sig. of .05 means that, if the true correlation were zero, a coefficient at least this large would turn up in only about 5 percent of samples). If the Sig. is above .05, we can’t be confident enough to say that there is a relationship between the two variables that isn’t due to normal sampling variation.
2. If you want to examine the direction and strength of the relationship between two interval variables, Pearson’s r is the appropriate test: Analyze -> Correlate -> Bivariate (check just the Pearson option). It is interpreted the same way as Spearman’s Rho.

Comparing the means of populations

Sometimes we want to see if different groups have statistically different means for an interval or ordinal variable (e.g., Do Republicans, on average, make more or less money than Democrats? Do they differ with respect to their average level of agreement with the statement “you can trust government most of the time”?).

1. If you are comparing means for just two groups that are coded as different values of the same variable (for instance, you want to compare men and women using a gender indicator that is coded 0, 1), you will use the Independent Samples T test: Analyze -> Compare Means -> Independent-Samples T Test. The interval/ordinal variable, which is your dependent variable, goes into the “Test Variable(s)” window. If your categorical variable happens to contain three or more subgroups (e.g., Democrats = 1, Republicans = 2, and others = 3), you can still compare the means for just two of them; you just need to use the “Define groups” option to indicate which two values of the variable should be compared (e.g., groups 1 and 2 if you want to compare the means for Dems and Repubs).
2. If you want to compare means using a categorical variable that identifies three or more groups, you have two options. First, you can use the Anova command to test whether the groups’ means differ from one another (e.g., test whether the mean level of support for government differs among Libertarians, Democrats, and Republicans).
The appropriate test for this situation is: Analyze -> Compare Means -> One-Way ANOVA (the “factor” variable is your categorical variable; the dependent variable is your interval variable). Keep in mind that the “F-statistic” is a single test for the whole set of groups: a significant F tells you that at least one group’s mean differs from the others, not which one, and F can come back insignificant when the differences are small or when one of the groups has a small sample size and thus a large margin of error. A second option for comparing the means of several subgroups is to use the Independent Samples T test and just change out the groups being compared. Thus, if you have a variable where you want to compare the means of subgroups 1, 2, and 3, you would run three separate T-tests with different “define groups” settings: 1 and 2, 2 and 3, 1 and 3. Although slightly more work, this is the preferred procedure when comparing multiple means.

ANALYZING DATA IN SPSS: MULTIVARIATE ANALYSIS

Many of you will not be satisfied with using your research project to explore how just one or two variables impact a given outcome. One way to look at associations while adding an additional control variable or two is to use the “layers” option in the cross-tabs procedure (e.g., you could compare men and women with respect to the existence, strength, and significance of a relationship between being a born-again Christian and being Republican). While this is nice for charts, most social scientists take things a step further. Have you ever wondered why most studies reported in political science, natural science, and even medical journals use “regression” models even though most Americans have no idea what these procedures entail or how to interpret their output?

Most social science research today uses somewhat more sophisticated methods than crosstabs for two reasons. First, we often want to consider how changes in each one of many different independent variables will affect an outcome in which we are interested.
Frankly, life is complicated, and controlling for more variables makes it clearer how our variables of interest shape the outcome we are studying. So, rather than looking at how race impacts voting, how gender impacts voting, how education impacts voting, etc., social scientists instead frequently want to know how differences in race, gender, and education all interact to impact voting. Second, “regression” analytical techniques also allow us to answer the question of whether there is a relationship between an independent variable of special interest and our dependent variable(s) once other relevant factors are taken into account.

As an example of how we can use regression models to answer a question that would be impossible to get at with bivariate methods, consider a study that wants to know if Mexican Americans vote at the same rate as other Americans. Let’s assume that previous research has suggested 1) that Mexican Americans vote in presidential elections at lower rates when we just compare mean rates of turnout, 2) that Mexican Americans, on average, have lower socioeconomic indicators, and 3) that people with lower socioeconomic indicators vote at lower rates. Our research thus might want to see whether Mexican Americans still vote at a lower rate than other Americans once we “take into account,” that is, “control” for, the various socio-economic indicators that impact voting. In other words, we want to know whether there is something about being a Mexican American that leads to lower voting, rather than other qualities that disproportionately apply to this group. To answer this question, we can use a statistical program like SPSS to work out all of the permutations necessary to compare the voter turnout of Mexican Americans and other Americans across varying levels of income, educational attainment, occupational status, etc.
Our results might allow us to say something like, “While the odds that the typical Mexican American will vote in a presidential election are approximately half those of other Americans, once the effects on voting of the socio-economic differences between the two groups are taken into account, the odds that a non-Mexican American will vote in a presidential election are only 20 percent higher than the odds for a Mexican American.”

Why most of you will want to use the same specific type of regression: Logistic regression

With the type of datasets you will be working with, the most straightforward way to analyze the impact of several variables on a phenomenon is to use or to make a dependent variable that is dichotomous (more specifically, to use a variable constructed in a yes/no format). This type of variable allows the use of logistic regression (Analyze -> Regression -> Binary Logistic) to explore how variations in a given independent variable increase or decrease the odds that our outcome of interest will occur. If you are incredibly fortunate and your dependent variable of interest is an interval variable with a wide range of values, you will be able to use the easiest-to-interpret type of regression: ordinary least squares. If, however, you have a dependent variable that takes the form of a count (e.g., how many times have you voted in the last year?) or an ordinal ranking (e.g., do you support the torture of suspected terrorists “often,” “some of the time,” “rarely,” or “never”?), the interpretation of the appropriate regression model is sufficiently complicated that we will ask you to convert your dependent variable into a dichotomous variable so that you can use logistic regression.
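As a rough sketch of what that conversion and the regression request might look like in syntax, assume the ordinal torture item is stored in a variable named `torture` coded 1 = often, 2 = sometimes, 3 = rarely, 4 = never. The variable name, those codes, and the new name `torture2` are illustrative assumptions, so check them against your codebook before running anything. The predictors are the recoded variables used in this guide's example model.

```spss
* Assumed coding: 1 = often, 2 = sometimes, 3 = rarely, 4 = never.
* Build a 0/1 dummy: often or sometimes = 1, every other response = 0.
* System-missing cases stay missing instead of being counted as 0.
RECODE torture (1,2=1) (SYSMIS=SYSMIS) (ELSE=0) INTO torture2.
VALUE LABELS torture2 1 'Often or sometimes justified' 0 'All other responses'.
EXECUTE.

* Check the new variable before modeling.
FREQUENCIES VARIABLES=torture2.

* Binary logistic regression with all predictors entered at once.
LOGISTIC REGRESSION VARIABLES torture2
  /METHOD=ENTER BornAgain2 income educ Male Republican.
```

Highlighting these commands and choosing “Run” -> “Selection” should produce the same kind of output as the menu route, including the Model Summary and Variables in the Equation tables.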
In the example dataset we will be working with in class, we begin our work by recoding the question related to torture: “Do you think the use of torture against suspected terrorists in order to gain important information can often be justified, sometimes be justified, rarely be justified, or never be justified?” Instead, we will ask: Who supports the regular use of torture on terrorism suspects? By “supports,” we mean who told Pew’s surveyors that torture is either sometimes or often justified (= 1) and who didn’t (all other responses = 0). After recoding several independent variables we think may be relevant to explaining levels of support, we can use logistic regression to see how the various factors impact the likelihood that a given person will support the widespread use of torture on terrorism suspects. Specifically, in the model whose results are pasted below, we use logistic regression to ask how being a born-again Christian, being more wealthy (as measured by income ranging across 9 increasing brackets), having more education (as measured across 7 increasing levels), being male, and being Republican impact the likelihood that a respondent will say that the use of torture is at least “sometimes” justified.

Here’s the output that we would get back from SPSS. At the very end of the output screen (SPSS reports a lot of output before it gets to the important part, so you can ignore the earlier output), we see the “Model Summary” table. Here, we learn that our variables collectively don’t explain very much about what makes people likely to support torture at least sometimes. In fact, the normal way to read these two versions of a “pseudo R-squared statistic” is to say that this regression model “explains” about 4 percent of the variance in whether or not someone will support torture.
Put another way, even after we know your gender, income, political party, educational attainment, and whether or not you consider yourself to be a born-again Christian, we still know very little about whether or not you are likely to support the torture of suspected terrorists.

Model Summary

| Step | -2 Log likelihood | Cox & Snell R Square | Nagelkerke R Square |
|---|---|---|---|
| 1 | 615.626 (a) | .035 | .048 |

a. Estimation terminated at iteration number 3 because parameter estimates changed by less than .001.

Despite the model’s “poor fit” (i.e., the model doesn’t include most of the factors that impact support for torture), we can still get quite a lot of information out of the procedure. Specifically, we can determine the influence of any one of the variables, by itself, once we hold the influence of the other variables constant:

Variables in the Equation

| Step 1 (a) | B | S.E. | Wald | df | Sig. | Exp(B) |
|---|---|---|---|---|---|---|
| BornAgain2 | .165 | .195 | .718 | 1 | .397 | 1.179 |
| income | .036 | .049 | .526 | 1 | .468 | 1.036 |
| educ | -.082 | .070 | 1.385 | 1 | .239 | .921 |
| Male | .251 | .194 | 1.670 | 1 | .196 | 1.286 |
| Republican | .720 | .215 | 11.213 | 1 | .001 | 2.055 |
| Constant | .013 | .343 | .001 | 1 | .969 | 1.013 |

a. Variable(s) entered on step 1: BornAgain2, income, educ, Male, Republican.

The only statistics that you want to pay attention to in this part of the output are in the Sig. and Exp(B) columns. Here’s what the results say:

Of all the variables in the model, being a Republican is the only indicator that we can say with confidence has an influence on supporting torture, because it is the only one where the significance is less than .05; the other variables are not statistically significant. Holding the other variables constant, the model “predicts” that being a Republican roughly doubles the odds of believing that the torture of terrorism suspects is justified at least sometimes (Exp(B) = 2.055, an increase of about 105 percent).
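Because Exp(B) is a multiplier on the odds rather than a percentage, it can help to convert it explicitly. The lines below are a sketch of the standard arithmetic, using the B values from the Variables in the Equation table (small differences from the printed Exp(B) column come from B being rounded to three decimals).

$$
\mathrm{Exp}(B) = e^{B}, \qquad \text{percent change in odds} = \left(e^{B} - 1\right) \times 100
$$

$$
\text{Republican: } e^{0.720} \approx 2.05 \;\Rightarrow\; (2.05 - 1) \times 100 \approx +105\%
$$

$$
\text{educ: } e^{-0.082} \approx 0.921 \;\Rightarrow\; (0.921 - 1) \times 100 \approx -7.9\%
$$

An Exp(B) near 2 therefore means the odds roughly double, which is an increase of about 100 percent; reading it as a 200 percent increase is a common slip. An Exp(B) below 1 means the odds fall.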
If the education variable were significant (which it is not), we would interpret the results in the following way: “When all other variables are held constant, every one-unit increase in educational attainment on Pew’s seven-level index corresponds to an 8 percent reduction in the odds that a respondent believes that the torture of terrorism suspects is justified at least sometimes.”

CONCLUDING COMMENTS

We know that we have given you a lot of information in this document (keep in mind that you will probably only use a fraction of the methods covered, depending on what kinds of variables you use in your project). This isn’t supposed to make complete sense without further explanation. Instead, we are looking forward to working with each of you individually, and hope this material will get you off to a solid start.