Passenger model design report


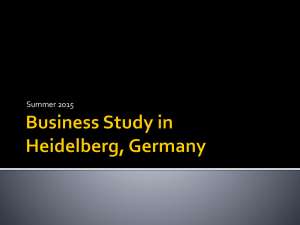

SEVENTH FRAMEWORK PROGRAMME
THEME [SST.2010.1.3-1.] [Transport modelling for policy impact assessments]

Grant agreement for: Coordination and support action
Acronym: Transtools 3
Full title: “Research and development of the European Transport Network Model – Transtools Version 3”
Proposal/Contract no.: MOVE/FP7/266182/TRANSTOOLS 3
Start date: 1st March 2011
Duration: 36 months

MS.51 - “Passenger model design report”

Document number: TT3_WP8_MS51_TECH_Passenger model design report_0a
Workpackage: WP8
Deliverable nature: N/A
Dissemination level: N/A
Lead beneficiary: KTH (3), Svante Berglund
Due date of deliverable: Sept. 2011
Date of preparation of deliverable: 11. Sept. 2011
Date of last change: 22. Sept. 2011
Date of approval by Commission: N/A

Abstract: This report describes the new passenger models that will be developed in Transtools 3. Two models will be developed: one short distance model for trips shorter than 100 km and one long distance model for trips longer than 100 km. The models will cover all relevant modes and trip purposes. The report discusses the availability of data and the limitations and possibilities that follow from it. We also outline the formulations of both models, paying special attention to non linear utility functions, which are of great importance for models at the geographical scale of Europe. A key input to travel demand comes from models of car ownership. To our surprise, no such model is currently available at the European level. Besides its crucial importance in models of travel demand, car ownership is subject to policy decisions at different levels. The rate of growth in car ownership in Europe, particularly in regions where the economy grows from low levels, has the potential to be a very important change in the European transport system.
Keywords: Short distance trips, Long distance trips, Travel demand models, ETIS+, Travel data, car ownership, Non linear utility functions
Author(s): [Algers, Staffan], [Berglund, Svante]

Disclaimer: The contents of this report reflect the views of the author and do not necessarily reflect the official views or policy of the European Union. The European Union is not liable for any use that may be made of the information contained in the report. The report is not an official deliverable under the TT3 project and has not been reviewed or approved by the Commission. The report is a working document of the Consortium.

MS51: Passenger model design report
Report version 0b
2011
By Svante Berglund, KTH.

Copyright: Reproduction of this publication in whole or in part must include the customary bibliographic citation, including author attribution, report title, etc.
Published by: Department of Transport, Bygningstorvet 116 Vest, DK-2800 Kgs. Lyngby, Denmark
Request report from: www.transport.dtu.dk

Content

Summary
1.1 Data requirements
1.2 Short distance model
1.3 Long distance model
2. Introduction
2.1 Objective of the deliverable
2.2 Methodology
3. Data requirements
3.1 Data to support estimation - long distance trips
3.2 Data to support base case application
3.3 Data to support estimation - short distance trips
3.4 Zoning system adjustment
3.5 Process ETIS+ (Rewrite wrt to what we will get from Otto concerning variables etc)
4. Modelling approach – some general issues
4.1 Car ownership
4.2 Cost sharing car driver/car passenger
4.3 Vehicle fleet composition
4.4 Work trip cost deductions
5. Short distance model
5.1 Scope
5.2 Calibration
6. Long distance models
6.1 Data
6.2 Segmentation by trip purpose
6.3 Seasonal variation of demand
6.4 Geographical segmentation
6.5 Non infrastructure network variables - Barriers and affinities
6.6 Trip duration
6.7 Trip generation modelling
6.8 Model for access and egress trips
7. Estimation procedures
7.1 Short distance trips
7.2 Long distance trips
Appendix 1: Using Box-Cox approximations to estimate nonlinear utility functions in discrete choice models
Background
Definition
Possible approximations
Using approximations in real applications
References
Appendix 2: Equivalence of Box-Cox and ‘Gamma’ functions

Summary

The scope of WP8 is to further develop and refine the passenger models from Transtools 2. The modelling work will consist of the development of two models: one for short distance trips below 100 km and one for long distance trips above 100 km. The scope of these two models is summarised below together with the data requirements. A previous version of this document has been discussed during meetings at DTU, and comments and suggestions have as far as possible been incorporated into the current document.
1.1 Data requirements

Model estimation will be separate for short distance trips and for long distance trips. For long distance trips, we will mainly rely on the DATELINE data source from 2000 for observed travel behaviour. To maintain consistency between dependent data (observed behaviour) and independent data (level of service (LOS) and land use (LU)), independent data representing the situation in year 2000 are needed for the estimation of the long distance models. Background data will be delivered by the ongoing ETIS+ project. For long distance trips, the following data are needed from ETIS+:

- LOS variables. LOS data consist of two components: network data (the infrastructure) and the traffic provided on the infrastructure, i.e. travel times, frequency and capacity (?).
- LU variables, i.e. variables describing the attraction of each zone as a destination for trips.

According to the ETIS+ and Transtools 3 DoWs, base matrices for short distance trips by mode and travel purpose will be established. These matrices can be used as aggregate demand data. For this, population data by category, LOS and LU data are needed for the base case year. This includes data for zone internal trips. These data will also support the base case application.

1.2 Short distance model

The scope is to establish a set of models by travel purpose containing mode, destination and frequency choice. The model should be able to respond to transport policy changes with respect to infrastructure and pricing as well as to background variables like land use, economic growth and changes in car ownership. Car ownership is a key variable in travel demand models, and care is needed in formulating it. Somewhat surprisingly, no forecasting model for car ownership of reasonable quality is available for Europe in the TREMOVE/SCENES framework.

The short distance model will use the following modes: car as driver, car as passenger and public transport. Slow modes (walk and bike) will be treated in a simplistic way.
The available zone size and the European scale are the reasons for the simplistic treatment of slow modes. The short distance model will cover the following trip types: commuting, business, private and holiday trips.

1.3 Long distance model

The long distance model will have a structure similar to that of the short distance model, but there will be some differences. The segmentation will differ, and most likely the utility functions will differ as well, since non linear utility functions will be important in the long distance model. For the long distance model, the DATELINE survey from 2000 will be an important source of observed travel behaviour.

The long distance model will use five modes: air, car as driver, car as passenger, bus and rail. Available trip purposes will be holiday, business and private trips. Commuting trips could either be present in both the long and the short distance model, or we could extend the range of commuting trips in the short distance model above 100 km. The final choice on this issue will be based on empirical and technical considerations.

2. Introduction

The scope of WP8 is to establish a set of passenger demand models by travel purpose containing mode, destination and frequency choice. The model should be able to respond to transport policy changes with respect to infrastructure and pricing as well as to changes in background variables like land use, investment schemes, economic growth and car ownership developments. The models produced by WP8 will be a central part of Transtools 3.

2.1 Objective of the deliverable

The model design report is the plan for the future work in WP8. We discuss important aspects of the passenger demand models that will be developed within the WP and identify possible problems that may be of importance for the future work.
Since the different WPs in Transtools are highly dependent on each other, the report also informs other WPs of what will be delivered and what input the system must provide to the passenger models. In the model design report we also raise some questions regarding supporting models (e.g. car ownership), the absence of important rules (tax deduction) and issues concerning model formulation. Some of these questions are of an empirical nature and will be resolved during the project, and some are a matter of resources.

2.2 Methodology

The model design report is the outcome of an iterative process between the staff of WP8 and other participants in the Transtools project. This report has been discussed during two expert meetings at DTU (spring 2011 and autumn 2011), and suggestions and comments have been included in the final version. During the process we have discussed different data sources and their merits and shortcomings. The passenger demand models will end up as one part of the Transtools framework, and an ongoing process is to create an understanding of how the pieces should fit together.

3. Data requirements

The data requirements need to serve two purposes: one is to support model estimation, and the other is to support the generation of a base year application.

3.1 Data to support estimation - long distance trips

Model estimation will be separate for short distance trips and for long distance trips, possibly with the exception of commute trips. For long distance trips, we will mainly rely on the DATELINE data source from 2000 for observed travel behaviour. DATELINE suffers from some known problems, e.g. with trip frequencies and poor coverage in parts of the EU. To overcome these problems we need to use additional data sources in the modelling process.
To maintain consistency between dependent data (observed behaviour) and independent data (level of service (LOS) and land use (LU)), independent data representing the situation in year 2000 are needed for the estimation of the long distance models. Background data will be delivered by the ongoing ETIS+ project. For long distance trip estimation, the following data are needed from ETIS+:

- LOS variables. LOS data consist of two components: network data (the infrastructure) and the traffic provided on the infrastructure, i.e. travel times, frequency and capacity (?).
- LU variables, i.e. variables describing the attraction of each zone as a destination for trips.

Network data from 2001 are of poor quality, and the quality has been enhanced since then. To improve model quality we will therefore use LOS data from 2005 to approximate the situation in 2001. This is a departure from our ambition of maintaining consistency. Despite the difference in time, the 2005 data are regarded as a better representation of the situation in year 2000 because of the quality improvement. LOS may change over time, but usually quite slowly, so we regard this as a minor problem compared to the data quality of the earlier LOS data.

Additional data sources (Sigal, and others)

3.2 Data to support base case application

For the base case application year, data on population are needed by category in addition to LOS and LU data. These will mainly be supplied by the ETIS+ project. Data on gross income would also be desirable, as would more disaggregate data on car ownership. The car ownership issue is discussed further in section 4.1.

3.3 Data to support estimation - short distance trips

According to the ETIS+ and Transtools 3 DoWs, base matrices by mode and travel purpose will be established. These matrices can be used as aggregate demand data. For this, population data by category, LOS and LU data are needed for the base case year. This includes data for zone internal trips. These data will also support the base case application.
3.4 Zoning system adjustment

The models are to be developed for the NUTS3 zone level. The current zones range in size from just over 10 000 inhabitants to more than 6 million inhabitants, and this range is problematic to cover in a model. In particular, congestion will be difficult to estimate correctly if the zones differ too much in size. As discussed at the kickoff meeting, it is highly desirable to modify the zoning system to make it more consistent across countries. This will be handled within WP 5, with the aim of having ETIS+ deliver data at the revised zone level. Work on zone design is ongoing, and the new zone system will have approximately 1600 zones.

3.5 Process ETIS+ (Rewrite wrt to what we will get from Otto concerning variables etc)

It was agreed at the model design meeting that it is desirable to receive the ETIS+ data successively as they become ready, rather than waiting until all data are ready.

4. Modelling approach – some general issues

In this section we discuss the formulation of fundamental input variables such as car ownership, car occupancy/cost sharing, vehicle fleet composition and work trip cost deduction. In contrast to simple data (such as the number of inhabitants), these variables are subject to a formulation process that depends on the modeller, and they are therefore open to discussion.

4.1 Car ownership

Information on car ownership is essential for modelling the car mode. How the information is used will depend on the type of information available. ETIS+ provides only an average number of cars for each zone. Passenger travel demand forecasting (including model estimation) requires assumptions or forecasts on car ownership levels.
Car ownership, or rather the vehicle stock, is included in the ETIS+ project, defined as follows:

“The data provided for the indicator ”vehicle stock” contains the numbers of different types of vehicles and is differentiated by the application number of "passenger cars", "buses", "goods road vehicles", "motorcycles", "special vehicles", "total utility vehicles", "road tractors", "trailers" and "semitrailers" in the respective region (NUTS3).”

The current DoW does not, however, explicitly specify a car ownership model, and Transtools 3 forecasts would therefore have to rely on external data sources for car ownership projections. Another issue is the vehicle fleet composition, which will have an impact on emissions as well as on car running costs. For Transtools 3 to be able to analyse policies in terms of CO2 and to take account of car ownership changes caused by policies or general economic growth, a consistent approach to car ownership and vehicle fleet composition is needed.

The TREMOVE model is designed to consider car ownership effects and vehicle fleet composition. When considering the use of TREMOVE models in Transtools 3, it appears that the treatment of car ownership is quite a weak point. TREMOVE applies a “scrap and sales” cohort model for the vehicle fleet composition, in which the car ownership level is an input that is needed to define the number of new cars purchased in each year. This number is defined as follows (quote from the TREMOVE final report p 56):

“2. NEW SALES From the demand module, we know the needed vehicle-km in year t. This is converted into the number of vehicles needed to perform these, based on the average mileage of the vehicle category (cars). This average mileage is calculated based on historic fleet and transport volume statistics. This number of vehicles is the desired stock. The difference between desired stock and surviving stock are the sales of new vehicles (cars) in year t.”

The assumption of a fixed mileage per car is of course a strong one. Another problem is that the forecast total mileage (to be divided by the average mileage per car) is taken from the SCENES model applied in the TREMOVE system. In the TREMOVE final report (p 222) the following is said:

“The reference scenario in the TREMOVE demand module – called the baseline - is based on output of the European transport model SCENES. The run that has been used, is the P-scenario from the ASSESS project, October 2005.”

Looking at the ASSESS documentation (Final report, Annex VI p 19), it appears that car ownership is an input to the SCENES model:

“The passenger demand model also requires a forecast car ownership per 1000 head in each of the forecast years 2010 and 2020, for each EU25 country. These are based on national forecasts collected by WSP during the TREMOVE 2 project in 2003 (TML, 2005; for car ownership data see table below). Car stock forecast is built up based on data from Tremove at the country level. By using the Eurostat Year 2000 car stock data and Tremove year 2020 car stock projections, year 2010 data is estimated using linear interpolation.”

So, neither TREMOVE nor SCENES models car ownership. Consequently, Transtools 3 cannot rely on these models to obtain car ownership forecasts. The treatment of car ownership as an exogenous input is also a very weak part of the vehicle fleet composition model in the TREMOVE system. It is therefore very desirable to find a better solution to this problem, be it in the TREMOVE system or in the Transtools system.

In the final TREMOVE report different system improvements are suggested (although car ownership is not mentioned as one of these).
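The arithmetic behind the two quoted procedures can be made concrete with a short sketch: the TREMOVE “new sales” rule (desired stock minus surviving stock) and the linear interpolation of car stock between 2000 and 2020 described in the ASSESS documentation. The function names and all numbers are illustrative assumptions, not values from either report, and flooring sales at zero is our own simplification.

```python
def desired_stock(vehicle_km: float, avg_mileage_km: float) -> float:
    """Vehicles needed to perform the forecast vehicle-km at a fixed mileage per car."""
    return vehicle_km / avg_mileage_km


def new_sales(vehicle_km: float, avg_mileage_km: float, surviving_stock: float) -> float:
    """Sales in year t = desired stock - surviving stock.

    Flooring at zero is an assumption of this sketch, not part of the quoted rule.
    """
    return max(desired_stock(vehicle_km, avg_mileage_km) - surviving_stock, 0.0)


def interpolate_stock(stock_2000: float, stock_2020: float, year: int) -> float:
    """Linear interpolation between the year 2000 and year 2020 car stock."""
    share = (year - 2000) / (2020 - 2000)
    return stock_2000 + share * (stock_2020 - stock_2000)


# Hypothetical country: 12 billion vehicle-km, 12 000 km per car and year,
# 930 000 cars surviving from the previous year.
sales = new_sales(12_000_000_000, 12_000, 930_000)

# Hypothetical car ownership per 1000 head in 2000 and 2020, interpolated to 2010.
stock_2010 = interpolate_stock(400.0, 500.0, 2010)
```

Because the mileage per car is fixed, any change in forecast vehicle-km translates proportionally into desired stock, which is why car ownership in this chain is effectively an input rather than a modelled quantity.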
In Annex F (Possible Approaches To Revise The Demand Module In Tremove) to the Final report, the following option is outlined as an alternative to revising the TREMOVE demand module:

“Given that both TRANS-TOOLS and TREMOVE operate at the EU scale and have been developed on behalf of the Commission, it might be sensible to think of a possible integration of the two models. Thus, instead of developing a new simplified demand generation module in TREMOVE it could be chosen to use the capability of TRANS-TOOLS to model transport demand in detail and integrate in its structure modules from TREMOVE. Namely, three modules from TREMOVE could be integrated within the TRANS-TOOLS model:
- the vehicle stock module;
- the fuel consumption and emission module;
- the welfare module
In this perspective, the existing TRANS-TOOLS modules would be used to simulate transport demand in detail (network level) while the specific features of the TREMOVE model – fleet development, emissions, welfare computation - would continue to live within TRANS-TOOLS improving its capabilities. Their development could continue in terms of “stand alone” modules (e.g. adding new pollutants, improving vehicle choice algorithm, making scrapping rates dependent on energy price, etc.) while, at the same time, gets the benefits of other developments of TRANS-TOOLS.”

Given the strong interdependence between passenger travel demand, car ownership and vehicle fleet composition, it seems obvious that this should be handled within the Transtools framework. Developing the necessary models and integrating the TREMOVE vehicle fleet composition model in Transtools is, however, a major task that is currently not included in the Transtools budget. Besides its crucial importance in models of travel demand, car ownership is subject to policy decisions at different levels.
The rate of growth in car ownership in Europe, particularly in regions where the economy grows from low levels, has the potential to be a very important change in the European transport system.

The ASTRA model does contain a mechanism for car ownership forecasting at the NUTS 2 level. In the ASTRA model, the ENV sub model generates the total car stock. This is done in the following way (ASTRA Deliverable D4 p 114 ff):

“[i] Purchase Model for Development of Passenger Car Vehicle Fleet
Actually it is not the whole vehicle fleet that is calculated by the model but it is the changes of the fleet between two time-steps that are caused by endogenous influences like personal income, population density and exogenous influences like fuel price. In the past growing disposable personal income was the major source for the increase of the car vehicle fleet. While the increase in density has an counteractive effect. For regions with higher population the vehicle fleet is smaller than for low densely populated regions with the same population. This leads to the following basic equation:

VF = el_Inc * INC + el_PD * PD + el_FP * FP (eq. 9)

where:
VF = change of vehicle fleet
el_Inc = fleet elasticity for income changes (>0)
INC = change of income
el_PD = fleet elasticity for changes of population density (<0)
PD = change of population density
el_FP = fleet elasticity for fuel price changes (<0)
FP = change of fuel price”

The elasticities used are presented as follows (ASTRA D4 Annex A p 60):

12.4.9 Parameters for the Car Vehicle Fleet Model
The following table presents the elasticities that are applied in the vehicle fleet model explained in chapter 6.4.3.1 of ASTRA D4. In the rows 2-3 the suggestions of Johansson, Olof; Schipper, Lee (1997): “Measuring the Long-Run Fuel Demand of Cars”, in: Journal of Transport Economics and Policy, Sep. 1997, are shown. However, currently the best fit for the four macro regions is reached with the optimised values of row 5-8.

Table 73: Elasticities for the Car Vehicle Fleet Model

The REM submodel then distributes the stock over different subgroups (ASTRA D4 Annex A p 19 ff), which are as follows:

“In each functional zone in the passenger model the population is segmented into 4 groups based on age and economic position. The groups are:
- Population under 16 (P1) - All persons under 16 years.
- Population 16-64 Employed (P2) - This category includes all persons in full time and part time employment.
- Population 16-64 Not in Employment (P3) - This category includes all persons between 16 and 64 who are either unemployed or economically inactive.
- Population over 64 (P4) - All persons over 64 years old.
At the same time the population of each functional zone is also segmented into three car availability categories.
- No car (C0) - Persons in households with no car
- Part car (C1) - Persons in 2+ households with only one car i.e. part car
- Full car (C2) - Persons in 1 adult households with 1+ cars and persons in 2+ adult households which have 2+ cars,”

The ENV sub model also includes a mechanism that distributes the stock by vehicle type to reflect differences in fuel consumption and emissions. Using the ASTRA capability would, however, be quite awkward when running the Transtools system. The level of ambition in treating the car ownership issue in Transtools has to be discussed further with the Commission.

4.2 Cost sharing car driver/car passenger

To specify the travel cost by car correctly, we need to handle car occupancy. A simple way is to divide the cost by the average car occupancy by trip type. Dividing the cost by car occupancy could be overly simplistic, since sharing a car does not necessarily mean sharing the costs. There is also a dependency between trip length and car occupancy: longer trips are more likely to be made in groups, which will interact with a cost damping function.
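The simple occupancy rule and its limitation can be illustrated with a small sketch. The `shared_fraction` parameter is a hypothetical device introduced here only to show how partial cost sharing changes the cost perceived by driver and passenger; it is not a formulation proposed in this report, and the numbers are invented.

```python
def cost_per_traveller(trip_cost: float, occupancy: float, shared_fraction: float = 1.0):
    """Split a car trip cost between the driver and each passenger.

    shared_fraction = 1.0 reproduces the simple rule (cost / occupancy for everyone);
    shared_fraction = 0.0 means the driver pays everything.
    Returns (driver_cost, passenger_cost).
    """
    if occupancy < 1.0:
        raise ValueError("occupancy must be at least 1")
    if not 0.0 <= shared_fraction <= 1.0:
        raise ValueError("shared_fraction must lie between 0 and 1")
    passenger_cost = shared_fraction * trip_cost / occupancy
    driver_cost = (1.0 - shared_fraction) * trip_cost + passenger_cost
    return driver_cost, passenger_cost


# Hypothetical trip costing 30 EUR with an average occupancy of 1.5 persons.
full_sharing = cost_per_traveller(30.0, 1.5)       # simple rule: equal split
no_sharing = cost_per_traveller(30.0, 1.5, 0.0)    # driver bears the whole cost
```

Whatever the value of `shared_fraction`, the driver cost plus `(occupancy - 1)` times the passenger cost adds up to the trip cost, so the sketch only redistributes the cost between traveller types.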
For long distance trips we will consider segmentation on party size, which will be assumed to be exogenous to mode choice.

Short trips, long distance trips

4.3 Vehicle fleet composition

To make the LOS data used for estimation compatible with the application data, we need to apply the same procedure in estimation as in application, i.e. a fuel cost based on the fractions of car types with corresponding fuel consumption rates and fuel prices. In previous Transtools versions, country specific fuel prices were used because fuel taxation rules differ between countries. Fuel costs were calculated per link, and the cost for each link was based on the fuel cost of the country the link falls within. It is important to separate the tax fraction from the raw fuel cost. If taxation differs from country to country, the relative effect of increased taxation will depend on the current level of taxation.

4.4 Work trip cost deductions

Several countries apply tax deduction schemes for commute trips. According to a German study, this is the case in Belgium, Denmark, Finland, France, Germany, the Netherlands, Norway, Sweden and Switzerland. In some cases there are special rules for commuting across borders. These schemes differ and will have different impacts on trip distance and mode choice. This has to be considered in order not to create bias in parameter estimation, and to allow for policy assessment. To avoid bias in parameter estimation, the costs used in the estimation phase must be calculated taking tax deduction into account. Depending on the formulation of the tax deduction scheme, it may have several implications for other policies that will be assessed using Transtools. Monetary policies such as taxes, tolls and fees could be less effective if tax deduction is allowed. Evaluation of pricing schemes will be erroneous if tax deduction is not properly taken into consideration.
The schemes are more or less complicated, and some simplified way of handling this issue may be needed.

5. Short distance model

5.1 Scope

The scope is to establish a set of models by travel purpose containing mode, destination and frequency choice. The model should be able to respond to transport policy changes with respect to infrastructure and pricing as well as to background variables like land use, economic growth and car ownership developments. It was noted during the meeting that car ownership is a difficult question in a model that covers areas with large income differences. Since car ownership is a key variable in travel demand models, care is needed in formulating it. Licence holding is another variable that is often present in travel demand models, together with car ownership, for calculating car competition.

Commuting may take place over trip lengths of more than 100 km. This may conflict with long distance trips if the work purpose is defined in both categories. One option is to allow for longer work trips in the short distance model and relax the upper limit of 100 km for that travel purpose. Estimating a separate model for work trips in the long distance model would suffer from a low number of observed trips (about 300 in DATELINE). Long commuting trips are typically less frequent than shorter ones. There are different options for reflecting different behaviour with respect to commuting distance. One is to introduce a choice between long and short commutes, where the threshold between the two can be lower than the 100 km criterion. Another option is to use a nonlinear utility function that will be more sensitive to the relatively few long distance commutes.

The definition of a commuting trip is not clear cut. Long distance commuting is often done on a weekly basis, where the commuter combines an apartment close to work with a house further away.
The long commuting trip (Monday and Friday) will thus not be that burdensome, and this will show up in a non linear utility function in the cost dimension. In practice the long commute in the example could or should be defined as a leisure trip instead of a commuting trip.

5.1.1 Assumed data availability:

- A tour matrix (T) divided by mode (m) and purpose (p) from i to j on a day basis. In other words, the matrix represents both the out-bound and the home-bound trip (i.e. a generation-attraction tour).
- Level of service matrix (LOS) by mode (m), purpose (p) and type of time component (k)
- Monetary transport costs (TC) by mode (m) and purpose (p)
- Car ownership (CO) and, if possible, licence holding by origin zone
- Land use variable vector (LU) by destination
- Socioeconomic variable vector (SE) by origin

i.e. $T^{p}_{ijm}$, $LOS^{p}_{ijmk}$, $TC^{p}_{ijm}$, $CO_i$, $LU_j$, $SE_i$

Some data could be difficult to obtain, and estimates of LOS variables will probably be necessary. One approach that could be used is to estimate transit speed, e.g. with regard to the presence of a metro.

5.1.2 Modelling approach (Update wrt no slow modes and accommodating of different income levels):

We start by taking a parsimonious approach, trying to avoid additional assumptions as much as possible. Further discussion may modify the approach. We then specify a purpose specific model, assuming a nested logit structure where destination is at the bottom, mode in the middle and frequency at the top. This can of course be tested and changed if necessary, but will (hopefully) serve as a base for initial model estimations.
The utility functions at the different levels are specified below (Greek letters are parameters to be estimated, C denotes a country or country group and P a trip purpose):

Destination:

$$V^{CP}_{ijm} = \beta^{GTC}_{m} \cdot f\left(TC^{P}_{ijm}, GC^{CP}_{ijm}, w^{CP}_{k}\right) + \gamma^{CP}_{SL} \cdot Border^{SL}_{ij} + \gamma^{CP}_{OL} \cdot Border^{OL}_{ij} + \delta^{CP} \cdot Size^{CP}_{j}$$

Mode:

$$V^{CP}_{im} = ASC^{CP}_{m} + \theta^{CP}_{m} \cdot Logsum\_dest^{CP}_{im} + a^{CP}_{m} \cdot CO_{i}$$

Frequency:

$$V^{CP}_{i,tour} = Tour\_const^{CP} + \sum_{s=1}^{S} \sigma^{CP}_{s} \cdot SE^{P}_{is} + \theta^{CP}_{m} \cdot Logsum\_mode^{CP}_{im}$$

The value of time is represented by $w^{CP}_{k}$, and the representation of time and cost (in the $f$-function) may be specified in different flavours. If represented in monetary terms the model would be

$$f\left(TC^{P}_{ijm}, GC^{CP}_{ijm}, w^{CP}_{k}\right) = TC^{P}_{ijm} + \sum_{k=1}^{K} w^{CP}_{k} \cdot LOS^{CP}_{ijmk}$$

If formulated in time units it would be

$$f\left(TC^{P}_{ijm}, GC^{CP}_{ijm}, w^{CP}_{k}\right) = TC^{P}_{ijm} / w^{CP}_{k} + \sum_{k=1}^{K} q^{CP}_{k} \cdot LOS^{CP}_{ijmk}$$

where $q^{CP}_{k}$ is an internal weighting of the different time components (taking account of the fact that congestion time may be weighted higher than free-flow time).

From an estimation perspective the two approaches are not different in a linear specification. Implementation-wise, however, they are different, as an increase in $w^{CP}_{k}$ will affect the forecast in different ways. The two forms above are two extremes; the well-known Train and McFadden paper discusses the intermediate models and suggests that the time-unit model is the more likely (based on a very small-scale estimation). TT2 also applied the second version in the implementation but the first in the estimation.

$Size^{CP}_{j}$ is a composite destination size measure, defined as

$$Size^{CP}_{j} = \sum_{t=1}^{T} \exp\left(\tau^{CP}_{t}\right) \cdot LU_{tj}$$

The data will facilitate estimation of an aggregate model. The dependent data will be market shares corresponding to the ETIS+ data, associated with weights also given by the ETIS+ data. The shares are found in the tour matrix elements fulfilling the conditions that the car distance is less than 100 km or that they represent intra-zone traffic.
As many zones will be relatively large, the only clear stratification is when both of these conditions apply. We realize that this may be rather crude for a number of matrix elements, but ignore this as a first approximation. The market shares for trip makers are defined as

$$P^{P}_{ijm} = \frac{T^{P}_{ijm}}{Pop_{i}}$$

and for non trip makers as

$$P^{P}_{f=0} = 1 - \frac{\sum_{j,m} T^{P}_{ijm}}{Pop_{i}}$$

where $Pop_{i}$ is the population in the relevant category. The weights will be the population numbers in each origin (possibly for a given category).

This approach will enable estimation of the short distance model making the sole assumption of the $w^{CP}_{k}$ factors corresponding to values of time for the various travel time components. These may be obtained from HEATCO, by making some additional assumptions about the value of riding time for countries not included in the HEATCO work, and by compiling information on access/egress time and headway weights from other sources (such as the Wardman meta studies etc). The choice between different sources of values of travel time components has been discussed during project meetings. The HEATCO values were questioned during our meetings, and the alternatives suggested were to use values derived by proportionality to the wage rate or to a PPP index (Purchasing Power Parity index). This proportionality to the wage rate or PPP index can be derived by taking value of time studies of known good quality (preferably more than one) and computing conversion factors. ITS will look at this problem in more detail before a final decision is taken.

The model can be estimated simultaneously for the mode, destination and frequency choices. It can also allow for different scales for different countries or country groups, by separate estimation or by scale factors.

The Transtools 2 model used x modes (car as driver, car as passenger and public transport). Slow modes were not covered by Transtools 2; with regard to the zone size this is a reasonable solution.
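The two generalised cost formulations and the market share definitions given earlier in this section can be illustrated with a small numerical sketch. It is not the project's implementation: the function names, the toy numbers and in particular the single reference value of time `w_ref` (used to convert money to time, with `q_k = w_k / w_ref`) are assumptions made for illustration only.

```python
import numpy as np


def generalised_cost_money(tc, los, w):
    """Money-unit form: TC + sum_k w_k * LOS_k (w_k in money per minute)."""
    return tc + los @ w


def generalised_cost_time(tc, los, w, w_ref):
    """Time-unit form: TC / w_ref + sum_k q_k * LOS_k.

    Assumption: q_k = w_k / w_ref, i.e. the time weights are the values of
    time expressed relative to one reference value of time.
    """
    q = w / w_ref
    return tc / w_ref + los @ q


def market_shares(tours, pop):
    """tours[i, j, m] and pop[i] -> (share[i, j, m], no_trip_share[i])."""
    shares = tours / pop[:, None, None]
    no_trip = 1.0 - shares.sum(axis=(1, 2))
    return shares, no_trip


# Toy example (hypothetical numbers): one O-D pair and mode,
# two time components (in-vehicle time, wait time) in minutes.
tc = 2.0                      # monetary cost
los = np.array([20.0, 5.0])   # minutes per time component
w = np.array([0.10, 0.20])    # money per minute for each component
w_ref = 0.10                  # assumed reference value of time

gc_money = generalised_cost_money(tc, los, w)        # money units
gc_time = generalised_cost_time(tc, los, w, w_ref)   # minutes

# Two origins, two destinations, two modes (tours per day).
tours = np.array([[[100.0, 50.0], [30.0, 20.0]],
                  [[40.0, 10.0], [25.0, 25.0]]])
pop = np.array([1000.0, 500.0])
shares, no_trip = market_shares(tours, pop)
```

With these definitions the two cost forms differ only by the constant factor `w_ref`, which is why they cannot be told apart in a linear estimation; they behave differently in forecasting only once the value of time is allowed to change.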
Slow modes will most likely not be a frequent choice for trips between zones in a model with the current zone size. For trips within zones slow modes are important, but intra zone trips will not be assigned to the network in the standard way. Still, we think it is important to model intra zone trips in a reasonably realistic way. For short distance trips, intra zone trips will be the vast majority of trips, and it is important to be able to calculate realistic trip rates regardless of zone size. Congestion will depend on trips with origin and destination within the same zone, and in order to compute the number of cars that contribute to congestion we think it is necessary to do some simplified mode split for intra zone trips, including slow modes. It is also important to have a reasonable relation between the size of the working population/number of work places and the number of generated work trips per zone, perhaps in the generation step. Let the generation step be a choice between three alternatives: trip outside the zone, trip within the zone, no trip. In the national Swedish transport model, generation is just the logsum from lower levels, which is an alternative if zones are of reasonably similar size.

5.2 Calibration

This approach will also facilitate a simple calibration procedure, by adding as many calibration parameters as wanted to the utility functions. The calibration will then simply be an additional estimation on the same data, keeping the previously estimated parameters fixed at their estimated values and estimating only the calibration parameters.

Models will be estimated for four trip purposes:

- Commuting
- Private trips
- Holiday trips
- Business trips

6. Long distance models

The long distance models will have a similar structure as the short distance model but there will be some differences that will be discussed in this section.
In this section we take a look at data in the Dateline survey and try to provide a background for a discussion on the following issues:

- Segmentation with regard to journey duration and geography
- Introduction of variables for barriers and affinities
- Attraction variables
- Use of seasonal dummy variables

6.1 Data

The main data that will be used is the Dateline survey from 2001. There may be questions related to this data source (Kay Axhausen). We will check (Jeppe) with Kay what these problems may be, and whether there exists a "cleaned" or pre-processed version of the data. We will also look at previous model work (Hackney). To the extent it is possible to add other, more recent data sources, this will be done. Data sources for the Danish National model as well as the UK may be used. DTU will provide data related to Denmark for further inspection.

In Table 1 we give an overview of the data in the Dateline survey. The total number of observations in the data is sufficient for model estimation, but as we will see below segmentation must be done with some care. Of practical interest for the project are journeys and trips; excursions will not be modelled. The number of commuting trips in the data is of limited use.

Table 1. Basic information about the Dateline survey.

| Number of journeys | Number of trips | Number of excursions | Commuting |
|---|---|---|---|
| 97 195 (60% domestic) | 131 841 | 4 029 | 477 |

6.2 Segmentation by trip purpose

In the frequency table below we can see the number of journeys by purpose, which sets a limit on segmentation. About 55 % of all journeys in the material are holiday trips, another 35 % are other private purposes and 10 % are business or work trips. The number of observations can be seen in the table below.

Table 2. Journey by purpose in the Dateline survey.
| Purpose | Frequency | Percent |
|---|---|---|
| Holiday | 53316 | 54.9 |
| Other | 1068 | 1.1 |
| Short holiday | 1913 | 2.0 |
| Visiting relatives/friends | 5988 | 6.2 |
| Leisure general | 5053 | 5.2 |
| Business/Work | 9783 | 10.1 |
| None | 5497 | 5.7 |
| Other | 2757 | 2.8 |
| Total | 85375 | 87.8 |
| Missing (System) | 11820 | 12.2 |
| Total N of observations | 97195 | 100.0 |

About 55% of the journeys in the Dateline survey were holidays. This calls for a rich description of attraction variables related to holidays. Traditional "mass variables" such as number of jobs or inhabitants cannot explain the travel patterns of holidays, where the purpose of the trip could be to go to a place with a nice climate. Suggestions:

1. Winter holiday area. In the data numerous trips go to small villages in the Alps. Without a special attraction variable it is impossible to understand why someone goes to small places like these.
2. Summer holiday area, see pt. 1 above
3. Temperature
4. Price level (in 2001)
5. Cultural heritage

One available variable that could be used as an attraction is the number of beds in hotels per zone. An argument against this is that the number of beds is a response to demand rather than a pure attraction variable. An argument for using beds as an attraction variable is that the number of beds is a proxy for the attraction of the zone and can be regarded as exogenous from the traveller's point of view.

6.3 Seasonal variation of demand

Distribution of trips over the year is one issue that was discussed within the project group. Different destinations will attract trips on a seasonal basis. In areas with a large tourism sector, utilisation of the transport system will differ depending on the season. The same could be true for the level of service in the public transport system, where "seasonal peak hour traffic" could occur. It will however be computationally time consuming to do assignments for different seasons in addition to the assignments for different times of day. Assignment in the long distance model will be done for an average weekday (7 days).
Figure 1. Trip distribution over the year. Source: Dateline. [Chart: number of trips per month, January to December, by purpose (Other, Holiday, Short holiday, Visiting relatives/friends, Leisure, Business); vertical axis 0 to 16000.]

Seasonal variation is limited for all purposes except holiday trips.

6.4 Geographical segmentation

Geographical segmentation by region could be considered, e.g. southern Europe, northern Europe ... Table 3 below shows the number of observations by country and gives some indication of which segments it could be possible to use. The number of observations per country differs considerably, which indicates that any segmentation by country must be made by groups of countries.

Table 3. Number of observations by country of departure and type of journey. Source: Dateline.

| Country code of departure | Business | Holiday | Private | Total |
|---|---|---|---|---|
| (blank) | 835 | 2095 | 3279 | 6209 |
| AS | 0 | 1 | 0 | 1 |
| AU | 205 | 948 | 348 | 1501 |
| BE | 438 | 2134 | 872 | 3444 |
| BO | 0 | 1 | 0 | 1 |
| BR | 0 | 1 | 0 | 1 |
| CE | 0 | 0 | 1 | 1 |
| DA | 178 | 1417 | 508 | 2103 |
| EI | 75 | 326 | 158 | 559 |
| EZ | 0 | 0 | 1 | 1 |
| FI | 290 | 1027 | 797 | 2114 |
| FR | 2188 | 10036 | 4347 | 16571 |
| GM | 2380 | 9669 | 5094 | 17143 |
| GR | 358 | 2273 | 1493 | 4124 |
| HU | 0 | 1 | 1 | 2 |
| ID | 0 | 1 | 1 | 2 |
| IS | 0 | 1 | 0 | 1 |
| IT | 992 | 3328 | 1428 | 5748 |
| LU | 18 | 232 | 56 | 306 |
| MN | 0 | 2 | 1 | 3 |
| NG | 0 | 1 | 0 | 1 |
| NL | 717 | 3363 | 1371 | 5451 |
| PL | 0 | 3 | 0 | 3 |
| PO | 372 | 1852 | 1401 | 3625 |
| SP | 1144 | 10944 | 3449 | 15537 |
| SW | 511 | 1230 | 912 | 2653 |
| SZ | 91 | 749 | 189 | 1029 |
| UK | 1329 | 5039 | 2683 | 9051 |
| US | 1 | 6 | 1 | 8 |
| VE | 0 | 1 | 1 | 2 |
| Total | 12122 | 56681 | 28392 | 97195 |

Dateline contains no information about the organizational form of the journey, e.g. charter, but contains information on the number of non household members participating in the journey.

6.5 Non infrastructure network variables - Barriers and affinities

Different types of barriers and affinities could be considered in a modelling process, keeping in mind that supply data are probably correlated with this kind of phenomenon. In order not to end up in an ad hoc search for variables to enhance goodness of fit, we rely on variables that have been used successfully in previous studies.
Examples of barriers and affinities that could be considered are: national borders, language (common, similar or different), difference in costs, cultural similarities/differences.

6.6 Trip duration

Segmentation on trip duration has turned out to be useful in the Swedish long distance model recently estimated by Algers (2011). In the Swedish study the following segments (expressed in number of nights away) were used: 0, 1-2, 3-5, 6+. Main effects related to number of nights away from home:

- Decreasing travel time sensitivity with regard to nights away
- Decreasing importance of first wait time
- Decreasing importance of travel cost
- Increasing importance of summer house areas
- Increasing importance of attraction variables associated with winter sports

Table 4. Trip duration (nights away) by type of journey in the Dateline survey.

| Duration | Business | Holiday | Private | Total |
|---|---|---|---|---|
| 0 | 4712 | 2331 | 7498 | 14541 |
| 1 | 1545 | 0 | 2912 | 4457 |
| 2 | 1041 | 1 | 4834 | 5876 |
| 3 | 628 | 0 | 2985 | 3613 |
| 4 | 533 | 5712 | 532 | 6777 |
| 5 | 268 | 4078 | 252 | 4598 |
| 6 | 144 | 4074 | 111 | 4329 |
| 7 | 106 | 8691 | 187 | 8984 |
| 8 | 340 | 24446 | 492 | 25278 |
| Total | 9317 | 49333 | 19803 | 78453 |

The number of observations for business trips limits the number of segments with regard to trip duration. The number of observations by duration for holiday journeys raises some questions. The difference between a holiday journey and a private journey is not very clear, which could be a problem. During our meeting it was suggested that holiday and private trips could be merged and then segmented by duration.

6.7 Trip generation modelling

Commuting could be one trip purpose in the long distance model (see discussion above). An important issue in long distance commuting is the frequency model. Frequency will depend on distance (time), and we will need some model to estimate the development of long distance commuting over time.

6.7.1 Intra zone level of service

Intra zone traffic flows need to be modelled.
One approach to obtaining intra zone travel costs is to use half the cost of travel to neighbouring zones (cost * 0.5) as an estimate. The estimate of intra zone traffic can then be used to increase volumes on the network in order to obtain realistic levels of congestion.

6.8 Model for access and egress trips

A long distance model will need support from a model of access and egress trips to the terminal of the main mode of the trip. Most likely there will be a need to formulate different models for access and egress trips respectively, since the available modes will differ between the two trip types. Access and egress trips will be included in the assignment routine, and the work in WP8 will concern formulation of a utility function for these trips. As for the long distance model we will depend on Dateline, but will also consider using national travel surveys with more detailed descriptions of long distance trips and terminal trips.

7. Estimation procedures

7.1 Short distance trips

For short distance trips, estimation is reasonably straightforward. Software capable of estimating aggregate nested models as well as simultaneously estimating composite size variables is sufficient for this task. WP8 staff have considerable experience of using the Alogit software, which meets these requirements. The Alogit developer is also participating in the project, guaranteeing the best possible support.

Depending on how commuting will be defined with regard to the upper limit of trip length, it might be necessary to estimate nonlinear utility functions for short distance trips (cost damping). If commuting will only be present in the short distance model, it will be necessary to use a different cut off value for these trips in the short distance model, and consequently we must consider nonlinear utility functions. In the next section on long distance trips, different alternatives for estimating nonlinear utility functions will be discussed in detail.
The reasoning for long distance trips also applies to short distance trips. Whether or not nonlinear utility functions will be used is an empirical question; nonlinear functions could also be tested for the short distance trip purposes that will be limited to < 100 km.

7.2 Long distance trips

For long distance trips, software capable of estimating nested models as well as simultaneously estimating composite size variables for disaggregate data is also needed. An additional requirement is the ability to estimate nonlinear utility functions. This can be done in different ways, having different implications for resources needed and types of results obtained. The following approaches can be defined:

- Piecewise linear functions
- Grid search procedures
- Iterative search procedures
- Direct estimation of form parameters (for example Box-Cox transformations)
- Direct estimation of form parameter approximations

7.2.1 Piecewise linear functions

The possibility of defining nonlinearities by using piecewise linear functions is as old as the MNL model. It has the advantage that it is simple, but the drawback of having to specify the intervals. The approach also consumes one degree of freedom for each segment. A version of this approach is to use information on the relative effects of the different segments to define a transformation of a variable, and to estimate only one parameter for the piecewise transform. In the Swedish national model Sampers this approach has been used for the headway variable, for which information on the relative weights from a Stated Choice experiment for a set of headway intervals has been used to define one single variable which is then piecewise nonlinear.

7.2.2 Grid search procedures

Another possibility is to define a continuous nonlinear transform, such as the Box-Cox transformation.
Then a set of models can be estimated for a corresponding set of transformation parameters, and the optimal transformation parameter can be identified by comparing log likelihood values. An advantage of this approach is that continuous nonlinear transforms are estimated, but the obvious drawback is that a large number of runs is required, specifically if there are several nonlinear variables. The procedure will give an estimate of the transformation parameter, but not of its standard deviation, which would also be useful to have. This approach has been partly used in the recent Swedish long distance model research project.

7.2.3 Iterative search procedures

Instead of the grid search procedure, in which the result of one model run is not used as input to another run, a procedure can be used that takes the outcome of a specific run to guess a better form parameter for the next run. In the recent Swedish long distance model research project, such a procedure was implemented in the R environment using Alogit as a sub process.

7.2.4 Direct estimation of form parameters

Estimation of Box-Cox form parameters implies utility functions that are nonlinear in the parameters, which are therefore more difficult to estimate. Dedicated software like Trio (Gaudry) and Biogeme (Bierlaire) has been developed for this task. While these tools solve the problem of direct estimation of form parameters, other capabilities, like allowing for choices at different levels or composite size variables, have been lacking. The added complexity has also implied longer run times. This approach (using Biogeme 1.8) was tested in the context of the recent Swedish long distance model research project but was abandoned because of run times.

7.2.5 Direct estimation of form parameter approximations

In a recent project for estimating long distance models for the UK, approximations of form parameters have been directly estimated.
The approximation is defined by combining a logarithmic and a linear term for the nonlinear variable, and the resulting nonlinear variable is obtained by adding the two together. The approach has been demonstrated to work well in the UK case. It was also successfully tested in the recent Swedish long distance model research project, in which the approach was generalised to include negative form parameters as well (which cannot be estimated using log and linear combinations). It must however be noted that the transformation parameter may have to be constrained to the unit interval, at least for some parameters. This generalisation and some Swedish experience are included in the appendix.

7.2.6 Choice of approach

The approximation procedure may seem quite appealing. A possible drawback is that the form parameter is not directly identified. Some tests (see appendix) suggest that the form parameter can be retrieved from the approximation parameters, but probably without information on the standard deviation. The retrieved parameter may however be used as a starting value in a direct form estimation, thereby significantly reducing the run times.

There is also an ongoing software development process "out there". A new version of Biogeme has recently been launched, allowing users to compose their own likelihood function in Python, giving additional flexibility and much faster performance. This version has not been tested in the recent Swedish long distance model research project.

It is suggested that the approximation procedure is adopted in the Transtools project.

Appendix 1: Using Box-Cox approximations to estimate nonlinear utility functions in discrete choice models

By Staffan Algers

Background

Discrete choice models are usually estimated using linear utility functions. Exceptions are models with nested alternatives, and models using composite size variables which imply nonlinearity along certain dimensions.
But for central variables like cost and time components, a linear specification is usually adopted. For quite some time it has however been argued that the linear specification should be extended to allow for nonlinearities. Gaudry (2011) contains an overview and a compilation of research efforts in this field. Recently attention has been given to this issue in the UK (the Cost Dampening project) and in Sweden (High speed rail modeling project). Allowing for nonlinearities is also a prerequisite for the models in the Transtools 3 project, which is the main reason for this paper.

Estimation of models with nonlinear utility functions obviously involves nonlinearities, which may make the estimation more computationally burdensome. Nonlinearities, to the extent that they have been introduced, have often been formulated as piecewise linear functions, or as predefined transformations (like the logarithmic form or the square root). In this way functions involving nonlinear parameters have been avoided, but at the price of not actually estimating the nonlinearity, only testing specific nonlinear forms.

A more general nonlinear formulation is the Box-Cox transformation, which allows a continuous transformation as a function of a transformation parameter which can be estimated simultaneously with the other parameters. Dedicated software has been developed to tackle this estimation problem, like the TRIO program (Gaudry 2008) and Biogeme (Bierlaire 2003). These software tools are capable of estimating the Box-Cox transformation parameter, but may have other limitations that make them less capable of handling other estimation problems at the same time, like composite size variables or choices at different choice dimensions (like using data for mode and destination choice where the destination is not known for part of the data). The run time for these software tools may also be quite long, which is a consequence of the more elaborate estimation procedure.
For estimation tasks involving large choice sets and complex nesting structures, there is a need for an estimation process that is fast and feasible. Using approximations of the Box-Cox transformation has been tested to some extent, and may be one interesting option. In recent modeling work for long distance travel in the UK, combinations of linear and logarithmic terms were used to capture nonlinear effects. This approach has been found to be useful also in the Swedish context. In this paper we will describe how this option can be generalized and how it can be used in practical work.

The paper is organized in the following way: We first describe the Box-Cox transformation, and possible approximations. Then we show an example of a real application of these approximations.

The Box-Cox transformation

Definition

The Box-Cox transformation is usually defined in the following way (Box and Cox 1964):

$$x^{(\lambda)} = \begin{cases} \dfrac{x^{\lambda} - 1}{\lambda}, & \lambda \neq 0 \\ \ln x, & \lambda = 0 \end{cases}$$

Special cases are when $\lambda$ is one or minus one, which corresponds to a linear function and the inverse function. Another obvious special case is when $\lambda$ is zero, which corresponds to the logarithmic function. In figure 1, transformations for some $\lambda$ values are shown.

Figure 1. Functional forms for different $\lambda$ values. [Plot: Box-Cox transforms for $\lambda$ = -0.5, -0.3, 0, 0.3 and 0.5, for x from 10 to 580.]

In our applications, we would expect a negative utility parameter to be associated with the transformed variable, yielding a negative degressive function for transformation parameters below 1.

Possible approximations

Assuming that the true functional form has the same general form as the Box-Cox transformation, we want to find an approximation that will correspond to the unknown $\lambda$ value. Intuitively, one would think that such a function could be obtained by a combination of two known functions, e.g. the function with $\lambda$ = 0.3 in figure 1 could be approximated by a combination of the functions with $\lambda$ = 0.5 and $\lambda$ = 0 respectively.
More generally this may be expressed as

$$a \cdot \frac{x^{\lambda} - 1}{\lambda} + (1 - a) \cdot \frac{x^{\lambda + d} - 1}{\lambda + d} \sim \frac{x^{\lambda + k} - 1}{\lambda + k}$$

where $a$ is the combination parameter, $\lambda$ and $d$ are known constants and $k$ is the unknown parameter that we want to obtain by identifying the combination parameter. The unknown $k$ will be a function not only of the combination parameter, but also of x, thus implying an approximation. We want the approximation to be as close as possible, and for this we need a measure of the fit. The sum of the squared differences is a standard measure of fit, which we adopt here as well. The combination parameters can then be estimated by a linear regression on the two known transforms. As the approximation depends on x, the combination parameter as well as the fit will then also depend on the range of x.

In Figure 2, for a range of x values we have plotted a Box-Cox function with a 0.5 transformation parameter and a utility parameter equal to minus one. We have also plotted the corresponding functions obtained by linear regression on the logarithmic ($\lambda$ = 0) and the linear ($\lambda$ = 1) transformations in two partly overlapping ranges. The Range 1 function has been estimated for x values between 20 and 620, whereas the Range 2 function has been estimated for x values between 120 and 720. The plots are all in the 20 to 720 range.

Figure 2. [Plot: BC(X), Approx_Range 1 and Approx_Range 2 against x, 20 to 720.]

This is an illustration of the nature of the approximation. As can be seen, the approximation is quite good in the range for which it is estimated, but deviates notably outside this range. This can be further illustrated by looking at the residuals in figure 3.

Figure 3. [Plot: residuals Diff_Range 1 and Diff_Range 2 against x, 20 to 720.]

The R2 for Range 2 is larger than for Range 1 (0.99974 and 0.99903 respectively).
The fit to data within each range is obviously quite good, but will be worse if the estimated functions are extrapolated, specifically in the lower range (where the slope of the function changes faster).

Using the logarithmic and the linear functions as known functions has been applied in real applications. Daly (2011) has also shown the equivalence between the Box-Cox function and the gamma function, see appendix 2. But this may not be the optimal approach. Intuitively, we would expect a closer approximation if we use known functions that are closer to the true function. In figure 4 we again plot the true Box-Cox function having a transformation parameter of 0.5 and a utility parameter of minus 1, together with the two estimated approximations, now using Box-Cox functions with transformation parameters 0.7 and 0.3.

Figure 4. [Plot: BC(X), Approx_Range 1 and Approx_Range 2 against x, 20 to 720, using known functions with transformation parameters 0.7 and 0.3.]

It is now very difficult to observe any differences. The fit of the two approximations has now increased to an R2 of 0.999969 and 0.999992 for Range 1 and Range 2 respectively. The residuals are plotted in Figure 5.

Figure 5. [Plot: residuals Diff_Range 1 and Diff_Range 2 against x, 20 to 720.]

The pattern of the differences is still the same, but reduced in size. So, by using functions that are closer to the true function, we may achieve closer approximations. It also turns out that using two closer functions is more important than using functions whose transformation parameters bracket that of the true function. In figure 6 we plot approximations using known functions with transformation parameters 0.7 and 0.6.

Figure 6. [Plot: BC(X), Approx_Range 1 and Approx_Range 2 against x, 20 to 720, using known functions with transformation parameters 0.7 and 0.6.]

These functions perform even better than using the 0.7 and 0.3 transformation parameters.
The residuals are now even smaller (Figure 7), because the known functions are now even closer to the true function (the transformation parameters 0.7 and 0.6 are closer to the true transformation parameter, 0.5).

Figure 7. [Plot: residuals Diff_Range 1 and Diff_Range 2 by observation index, 1 to 35.]

Another limitation of the approach with the logarithmic and the linear functions, i.e. using the transformation parameters 1 and 0, is that it is difficult to approximate true functions with negative transformation parameters. In figure 8 we plot a true Box-Cox function with the transformation parameter -0.5, and approximations using the 0 and 1 transformation parameters (Approx_Range 1) and the 0 and -1 transformation parameters (Approx2_Range 1) respectively. For simplicity, we use only the lower data range (Range 1).

Figure 8. [Plot: BC(X), Approx_Range 1 and Approx2_Range 1 against x, 20 to 720.]

If the known functions with transformation parameters 0 and 1 are used, the regression parameters get different signs. This implies that the resulting approximation is no longer monotonically decreasing, as the positive component eventually will more than offset the negative component. This is of course a very undesirable property, which has prohibited the use of this particular approximation in the negative range of the Box-Cox transformation parameter. The approximation is still fairly close in terms of R2 (0.99757), but the approximation using 0 and -1 as transformation parameters for the known functions is closer, and does not have the undesirable property. As before, we may inspect the residuals for the different approximations (figure 9):

Figure 9. [Plot: residuals Diff_Range 1, Diff_Range 2, Diff2_Range 1 and Diff2_Range 2 against x, 20 to 720.]

In Figure 9, residuals from four approximations are plotted.
The 0/1 transformation approximations are denoted Diff, and the 0/-1 transformation approximations are denoted Diff2. It is quite obvious that the 0/-1 parameter approximation performs better than the 0/1 parameter approximation in both ranges, giving an R2 of 0.99994 and 0.99999 for the low and high ranges, compared to 0.99757 and 0.99968 for the 0/1 transformation parameter approximation.

As for positive true transformation parameter cases, we can improve the approximation by using closer known functions. In figure 10, we plot the residuals for the 0/-1 transformation parameter approximation (labeled Diff) as well as for a -0.3/-0.7 transformation parameter approximation (labeled Diff2).

Figure 10. [Plot: residuals Diff_Range 1, Diff_Range 2, Diff2_Range 1 and Diff2_Range 2 by observation index, 1 to 35.]

It is obvious from the figure that the -0.3/-0.7 transformation parameter approximation gives a closer approximation, especially when extrapolating the approximation.

It is of course desirable to obtain as close an approximation as possible. As has been shown, the approximation can be improved by closing in on the true (but unknown) transformation. A question is then how we can know how to change the known function transformation parameters to become closer to the unknown transformation parameter. One way is of course to plot the known functions and the estimate using a wider transformation parameter range for the known functions (such as the 0/1 range). Another way would be to reverse engineer the regression parameters to obtain the Box-Cox parameter that minimizes the sum of squared residuals between a Box-Cox function and the estimated function value. That will give a transformation parameter value that can be used to guide the choice of transformation parameters for the known functions.
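The regression-based approximation and the "reverse engineering" of the transformation parameter described above can be sketched as follows. This is an illustration under stated assumptions, not the code used in the study: the regression includes an intercept, the x grid (20 to 620 in steps of 10) is a guess at Range 1, and the function names and the search grid for the recovered parameter are hypothetical.

```python
import numpy as np


def box_cox(x, lam):
    """Box-Cox transform; the logarithm is the limiting case lam = 0."""
    x = np.asarray(x, dtype=float)
    return np.log(x) if lam == 0 else (x**lam - 1.0) / lam


def fit_approximation(x, target, lam_a, lam_b):
    """Least squares fit of target on [1, BC(x, lam_a), BC(x, lam_b)].

    Returns the coefficients, the fitted values and R2.
    """
    X = np.column_stack([np.ones_like(x), box_cox(x, lam_a), box_cox(x, lam_b)])
    coef, *_ = np.linalg.lstsq(X, target, rcond=None)
    fitted = X @ coef
    ss_res = np.sum((target - fitted) ** 2)
    ss_tot = np.sum((target - target.mean()) ** 2)
    return coef, fitted, 1.0 - ss_res / ss_tot


def recover_lambda(x, fitted, grid):
    """Grid value of lambda whose Box-Cox curve (with free scale and
    intercept) has the smallest sum of squared residuals against fitted."""
    best_lam, best_ss = None, np.inf
    for lam in grid:
        X = np.column_stack([np.ones_like(x), box_cox(x, lam)])
        coef, *_ = np.linalg.lstsq(X, fitted, rcond=None)
        ss = np.sum((fitted - X @ coef) ** 2)
        if ss < best_ss:
            best_lam, best_ss = lam, ss
    return best_lam


# "True" function: transformation parameter 0.5, utility parameter -1.
x = np.arange(20.0, 621.0, 10.0)   # assumed Range 1 grid
true_curve = -box_cox(x, 0.5)

# Approximation with the logarithmic and linear known functions (0/1) ...
_, fit_01, r2_01 = fit_approximation(x, true_curve, 0.0, 1.0)
# ... and with known functions closer to the true one (0.3/0.7).
_, fit_37, r2_37 = fit_approximation(x, true_curve, 0.3, 0.7)

# Recover an indicative transformation parameter from the 0/1 fit.
lam_hat = recover_lambda(x, fit_01, np.arange(0.05, 1.0, 0.01))
```

The recovered `lam_hat` is only a guide for choosing closer known functions in a next round; as noted in the text, it comes without a standard deviation.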
Using approximations in real applications

Up to this point we have examined data without any disturbances, and not really in a discrete choice setting. In real applications, however, we will use approximations in a discrete choice context where random disturbances and correlation among different variables will influence the estimates. We first note that the method has been applied in modelling work in the UK (Fox et al 2009), where the 0/1 transformation parameter combination was used for the known functions. In recent Swedish research, the 0/-1 transformation parameter combination was also used. In this research, mode and destination models were estimated for tours with a one-way distance of more than 100 km. The tours were segmented according to purpose and duration, and in this section we will use the mode and destination model for private tours arriving at and departing from the destination on the same day. The model structure was a nested logit model, with mode choice at the top, a destination level at the municipality level (290 municipalities in Sweden) in the middle, and a second destination level at the bottom with 670 zones. To keep the number of alternatives to a reasonable size, 20 municipalities were sampled at random, and then 2 zones were sampled from each municipality. The modes comprised car, bus, train and air. For this data set, the 0/1 approximation was first applied. The results are shown in table 2 together with a linear model version. For simplicity, a number of variables (mostly dummy variables associated with different modes) are excluded from the table.

Table 2 (parameter estimates, t-values in parentheses)

| Name | Linear | First approximation | Second approximation |
|---|---|---|---|
| Observations | 4320 | 4320 | 4320 |
| Final log(L) | -9586,0713 | -9035,4795 | -8994,9004 |
| D.O.F. | 33 | 40 | 40 |
| Rho²(0) | 0,5390 | 0,5655 | 0,5675 |
| AEA | -0,02613 (-4,4) | -0,02475 (-3,8) | -0,02501 (-3,9) |
| AccEgrBT | -0,03598 (-9,6) | -0,04267 (-10,3) | -0,04201 (-10,2) |
| LinFW | -0,00207 (-2,4) | | |
| LinTC | -0,02782 (-24,8) | 0,00000 (*) | 0,00000 (*) |
| LogTTBA | 0,00000 (*) | -1,00381 (-3,5) | -1,10896 (-4,0) |
| LinTTBA | -0,01170 (-14,3) | -0,00296 (-2,6) | -0,00224 (-2,1) |
| LogC_0 | 0,00000 (*) | -2,10315 (-6,4) | -1,95771 (-6,2) |
| LinC_0 | -0,01077 (-9,8) | -0,00308 (-2,3) | -0,00394 (-2,8) |
| LogC_12 | 0,00000 (*) | -2,31340 (-8,0) | -2,19586 (-7,9) |
| LinC_12 | -0,00737 (-9,4) | -0,00092 (-1,2) | -0,00144 (-1,9) |
| LogC_34 | 0,00000 (*) | -2,17654 (-7,7) | -2,08627 (-7,7) |
| LinC_34 | -0,00550 (-7,9) | -0,00065 (-1,0) | -0,00106 (-1,6) |
| LogC_56 | 0,00000 (*) | -1,33745 (-2,6) | -1,25979 (-2,5) |
| LinC_56 | -0,00370 (-2,6) | -0,00126 (-0,7) | -0,00178 (-1,0) |
| SizeCS | 1,00000 (*) | 1,00000 (*) | 1,00000 (*) |
| SizeSH | 0,19361 (2,3) | 0,26786 (3,1) | 0,30440 (3,5) |
| Theta1 | 0,69854 (38,0) | 0,72459 (39,2) | 0,74810 (40,1) |
| Theta2 | 0,78149 (14,5) | 0,56673 (11,4) | 0,53996 (11,7) |
| TT_C_A1 | | -3,96185 (-13,1) | -0,05646 (-0,2) |
| TT_C_A2 | | 0,00747 (6,8) | -343,49939 (-10,7) |
| FW_A1 | | -0,68848 (-4,4) | -0,47483 (-3,2) |
| FW_A2 | | 0,00335 (2,2) | 2,26343 (0,5) |

The parameters reported here concern access/egress time and headway for public transport, separate in-vehicle times for car and public transport, income-segment-specific cost, zone size and the nesting parameters. All of these are significant at normal risk levels in the linear model. Allowing for nonlinearities by adding a logarithmic term for headway, in-vehicle times and costs improves the model considerably in terms of log likelihood units. For the public transport in-vehicle time variables, both parameters are negative, which suggests a transformation parameter in the unit interval. This is also the case for the cost variables, but some of the linear components are not statistically significant.
For headway and car time, the linear components are positive, suggesting that the true functions have negative transformation parameters. As an illustration, we plot the estimated approximation of the headway function (Y) as well as the closest possible Box-Cox transformation Est(BC(X)) over the value range in the data (Figure 11). It must however be noted that the transformation parameter may have to be constrained to the unit interval, at least for some parameters (see Daly 2010).

Figure 11: estimated headway approximation and closest Box-Cox function (series: Y=F1par*F1 + F2par*F2, Est(BC(X)))

The problem with the approximation is obvious. The Box-Cox transformation parameter giving the best fit has the value -0.37. This suggests that the approximating functions should be closer to this value. Correspondingly, the Box-Cox function giving the best fit to the car in-vehicle time approximation has the transformation parameter -0.08. We therefore change the transformation parameters of the known functions from 0 and 1 to 0 and -1 for both headway and car in-vehicle time. We then get the results labelled "Second approximation" in Table 2. We now get another improvement in terms of log likelihood.

References

Bierlaire, M. (2008) An introduction to Biogeme. http://biogeme.epfl.ch/
Box, G.E.P. and Cox, D.R. (1964) An Analysis of Transformations. Journal of the Royal Statistical Society, Series B (Methodological), Vol. 26, No. 2, pp. 211–252.
Daly, A. (2011) Equivalence of Box-Cox and 'Gamma' functions, unpublished note.
Fox, J., Daly, A., Patruni, B. (2009) Improving the Treatment of Cost in Large Scale Models. Paper presented at the 2009 ETC conference.
Gaudry, M. (2008) Non linear logit modelling developments and high speed rail profitability, Agora Jules Dupuit, Publication AJD-127, www.e-ajd.org.
Gaudry, M., Duclos, L-P., Dufort, F., Liem, T.
(2008) TRIO Reference Manual, Version 2.0. http://www.e-ajd.net/

Appendix 2 Equivalence of Box-Cox and 'Gamma' functions

Andrew Daly, RAND Europe, 2 November 2011

This note explores the equivalence between Box-Cox and 'Gamma' functions and their use in choice modelling.

Basic equations

Box-Cox is defined as usual by

$$x^{(\alpha)} = \begin{cases} \dfrac{x^{\alpha} - 1}{\alpha}, & \text{when } \alpha \neq 0 \\ \log x, & \text{when } \alpha = 0 \end{cases}$$

Inspired by old-fashioned transport planning jargon, we use the 'Gamma' label to apply to functions defined by¹

$$x^{[\alpha]} = \alpha x + (1-\alpha)\log(x) - \alpha$$

Note that when x=1, both transformations take the value 0, whatever the value of α. Moreover, if we differentiate

$$\left(x^{(\alpha)}\right)' = x^{\alpha-1}, \qquad \left(x^{[\alpha]}\right)' = \alpha + (1-\alpha)x^{-1}$$

both of which are equal to 1 at x=1, whatever the value of α. Finally, when we differentiate a second time, we obtain

$$\left(x^{(\alpha)}\right)'' = (\alpha-1)x^{\alpha-2}, \qquad \left(x^{[\alpha]}\right)'' = -(1-\alpha)x^{-2}$$

both of which are equal to (α-1) at x=1. So at this specific value of x, the functions are equal and have the same slope and curvature. It also seems that the function of α is the same in each case.

¹ More standard mathematical nomenclature is to define the gamma function as the integral of the exponential of this function (see http://en.wikipedia.org/wiki/Gamma_function). The subtraction of α in this formula makes the functions equal at x=1.

However, the question is what happens when we move away from x=1. Clearly this depends on the value of α: this is explored in the following graphs, which show first the transformed values for α=0.333... and α=0.666... for values of x from close to 0 to 5; then the transformed values for x=0.2, x=0.5, x=2 and x=5 for the full range of α values between 0 and 1.² Looking first at the curves for fixed α, we see that for high and low values of x there is some difference, but for a substantial range around 1 the curves are very close.
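The agreement at x=1 can be carried one order further as a worked check. The expansion below is not part of the original note; it is a sketch that follows only from the two definitions above, using the third derivatives (α-1)(α-2) for Box-Cox and 2(1-α) for 'Gamma' at x=1.

```latex
\begin{align*}
x^{(\alpha)} &= (x-1) + \tfrac{\alpha-1}{2}(x-1)^2
              + \tfrac{(\alpha-1)(\alpha-2)}{6}(x-1)^3 + O\big((x-1)^4\big),\\
x^{[\alpha]} &= (x-1) + \tfrac{\alpha-1}{2}(x-1)^2
              + \tfrac{2(1-\alpha)}{6}(x-1)^3 + O\big((x-1)^4\big),\\
x^{(\alpha)} - x^{[\alpha]} &= \tfrac{\alpha(\alpha-1)}{6}(x-1)^3 + O\big((x-1)^4\big).
\end{align*}
```

Under this expansion the leading difference is cubic in (x-1) and vanishes at α=0 and α=1, which is consistent with the curves being close around x=1 and identical at the two end values of α.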
Figure: Difference between Gamma and Box-Cox at different values of α (transformed x value against x from 0 to 5; series: BC (a=0.33), Gamma (a=0.33), BC (a=0.67), Gamma (a=0.67))

Looking at the curves for fixed x, we note that for α=0 and α=1 the functions are identical, so it is only for intermediate values that there is a difference. For x=2 the maximum difference occurs at α=0.52 and is about 2%, too small to be clear on the graph. For x=5, the maximum difference is also at α=0.52 and is about 13%. For x=0.5 we have a maximum difference of less than 2%, but for x=0.2 the difference is as much as 8%. Again, for x close to 1 the differences are small; for large and small values of x larger differences occur, but within a range of a factor of 5 larger or smaller the differences are not large.

² Values of α outside this range are not compatible with the framework of the problem; see Daly, A. (2008) The relationship of cost sensitivity and trip length, http://www.dft.gov.uk/pgr/economics/rdg/costdamping/pdf/costdamping.pdf.

Figure: Difference between Gamma and Box-Cox at different values of x (transformed x value against α from 0 to 1; series: BC and Gamma for x=2, x=5, x=0.2 and x=0.5)

Conclusion

We conclude that for values of x close to 1 these transformations are performing the same function and are closely equal. Further from 1 the differences are not so small, but they are still not large. Moreover, simply by using the same parameter values (noting the subtraction of α from the Gamma formulation) we can get exact equality when x=1 and when α=0 or α=1. The parameter α has essentially the same meaning in the two functions.

Use in choice modelling

In choice modelling using RUM the Gamma formulation is much easier to use than Box-Cox, as we can set it up as a linear-in-parameters model of utility

$$V = \dots + \beta_1 x + \beta_2 \log(x)$$

with $\alpha = \beta_1 / (\beta_1 + \beta_2)$.
To keep α within the range [0, 1], the two parameters must not have opposite signs, which is necessary in any case to fit with the logic of utility functions. ALOGIT is not able to estimate directly models that are not linear in parameters; while Biogeme can do this, it tends to be very slow in converging, so in this case also the Gamma formulation is preferable. We showed close equivalence between Gamma and Box-Cox for values of x close to 1. In practice this can be achieved by dividing the variable used in the modelling by its mean, so that a clustering of values close to 1 would be expected and values such as 5 might be unusual. It would be interesting to estimate some Gamma models and then to repeat the runs using Box-Cox with the same formulation to see how similar the results are. In conclusion, the Box-Cox and 'Gamma' transformations give very similar results when the same transformation parameter is used. There is no reason to regard one of these functions as having higher status than the other, so we may use whichever is more convenient. For choice model estimation this is the Gamma function.
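The comparison in this note can be reproduced numerically with a short Python sketch. It is an illustration only: the function names, the 0.01 grid over α and the example coefficients are assumptions introduced here, and the gap is measured as an absolute difference, which may not be exactly the measure behind the percentages quoted in the note.

```python
import math


def box_cox(x, alpha):
    """Box-Cox transform; log(x) when alpha is 0."""
    if alpha == 0:
        return math.log(x)
    return (x ** alpha - 1.0) / alpha


def gamma_transform(x, alpha):
    """'Gamma' transform: alpha*x + (1 - alpha)*log(x) - alpha."""
    return alpha * x + (1.0 - alpha) * math.log(x) - alpha


def alpha_from_betas(beta_lin, beta_log):
    """Implied alpha from V = ... + beta_lin*x + beta_log*log(x)."""
    if beta_lin * beta_log < 0:
        raise ValueError("opposite signs: implied alpha falls outside [0, 1]")
    return beta_lin / (beta_lin + beta_log)


def max_gap(x, steps=100):
    """Largest |Gamma - Box-Cox| over alpha in [0, 1], and where it occurs."""
    alphas = [i / steps for i in range(steps + 1)]
    gaps = [abs(gamma_transform(x, a) - box_cox(x, a)) for a in alphas]
    worst = max(range(len(alphas)), key=gaps.__getitem__)
    return alphas[worst], gaps[worst]


for x in (0.2, 0.5, 2.0, 5.0):
    a_star, gap = max_gap(x)
    print(f"x={x}: largest gap {gap:.4f} at alpha={a_star:.2f}")

# Hypothetical coefficients, both negative as a disutility requires.
print(alpha_from_betas(-0.02, -0.06))
```

Raising an error on opposite signs mirrors the restriction stated above; a real estimation setup might instead impose the sign constraint during estimation.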