Word Grammar for Encyclopedia of Language and Linguistics

advertisement

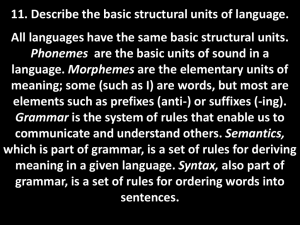

In Ronald Asher (ed.) ENCYCLOPEDIA OF LANGUAGE AND LINGUISTICS, Oxford: Pergamon, 1994, 4990-3.

'Word Grammar'

Richard Hudson

[Please look at the end for the Postscript written in 1997!]

Word Grammar (WG) is a theory of language structure which is most fully described in Hudson (1984, 1990a). Its most important distinguishing characteristics are the following:

a. that knowledge of language is assumed to be a particular case of more general types of knowledge, distinct only in being about language; and
b. that most parts of syntactic structure are analysed in terms of dependency relations between single words, and constituency analysis is applied only to coordinate structures.

To take a simple example, the grammatical analysis of Fred lives in London can be partially shown by the diagram in [1].

[1] [diagram not reproduced]

Each arrow in this diagram points from a word to one of its dependents, but there are no extra nodes for phrases or clauses. The similarities between linguistic and non-linguistic knowledge are of various kinds which are outlined in the first section.

1. Linguistic and non-linguistic knowledge

WG is an example of what has come to be called 'Cognitive Linguistics' (Taylor 1989), in which the similarities between language and other types of cognition are stressed. These similarities are of three main types:

a. in the data structures;
b. in the categories of grammar;
c. in the processing mechanisms.

In each case it is possible to find at least prima facie evidence of non-linguistic knowledge which parallels the knowledge of language. Although the analysis of both the linguistic and the non-linguistic examples is a matter for further research and discussion, the extreme 'modularity' assumed by Chomskyan linguists appears at present to be untenable.
On the other hand, if it should turn out that even the 'Cognitive Linguistics' research program cannot find a convincing non-linguistic parallel for some part of language structure, then this evidence will be all the more convincing for not having arisen out of our initial assumptions.

A WG grammar consists of an unordered list of propositions, which we can call 'facts'. All WG propositions have just two arguments and a relation, and all relations can be reduced to a single one, '=', although for convenience we also allow 'isa' and 'has'. Examples follow:

type of object of verb = noun.
DEVOUR isa verb.
DEVOUR has [1-1] object.

These facts are self-explanatory except for a few technical expressions ('isa' means 'is an instance of', and '[1-1]' means 'at least 1 and at most 1'). This very simple database defines a network of concepts whose links are of many different types (e.g. 'type of object of'). It is a commonplace of current cognitive psychology that knowledge in general is organised in this way, so the data structures of language are similar in fundamental respects to those of general knowledge.

Furthermore the 'isa' relations (e.g. between DEVOUR and 'verb', and between 'verb' and 'word') are exploited by inheritance, whereby DEVOUR inherits all the properties of 'verb', and via 'verb' all those of 'word'. It is quite uncontroversial to assume that this is also how general knowledge is organised, so here too we find a similarity between the data structures of language and of non-language. Section 3 discusses inheritance in more detail.

The second similarity between language and non-language involves the categories to which the grammar refers. Specifically it can be shown that every category referred to in the grammar is a special case of some general category which is part of general knowledge. For example: 'word' is a special kind of 'action'; 'dependent' is a special kind of 'companion', a relation between two events linked in time (e.g.
between the two events referred to by John followed Mary into the room); 'conjunct' (a relation used in the syntax of coordination) and 'stem' (referred to in morphology) are each a special kind of 'part'. Thus every linguistic concept inherits some properties which are also available for non-linguistic concepts, so an analysis of a grammar is incomplete unless it is part of a more general analysis of these parts of general knowledge.

These parallels are supported by other connections between the categories of language and those of general knowledge. Although syntactic dependencies typically link one word to another, there are a few points in a grammar which allow a dependency to link a word to something which is not a word. The clearest example of this in English is the word go, whose complement may be some non-linguistic action (e.g. He went [wolf-whistle]). In this example non-linguistic categories function like linguistic ones; but the converse can also be found, with linguistic categories replacing the more normal non-linguistic ones. This is the case when language is used to talk about language; e.g. the meaning of Fred said a word contains the concept 'word', which is part of the grammar, alongside clearly non-linguistic concepts like 'Fred'.

The third similarity between linguistic and non-linguistic knowledge involves the processes by which we exploit them. According to WG, sentences are parsed by applying the same general procedures as we use when understanding other kinds of experience: a complex mixture of segmentation, identification and inheritance. This is probably the weakest part of WG theory because so little is known about processing that it is hard to show close similarities between language and non-language.

One particular kind of processing of knowledge is learning. According to WG the cognitive structures that a child has developed before it learns to talk are similar to the grammatical structures which it has to learn.
This makes the feat of learning language much less impressive and mysterious than in the modular view where the grammar is a unique structure which would have to be built from scratch if it were not innate. At the same time, however, we have to recognise some research challenges which arise under either assumption; in particular, it is hard to explain how children learn that some structure is not possible (e.g. *I aren't your friend; cf. Aren't I your friend?) in the absence of negative evidence.

2. Enriched dependency structures

The other highly controversial characteristic of WG is its use of dependency analysis as the basis for syntax. In this respect WG is like Daughter Dependency Grammar (DDG), out of which it developed; however, unlike DDG, it makes no use of phrases or clauses, categories which are central to the theory out of which DDG was developed, Systemic Grammar.

'Dependency analysis' is an ancient grammatical tradition which can be traced back in Europe at least as far as the Modistic grammarians of the Middle Ages, and which makes use of notions such as 'government' and 'modification'. In America the Bloomfieldian tradition (which in this respect includes the Chomskyan tradition) assumed constituency analysis to the virtual exclusion of dependency analysis, but the dependency tradition was preserved in Europe, and particularly in Eastern Europe, to the extent of providing the basis for grammar teaching in schools. However there has been very little theoretical development of dependency analysis, in contrast with the enormous amount of formal, theoretical and descriptive work on constituent structure.

In one respect WG is stricter than typical European dependency grammar, namely in its treatment of word order. According to WG all dependency structures (in all languages) are controlled by an 'adjacency principle' (also known in Europe as the principle of 'Projectivity'; it should be distinguished carefully from the 'Adjacency principle' of Government Binding theory).
The adjacency principle can most easily be explained by referring to the derived notion 'phrase'. A dependency analysis defines 'phrases' as by-products (rather than as the basic categories of the analysis): for each word (W) there is a phrase consisting of W plus the phrases of all the words that depend on W. (W is called the 'root' of this phrase.) For example, in He lives in London the dependency analysis defines in London as a phrase because only London depends on in; but it also defines the whole clause as a 'phrase' with lives as its root.

Given this definition of 'phrase', the adjacency principle requires all phrases to be continuous (with one important exception discussed below). For example, the phrase in large cities is fine, but large cannot be placed before in (*large in cities) because this would produce a phrase (large cities) separated by a word which is not part of that phrase. The adjacency principle is assumed to apply to all languages, but it remains to be seen whether the apparent counter-examples can all be explained.

This version of the adjacency principle is roughly equivalent to a context-free phrase-structure grammar, so even English contains a great many structures which cannot be analysed, and which show that this version of the adjacency principle is too strict. Take a so-called 'raising' example like (1).

(1) It kept raining.

It is generally agreed that it is the subject of raining as well as of kept. But this means that the phrase whose root is raining contains it but not kept, so this phrase is discontinuous but grammatical.

To allow for such counter-examples, without abandoning the adjacency principle, the rules of traditional dependency grammar are relaxed in various ways. First, one word is allowed to be a dependent of more than one other word, a move which is needed in any case for (1) in order to show that it is the subject of the participle as well as of kept. This gives the structure in [2].
[2] [diagram not reproduced]

Second, discontinuity is permitted just in case the interrupter is the head both of the phrase-root and also of its separated dependent, as in [2]. This is the exception to the simple adjacency principle mentioned above, and it is built into the universal adjacency principle. The dependency configuration in [2] can be described as the 'raising pattern' (Hudson 1990b).

The raising pattern is responsible for many of the complexities of syntax, in addition to raising structures themselves (and the closely related 'control' structures). It is responsible for the extraction found in examples like Beans you know I like, where beans is the object of like. The WG structure for this example is shown in [3].

[3] [diagram not reproduced]

The crucial innovation in [3], compared with traditional dependency analyses, is the dependency relation 'visitor', which is functionally equivalent to the '(spec of) comp' relation which is provided for extractees in PSG-based analyses. The visitor dependency defines the extractee as a part of a series of phrases: first the phrase whose root is know, and second the one whose root is like. Although the latter is discontinuous, it is permitted by the adjacency principle because the interrupter, know, is the head of both beans and like.

The example [3] illustrates the way in which the relatively rich dependency structures of WG combine both 'deep' and 'surface' information into a single structure, thereby making transformations unnecessary. Similar analyses are provided for other structures which are often taken as evidence for multiple levels of structure related by transformations. Passives, for example, are given a structure in which the subject also has the dependency relation 'object' as in [4]:

[4] [diagram not reproduced]

Section 3 will show how structures like this are generated, but the main point to be noted here is that the power of WG comes from the possibility of multiple dependency analyses applied to a single, completely surface, structure.
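
The simple version of the adjacency principle described in this section lends itself to a short illustration. The following Python sketch is not part of WG itself and rests on several assumptions: a sentence is encoded as a list of words plus (head, dependent) pairs of word positions, the dependency structure is assumed to contain no cycles, and the function names `phrase` and `discontinuous_roots` are hypothetical. It derives each word's phrase as defined above and reports the words whose phrases are discontinuous. The raising-pattern exception is deliberately left out, so It kept raining is flagged, just as the text says the simple principle would flag it.

```python
def phrase(root, deps):
    """Return the positions in the phrase rooted at `root`: the root itself
    plus the phrases of all the words that depend on it."""
    members = {root}
    for head, dependent in deps:
        if head == root:
            members |= phrase(dependent, deps)
    return members


def discontinuous_roots(words, deps):
    """Return the words whose phrases contain a gap, i.e. the violations of
    the simple adjacency principle (no raising-pattern exception)."""
    offenders = []
    for position, word in enumerate(words):
        span = phrase(position, deps)
        if max(span) - min(span) + 1 != len(span):
            offenders.append(word)
    return offenders


# Each pair is (head position, dependent position).
print(discontinuous_roots(["in", "large", "cities"], [(0, 2), (2, 1)]))
print(discontinuous_roots(["large", "in", "cities"], [(1, 2), (2, 0)]))
# "it" depends on both "kept" and "raining", as in structure [2].
print(discontinuous_roots(["it", "kept", "raining"], [(1, 0), (1, 2), (2, 0)]))
```

Under these assumptions the first call reports no offenders, the second reports cities (whose phrase large cities is split by in), and the third reports raining, which is the case the raising-pattern exception is meant to admit.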
As will be seen in Section 4, this kind of analysis promises to be very suitable for mechanical parsing.

3. Inheritance and Isa relations

It has already been seen that a WG grammar consists of a list of propositions, and that some of the propositions have the predicate 'isa', which links concepts hierarchically in so-called 'isa-hierarchies'. One isa-hierarchy contains all the various word-types, from the most general type, 'word', through sub-types like 'noun', 'pronoun' and 'reflexive-pronoun', down to individual lexical items (and even further, as we shall see). Another hierarchy contains all the different dependency relations, from 'dependent' at the top down through relations like 'postdependent' and 'complement' to the most specific concepts like 'indirect object'. These are the richest hierarchies involved in grammar, but there are many more smaller ones. So the isa-hierarchy plays a fundamental part in a WG grammar.

Isa-hierarchies are exploited by means of 'inheritance', whereby facts about higher categories automatically apply to categories below them in the hierarchy. For example, suppose a grammar contained the following facts (where 'it' is a variable standing for the italicised word).

(2a) position of predependent of word = before it.
(2b) subject isa predependent.
(2c) RUN isa word.

By inheritance it can be inferred from (2) that (3) is also true.

(3) position of subject of RUN = before it.

Not only grammars but also sentence structures consist of lists of facts; dependency diagrams are just convenient summaries of a small subset of these facts. Since the words in a sentence are different from the lexical items of which they are instances, they are given different names as well - names like W1, W2, and so on. The structure of the sentence Fred ran is thus given in part by the following propositions (4a-e):

(4a) structure of W1 = <Fred>.
(4b) structure of W2 = <ran>.
(4c) W1 isa FRED.
(4d) W2 isa RUN.
(4e) subject of W2 = W1.
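
The inference from (2) to (3), and the way the facts in (4) tie a sentence to the grammar, can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not WG's own formalism: the dictionary encoding, the single-parent isa links, the link from FRED directly to 'word', the `ORDER` table and the names `ISA`, `POSITION`, `ancestors`, `position_of` and `subject_order_ok` are all hypothetical.

```python
# Isa links: each concept points to its immediate supercategory.
# A single parent per concept is an assumption of this sketch.
ISA = {
    "subject": "predependent",  # (2b)
    "RUN": "word",              # (2c)
    "FRED": "word",             # assumed; intermediate categories omitted
    "W1": "FRED",               # (4c)
    "W2": "RUN",                # (4d)
}

# Facts of the form "position of <relation> of <category> = <value>".
POSITION = {("predependent", "word"): "before it"}  # (2a)

# Sentence facts for "Fred ran".
SUBJECT_OF = {"W2": "W1"}   # (4e)
ORDER = {"W1": 0, "W2": 1}  # linear order of the words (assumed encoding)


def ancestors(concept):
    """Yield the concept itself, then each category above it in the isa-hierarchy."""
    while concept is not None:
        yield concept
        concept = ISA.get(concept)


def position_of(relation, category):
    """Return the position fact inherited for this relation and category, if any."""
    for cat in ancestors(category):
        for rel in ancestors(relation):
            if (rel, cat) in POSITION:
                return POSITION[(rel, cat)]
    return None


def subject_order_ok(head):
    """Check that the subject of `head` stands where the inherited fact requires."""
    required = position_of("subject", head)
    subject = SUBJECT_OF[head]
    if required == "before it":
        return ORDER[subject] < ORDER[head]
    if required == "after it":
        return ORDER[subject] > ORDER[head]
    return True  # no position fact inherited, so nothing to violate


print(position_of("subject", "RUN"))  # derives fact (3)
print(subject_order_ok("W2"))
```

With these facts the first call yields 'before it', which is fact (3), and the second confirms that W1 precedes W2 as the inherited fact requires.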
It is probably easy to see how a grammar acts as a set of well-formedness conditions on sentence-structures. A structure is generated provided that each word in the sentence can be assigned, as an instance, to some word in the grammar, and that all the facts which are true of that grammar-word can be inherited onto the sentence-word without contradiction. For example, we know that the subject of RUN should precede it (3), so since W2 isa RUN (4d) the subject of W2 should also precede it; and since W1 is the subject of W2 (4e) it should therefore be the case that W1 precedes W2. Grammars are applied to sentences by ordinary logic, which again supports the claim that language is similar to non-linguistic knowledge.

One consequence of locating all grammatical facts in isa-hierarchies is that there is no distinction, either in principle or in practice, between 'the grammar proper' and 'the lexicon'. To the extent that the lexicon can be identified at all, it is just the set of facts that refer to categories in the lower regions of the total isa-hierarchy. It is tempting to classify WG as an extremely 'lexicalist' theory of grammar, because of the central role assigned to individual words, but this is a meaningless claim in the absence of a boundary between the lexicon and the rest of the grammar.

The system of inheritance in WG deserves a little more explanation because the treatment of exceptions is unique. Inheritance is generally called 'default inheritance', because facts are inherited only by default, i.e. for lack of any specified alternative. For example, the default fact about past verbs is (5), where 'mEd' is the name of the morpheme -ed.

(5) structure of past verb = stem of it + mEd.

It is usually assumed that an inheritable fact applies only by default, i.e. when a more specific fact is not available; so fact (6) should block (5).

(6) structure of past DREAM = <dreamt>.
This prediction is incorrect, because DREAM allows a regular past tense as well as the irregular one, and the same is true of a significant number of English verbs. An inheritance system in which inheritance is strictly 'by default' cannot allow alternatives (except by listing, which obscures the fact that one alternative is in fact inherited regularly).

The WG system of inheritance reverses the statuses of alternative and blocking facts. According to WG, every fact about some category (C) is normally inheritable by every other category which is below C in an isa-hierarchy, regardless of whether or not more specific facts provide alternatives. Cases where a more specific fact overrides a more general one are treated as exceptions, which have to be listed individually. The mechanism for recording an overriding fact is a special kind of proposition, introduced by 'NOT'. For example, the past tense of SING is recorded as follows in (7):

(7a) structure of past SING = <sang>.
(7b) NOT: structure of past SING = stem of it + mEd.

This analysis suggests that SING is more irregular than DREAM, because SING needs one more fact - (7b) - than DREAM. This seems correct.

This inheritance system, with stipulated rather than automatic overriding, applies throughout the grammar, and not just in morphology. Take the passive example given earlier, in [4]: Fred was chosen. If Fred is the object of chosen, Fred would be expected to occur in the normal position for objects. It does not, because the normal rule is overridden by a very general rule which applies to all predependents (a category which includes both 'subject' and the 'visitor' relation that we met in discussing extraction).

(8a) position of dependent of word = after it.
(8b) position of predependent of word = before it.
(8c) NOT: position of predependent of word = after it.

English is basically a head-initial language (8a), but some types of dependent systematically precede their heads (8b), and cannot follow them (8c).
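
Stipulated overriding, as applied to the past-tense facts (5)-(7) and the position facts (8), can be sketched as one small procedure. The Python below is an illustrative assumption rather than WG's own machinery: facts are stored as plain strings in dictionaries, each concept has a single isa parent, 'object' is attached directly to 'dependent' (skipping intermediate relations), and the names `ancestors` and `inherit` are hypothetical. Every value stated anywhere up the isa-hierarchy is inherited, and only an explicit NOT fact removes one.

```python
def ancestors(concept, isa):
    """Yield the concept itself, then each category above it in the isa-hierarchy."""
    while concept is not None:
        yield concept
        concept = isa.get(concept)


def inherit(concepts, isa, facts, nots):
    """Collect every value stated for the given concepts or their
    supercategories, then drop the values cancelled by a NOT fact."""
    values, blocked = [], set()
    for concept in concepts:
        for category in ancestors(concept, isa):
            for value in facts.get(category, []):
                if value not in values:
                    values.append(value)
            blocked.update(nots.get(category, []))
    return [value for value in values if value not in blocked]


# Past-tense structure, facts (5)-(7).
verb_isa = {"DREAM": "verb", "SING": "verb"}
past_facts = {
    "verb": ["stem of it + mEd"],  # (5)
    "DREAM": ["<dreamt>"],         # (6)
    "SING": ["<sang>"],            # (7a)
}
past_nots = {"SING": ["stem of it + mEd"]}  # (7b)

# Position of dependents, facts (8a)-(8c).
relation_isa = {
    "subject": "predependent",
    "predependent": "dependent",
    "object": "dependent",  # assumed; intermediate relations omitted
}
position_facts = {"dependent": ["after it"], "predependent": ["before it"]}  # (8a), (8b)
position_nots = {"predependent": ["after it"]}                               # (8c)

print(inherit(["DREAM"], verb_isa, past_facts, past_nots))
print(inherit(["SING"], verb_isa, past_facts, past_nots))
print(inherit(["object"], relation_isa, position_facts, position_nots))
# Fred in "Fred was chosen" is both subject and object of chosen.
print(inherit(["subject", "object"], relation_isa, position_facts, position_nots))
```

In this sketch DREAM keeps both the irregular and the regular structure, SING keeps only the irregular one, a plain object follows its head, and a word that is both subject and object is left with 'before it' alone.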
Since Fred is the subject of chosen as well as its object, fact (8c) applies to it and blocks inheritance of the normal object position.

4. Applications

This article has concentrated on the syntax of WG, but it should be emphasised that the theory has already been extended to some parts of morphology, and to large areas of semantics. On the other hand, it has not yet been applied to phonology, nor has it been applied extensively to languages other than English; so the theory is in some respects still in an early stage of development. It is not too soon, however, to anticipate some possible applications: (a) computer systems, (b) lexicography, (c) language teaching.

Regarding computer applications, any dependency-based system has the attraction of requiring less analysis than a constituency-based one, because the only units that need to be processed are individual words. Furthermore the relatively rich dependency structures allowed by WG mean that the completely surface analysis gives a lot of structural information, of the kind shown in other theories by empty categories or underlying structures. This rich dependency structure is relatively easy to identify, because the additional structure is generally predictable from a simple basic structure; and once it is built, it is very easy to map onto semantic structure. It also provides a much more suitable basis for corpus analysis aimed at establishing co-occurrence statistics than constituency structure does. The logical basis of the inheritance system makes WG grammars particularly easy to use in a logic-based computer system, and encourages a very clear conceptual distinction between the grammar and the parser. Various small WG parsers have already been written in Prolog (Fraser 1993).

As far as lexicography is concerned, WG theory is nearer than most linguistic theories to the practice of modern lexicographers.
Some general trends in lexicography can be identified:

(a) the blurring of the distinction between grammars and dictionaries;
(b) the blurring of the distinction between dictionaries and encyclopedias;
(c) the treatment of dictionaries as databases with a network structure rather than as lists of separate lemmas;
(d) the inclusion of rich valency information in terms of dependency types like 'object';
(e) the inclusion of increasingly sophisticated sociolinguistic information about words.

In all these respects lexicography has followed the same path as WG, so the latter may provide some helpful theoretical underpinnings for lexicography (Hudson 1988b).

Language-teaching is another area in which WG may prove useful, though this remains to be demonstrated. It is still a matter of debate what role, if any, explicit facts should play in language teaching, but it seems beyond doubt that at least the teacher should 'know' the facts of the object-language quite consciously, and should also know something about how language, in general, is structured. Even in the present rather early stage in the development of linguistic theory we have achieved agreement on a great many issues which teachers ought to know about (Hudson 1992). Some of WG's attractions are similar to those listed above for lexicography, but dependency analysis also provides a convenient notation for sentence structure which allows the indication, for example, of selected grammatical relations without the building of a complete sentence structure. This is not surprising considering that WG, and dependency theory in general, is a formalisation of traditional school grammar. It is worth remembering that the classic of dependency theory (Tesnière 1959) was written explicitly in order to improve the teaching of grammar in schools.

Bibliography

Fraser, N 1993 The Theory and Practice of Dependency Parsing. London University PhD Dissertation.
Hudson, R A 1984 Word Grammar. Blackwell, Oxford.
Hudson, R A 1985 The limits of subcategorization. Linguistic Analysis, 15: 233-55.
Hudson, R A 1986 Sociolinguistics and the theory of grammar. Linguistics, 24: 1053-78.
Hudson, R A 1987 Zwicky on heads. Journal of Linguistics, 23: 109-32.
Hudson, R A 1988a Coordination and grammatical relations. Journal of Linguistics, 24: 303-42.
Hudson, R A 1988b The linguistic foundations for lexical research and dictionary design. International Journal of Lexicography, 1: 287-312.
Hudson, R A 1988c Extraction and grammatical relations. Lingua, 76: 177-208.
Hudson, R A 1989a English passives, grammatical relations and default inheritance. Lingua, 79: 17-48.
Hudson, R A 1989b Gapping and grammatical relations. Journal of Linguistics, 25: 57-94.
Hudson, R A 1990a English Word Grammar. Blackwell, Oxford.
Hudson, R A 1990b Raising in syntax, semantics and cognition. UCL Working Papers in Linguistics, 2.
Hudson, R A 1992 Grammar Teaching. Blackwell, Oxford.
Taylor, J R 1989 Linguistic Categorisation: An essay in cognitive linguistics. Oxford University Press, Oxford.
Tesnière, L 1959 Eléments de Syntaxe Structurale. Klincksieck, Paris.

Postscript, August 1997

This article was published in 1994, but written in 1990. Since then I've changed my mind on a number of the things discussed here. Here's a brief list.

I've abandoned 'stipulated overriding', the idea that defaults can be overridden only by explicit rules of the form 'NOT ...'. Nobody else has ever believed this and there are much easier ways to handle the (rather rare) examples where an irregularity coexists as an alternative to the regular default (e.g. dreamed/dreamt).

I've abandoned the 'adjacency principle' in favour of a 'surface structure principle' which says that a sentence's total dependency structure must include one which is equivalent to a phrase-structure analysis - it must be free of tangling dependencies, and every word must have a parent.
I now give the sentence-root a 'virtual' parent shown by an infinitely long vertical arrow.

The new stress on 'surface structure' is reflected in my sentence diagrams. Instead of putting 'visitor' links below the words and all the others above, I draw the surface structure (including some visitor links) above the words and the rest below.

I now put less stress on the logical structure of the 'facts', which I now write in ordinary prose rather than the pseudo-formal style used here. I prefer to use network diagrams where possible, because the idea of language (and knowledge) as a network strikes me as particularly important. As I say here, networks and propositions are equivalent, but I find that humans can process a complicated collection of data more easily when it is presented in a diagram than as a list of propositions.

I've developed an interest in how syntactic structure makes some sentences harder than others to process, and believe that the structural difficulty can be measured in terms of 'dependency distance'.

I've made a tentative move towards attaching variable strengths to 'isa' links in the network. This is in my 'Inherent variability and linguistic theory', based on data from sociolinguistics.