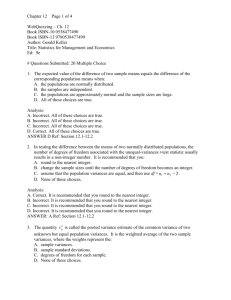

Hypothesis Tests to Compare Two Population Variances

advertisement

Hypothesis Tests to Compare Two Population Variances In some applications where there are two populations, it is of interest to compare the variances of the populations. (Just like situations we encountered where we were interested in comparing the populations’ means, or the populations’ proportions.) As a first example, to use a pooled t-test it was necessary to assume that 𝜎12 = 𝜎22 . We would like to test that assumption. In comparing two populations means, 𝜇1 − 𝜇2 , or population proportions, 𝑝1 − 𝑝2, we used differences to make our comparisons. To say that the two means were equal was equivalent to stating that 𝜇1 − 𝜇2 = 0. In our new context of comparing variances, we will test hypotheses of the form H0: 𝜎12 = 𝜎22 H0: 𝜎12 = 𝜎22 or Ha: 𝜎12 ≠ 𝜎22 H0: 𝜎12 = 𝜎22 or Ha: 𝜎12 Notice that under H0, 𝜎12 = 𝜎22 , implying that > 𝜎22 Ha: 𝜎12 < 𝜎22 𝜎2 𝜎12 ⁄ 2 = 1, or 2⁄ 2 = 1. Thus if H0 is true, we 𝜎2 𝜎1 𝑠2 𝑠12 ⁄ 2 and 2⁄ 2 to be near one. Looking at a ratio of two numbers instead of 𝑠2 𝑠1 differences is another way of making a comparison. How do we decide which way to do this? In the case of comparing means (when we knew the values of 𝜎12 𝑎𝑛𝑑 𝜎22 ), it turned out that the difference of sample means, 𝑥̅1 − 𝑥̅2 , had a well understood distribution under repeated sampling, i.e., the normal distribution. (This is not obvious to someone who has not studied the 𝑥̅ topic in depth.) The ratio 1⁄𝑥̅ does not have an easily recognized distribution, so it makes 2 sense to use the difference 𝑥̅1 − 𝑥̅2 to get a test statistic. would expect The situation is reversed in the case of variances. Imagine that we take repeated samples of sizes n1 and n2, respectively, from populations 1 and 2, and compute 𝑠12 𝑎𝑛𝑑 𝑠22 . For each pair of samples, the test statistic 𝐹= 𝑠12 ⁄𝜎12 𝑠22 ⁄𝜎22 has an F-distribution with 𝑛1 − 1 numerator and 𝑛2 − 1 denominator degrees of freedom. Under the null hypothesis H0: 𝜎12 = 𝜎22 , the F statistic reduces to 𝑠12 ⁄2 𝑠2 Hence, to test H0, we compute either this ratio or its reciprocal and look for unusual values of F. The left hand tail corresponds to unusually small values of F, while the right hand tail corresponds to unusually large values of F. 𝐹= 1 F(4,9) 0.8 0.7 0.6 0.5 0.4 0.3 0.2 0.1 0 0 1 2 3 4 5 Example 1—Suppose we wish to test H0: 𝜎12 = 𝜎22 Ha: 𝜎12 ≠ 𝜎22 We have taken samples of size 𝑛1 = 25 and 𝑛2 = 16, and computed 𝑠12 = 2 and 𝑠22 = 5. Is there evidence to reject H0 at α = .05? What is the decision rule? See the spreadsheet F-distributions.xlsx. Note: One way to do a two-tailed test is to always compute F by putting the largest sample variance in the numerator, thus assuring that F>1. Then you can look up the point that cuts α/2 in the right hand tail to perform the test; or equivalently, compute the p-value by computing the area above your F value and multiplying it by 2. Example 2—Consistency in the taste of beer is an important quality in retaining customer loyalty. The variability in the taste of a given beer can be influenced by the length of brewing, variations in ingredients, and differences in the equipment used in the brewing process. A brewery with two production lines, 1 and 2, has made a slight adjustment to line 2, hoping to reduce the variability of the taste index. Random selections of 𝑛1 = 10 and 𝑛2 = 25 eight ounce glasses of beer were selected from the two production lines and were measured using an instrument designed to index the beer taste. The results were 𝑠12 = 1.04, and 𝑠22 = 0.51. Does 2 this present sufficient evidence to indicate that the process variability is less for line 2 than line 1 at α = .05? What are the hypotheses? Note: For an alternative hypothesis, Ha: 𝜎12 > 𝜎22 , you can always compute F by 𝑠12 ⁄2 𝑠2 so that you will only reject H0 for Ha if F is sufficiently large. If Ha: 𝜎22 > 𝜎12 , then compute F by 𝐹= 𝐹= 𝑠22 ⁄2 𝑠1 and look for large F values to reject. 3