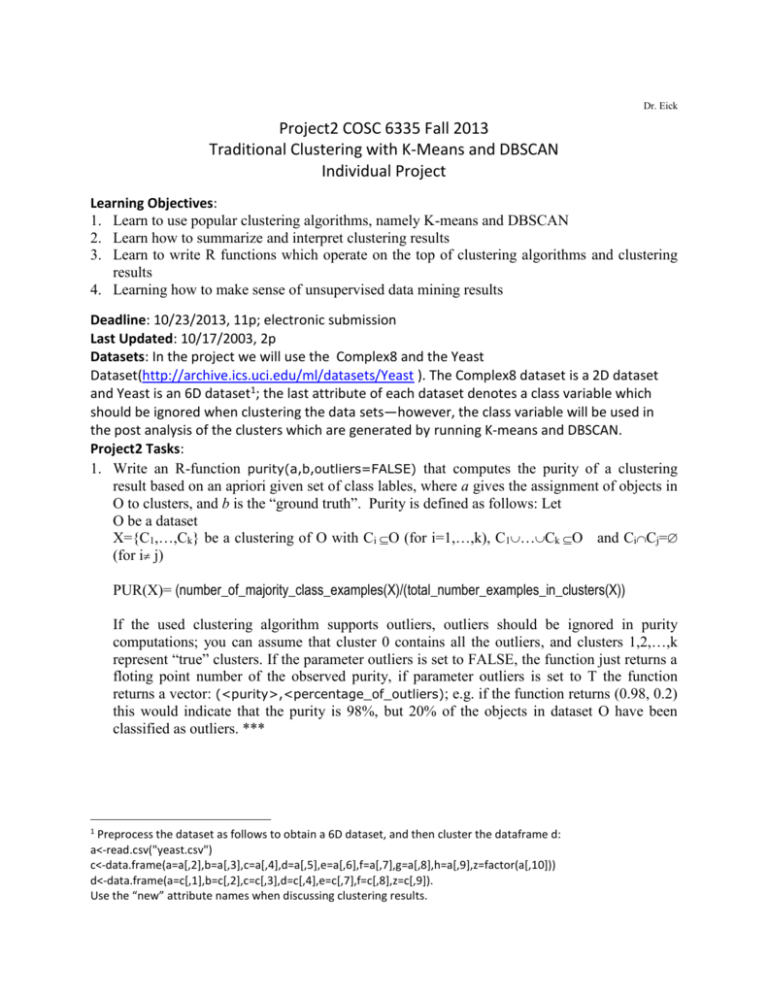

Project2 Scores

advertisement

Dr. Eick

Project2 COSC 6335 Fall 2013

Traditional Clustering with K-Means and DBSCAN

Individual Project

Learning Objectives:

1. Learn to use popular clustering algorithms, namely K-means and DBSCAN

2. Learn how to summarize and interpret clustering results

3. Learn to write R functions which operate on the top of clustering algorithms and clustering

results

4. Learning how to make sense of unsupervised data mining results

Deadline: 10/23/2013, 11p; electronic submission

Last Updated: 10/17/2003, 2p

Datasets: In the project we will use the Complex8 and the Yeast

Dataset(http://archive.ics.uci.edu/ml/datasets/Yeast ). The Complex8 dataset is a 2D dataset

and Yeast is an 6D dataset1; the last attribute of each dataset denotes a class variable which

should be ignored when clustering the data sets—however, the class variable will be used in

the post analysis of the clusters which are generated by running K-means and DBSCAN.

Project2 Tasks:

1. Write an R-function purity(a,b,outliers=FALSE) that computes the purity of a clustering

result based on an apriori given set of class lables, where a gives the assignment of objects in

O to clusters, and b is the “ground truth”. Purity is defined as follows: Let

O be a dataset

X={C1,…,Ck} be a clustering of O with CiO (for i=1,…,k), C1…CkO and CiCj=

(for i j)

PUR(X)= (number_of_majority_class_examples(X)/(total_number_examples_in_clusters(X))

If the used clustering algorithm supports outliers, outliers should be ignored in purity

computations; you can assume that cluster 0 contains all the outliers, and clusters 1,2,…,k

represent “true” clusters. If the parameter outliers is set to FALSE, the function just returns a

floting point number of the observed purity, if parameter outliers is set to T the function

returns a vector: (<purity>,<percentage_of_outliers); e.g. if the function returns (0.98, 0.2)

this would indicate that the purity is 98%, but 20% of the objects in dataset O have been

classified as outliers. ***

1

Preprocess the dataset as follows to obtain a 6D dataset, and then cluster the dataframe d:

a<-read.csv("yeast.csv")

c<-data.frame(a=a[,2],b=a[,3],c=a[,4],d=a[,5],e=a[,6],f=a[,7],g=a[,8],h=a[,9],z=factor(a[,10]))

d<-data.frame(a=c[,1],b=c[,2],c=c[,3],d=c[,4],e=c[,7],f=c[,8],z=c[,9]).

Use the “new” attribute names when discussing clustering results.

2. Run K-means for k=8 and k=11 twice for the Complex8 dataset2. Visualize and interpret the

obtained four clusterings! Also compute their purity using the function you developed in

Task1. **

3. Write an R-function agreement(X,Y) *** that computes the agreement between two

clusterings X and Y of dataset O; agreement should be computed, as follows:

Let n be the set of objects in the dataset O and X and Y are clusterings3 of O.

a. Counter:= 0

b. Iterate over all pairs of objects oj and or of the dataset O

i. Case 1: jr (“Increase counter if both objects are in same cluster

in X and Y or if both objects are in a different cluster in X and

Y”)

Increase

Counter

by

one

if

((X$cluster(j)=X$cluster(r)

and

Y$cluster(j)=Y$cluster(r))

or

(X$cluster(j)X$cluster(r)

and

Y$cluster(j)Y$cluster(r)))

ii. Case 2: j=r (“Increase counter if object j is an outlier in both

clustering X and Y or if object j belongs to a cluster in both X

and Y”)

Increase Counter if ((X$cluster(j)=0 and Y$cluster(r)=0) or (X$cluster(j)>0

and Y.$cluster(r)>0))

c. Report (Counter/((n*(n+1)/2)) as the the results of Agreement(X,Y)!

Using the agreement function you compute the agreement for each of 4 DBSCAN

clusterings of the Iris dataset which were obtained using the following

parameter settings:

c1<-dbscan(iris[3:4], 0.15, 3)

c2<-dbscan(iris[3:4], 0.2, 4)

c3<-dbscan(iris[3:4], 0.4, 6)

c4<- dbscan(iris[3:4],0.55, 17)

4. Run K-means for k=5 for the Yeast dataset. Using techniques of your own liking, try to

characterize the contents for the 5 clusters (additionally, you might compare the statistics

of the 5 clusters with those of the complete dataset, and assess the quality of each of the 5

clusters)—what do the objects in belonging to the same cluster have in common? More

sophisticated and thorough approaches4 will obtain more credit. Summarize your findings!

********

5. Run DBSCAN multiple times for the Yeast dataset and report and interpret a single

clustering that contains between 3 and 13 clusters with less than 20% outliers. **

2

It can be found at: http://www2.cs.uh.edu/~ml_kdd/Complex&Diamond/Complex8.data ; it has been visualized

at: http://www2.cs.uh.edu/~ml_kdd/Complex&Diamond/2DData.htm

3

You can assume cluster 0 contains the outliers, if there are any.

4

Some approaches will be discussed in the course lectures in early October. In general, you will need to come up

with your own approaches for Task4 of this project and you have a lot of freedom in choosing methods to

accomplish the goals of Task4; I expect that solutions for Task4 will differ a lot between students; the key points is

to produce something reasonable which characterizes the content of the 5 clusters, and (optionally) assesses the

quality of the obtained 5 clusters.

6. Write a search procedure in R that searches for the best clustering by exploring different

settings for the (MinPoints, epsilon) parameters of DBSCAN. The procedure maximizes

purity of the obtained clustering, subject to the following constraints:

i. There should be between 2 and 20 clusters

ii. The number of outliers should be 10% or less.

The procedure returns the “best” DBSCAN clustering found and the accomplished purity as

its result; please limit the number of tested (MinPoints, epsilon)-pairs to 1000 in your

implementation! *******

Explain how your automated parameter selection method works and demonstrate your

automated procedure using an example!

7. Apply the procedure you developed in Task6 to the Yeast and Complex8 datasets and report

the best clustering you found. Are you happy with the obtained solution?

If you did not succeed in writing the function in Task6, manually seek for the best clustering

and report those two clusterings. *

Deliverables for Project2:

A. A Report5 which contains all deliverables for the six tasks of Project2.

B. An Appendix which describes how to run the procedure that you developed for Task 6.

C. An Appendix which contains the software you developed as part of this project; in particular

the R-functions you wrote for tasks 1, 3, 6 should be included.

D. Delivery of Project2 Reports: send an e-mail to ceick@aol.com using the subject

Project2_<your lastname>_Report and call the attached file <last name>_P2.docx (or <last

name>_P2_.pdf )

5

Single-spaced; please use an 11-point or 12-point font!

COSC 6335 Project2 2013 Scores

1

5

4

1

3

4

5

5

5

5

5

5

5

2

5

5

2

5

5

2

4

5

5

2

5

3

4

--5

5

5

5

2

5

2

4

3

3

3

4

4

4

4

4

4

4

3

3

3

3

3

4

3

3

3

3

4

3

4

3

3

3

3

3

3

4

3

4

3

5

5

5

4

1

5

5

5

5

2

5

1

4

5

5

5

5

4

3

5

5

2

4

5

5

5

4

5

5

5

5

5

5

4

7

7

3

5

6

7

9+1

9

6

10

8

6

3

1

9

4

5

6?

7?

5

--3

4

8+1

6?

9

0

7

5

10+2

7

6?

10+2

5

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2

2+1

2

2

2

2

2+1

6

7

8

2+1?

34

5

1

27

0

0

13

1

0

18

4

0

21

9

2

34

10

2

38

8

2

35

5

2

29

8

2

33

9

1

34

8

2

27

2

0

16

5

0

21

4

1

29

0

0

16

7

2

30

1?

1

22

3?

1

21

4

2

25

8

2

25

4

1

21

0

0

15

6

2

33

3?

1

23

6

2

31

1

--10

7

2

32

7

2+1?

30

8

2+1

38

7

1

31

3?

1

22

10+2 2+1 38+5

Early

St_id

34

28.5

13

18

22

35.5

38

37

29

33

35.5

28.5

16

22

30.5

16

30

22

21

26.5

25

22

15

34.5

23

31

10

33.5

30

40

32.5

22

?

499

956

975

171

157

370

065

945

756

877

387

049

395

875

445

459

213

815

797

696

9748

967

281

279

506

553

505

957

681

941

378

8748

Remark: Scores are still preliminary, and subject to verification!

Project2 Statistics:

Average (before early submission adjustments): 26.1

Adjustment Early Submission (5% bonus, rounded to the next halfpoint): Zhai, Wu,

Tadakaluru, Sant, Man, Latif, Jana, Kidd, Gopiseeti, Dou, Dhawad, Cao, Bector, Barman

(*:=early submission)

?:= score not finalized and might drop; need to do some checking…