Linear Regression Checklist

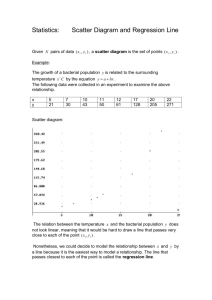

“Linear Regression. Part I OLS.”

- Dataset can be found on the Poli 496 Course Website, and as helping1.sav at http://wps.ablongman.com/ under “jump to” datasets.

Extended Linear Regression Procedure:
- In the first lecture we produced the results for a simple linear regression (by hand). Today we will do the same for multiple independent variables, hence the term multiple regression analysis.

Basics:
- Opening the file: if it is an Excel file, indicate “read variable names”, then save under a new name.

(1). Getting your own data: Collect and input interval-level cross-sectional data, with cases in rows and variable values in columns.
- Exceptions: If the DV is dichotomous, use logistic regression. If the data is longitudinal, use time series regression.
- Data Editor: spreadsheet: rows = cases; columns = variables (recall names).
- Look at the dataset we are using: n=81, 20 variables, on how people help each other.
- The model of interest: we want to know how people’s feelings towards other people affect whether they help them.
- This is the dataset provided with the text.
- Variable Definitions (presumably acquired through a survey of 81 persons facing people in need):
  zhelp: Z-scores (normalized) of the amount of time spent helping a friend, on a -3 to +3 scale.
  Sympathy: Sympathy felt by the helper in response to a friend’s need, on a little (1) to much (7) scale.
  Anger: Anger felt by the helper in response to a friend’s need; same 7-point scale as above.
  Efficacy: Self-efficacy [ability] of the helper in relation to a friend’s need; same 7-point scale as above.
  Dsex: Gender: dummy variable: 1 = female and 0 = male.

(2). Measuring Skewness and Kurtosis:
- The independent variables should have approximately normal distributions, otherwise the findings will be biased (not relevant for dichotomous dummy variables).
- Skewness measures the extent to which the left or right tail of the bell curve is drawn out.
- Kurtosis measures the extent to which the bell curve is peaked or flattened.
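For readers who want to see what the two measures compute, here is a minimal Python sketch using the plain moment-based definitions. The function names and the two sets of scores are hypothetical and are not taken from helping1.sav. SPSS applies small-sample corrections, so its output may differ slightly from these values.

```python
def central_moment(values, order):
    """Return the central moment of the given order (divides by n)."""
    n = len(values)
    mean = sum(values) / n
    return sum((v - mean) ** order for v in values) / n


def skewness(values):
    """Third standardized moment: 0 for a symmetric distribution,
    positive when the right tail is drawn out, negative for the left."""
    return central_moment(values, 3) / central_moment(values, 2) ** 1.5


def excess_kurtosis(values):
    """Fourth standardized moment minus 3, so a normal curve scores 0:
    positive means a sharper peak and heavier tails, negative means flatter."""
    return central_moment(values, 4) / central_moment(values, 2) ** 2 - 3.0


# Hypothetical 7-point scale responses (assumed data, for illustration only).
symmetric_scores = [1, 2, 3, 4, 5, 6, 7]
right_skewed_scores = [1, 1, 1, 2, 2, 3, 7]

for name, scores in [("symmetric", symmetric_scores),
                     ("right-skewed", right_skewed_scores)]:
    print(name, round(skewness(scores), 3), round(excess_kurtosis(scores), 3))
```

The symmetric scores give a skewness of zero and a negative excess kurtosis (a flat distribution), while the second set has a positive skewness because its right tail is drawn out.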
- Procedure: >> Analyze >> Descriptive Statistics >> Descriptives: INPUT anger, sympathy, efficacy.
- Options: tick Kurtosis and Skewness >> Continue >> Paste [OK] >> Run.
- Saving Syntax: I need all your assignments and your final paper to be accompanied by the pasted syntax: File >> Save As >> Name. The saved file can be opened in Word.
- Save the pasted syntax in a text file for later collation (this will permit me to see if you followed the correct procedures).
- Result Indicator: Skewness and Kurtosis are normal if they are within +/-1, and tolerable if within +/-2 (beyond which the distribution of the independent variable will bias the results of the regression).
- Results: Efficacy and Sympathy are within required parameters; Anger is within tolerable parameters.
- Solution: Increase sample size to achieve an approximately normal distribution.

(2). Run Pearson’s r between each pair of IVs to check for multicollinearity. If r exceeds 0.75 (in absolute value), the two IVs are too closely related and measure the same thing. Including both will bias the results.
- Procedure: >> Analyze >> Correlate >> Bivariate: INPUT anger, sympathy, efficacy, dsex (Pearson): Paste [OK] >> Run.
- Results: anger, efficacy, sympathy and dsex are all uncorrelated.

Interpretation:
- Each IV is correlated with each of the others: the table provides the Pearson correlation (r), the significance of the correlation (t-test), and the n, or number of observations.
- A high correlation means that the two variables explain essentially the same thing and are either two different aspects of the same thing, or are themselves caused by an antecedent variable.
- The significance test, or t-test, gives the probability that a correlation as strong as the one you are examining is the product of luck and not a genuine correlation. Thus the lower its value the better.
- Significance is commonly reported at three levels: the 10% level, the 5% level (most common), and the 1% level (the best).
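The pairwise check described above can be sketched in Python as follows. This is an illustration under stated assumptions: the three columns are invented toy data (not the helping1.sav values), and the helper names are hypothetical. The t statistic uses the standard formula for testing a correlation against zero with n - 2 degrees of freedom.

```python
import math
from itertools import combinations


def pearson_r(x, y):
    """Pearson correlation between two equally long lists of numbers."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def correlation_t(r, n):
    """t statistic for testing r against zero (n - 2 degrees of freedom)."""
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical toy IVs (assumed data, for illustration only).
ivs = {
    "anger": [1, 2, 3, 4, 5, 6],
    "sympathy": [2, 1, 4, 3, 6, 5],
    "efficacy": [3, 5, 2, 6, 1, 4],
}

THRESHOLD = 0.75  # the cut-off used in this checklist
flagged = []
for (name_a, a), (name_b, b) in combinations(ivs.items(), 2):
    r = pearson_r(a, b)
    t = correlation_t(r, len(a))
    print(f"{name_a} vs {name_b}: r = {r:.3f}, t = {t:.3f}")
    if abs(r) > THRESHOLD:
        flagged.append((name_a, name_b))

print("Pairs above threshold:", flagged)
```

In this toy example only the anger and sympathy pair exceeds the threshold, so one of those two would be a candidate for exclusion or for combination with the other.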
- If any pair of IVs correlates at greater than r = 0.75, then one of the two IVs should be excluded (or the two combined through factor analysis), or the regression results will be biased.
- Solution: (i) exclude one IV, or (ii) run factor analysis to combine the related IVs.

(3). Scatterplot individual IVs with the DV to assure linearity. If an IV does not have a linear association with the DV, then the IV must be transformed (Solution). Otherwise the regression results will be biased. Do not transform DVs into linear representations (this is sometimes done, but it requires re-plotting all the IVs). Proceed through each individual IV (not relevant for dichotomous (dummy) IVs).
- Procedure: >> Graph >> Legacy Dialogs >> Scatter/Dot >> Simple Scatter >> Define >> Y Axis (put the DV: zhelp) >> X Axis (put the IV: each of anger, efficacy, and sympathy in different scatterplots) >> Paste syntax >> Run.
- Examine the scatterplot for each of the IVs to ensure they are linear.
- Results:
  Sympathy: zhelp and sympathy: diffuse but linear.
  Anger: zhelp and anger: skewed to the left (low values of the IV), but linear.
  Efficacy: zhelp and efficacy: slight skewness to the right, but linear.
- Assessment: there is no transformation required in these data.
- Solution: if the IV-DV line is not linear, then the IV must be transformed.

- Let us examine a non-linear relationship that is in need of transformation:
- Get the anxiety.sav dataset from the Poli 644 website. N=74.
- Variable Definitions:
  Exam (DV): The score on a 100-point exam.
  Anxiety (IV): A measure of pre-exam anxiety, on a low (1) to high (10) scale.
- The first step is to diagnose the linearity of the relationship.
- Procedure: >> Graph >> Legacy Dialogs >> Scatter/Dot >> Simple Scatter >> Define >> Y Axis (put the DV: exam) >> X Axis (put the IV: anxiety) >> Paste syntax >> Run.
- Examine the scatterplot to check whether the relationship is linear.
- Results: evident “inverted U” curvilinear relationship.
- Theory: Some anxiety is beneficial, but too much hampers performance.
- Solution: the IV anxiety must be transformed to render the relationship linear.
- The next step is to determine which mathematical transformation fits best.

Conversions of Data for Linearity:
(a). If the data is an “F curve,” take the log of the IV on the X-axis (in SPSS Compute: LN(X) or LG10(X)).
(b). If the data is an “L curve,” transform the IV on the X-axis into its reciprocal (divide 1 by it).
(c). If the data is a “bell curve,” use the quadratic (multiply the IV by itself) or the cubic (raise the IV to the third power).
(d). If the data is a “soft Z curve,” take the log of both the DV (Y-axis) and the IV (X-axis).
- This procedure is very time consuming and requires extensive trial and error, and the solutions are rarely perfect.
- Note: Logs will not work with 0 or negative values.
- Every transformed IV must be plotted against the DV in a scatterplot, as above, to confirm the success of the linear transformation.
- Assessment: for the quadratic or cubic, transform the IV by raising it to that power.
- The next step is to quadratically transform anxiety.
- Procedure: >> Transform >> Compute Variable >> Target Variable >> [IV new name] INPUT (qanxiety) >> Functions INPUT >> anxiety*anxiety >> OK.
- Procedure: >> Graph >> Legacy Dialogs >> Scatter/Dot >> Simple Scatter >> Define >> Y Axis (put the DV: exam) >> X Axis (put the IV: qanxiety) >> Paste syntax >> Run.
- Assessment: the transformation had little impact in this case.
- Return to the original dataset.

(4). We must identify and potentially exclude data points that lie more than two standard deviations from the mean (termed outliers). Failing to do so will undermine the generalizability of our regression results.
- Normally the variables would be normalized, but looking for an approximate bell curve is sufficient.
- EG: We would exclude Superman if we wanted to make generalizations about human strength.
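The two-standard-deviation rule for outliers stated above can be sketched numerically in Python. This is only an illustration: the function name and the strength scores (with one "Superman" value) are hypothetical, and the sketch uses the sample standard deviation from Python's `statistics` module rather than any SPSS routine.

```python
import statistics


def flag_outliers(values, threshold=2.0):
    """Return the values lying more than `threshold` sample standard
    deviations away from the mean."""
    mean = statistics.mean(values)
    sd = statistics.stdev(values)
    return [v for v in values if abs(v - mean) / sd > threshold]


# Hypothetical strength scores: ten ordinary people and one Superman.
strength_scores = [50, 52, 48, 51, 49, 53, 47, 50, 52, 48, 500]

outliers = flag_outliers(strength_scores)
print("Outliers:", outliers)

# Compare the mean with and without the flagged points to see how much
# they pull the summary of the sample.
kept = [v for v in strength_scores if v not in outliers]
print("Mean with outliers:", round(statistics.mean(strength_scores), 2))
print("Mean without outliers:", round(statistics.mean(kept), 2))
```

A single extreme value both shifts the mean and inflates the standard deviation, which is why a visual check of the histogram is still worthwhile alongside a numeric rule like this one.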
- Procedure: >> Graphs >> Legacy Dialogs >> Histogram >> INPUT: Display Normal Curve >> INPUT: zhelp >> Paste syntax >> Run.
- Repeat for each variable: zhelp, sympathy, anger, and efficacy.
- Interpretation:
  zhelp: Some data points at 3 SD (which is expected, as help is “normalized”).
  Sympathy: Seems approximately a normal distribution.
  Anger: Clearly a non-normal distribution, but Kurtosis and Skewness were acceptable. No major outliers.
  Efficacy: Has a nearly non-normal distribution, but tolerable, and without major outliers.
- Procedure: If there are outliers, they must be removed temporarily to determine if their exclusion would significantly affect the regression findings.
- Solution: Increase the sample size to decrease the proportion of extreme data points.

(5). Dummy Variables:
- Dummy variables are included in the regression (equation) when there is a dichotomous independent variable such as sex, in which there are two values: 1 for female and 0 for male.
- Procedure: create a dummy variable as you would any variable.

(6). After linear transformations, re-run Pearson’s r between each pair of IVs to check for multicollinearity. See #2 above.

(7). Run the Multiple Linear Regression:
- Run the model, examining the strength of the relationship (R-Square), and the slope/standard error for the t-test of significance for each of the IVs.
- Procedure: Analyze >> Regression >> Linear >> INPUT: Dependent: zhelp >> INPUT: Independent: anger, efficacy, sympathy, dsex >> INPUT: Save: Predicted: unstandardized, standardized >> Save: Residuals: unstandardized, Studentized >> Continue >> INPUT: Method: Enter >> INPUT: Statistics: Durbin-Watson >> Continue >> Paste [OK] >> Run.
- Interpretation of the Results: Paste Output.
R: 0.641. This is the multiple correlation coefficient: the correlation between the observed and the predicted values of the DV.
R-Square: 0.411. This identifies the proportion of variance in the DV accounted for by the IVs. Thus, 41% of the variance in zhelp values is explained by the four IVs.
Adjusted R-Square: 0.381 (moderate value). Adjusted R-Square compensates for the tendency of chance correlations in the sample to inflate R-Square, and therefore provides a more accurate estimate for the population.
  Strong value: 0.6 to 0.8
  Moderate value: 0.3 to 0.5
  Weak value: 0.1 to 0.2

ANOVA: This provides a general estimate of the significance of the model’s findings.
df: degrees of freedom. For the regression, this is the number of independent variables.
- For the residual, it is n (the number of cases) minus the number of independent variables, minus 1.
- With fewer cases and more variables, the degrees of freedom fall and the regression estimates become less reliable.
F-statistic: The mean square regression divided by the mean square residual. The F-test is commonly used to test differences in means; here it tests whether the IVs jointly explain a significant share of the variance.
Significance of F-statistic: Likelihood that the finding could occur by chance (0.000 here, meaning p < 0.001).

Co-Efficients:
B (Constant) = -4.272 (standard error and significance test not relevant). This fits into the equation that facilitates prediction.
Anger:
  Unstandardized B Co-Efficient: 0.300
  Standard Error: 0.081
  - The standard error is the expected standard deviation of the estimated coefficient across repeated samples.
  - Confidence intervals:
  - About 68% of a normal distribution falls within the values of B +/- 1*(S.E.).
  - About 95% of a normal distribution falls within the values of B +/- 2*(S.E.).
  - EG: For Anger, the 68% interval is 0.3 +/- 0.081, or 0.219 to 0.381; the 95% interval is 0.138 to 0.462.
  t-Test: 3.681 (B divided by S.E.) KEY MEASURE
  Significance: 0.001
- Significance Tests: Significance tests help us determine whether the statistical correlations and relationships we observe in our samples are the product of chance or whether they are genuine and can be used to generalize to the larger population.
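The coefficient arithmetic reported above for Anger can be reproduced with a few lines of Python. This sketch uses only the rounded B and standard error printed in this handout, so the t value it computes (about 3.70) differs slightly from the 3.681 in the output, presumably because SPSS works from unrounded values. The function name is hypothetical.

```python
def interval(b, se, multiplier):
    """Return (lower, upper) bounds of B +/- multiplier * S.E."""
    return (b - multiplier * se, b + multiplier * se)


# Rounded values for Anger as reported in the coefficients output.
b_anger = 0.300
se_anger = 0.081

# t is the coefficient divided by its standard error.
t_anger = b_anger / se_anger

one_se = interval(b_anger, se_anger, 1)  # roughly the 68% interval
two_se = interval(b_anger, se_anger, 2)  # roughly the 95% interval

print("t =", round(t_anger, 2))
print("B +/- 1 S.E.:", tuple(round(v, 3) for v in one_se))
print("B +/- 2 S.E.:", tuple(round(v, 3) for v in two_se))
```

Because the interval of B +/- 2 S.E. does not include zero, the coefficient is distinguishable from zero at roughly the 5% level, which matches the t value being well above 2.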
- Specifically: Statistical significance tests tell us the probability that the relationship we observe in our sample could occur if there were no such relationship in the larger population or universe of cases.
- Strength of the significance test (0.1 to 0.05 is usually the minimum significance accepted in the scientific community):
  t-test sig < 0.01 = less than a 1% chance of finding a false positive (false association).
  t-test sig < 0.05 = less than a 5% chance of finding a false positive (false association).
  t-test sig < 0.1 = less than a 10% chance of finding a false positive (false association).
- Note: we can never be certain that the association isn’t just wild luck, but we can be pretty sure.
- Solution: If the result is not significant, the sample size should be increased.
- There are dozens of different significance tests.
- Proceed to determining the value of t:
- The t-distribution is an approximately normal distribution.
- Use a one-tailed test in the hypothesis testing.
- Step 1: determine the degrees of freedom: n (# of cases) minus the number of IVs, minus 1.
- Step 2: determine in which column (significance level) the t-statistic value is greater than the chart number.
- General metric: a t of 2.00 is usually good enough.

BETA (Anger): 0.328
- Beta = Standardized (z-score) regression coefficients.
- Beta is a measure of the relative contribution of the IV when the other IVs are controlled for. It is sometimes loosely called the “partial r,” although the partial correlation is a distinct statistic. It is the most useful measure for comparing the relative impact of the IVs on the outcome in the DV.

Sympathy: Unstandardized B Co-Efficient: 0.499; Standard Error: 0.098; t-Test: 5.082; Significance: 0.000; BETA: 0.455
Efficacy: Unstandardized B Co-Efficient: 0.435; Standard Error: 0.129; t-Test: 3.359; Significance: 0.001; BETA: 0.300
Dsex: Unstandardized B Co-Efficient: -0.455 (negative means inverse relationship); Standard Error: 0.224; t-Test: -2.030; Significance: 0.046; BETA: -0.180

- Regression Estimate: Overall the four IVs explain a moderate amount of variance in the DV.
Sympathy explains the most variance, followed by anger, efficacy, and then dsex.

(8). Identifying significant variables:
- Exclude insignificant IVs and re-run the model.

(9). Method of Testing Linearity Assumption: since the sum of the residuals (the unexplained variance) should equal zero, plotting the residuals against the predicted values should show no pattern.
- Procedure: >> Graph >> Legacy Dialogs >> Scatter/Dot >> Simple Scatter >> Define >> Y Axis (pre_1) >> X Axis (res_1) >> Paste syntax >> Run.
- Result: Output shows random distribution with no patterns, which is good: linear association.
- Solution: If a pattern does appear, the solution is weighted least squares.

(10). Heteroskedasticity:
- Heteroskedasticity occurs when there is a pattern in the residuals, specifically when the standard deviations of the residuals are uneven. Homoskedasticity occurs when there is no pattern among the residuals.
- Method of Testing Equality of Variance Assumption: The error term (residuals) has a zero mean and is normally distributed, and so plotting the Studentized residuals against the standardized predicted values should show no pattern. The Studentized residuals should also be plotted against each independent variable.
- Procedure: >> Graph >> Legacy Dialogs >> Scatter/Dot >> Simple Scatter >> Define >> Y Axis (sdr_1) >> X Axis (zpr_1) >> Paste syntax >> Run.
- Repeat the same procedure with Y Axis (sdr_1) and the X Axis set in turn to anger, sympathy, efficacy, and dsex.
- Result: Output shows random distribution with no patterns, which is good: the error term has a zero mean.
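The residual checks in steps (9) and (10) plot residuals against predicted values. As a minimal sketch of where those two series come from, the Python below fits a one-IV least-squares line to invented toy data (the variable and function names are hypothetical) and confirms that the residuals sum to zero and do not covary with the predicted values. It does not replace the visual inspection of the scatterplots, which is what reveals curved or fan-shaped patterns.

```python
def fit_line(x, y):
    """Ordinary least squares for one IV: returns (intercept, slope)."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    slope = (sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
             / sum((a - mean_x) ** 2 for a in x))
    intercept = mean_y - slope * mean_x
    return intercept, slope


# Hypothetical toy data (assumed values, for illustration only).
iv = [1, 2, 3, 4, 5, 6, 7]
dv = [1.2, 1.9, 3.2, 3.8, 5.1, 6.1, 6.8]

intercept, slope = fit_line(iv, dv)

# These are the analogues of the saved SPSS variables pre_1 and res_1.
predicted = [intercept + slope * a for a in iv]
residuals = [b - p for b, p in zip(dv, predicted)]

# With an intercept in the model, OLS residuals sum to zero and have
# zero covariance with the predicted values.
residual_sum = sum(residuals)
mean_predicted = sum(predicted) / len(predicted)
covariance = sum(r * (p - mean_predicted)
                 for r, p in zip(residuals, predicted)) / len(residuals)

print("Sum of residuals:", round(residual_sum, 10))
print("Covariance of residuals and predicted:", round(covariance, 10))
for p, r in zip(predicted, residuals):
    print(round(p, 3), round(r, 3))  # the pairs one would plot
```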
- Solution: The solution to achieve homoskedasticity is weighted least squares, or to add whatever omitted variable caused the pattern.

(11). Autocorrelation (First Order Serial Correlation):
- Method of Testing the Independence of Error Assumption: This is a test of serial correlation and indicates whether there is a sequential relationship between residuals. Serial correlation violates the assumption of the independence of the residuals.
- Procedure: The Durbin-Watson statistic was provided by the regression results = 2.133.
- Durbin-Watson statistics vary between 0 and 4.
- Consult Table B-4: significance of 0.05.
- Step 1: Determine the number of cases: n = 74.
- Step 2: Determine the number of independent variables: IV = 3 (= k).
- Step 3: Locate 2.133 in relation to (dL=1.53) and (dU=1.70).
- Result: 2.133 is greater than dU of 1.70 (and less than 4 - dU = 2.30), so there is no first order autocorrelation.
- General Indicator: a Durbin-Watson close to 2 usually indicates no autocorrelation.
- Solution: The solution is weighted least squares.

To calculate outcome: Outcome = constant + IV1*(B Coefficient) + IV2*(B Coefficient) + IV3*(B Coefficient).
- Slope Interpretation:
- Example: the slope is 0.77.
- Therefore, for every one-unit increase in the independent variable, there is a 0.77 unit increase in the dependent variable.

Data Assumptions of Linear Regression: To get BLUE = “Best Linear Unbiased Estimator.”
(1). Interval level data (not nominal/categorical, or ordinal).[1]
     Solution: use scatterplots.
(2). Data has a normal distribution (as per the CLT).
     Solution: seek larger n.
(3). There are no omitted variables (creates omitted variable bias).
     Solution: include the omitted variables.
(4). The data is linearly distributed: It does not deviate or curve or skew in any direction. It does not have significant bulges. It does not significantly increase its dispersal.
     Solution: transform the data.
(5). No multicollinearity: No IV is a perfect linear function of other explanatory variables (in practice, no pair of IVs correlates above r=0.75).
     Solution: exclusion of the replicated variable.
(6). There is no (serial) autocorrelation: The error terms are uncorrelated with each other (correlation implies there is omitted variable bias). The individual residuals do not covary with any IV (covariation implies there is an omitted variable).
     Solution: Weighted Least Squares.
(7). The error term is homoskedastic (does not suffer from heteroskedasticity). The error term has constant variance (if not, the significance of the findings drops).
     Solution: Weighted Least Squares.
(8). The error term has a zero mean and is normally distributed: It does not deviate or curve or skew in any direction (a deviation implies omitted variable bias).
     Solution: Weighted Least Squares.

[1] Exception (thanks to Prof Perella): (1) Categorical data can be converted to dummy variables, which are compatible with regression; (2) categorical data can be combined into an index/scale, which qualifies as continuous and is thus appropriate for regression.
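As a closing illustration, the prediction equation given earlier in this checklist (Outcome = constant + each IV multiplied by its B coefficient) can be applied to the coefficients reported in step (7). The Python sketch below uses those reported values; the respondent's scores and the function name are hypothetical and chosen only to show the arithmetic.

```python
# Unstandardized B coefficients and constant as reported in the output.
CONSTANT = -4.272
coefficients = {
    "anger": 0.300,
    "sympathy": 0.499,
    "efficacy": 0.435,
    "dsex": -0.455,
}


def predict_zhelp(respondent):
    """Outcome = constant + sum of (IV value * B coefficient)."""
    return CONSTANT + sum(coefficients[name] * respondent[name]
                          for name in coefficients)


# Hypothetical respondent: a female helper (dsex = 1) with scores on the
# 7-point scales (assumed values, for illustration only).
respondent = {"anger": 2, "sympathy": 5, "efficacy": 4, "dsex": 1}

predicted = predict_zhelp(respondent)
print("Predicted zhelp:", round(predicted, 3))
```

For this respondent the terms are 0.600 + 2.495 + 1.740 - 0.455 = 4.380, which added to the constant of -4.272 gives a predicted zhelp of about 0.108, slightly above the average amount of helping.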