

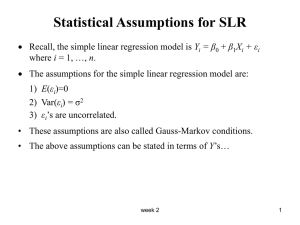

REGRESSION ANALYSIS OF VARIANCE

Consider the usual regression model

$$Y_i = \beta_0 + \beta_1 x_{i1} + \beta_2 x_{i2} + \dots + \beta_k x_{ik} + \varepsilon_i \quad \text{for } i = 1, 2, 3, \dots, n$$

with the usual assumptions on the $\varepsilon_1, \varepsilon_2, \dots, \varepsilon_n$:

- These are unobserved random quantities.
- These are statistically independent of each other.
- $E\,\varepsilon_i = 0$ and $\operatorname{Var}\varepsilon_i = \sigma^2$ for each $i$.
- These are normally distributed.

The final assumption, regarding normal distributions, is needed to get exact tests and confidence intervals.

The matrix form of the model is

$$Y = X\beta + \varepsilon$$

in which the design matrix $X$ is $n$-by-$p$. We use $p = k + 1$.

Let $P_X$ be the projection matrix into $\mathcal{L}_{\mathrm{col}}(X)$, the space spanned by the columns of $X$. If $X$ has full rank $p$, then $\mathcal{L}_{\mathrm{col}}(X)$ is a space of dimension $p$, and we can certainly write

$$P_X = X (X'X)^{-1} X'$$

Certainly $P_X$ is symmetric. You can check that $P_X$ is a projection matrix, as $P_X = P_X P_X$.

If $X$ does not have full rank, then $P_X$ is still uniquely defined, but it cannot be computed in the form just given. (The computation of $P_X$ in that case will not be discussed here.) The remainder of this document will use $P_X = X (X'X)^{-1} X'$, along with the assumption that $\mathcal{L}_{\mathrm{col}}(X)$ has dimension $p$.

Let the fitted vector be

$$\hat{Y} = P_X Y = X (X'X)^{-1} X' Y$$

This vector of course lies in the space $\mathcal{L}_{\mathrm{col}}(X)$. Since $\mathbf{1}$ is a column of $X$, it follows that $P_X \mathbf{1} = \mathbf{1}$. Equivalently, $\mathbf{1}' P_X = \mathbf{1}'$. Thus we can show that

$$\mathbf{1}' \hat{Y} = \mathbf{1}' P_X Y = \mathbf{1}' Y$$

In words, the statement above says “sum of the observed = sum of the fitted.”

The residual $e$ is defined as

$$e = Y - \hat{Y} = Y - P_X Y = (I - P_X) Y$$

This lies in the $n - p$ dimensional space which is orthogonal to $\mathcal{L}_{\mathrm{col}}(X)$. In words, “residual = observed minus fitted.”

In fact, the vectors $Y$, $\hat{Y}$, and $e$ form the corners of a right triangle. This gives the Pythagorean statement

$$Y'Y = \hat{Y}'\hat{Y} + e'e$$

The vectors lie in spaces of dimensions $n$, $p$, and $n - p$, respectively. This right triangle result is very easy to verify using $P_X = X (X'X)^{-1} X'$.
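
The projection facts above can also be checked numerically. The following is a minimal sketch, assuming NumPy is available; the sample size, the coefficient values, and the simulated data are made up for illustration and are not from the original notes. It builds $P_X$ for a full-rank design matrix containing a column of ones and confirms symmetry, idempotence, the "sum of observed = sum of fitted" identity, and the Pythagorean statement. The trace check relies on the standard fact that the trace of a projection matrix equals the dimension of the space it projects onto.

```python
import numpy as np

# Hypothetical simulated data: n observations, k predictors plus an intercept
rng = np.random.default_rng(0)
n, k = 12, 3
p = k + 1

# Design matrix: a column of ones followed by k simulated predictors
X = np.column_stack([np.ones(n), rng.normal(size=(n, k))])
beta = np.array([2.0, 1.0, -0.5, 0.0])  # made-up coefficients
Y = X @ beta + rng.normal(size=n)

# P_X = X (X'X)^{-1} X'; solve() avoids forming the inverse explicitly
P = X @ np.linalg.solve(X.T @ X, X.T)

Y_hat = P @ Y      # fitted vector
e = Y - Y_hat      # residual vector
ones = np.ones(n)

checks = {
    "symmetric": np.allclose(P, P.T),
    "idempotent": np.allclose(P @ P, P),
    "trace_equals_p": np.isclose(np.trace(P), p),
    "P1_equals_1": np.allclose(P @ ones, ones),
    "sum_fitted_equals_sum_observed": np.isclose(Y_hat.sum(), Y.sum()),
    "residual_orthogonal_to_columns": np.allclose(X.T @ e, 0.0),
    "pythagoras": np.isclose(Y @ Y, Y_hat @ Y_hat + e @ e),
}
for name, ok in checks.items():
    print(f"{name}: {ok}")
```

Every check should print `True` up to floating-point tolerance. Forming the $n$-by-$n$ matrix $P_X$ explicitly is convenient for a small illustration like this one; for large $n$ one would normally compute the fitted values with a least-squares routine instead.
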
The regression analysis of variance table, however, is based on a somewhat different right triangle.

The regression exercise is directed toward understanding the relationship between $Y$ and the $k$ independent variables. The design matrix $X$ usually contains a column $\mathbf{1}$, corresponding to the intercept $\beta_0$, and there is no inferential interest in $\beta_0$. Accordingly, we remove the means by using the “mean sweeper” matrix $M$, where

$$M = I - \frac{1}{n}\mathbf{1}\mathbf{1}' = \begin{pmatrix} 1 - \frac{1}{n} & -\frac{1}{n} & \cdots & -\frac{1}{n} \\ -\frac{1}{n} & 1 - \frac{1}{n} & \cdots & -\frac{1}{n} \\ \vdots & \vdots & \ddots & \vdots \\ -\frac{1}{n} & -\frac{1}{n} & \cdots & 1 - \frac{1}{n} \end{pmatrix}$$

By direct calculation, you can see that

$$MY = \begin{pmatrix} Y_1 - \bar{Y} \\ Y_2 - \bar{Y} \\ Y_3 - \bar{Y} \\ \vdots \\ Y_n - \bar{Y} \end{pmatrix}$$

The matrix $M$ is also a projection, and it’s easily confirmed that $MM = M$. Also, $M' = M$. Certainly $M\mathbf{1} = 0$.

You can also see that

$$(MY)'(MY) = Y'MY = \sum_{i=1}^{n} \left( Y_i - \bar{Y} \right)^2 = S_{YY}$$

Because the matrix $X$ contains the column $\mathbf{1}$, it follows that $P_X \mathbf{1} = \mathbf{1}$ and $(I - P_X) M = M (I - P_X) = (I - P_X)$. Note that $P_X M = M P_X$.

Now apply the projection $P_X$ to $MY$:

$$MY = P_X (MY) + (I - P_X)(MY)$$

However, $(I - P_X)(MY) = [(I - P_X) M]\,Y = (I - P_X) Y = e$, so we write this as

$$MY = P_X (MY) + e$$

A Pythagorean result applies here as well:

$$(MY)'(MY) = [P_X (MY)]'\,[P_X (MY)] + e'e$$

or

$$(MY)'(MY) = (MY)' P_X P_X MY + e'e = (MY)' P_X MY + e'e$$

It is this final form that is the basis of the regression analysis of variance.

Observe that $MY$ lies in a space of dimension $n - 1$; as a consequence $P_X MY$ lies in a space of dimension $p - 1 = k$. You might also note that $P_X MY = M P_X Y = M\hat{Y}$.

These results are usually laid out in an analysis of variance table in this format:

| Source of Variation | Degrees of Freedom | Sum Squares | Mean Squares | F |
|---|---|---|---|---|
| Regression | $k$ | $SS_{regr} = (M\hat{Y})'(M\hat{Y})$ | $MS_{regr} = \dfrac{SS_{regr}}{k}$ | $F = \dfrac{MS_{regr}}{MS_{resid}}$ |
| Residual | $n - k - 1$ | $SS_{resid} = e'e$ | $MS_{resid} = \dfrac{SS_{resid}}{n - k - 1}$ | |
| Total | $n - 1$ | $SS_{tot} = S_{YY} = (MY)'(MY)$ | | |

The Degrees of Freedom column and the Sum Squares column show exact additions.
The Mean Squares column is the division of the Sum Squares by Degrees of Freedom. It is conventional not to show the division in the Total row.

The F statistic is used to test the hypothesis $H_0: \beta_1 = 0, \beta_2 = 0, \dots, \beta_k = 0$. Rejection of this hypothesis is rather common, and the rejection simply means that you have significant evidence that at least one of the $\beta$’s is not zero. Observe that the intercept $\beta_0$ is not part of this inference.

gs2011
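
The analysis of variance table can be assembled directly from the matrix expressions given above. The sketch below is illustrative only, assuming NumPy is available: the data are simulated, and the sample size and coefficients are made-up values rather than anything from the original notes. It forms the mean sweeper $M$ and the projection $P_X$, computes the three sums of squares, and prints the table with its F statistic.

```python
import numpy as np

# Hypothetical simulated data with an intercept and k = 2 predictors
rng = np.random.default_rng(1)
n, k = 20, 2
X = np.column_stack([np.ones(n), rng.normal(size=(n, k))])
Y = X @ np.array([1.0, 0.8, -0.3]) + rng.normal(size=n)  # made-up coefficients

P = X @ np.linalg.solve(X.T @ X, X.T)   # P_X = X (X'X)^{-1} X'
M = np.eye(n) - np.ones((n, n)) / n     # mean sweeper M = I - (1/n) 1 1'

Y_hat = P @ Y                           # fitted vector
e = Y - Y_hat                           # residual vector

ss_tot = (M @ Y) @ (M @ Y)              # S_YY = (MY)'(MY)
ss_regr = (M @ Y_hat) @ (M @ Y_hat)     # (M Y_hat)'(M Y_hat)
ss_resid = e @ e                        # e'e

df_regr, df_resid, df_tot = k, n - k - 1, n - 1
ms_regr = ss_regr / df_regr
ms_resid = ss_resid / df_resid
F = ms_regr / ms_resid

print(f"{'Source':<12}{'DF':>4}{'SS':>12}{'MS':>12}{'F':>10}")
print(f"{'Regression':<12}{df_regr:>4}{ss_regr:>12.4f}{ms_regr:>12.4f}{F:>10.4f}")
print(f"{'Residual':<12}{df_resid:>4}{ss_resid:>12.4f}{ms_resid:>12.4f}")
print(f"{'Total':<12}{df_tot:>4}{ss_tot:>12.4f}")
```

The Regression and Residual rows should add up to the Total row in both the DF and SS columns, up to floating-point rounding. Under the normality assumption, the F statistic is compared with an F distribution on $k$ and $n - k - 1$ degrees of freedom; if SciPy is installed, `scipy.stats.f.sf(F, df_regr, df_resid)` would give the corresponding p-value.
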