SLR Statistical Assumptions: Gauss-Markov & Error Variance

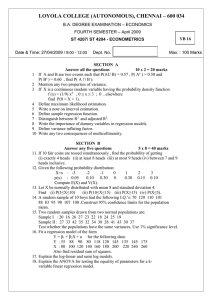

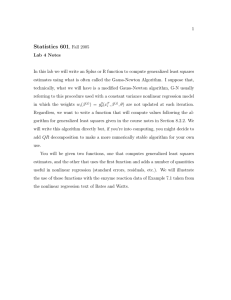

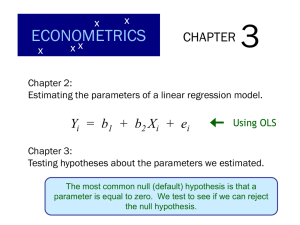

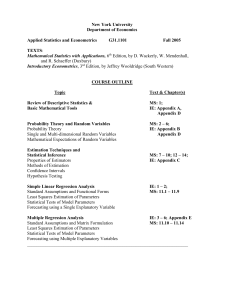

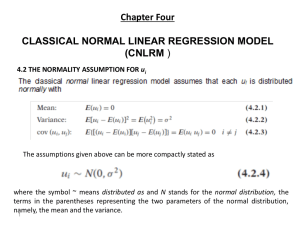

Statistical Assumptions for SLR
Recall, the simple linear regression model is $Y_i = \beta_0 + \beta_1 X_i + \varepsilon_i$, where $i = 1, \dots, n$. The assumptions for the simple linear regression model are:
1) $E(\varepsilon_i) = 0$
2) $\mathrm{Var}(\varepsilon_i) = \sigma^2$
3) the $\varepsilon_i$'s are uncorrelated.
• These assumptions are also called the Gauss-Markov conditions.
• The above assumptions can be stated in terms of the $Y$'s…

week 2

Possible Violations of Assumptions
• Straight line model is inappropriate…
• $\mathrm{Var}(Y_i)$ increases with $X_i$…
• Linear model is not appropriate for all the data…

Properties of Least Squares Estimates
• The least-squares estimates $b_0$ and $b_1$ are linear in the $Y$'s. That is, there exist constants $c_i$, $d_i$ such that $b_0 = \sum_{i=1}^{n} c_i Y_i$ and $b_1 = \sum_{i=1}^{n} d_i Y_i$.
• Proof: Exercise.
• The least-squares estimates are unbiased estimators of $\beta_0$ and $\beta_1$.
• Proof:…

Gauss-Markov Theorem
• The least-squares estimates are BLUE (Best Linear Unbiased Estimators).
• Of all the possible linear, unbiased estimators of $\beta_0$ and $\beta_1$, the least-squares estimates have the smallest variance.
• The variance of the least-squares estimates is…

Estimation of Error Term Variance $\sigma^2$
• The variance $\sigma^2$ of the error terms $\varepsilon_i$ needs to be estimated to obtain an indication of the variability of the probability distribution of $Y$.
• Further, a variety of inferences concerning the regression function and the prediction of $Y$ require an estimate of $\sigma^2$.
• Recall, for a random variable $Z$ the estimates of the mean and variance of $Z$ based on $n$ realizations of $Z$ are…
• Similarly, the estimate of $\sigma^2$ is $s^2 = \frac{1}{n-2} \sum_{i=1}^{n} e_i^2$, where $e_i = Y_i - b_0 - b_1 X_i$ is the $i$-th residual.
• $s^2$ is called the MSE (Mean Square Error); it is an unbiased estimator of $\sigma^2$ (proof in Chapter 5).

Normal Error Regression Model
• In order to make inferences we need one more assumption about the $\varepsilon_i$'s.
• We assume that the $\varepsilon_i$'s have a Normal distribution, that is, $\varepsilon_i \sim N(0, \sigma^2)$.
• Under the Normality assumption the errors $\varepsilon_i$ are independent, since uncorrelated (jointly) Normal random variables are independent.
• Under the Normality assumption on the errors, the least-squares estimates of $\beta_0$ and $\beta_1$ coincide with their maximum likelihood estimators.
• As a result, they have the additional nice properties of MLEs: they are consistent, sufficient, and MVUE (minimum variance unbiased estimators).

Example: Calibrating a Snow Gauge
• Researchers wish to measure snow density in mountains using gamma ray transitions called “gain”.
• The measuring device needs to be calibrated. This is done with polyethylene blocks of known density.
• We want to know what density of snow results in particular readings from the gamma ray detector. The variables are: $Y$ = gain, $X$ = density.
• Data: 9 densities in g/cm³ and 10 measurements of gain for each.
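
The least-squares properties and the MSE formula from the earlier slides can be checked numerically. The following is a minimal Python sketch, not part of the original slides: the function name `fit_slr`, the true parameter values, and the simulated data are all assumptions chosen for illustration (this is not the snow gauge data). It writes $b_0$ and $b_1$ explicitly as weighted sums of the $Y_i$, computes $s^2$ with the $n - 2$ divisor, and uses repeated simulation to suggest that all three estimates are unbiased.

```python
import numpy as np

def fit_slr(x, y):
    """Least-squares fit of y = b0 + b1*x, written as linear combinations of y."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    n = x.size
    x_bar = x.mean()
    sxx = np.sum((x - x_bar) ** 2)
    d = (x - x_bar) / sxx        # weights d_i, so that b1 = sum(d_i * Y_i)
    c = 1.0 / n - x_bar * d      # weights c_i, so that b0 = sum(c_i * Y_i)
    b1 = np.sum(d * y)
    b0 = np.sum(c * y)
    resid = y - (b0 + b1 * x)    # residuals e_i
    s2 = np.sum(resid ** 2) / (n - 2)  # MSE: estimate of sigma^2
    return b0, b1, s2

# Hypothetical true parameters and design points (illustration only).
rng = np.random.default_rng(0)
beta0, beta1, sigma = 2.0, 0.5, 1.0
x = np.linspace(0.0, 10.0, 30)

# Simulate many data sets with Normal errors and average the estimates.
n_rep = 5000
estimates = np.array([
    fit_slr(x, beta0 + beta1 * x + rng.normal(0.0, sigma, x.size))
    for _ in range(n_rep)
])
mean_b0, mean_b1, mean_s2 = estimates.mean(axis=0)

print(f"average b0 = {mean_b0:.3f} (true beta0 = {beta0})")
print(f"average b1 = {mean_b1:.3f} (true beta1 = {beta1})")
print(f"average s2 = {mean_s2:.3f} (true sigma^2 = {sigma**2})")
```

The averages should land close to the true values, which is what unbiasedness predicts. Dividing by $n$ instead of $n - 2$ in `s2` would give averages that fall systematically below $\sigma^2$.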