Science of Sound

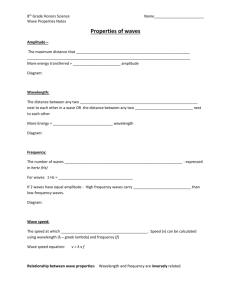

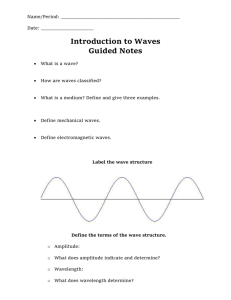

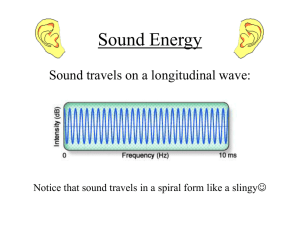

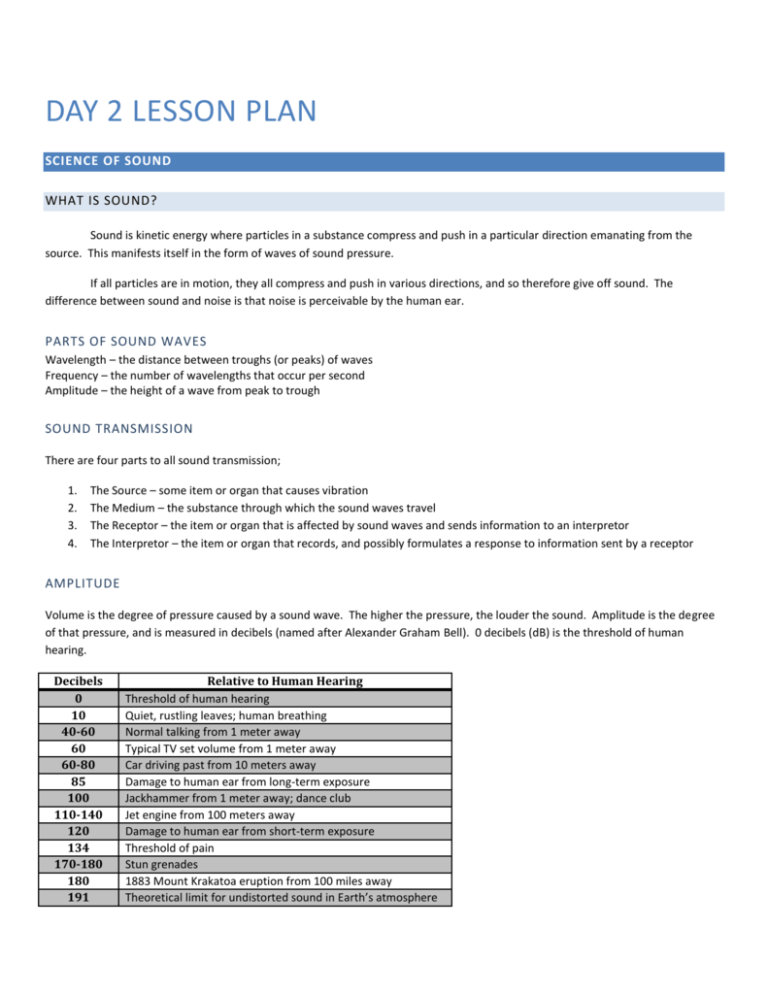

DAY 2 LESSON PLAN

SCIENCE OF SOUND

WHAT IS SOUND?

Sound is kinetic energy: particles in a substance compress and push against their neighbors, and that disturbance travels outward from the source. It manifests itself in the form of waves of sound pressure. Because all particles are in motion, they all compress and push in various directions, and therefore give off sound. The difference between sound and noise is that noise is perceivable by the human ear.

PARTS OF SOUND WAVES

- Wavelength – the distance between neighboring troughs (or peaks) of a wave
- Frequency – the number of complete waves that occur per second
- Amplitude – the height of a wave, measured from its resting position to a peak (half the peak-to-trough height)

SOUND TRANSMISSION

There are four parts to all sound transmission:

1. The Source – some item or organ that causes vibration
2. The Medium – the substance through which the sound waves travel
3. The Receptor – the item or organ that is affected by sound waves and sends information to an interpreter
4. The Interpreter – the item or organ that records, and possibly formulates a response to, information sent by a receptor

AMPLITUDE

Volume is the degree of pressure caused by a sound wave. The higher the pressure, the louder the sound. Amplitude is the degree of that pressure, and is measured in decibels (named after Alexander Graham Bell). 0 decibels (dB) is the threshold of human hearing.

| Decibels | Relative to Human Hearing |
| --- | --- |
| 0 | Threshold of human hearing |
| 10 | Quiet, rustling leaves; human breathing |
| 40-60 | Normal talking from 1 meter away |
| 60 | Typical TV set volume from 1 meter away |
| 60-80 | Car driving past from 10 meters away |
| 85 | Damage to human ear from long-term exposure |
| 100 | Jackhammer from 1 meter away; dance club |
| 110-140 | Jet engine from 100 meters away |
| 120 | Damage to human ear from short-term exposure |
| 134 | Threshold of pain |
| 170-180 | Stun grenades |
| 180 | 1883 Mount Krakatoa eruption from 100 miles away |
| 191 | Theoretical limit for undistorted sound in Earth’s atmosphere |

PITCH

Pitches sensed by ear are simply our way of interpreting frequency.
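As a rough illustration of what a frequency figure means physically, the sketch below converts a frequency into the time one wave takes and into a wavelength. The speed of sound used here (343 m/s, roughly air at 20 °C) and the function names are assumptions made for this example, not part of the lesson.

```python
# Assumption: sound travelling through air at about 20 degrees C (~343 m/s).
SPEED_OF_SOUND_AIR_M_PER_S = 343.0


def period_seconds(frequency_hz):
    """Time taken by one complete wave, in seconds."""
    return 1.0 / frequency_hz


def wavelength_meters(frequency_hz, speed_m_per_s=SPEED_OF_SOUND_AIR_M_PER_S):
    """Distance between neighboring peaks, in meters."""
    return speed_m_per_s / frequency_hz


# Higher frequencies give shorter periods and shorter wavelengths.
for f in (110.0, 440.0, 1760.0):
    print(f"{f:7.1f} Hz: period {period_seconds(f) * 1000:.3f} ms, "
          f"wavelength {wavelength_meters(f):.3f} m")
```

In a different medium (water or steel, for instance) the speed would differ, so the same frequency would have a different wavelength.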
The higher the frequency (the more waves per second), the higher the pitch. Frequency is measured in waves per second, or hertz (Hz). The frequency of every kind of wave, including electromagnetic waves such as light and radio, is measured on this same scale; the sound we hear is just the range of pressure waves that the human ear can perceive. Because all particles at the very least vibrate, they give off a certain frequency of sound, although it is often inaudible. If that frequency is matched by another sound source and the two waves line up peak to peak, the amplitude of the combined wave is the sum of the two amplitudes.

http://www.youtube.com/watch?v=3mclp9QmCGs

If two sound sources produce two different, unrelated frequencies (where the higher frequency is not a multiple of the lower frequency), they sound “crunchy” against each other, as they don’t reinforce each other’s amplitude evenly.

HARMONICS

Different pitches are related to each other. Whole-number multiples of a frequency are called its harmonics, and doubling a frequency raises the pitch by exactly one octave, so each step up is an exponential increase on the previous pitch. It is upon this principle that musicians are able to identify notes. For example:

- The musical note A is at 440 Hz (440 waves per second).
- The pitch at 880 Hz is one octave higher, a higher version of the musical note A.
- The pitch at 1760 Hz is two octaves higher, another higher version of the musical note A.
- The pitch at 3520 Hz is three octaves higher, the musical note A again.
- The pitch at 7040 Hz is four octaves higher, the musical note A again.
- Etc.

DOPPLER SHIFT

This is a phenomenon in which sound waves are compressed more (raising the pitch) as the source moves towards the receptor, and are stretched out (lowering the pitch) as the source moves away from the receptor. Similar phenomena occur in light transmission, where compression is called “blue-shifting” and extension is called “red-shifting”.

ANALOG VERSUS DIGITAL

When discussing sound in our class, we will discuss it in two contexts: analog and digital.
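To make the two contexts concrete, here is a minimal sketch of one common way a smooth wave can be turned into a list of numbers: measuring it at evenly spaced instants. The lesson does not name a method, so the approach, the function name `sample_sine`, and the 8000 measurements per second are all assumptions chosen for illustration.

```python
import math


def sample_sine(frequency_hz, sample_rate_hz, duration_s):
    """Measure a sine wave at evenly spaced instants.

    Returns a list of (time_s, value) pairs, with value between -1 and 1.
    Whatever the wave does between two instants is simply not recorded.
    """
    count = round(sample_rate_hz * duration_s)
    samples = []
    for i in range(count):
        t = i / sample_rate_hz
        samples.append((t, math.sin(2 * math.pi * frequency_hz * t)))
    return samples


# One millisecond of the note A (440 Hz), measured 8000 times per second.
samples = sample_sine(440.0, 8000, 0.001)
for t, value in samples:
    print(f"t = {t * 1000:.3f} ms  value = {value:+.4f}")
```

The smooth sine function stands in for the analog wave; the printed list is all a computer would keep of it under these assumptions.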
Analog sound is natural sound that undergoes no electrical changes as it moves towards the human receptor (the ear). Digital sound is sound created or recorded as electrical impulses and then converted into sound. As computers are only able to work in bits (zeros and ones), there is always a limit to the amount they can interpret. Analog sound still occurs in between the “ons and offs” that computers save their data in. While our technology continually improves and allows us to interpret more and more digitally, there is still always a limit to what a computer can produce and record.

DIGITAL PROBLEMS WITH SOUND

FEEDBACK

Positive Feedback occurs when a sound source creates a closed circuit with an interpreter. As an example, a microphone is plugged into an amplifier, that amplifier in turn sends information to a speaker, and the speaker is pointed at the microphone. The microphone will pick up any sound coming from the speaker, adding to the sound it was already picking up. The result is an amplification whine. Positive Feedback is often confused with the term Negative Feedback. Negative Feedback would be a case where sound is muted. However, you might receive negative feedback if you end up creating Positive Feedback.

LOST QUALITY

Because digital signals only work in “ons and offs”, some sound wave information can be lost. Compression of files on computers can compound the issue, as in the case of MP3s. MP3 compression removes much of the sound wave information judged unnecessary in a recording to make the file smaller. This guarantees a loss in quality, as it removes part of the original sound, although it can be useful when trying to rid a recording of “white noise”.
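The loss that comes from storing a wave in a limited number of bits can be sketched as follows. This is only an illustration of rounding to a fixed set of levels; it is not how MP3 compression works, and the function names and bit counts are assumptions made for the example.

```python
import math


def quantize(value, bits):
    """Round a value in [-1, 1] to the nearest of 2**bits evenly spaced levels."""
    levels = 2 ** bits
    step = 2.0 / (levels - 1)          # gap between neighboring levels
    index = round((value + 1.0) / step)
    return index * step - 1.0


def worst_error(bits, points=1000):
    """Largest rounding error found over one cycle of a sine wave."""
    worst = 0.0
    for i in range(points):
        original = math.sin(2 * math.pi * i / points)
        worst = max(worst, abs(original - quantize(original, bits)))
    return worst


# More bits means more levels, so less of the wave is lost to rounding.
for bits in (2, 4, 8, 16):
    print(f"{bits:2d} bits: worst error {worst_error(bits):.6f}")
```

The error shrinks as bits are added but never reaches zero, which matches the point above that there is always a limit to what a computer can record.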