3.2: Least-Squares Regression


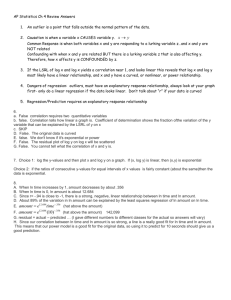

AP Statistics Chapter 3 Outline

3.1 Scatterplots and Correlation
- Identify explanatory and response variables
- Construct scatterplots to display relationships
- Interpret scatterplots
- Measure linear association using correlation
- Interpret correlation
- Scatterplots on the calculator (Pg. 151)

3.2 Least-Squares Regression
- Interpret a regression line
- Calculate the equation of the least-squares regression line
- Calculate residuals
- Construct and interpret residual plots
- Determine how well a line fits observed data
- Interpret computer regression output
- LSRL on the calculator
- Residual plots and s on the calculator

Homework:
- 3.1 #1, 11, 13, 14-18, 21
- 3.2 #35-47 odd
- 3.2 #53, 54, 56, 58-61, 63, 65
- Review Sheet

NoteCards (definition and picture/example for each):
- Explanatory/Response Variables
- Interpret/Create Scatterplot
- Correlation
- LSRL
- Residual/Residual Plot
- Coefficient of determination
- Outliers & Influential Observations

3.1 Scatterplots and Correlation

A scatterplot shows the relationship between two quantitative variables measured on the same individuals. The values of one variable appear on the horizontal axis, and the values of the other variable appear on the vertical axis. Each individual in the data appears as a point in the plot, fixed by the values of both variables for that individual.

A response variable measures the outcome of a study. An explanatory variable may help explain or influence changes in a response variable.

Interpreting a Scatterplot
In any graph of data, look for the overall pattern and for striking deviations from that pattern. You can describe the overall pattern of a scatterplot by the direction, form, and strength of the relationship.
An important kind of deviation is an outlier, an individual value that falls outside the overall pattern of the relationship.

Correlation r: measures the direction and strength of the linear relationship between two quantitative variables.

$$
r = \frac{1}{n-1}\sum_{i=1}^{n}\left(\frac{x_i-\bar{x}}{s_x}\right)\left(\frac{y_i-\bar{y}}{s_y}\right)
$$

Interpreting Correlation Coefficients:
1. The value of r is always between −1 and 1.
2. A correlation of −1 implies that the two variables are perfectly negatively correlated.
3. A correlation of 1 implies that there is perfect positive correlation between the two variables.
4. A correlation of 0 implies that there is no linear relationship between the two variables (a nonlinear relationship may still exist).
5. Positive correlations between 0 and 1 vary in strength, with the strongest positive correlations closest to 1.
6. Negative correlations between 0 and −1 also vary in strength, with the strongest negative correlations closest to −1.
7. r has no units. Changing the units of your data will not affect the correlation.
8. r is very strongly affected by outliers.
9. Correlation makes no distinction between explanatory and response variables. It makes no difference which variable you call x and which you call y when calculating the correlation.

3.2: Least-Squares Regression

Least-squares regression (linear regression) fits a line to a scatterplot so that you can predict the value of one variable from the value of another. The line of best fit, also called the linear regression line or least-squares regression line (LSRL), has the form

$$
\hat{y} = a + bx
$$

where:
- $\hat{y}$: predicted value of the response variable y for a given value of the explanatory variable x
- $a$: y-intercept
- $b$: slope of the line

Extrapolation is the use of a regression line for prediction far outside the interval of values of the explanatory variable x used to obtain the line. Such predictions are often not accurate.
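As an illustration of prediction and extrapolation, here is a minimal Python sketch of using an already-fitted line ŷ = a + bx. The function name `predict`, the line ŷ = 46 + 6x, and the observed x-range of 1 to 5 are all hypothetical, chosen only for illustration. The sketch flags any x outside the observed range; deciding how far outside counts as "far" is a judgment call the code does not make.

```python
def predict(a, b, x, x_min, x_max):
    """Return y-hat = a + b*x and whether x lies outside the observed x-range."""
    y_hat = a + b * x
    extrapolating = not (x_min <= x <= x_max)
    return y_hat, extrapolating


# Hypothetical line y-hat = 46 + 6x, assumed to be fitted on x-values from 1 to 5
a, b = 46, 6
for x in (3.5, 40):
    y_hat, extrapolating = predict(a, b, x, x_min=1, x_max=5)
    note = "extrapolation, treat with caution" if extrapolating else "within data range"
    print(f"x = {x}: y-hat = {y_hat} ({note})")
```

If x were hours studied and y a quiz score, the prediction at x = 40 would be 286. The arithmetic is correct, but the result is meaningless because it comes from a value far outside the data.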
A residual is the difference between an observed value of the response variable and the value predicted by the regression line:

residual = observed y − predicted y = $y - \hat{y}$

The least-squares regression line of y on x is the line that makes the sum of the squared residuals as small as possible. Its equation is

$$
\hat{y} = a + bx, \qquad b = r\,\frac{s_y}{s_x}, \qquad a = \bar{y} - b\bar{x}
$$

How well the line fits the data:

Residual plots: a scatterplot of the residuals against the explanatory variable. Residual plots help us assess how well a regression line fits the data.

Standard deviation of the residuals (s):

$$
s = \sqrt{\frac{\sum \text{residuals}^2}{n-2}} = \sqrt{\frac{\sum (y_i - \hat{y}_i)^2}{n-2}}
$$

The coefficient of determination $r^2$ in regression: the fraction of the variation in the values of y that is accounted for by the least-squares regression line of y on x.

$$
r^2 = 1 - \frac{SSE}{SST}, \qquad SSE = \sum \text{residual}^2 = \sum (y_i - \hat{y}_i)^2, \qquad SST = \sum (y_i - \bar{y})^2
$$
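Here is a minimal Python sketch of the least-squares calculations, using only the standard library (`statistics.stdev` divides by n − 1, matching $s_x$ and $s_y$). The function names `correlation` and `lsrl_summary` and the hours/score data are hypothetical. The code computes r from the z-score formula, then b = r·s_y/s_x, a = ȳ − b·x̄, the residuals, s, and r² = 1 − SSE/SST. Here SST is taken as the sum of squared deviations of y from ȳ, which is the standard definition.

```python
import math
import statistics


def correlation(xs, ys):
    """r = 1/(n-1) * sum of products of z-scores (sample standard deviations)."""
    n = len(xs)
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    s_x, s_y = statistics.stdev(xs), statistics.stdev(ys)
    return sum(((x - x_bar) / s_x) * ((y - y_bar) / s_y) for x, y in zip(xs, ys)) / (n - 1)


def lsrl_summary(xs, ys):
    """Fit y-hat = a + b*x by least squares and report residuals, s, and r^2."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need two equal-length lists with at least 3 points")
    x_bar, y_bar = statistics.mean(xs), statistics.mean(ys)
    r = correlation(xs, ys)
    b = r * statistics.stdev(ys) / statistics.stdev(xs)    # slope: b = r * s_y / s_x
    a = y_bar - b * x_bar                                  # intercept: a = y-bar - b * x-bar
    residuals = [y - (a + b * x) for x, y in zip(xs, ys)]  # observed y - predicted y
    sse = sum(e ** 2 for e in residuals)                   # SSE: sum of squared residuals
    sst = sum((y - y_bar) ** 2 for y in ys)                # SST: squared deviations from y-bar
    s = math.sqrt(sse / (n - 2))                           # standard deviation of the residuals
    r_squared = 1 - sse / sst                              # coefficient of determination
    return {"a": a, "b": b, "r": r, "residuals": residuals, "s": s, "r_squared": r_squared}


# Hypothetical data: hours studied (x) and quiz score (y)
hours = [1, 2, 3, 4, 5]
score = [52, 60, 61, 70, 77]

fit = lsrl_summary(hours, score)
print(f"y-hat = {fit['a']:.2f} + {fit['b']:.2f}x")
print(f"r = {fit['r']:.4f}, r^2 = {fit['r_squared']:.4f}, s = {fit['s']:.3f}")

# Points for a residual plot: (explanatory value, residual)
for x, e in zip(hours, fit["residuals"]):
    print(x, f"{e:.3f}")
```

You can check the results by hand for these made-up numbers. The slope is b = 60/10 = 6 and the intercept is a = 64 − 6(3) = 46. The residuals are 0, 2, −3, 0, 1, which sum to zero, as least-squares residuals always do. Finally, r² = 1 − 14/374 ≈ 0.963, which equals the square of the correlation r.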