
advertisement

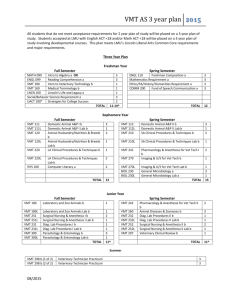

The Effects of User Feedback/Ranking to Enhance VMT

Brian Smith, Drexel University, 3141 Chestnut Street, Philadelphia PA 19104, bps23@drexel.edu

Abstract: Throughout the published research on the Virtual Math Teams (VMT) environment, significant attention is given to classifying users within the system. The classification can be as explicit as the Moderator/Participant relationship, but more commonly the research explores how particular users interact, specifically their familiarity and involvement with the topic at hand. Within this context, the researchers have been able to draw categorical conclusions about the users. These conclusions, however, could also be fed back to the users in the system to enhance the VMT environment and other users’ experience. By examining the user feedback systems of other online tools and comparing them to the interactions of the VMT environment, it is possible to assess how implementing a similar rating or ranking system associated with each user account would affect the usefulness of the tool.

Introduction

The Virtual Math Teams program fosters and relies on peer interaction among groups of users working to solve a mathematical problem. As a result, explicit human interaction is a major contributing factor in the success of the system. How that interaction occurs is defined in part by how any one user participates relative to the VMT tool and to the other users in it. At the same time, there is growing precedent for online tools using user feedback systems to let users form expectations of one another. These social indicators have come to be seen as essential components of various tools and can be implemented in the VMT tool, provided that the feedback system introduces similar advantages and does not detract from the benefits the VMT tool already provides.
Online User Feedback and Rating in e-commerce

Two popular online environments that take advantage of this type of user rating feedback system are eBay.com (eBay) and Amazon.com (Amazon). Both are e-commerce websites that rely on user feedback systems to foster interaction between a buyer and a seller; at a simpler level, both are still electronic systems in which internal users interact. In this respect, they share a number of environmental similarities with the VMT tool. Analysis of the pros and cons of these e-commerce feedback systems can be extrapolated to the VMT environment, with consideration given to how the VMT tool differs.

The eBay user feedback process takes place after an auction listed by one user (the seller) is won by another user (the buyer), money is exchanged, and the item in question is received. Both users are then able to rate the transaction on a five-star scale in each of four categories: How accurate was the item description? How satisfied were you with the seller's communication? How quickly did the seller ship the item? How reasonable were the shipping and handling charges? (eBay). The composite of these scores is compiled into a single five-star rating attributed to the user’s account. A “composite of composites” over all transactions in which the user participated is then displayed as the visible ‘user rating’ within the system. In addition, the number of ratings (which corresponds to the number of transactions) is displayed. This adds to user confidence: the more ratings there are, the more credence the star rating is given.

Figure 1 - View of an eBay User Feedback Rating

Figure 1 shows the user feedback that is seen wherever user information is displayed in the system (an expanded example appears in Figure 2 as well).
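
The composite-of-composites rating described above can be sketched in a few lines of Python. This is only an illustration of the arithmetic as this paper describes it, not eBay's actual implementation: the class and function names are hypothetical, the sample star values are invented, and the use of a plain average at both levels is an assumption.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass(frozen=True)
class TransactionRating:
    """One buyer's 1-5 star ratings for a transaction (hypothetical structure).

    The four fields follow the four questions quoted from eBay above.
    """
    item_description: int
    communication: int
    shipping_time: int
    shipping_charges: int

    def composite(self) -> float:
        # Assumption: the per-transaction composite is a simple average.
        return mean([
            self.item_description,
            self.communication,
            self.shipping_time,
            self.shipping_charges,
        ])


def user_rating(ratings):
    """Return (average of per-transaction composites, number of ratings).

    A user with no rated transactions gets (None, 0) rather than a score.
    """
    if not ratings:
        return None, 0
    return mean(r.composite() for r in ratings), len(ratings)


# Invented sample data: two rated transactions for one seller.
sample = [
    TransactionRating(5, 4, 5, 4),  # composite 4.5
    TransactionRating(4, 4, 3, 3),  # composite 3.5
]
score, count = user_rating(sample)
```

Here `score` is 4.0 and `count` is 2, which corresponds to the pairing of a star rating with a number of ratings that the display relies on.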
The convention of displaying the star rating along with the number of reviews (as well as the percentage information) provides a consistency that all users have come to understand; it now “means something” in defining expectations for user interactivity.

Figure 2 - eBay Auction Screen

One of the most notable aspects of the feedback system is how quickly it establishes a relationship among the users: “eBay’s feedback system is, arguably, their most valuable asset. It provides the grease necessary to make complete strangers comfortable enough to buy and sell from each other.” (TechCrunch) This is a key factor for eBay and Amazon, but a similar observation about the users of VMT is made early in the VMT text: “Since the students did not know each other from before the chat and could not observe each other except through the behavior that took place in the chat room, they could only understand each other’s messages and actions based on what took place inside the chat room.” (Stahl, 2009, p.50) Therefore, like the users of the e-commerce tools, VMT users rely solely on stimuli from the system as the basis for their opinions: “But it’s a closed system – only eBay transactions can affect a user’s feedback score.” (TechCrunch).

The Amazon.com feedback system works in much the same way.

Figure 3 - View of an Amazon User Feedback Rating

Figure 4 - Amazon Item Purchase Screen

Online User Content Feedback and Rating

There are also non-e-commerce sites that employ user feedback ratings; however, news reporting sites such as Yahoo! News direct the feedback at the actual content of a post. Yahoo! News allows users to leave comments on the news story being reported. These comments are subject to a “thumbs up/thumbs down” rating that lets users give feedback on specific items; since each comment must be left by a user, the feedback can be correlated to a specific user.
Over time, an average of comment ratings can be turned into a “user” score.

Figure 5 - Yahoo! News User Feedback screen

Figure 6 - Yahoo! News content rating option

Similarly, the Drexel Blackboard site has instituted a post-by-post feedback system that allows users to rate the quality of posts, and again each post has a specific user correlated to it. This would allow an aggregate of post ratings to be shown as a user rating.

Figure 7 - Blackboard's post rating

Blackboard’s system uses a star ranking, tucked away within the standard interface. It is present on every post, and because the system requires a login to make any post, there is traceability back to the user. The interface is conventional and has the same affordances as the Yahoo! News system.

Figure 8 - Blackboard post's "star" rating

Issues With Existing Systems

The feedback systems are not perfect representations of the users’ activity. Because they are based on human input, they explicitly introduce human error into the ranking. The e-commerce tools have acknowledged this, both through ongoing revisions to the feedback process and through fundamental flaws inherent in user feedback systems that may not be resolvable. Commentators on these systems describe both:

The eBay site is making some major changes to eBay feedback and one of the biggest is always that sellers will no longer be able to leave comments for buyers. The contention here is that sellers were using bad information as being a way to prompt slow payers to pay up and also utilizing the reviews as being a forum to air personal grievances. (eZine)

If someone is selling something I really want then their eBay suggestions may not be as large of a concern unless it was one hundred percent unfavorable. (eZine)

People are afraid of what is referred to in the eBay community as "retaliatory feedback."
That's when a seller (or a buyer--it can happen on both sides) becomes annoyed or enraged at a negative comment posted by someone else about them, and in retaliation posts a negative comment in return. The system obviously has no way of determining for sure the motivations of the various people posting. It therefore happens--and happens quite a lot, according to some estimates--that an innocent person's reputation suffers. (Forbes)

Weber said most buyers don't understand the purpose of Amazon's feedback mechanism and don't understand the consequences of their venting that can damage sellers' reputation and ability to earn money. (Steiner)

Tying Into VMT

Thus far, the discussion has focused on feedback systems in e-commerce sites. The VMT tool does not offer products or purchases that are subject to buyer/seller confidence. Consideration must therefore be given to how users of an educational knowledge-sharing system differ from e-commerce users and, specifically, how their feedback would differ. As a corollary, how feedback would affect the way users natively interact within the VMT tool must be analyzed before implementing such a feature.

In the Stahl text, the authors devote extensive time to analyzing specific interactions within the tool in their entirety. Part of this is the classification of users into relevant categories. However, in the text the analysis is done for the benefit of the researchers: their understanding of user interaction and their refinement of the VMT tool. It is the contention of this paper that these same categorizations can A) benefit the users if rolled back into the system, and B) be determined by the user base itself.
To show how the user base could conceivably reach the conclusions drawn by the Stahl text researchers, three user determinations from the “Agentic Movement” analysis section are examined.

“When we began this analysis we tended to see Bwang as the “math student” because Bwang was very good at taking a given problem and expressing it in an equation. He had a certain math orientation and was often the first to create mathematical objects that the group later worked with”. (Stahl, 2009, p.218)

As the system is primarily focused on solving math problems, someone with Bwang’s mathematical background would presumably be beneficial to any group within the VMT system. His frequency of interaction and his dedication to solving the problem and adding to the group knowledge indicate that he could also be an asset to a team independent of his mathematical skills, though his responses are fewer and more deliberate.

“Aznx was very skilled at being creative in thinking about new problems and facilitating interaction, caring about the group as we saw above”. (Stahl, 2009, p.218)

The user Aznx is noted as having excellent interpersonal skills within the group and as bringing an additional perspective to the conversation, without explicitly having a particularly strong mathematical background. That said, the content of the chats contained in the Stahl text (p. 216-222) shows that this user does have a solid understanding of the math concepts.

“Quicksilver was harder to get a sense of”. (Stahl, 2009, p.218)

The third user, Quicksilver, doesn’t appear to have any particular categorization associated with him. However, this is actually a categorization unto itself. It is the same classification that could be given to any new user of the system, or to any user who takes only minimal part in the tool.

Within the confines of a problem-solving session of three users, it is evident that a simple scoring system could convey similar impressions of each user.
A numeric score for math knowledge and a numeric score for user interaction would quantify each of the above. Using a 1-5 scoring system (1 indicating a low ranking and 5 a high ranking) for math and interaction respectively, Bwang could rate as a 5-4 while Aznx could rate as a 3-5. For Quicksilver, the Stahl text researchers indicate that they are unable to draw any conclusions; the lack of a score within a feedback system would indicate the same.

To round out the examples from the Stahl study and further show how the user categorization can be quantified, one more brief example may be referenced: “… it appeared that one student (Mic) who seemed particularly weak in math was clowning around a lot and that another (Cosi) managed to solve the problem herself despite this distraction in the chat room.” (Stahl, 2009, p.50)

The user Mic could conceivably be represented as a 1-1, for not having particularly strong math skills and for distracting from the group learning effort. A user like Cosi, by contrast, was able to handle the mathematical component but did so independently of the group. With no indication from the Stahl text researchers that Cosi made any exceptional attempt to communicate with the group, even allowing for the cost of Mic’s “clowning around”, Cosi may be represented as a 4-2.

Scoring Systems for VMT

The details of the scoring system, such as the total number of available ratings or the visible format (e.g., stars, numbers, slide chart), can be refined. Even so, with relatively minimal effort the Stahl text researchers’ user analysis could be turned into quantifiable data that gives viewers an expectation of each user’s general capabilities without having to read through extensive textual analysis or analyze the individual case studies from which the determinations were made. That said, expert researcher analysis is thus far the only basis for those conclusions.
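
The two-number scores above can be sketched as an average of peer ratings, one for math and one for interaction. The following Python is a minimal illustration only: the function names are hypothetical, the individual peer ratings are invented so that they average to the illustrative 5-4 and 3-5 scores used in this paper, and a plain mean is an assumption about how ratings would be combined.

```python
from collections import defaultdict
from statistics import mean


def aggregate_scores(feedback):
    """Average peer feedback into a (math, interaction) pair per user.

    feedback: iterable of (rated_user, math_score, interaction_score),
    with each score on the 1-5 scale. Users who received no feedback
    are simply absent from the result.
    """
    by_user = defaultdict(list)
    for user, math_score, interaction_score in feedback:
        for value in (math_score, interaction_score):
            if not 1 <= value <= 5:
                raise ValueError(f"score out of range 1-5: {value}")
        by_user[user].append((math_score, interaction_score))
    return {
        user: (mean(m for m, _ in pairs), mean(i for _, i in pairs))
        for user, pairs in by_user.items()
    }


def display(user, scores):
    """Format a user's score as 'math-interaction', or mark them unrated."""
    pair = scores.get(user)
    if pair is None:
        return f"{user}: unrated"
    return f"{user}: {pair[0]:.1f}-{pair[1]:.1f}"


# Invented peer ratings; Quicksilver has received none.
sample_feedback = [
    ("Bwang", 5, 4), ("Bwang", 5, 5), ("Bwang", 5, 3),
    ("Aznx", 3, 5), ("Aznx", 4, 5), ("Aznx", 2, 5),
]
scores = aggregate_scores(sample_feedback)
```

With this sample data, `display("Bwang", scores)` gives `Bwang: 5.0-4.0` and `display("Quicksilver", scores)` gives `Quicksilver: unrated`, so the absence of a score carries the same meaning as the researchers' inability to categorize that user.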
It is the conjecture of this paper that, over time, user-entered feedback will resolve to similar conclusions. The input from any single user may skew the data due to a lack of understanding of the feedback process, personal experience, ulterior motives, or any other non-expert review; however, the average of all users’ input should reveal what the expert analysis is able to conclude.

The e-commerce tools described in this paper share comparable online environmental user stimuli and thus show that there is a precedent for a user ranking system. The Stahl text researchers have successfully categorized the user interactions, and the examples above show that those categorizations can be quantified. The remaining consideration is how introducing a user feedback function, and specifically how users would react to other users of varying ratings, would affect the user experience with VMT.

Figure 9 shows a simple example of an existing Stahl text screenshot that includes a ranking indicator for the users involved. The scoring display does not alter or intrude upon any part of the existing VMT tool. Figure 10 shows the rating in more detail, consistent with the potential average scorings described previously in this document.

Figure 9 - VMT Mock-up of User ratings

Figure 10 - Close-up of VMT Mock-up

Upon completion of a VMT session, defined as running from first login to the conclusion of the work (when the problem is solved and/or the group session is closed), each user would be able to offer this feedback on their peers, in much the same way that post-transaction feedback is available in the e-commerce tools.

Citing certain tenets of the Stahl book under the Preconditions for Cognitive Processes by Groups (p.525), there are particular points to which implementing the user feedback tool and user status would apply. “Shared intentionality” and “Shared Background Culture” address the commonality between the users of the system.
The user ranking speaks to both, since it assumes a scoring system based on the accepted standards of the system (all users having the same interfaces and functionality available to them) and on general societal understandings. “Intersubjectivity”, or human co-presence, is explicitly met as a precondition: by rating experiences with another user, attention is given on a peer-to-peer basis, and thus every user is able to recognize any other user in the system with whom they have worked toward a solution.

The two most important preconditions supporting the integration of a user feedback system, though, are “Member methods for social order” and “Motivation and Engagement”. A ranking of users, by users, speaks directly to establishing a hierarchy within the system. Whereas this hierarchy would usually be fleshed out over the course of solving a problem within a team, a universal feedback system across the entire tool can accelerate this natural evolution. Finally, motivation and engagement are explicit within a feedback tool in exactly the same way as in the e-commerce tools: with a public display of how valuable a user is considered to be, the impetus to improve one’s ranking and achieve a higher status can augment the motivational points that Stahl et al. elaborate upon.

Resolving Issues with User Feedback

Inherent issues arise within any user feedback system. As stated previously, a ‘lack of understanding of the feedback process, personal experience, ulterior motives, or any other non-expert review’ may keep the feedback system from accurately portraying the contribution of the users involved. This section therefore identifies potential alternatives to a pure user-on-user feedback system. Each could replace or supplement peer feedback, and its pros and cons should be weighed before inclusion.
First, a notable difference between the e-commerce systems and a math learning tool is the expected average user age and the emotional maturity that follows from it. Whereas adult users dealing in goods have an inherent understanding of the relationship at large, younger students using a math tool may consistently skew the feedback, for instance by always ranking friends highly regardless of their contribution to the VMT session. A potential resolution is to restrict feedback to the moderator(s) in each session. While this would prevent users from making their own impact, it protects the integrity of the ratings, which in turn yields functionality that is more beneficial to the user community at large.

Second is the motivation factor. Without any specific prompt to enter feedback, the success of this functionality depends solely on the willingness of the user community to contribute back into the tool. Unlike the e-commerce sites, where the motivation to leave feedback stems from the fact that users are attracted on the basis of ratings, the VMT environment carries no comparable incentive. The only users who appear to have a vested interest in the tool are the moderators, who return for different sessions and have expectations of the activities within them. On this count as well, the moderators prove to be the best users for feedback integrity.

Both of the above limitations shift the responsibility onto the moderators in place of the entire user community. That is an available option, but it undercuts the focus of this proposal. A possible solution is to adopt the approach that a number of online news reporting sites, and Drexel’s Blackboard system, employ: a post-by-post rating system that allows all users to provide input to the system, but only on particular posts.
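
A post-by-post scheme of this kind could roll individual post ratings up into a per-user score along the following lines. This Python is a sketch under stated assumptions, not a description of how Yahoo! News or Blackboard work: the function name, the optional extra weight for moderators, the rule that self-ratings are ignored, and the sample ratings are all hypothetical.

```python
from collections import defaultdict


def user_scores_from_posts(post_ratings, moderators=frozenset(),
                           moderator_weight=1.0):
    """Aggregate 1-5 star ratings on posts into one score per author.

    post_ratings: iterable of (author, rater, stars), one entry per
    rating left on one of the author's posts.
    Returns {author: weighted mean of the stars their posts received}.
    """
    totals = defaultdict(float)
    weights = defaultdict(float)
    for author, rater, stars in post_ratings:
        if not 1 <= stars <= 5:
            raise ValueError(f"stars out of range 1-5: {stars}")
        if rater == author:
            continue  # assumption: users cannot rate their own posts
        # Assumption: a moderator's rating may count for more than a peer's.
        weight = moderator_weight if rater in moderators else 1.0
        totals[author] += weight * stars
        weights[author] += weight
    return {a: totals[a] / weights[a] for a in totals if weights[a] > 0}


# Invented ratings: (post author, rater, stars).
sample_ratings = [
    ("Aznx", "Bwang", 5),
    ("Aznx", "Moderator", 3),
    ("Aznx", "Aznx", 5),      # self-rating, ignored
    ("Bwang", "Aznx", 4),
]
equal = user_scores_from_posts(sample_ratings)
weighted = user_scores_from_posts(
    sample_ratings, moderators={"Moderator"}, moderator_weight=2.0)
```

With equal weights, Aznx scores (5 + 3) / 2 = 4.0; with the moderator's rating counted twice, Aznx scores (5 + 2 × 3) / 3, roughly 3.67. Keeping the weight as a parameter leaves open how much influence moderators retain relative to the general users.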
A post-by-post system resolves two of the problems above: users are unlikely to rate each and every posting, which should limit the gratuitous ratings that younger users may give each other; and because the feedback is immediate (at the time of the post) rather than cumulative at the end of the session, users would have more impetus to contribute to the ranking system. An aggregate rating of a user’s posts would manifest as the user’s overall score. A moderator’s input would still be valuable, and a moderator could still contribute to the rating, but the weight shifts back to the general users.

Future Resolution

The remaining issue that still needs research attention (in the form of case studies akin to those presented in the VMT text) is whether displaying user feedback would change the way users interact with the system. By analyzing a broad range of sessions, some with the user feedback display and some without, the Stahl text researchers could document how other users reacted to the same case study users under varying ratings. In the example in this paper, the user “Bwang” is seen as having expertise in math and being reasonably helpful to other users, without any rating attached to him. Over a number of subsequent problem sessions, if Bwang is tagged with a ranking, rating, or other notification of his skills or general nature, would users be more or less inclined to interact with him? Would the qualitative data show any significant alteration in how users use VMT?

Conclusion

Throughout this paper, it has been proposed that a user feedback and ranking system would enhance the VMT system. The enhancement would come from strengthening the users’ interactivity as an underlying support to the tool. By allowing users to establish general project team hierarchies and expectations more quickly, the VMT tool would better facilitate learning.
Electronic systems that use a user feedback and ranking system have grown to rely on it in an e-commerce environment that survives on how cohesive users’ peer relationships are. Systems that do not rely on user feedback specifically still have content feedback systems that reflect on the contributing user. With additional research to verify that the integrity of the VMT system is maintained, integrating a user feedback and ranking system into the VMT tool can provide incremental benefits to the environment and to group collaborative learning at large.

References

Arrington, M. (2006). Rapleaf to Challenge Ebay Feedback. TechCrunch.com. Retrieved on November 1, 2010 from http://techcrunch.com/2006/04/23/rapleaf-to-challenge-ebay-feedback/

Blackboard Academic Suite. (2010). Retrieved on November 27, 2010 from https://drexel.blackboard.com

eBay.com. (2010). About Detailed Seller Ratings (DSR). Retrieved on November 4, 2010 from http://pages.ebay.com/help/feedback/detailed-seller-ratings.html

LaPlante, A. (2007). EBay Feedback: Fatally Flawed? Forbes.com. Retrieved on November 3, 2010 from http://www.forbes.com/2007/01/02/ebay-smallbusiness-feedback-ent-salescx_al_0102smallbizresource.html

Stahl, G. (2009). Studying Virtual Math Teams. New York: Springer.

Steiner, I. (2006). Online Booksellers React to Amazon.com Feedback Changes. AuctionBytes.com. Retrieved on November 6 from http://www.auctionbytes.com/cab/abn/y06/m07/i10/s04

Tanady, M. (2010). The Aggressive World of eBay Feedback. EzineArticles.com. Retrieved on November 3, 2010 from http://ezinearticles.com/?The-Aggressive-World-Of-eBay-Feedback&id=4809988

Yahoo! News. (2010). The Top News Headlines on Current Events from Yahoo! News. Retrieved on November 26, 2010 from http://news.yahoo.com

Acknowledgments

I certify that: To the best of my knowledge, this assignment is entirely work produced by me. Any identification of my individual work is accurate.
I have not quoted the words of any other person from a printed source or a website without indicating what has been quoted and providing an appropriate citation. I have not submitted any of the material in this document to satisfy the requirements of any other course.