Assignment 4: Feature Reduction

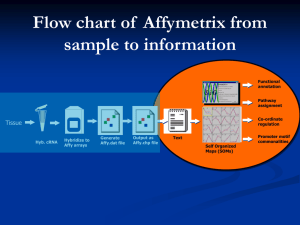

advertisement

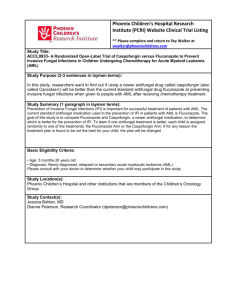

Assignment 1: Using the WEKA Workbench

A. Become familiar with the use of the WEKA workbench to invoke several different machine learning schemes. Use the latest stable version. Use both the graphical interface (Explorer) and the command line interface (CLI). See the Weka home page for Weka documentation.

B. Use the following learning schemes, with the default settings, to analyze the weather data (in weather.arff): ZeroR (majority class), OneR, Naive Bayes Simple, and J4.8. For test options, first choose "Use training set", then choose "Percentage Split" using the default 66% split. Report each model's percent error rate.

C. Which of these classifiers are you more likely to trust when determining whether to play? Why?

D. What can you say about accuracy when using the training set data versus when using a separate percentage split to train?

Assignment 2: Preparing the data and mining it

A. Take the file genes-leukemia.csv (here is the description of the data) and convert it to the Weka file genes-a.arff. You can convert the file either with a text editor like emacs (the brute-force way) or by finding a Weka command that converts a .csv file to .arff (the smart way).

B. The target field is CLASS. Use J48 on genes-leukemia with the "Use training set" option.

C. Use genes-leukemia.arff to create two subsets: genes-leukemia-train.arff, with the first 38 samples (s1 ... s38) of the data, and genes-leukemia-test.arff, with the remaining 34 samples (s39 ... s72).

D. Train J48 on genes-leukemia-train.arff and specify "Use training set" as the test option. What decision tree do you get? What is its accuracy?

E. Now specify genes-leukemia-test.arff as the test set. What decision tree do you get, and how does its accuracy compare to the one in the previous question?

F. Now remove the field "Source" from the classifier (clear the checkmark next to Source and click Apply Filter in the top menu) and repeat steps D and E. What do you observe? Does the accuracy on the test set improve, and if so, why do you think it does?

G. Extra credit: Which classifier gives the highest accuracy on the test set?

Assignment 3: Data Cleaning and Preparing for Modeling

The previous assignment used a selected subset of the top 50 genes from a particular leukemia dataset. In this assignment you will do the work of a real data miner: you will work with an actual genetic dataset, starting from the beginning. You will see that the process of data mining frequently has many small steps that all need to be done correctly to get good results. However tedious these steps may seem, the goal is a worthy one: to help make an early diagnosis of leukemia, a common form of cancer. Making a correct diagnosis is literally a life-and-death decision, so we need to be careful to do the analysis correctly.

3A. Get data

Take the ALL_AML_original_data.zip file from the Data directory and extract from it:
Train file: data_set_ALL_AML_train.txt
Test file: data_set_ALL_AML_independent.txt
Sample and class data: table_ALL_AML_samples.txt

This data comes from the pioneering work of Todd Golub et al. at the MIT Whitehead Institute (now the MIT Broad Institute).

1. Rename the train file to ALL_AML_grow.train.orig.txt and the test file to ALL_AML_grow.test.orig.txt.

Convention: we use the same file root for files of a similar type and different extensions for different versions of these files. Here "orig" stands for original input files and "grow" stands for genes in rows. We will use the extension .tmp for temporary files that are typically used for just one step in the process and can be deleted later.

Note: The pioneering analysis of the MIT biologists is described in their paper Molecular Classification of Cancer: Class Discovery and Class Prediction by Gene Expression Monitoring (pdf). Both the train and test datasets are tab-delimited files with 7130 records. The "train" file should have 78 fields and the "test" file 70 fields.
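Before any cleaning, it helps to confirm these record and field counts. The sketch below is a minimal Python equivalent of the wc/awk check suggested in 3B: it counts records and tallies how many tab-separated fields each record has. It assumes the files are plain tab-delimited text; the function name count_records_and_fields is hypothetical, not part of any course tooling.

```python
import sys
from collections import Counter
from typing import Iterable, Tuple


def count_records_and_fields(lines: Iterable[str], sep: str = "\t") -> Tuple[int, Counter]:
    """Count records and tally how many fields each record has.

    Returns (number_of_records, Counter mapping field_count -> number_of_records).
    A file with a consistent layout yields a Counter with a single key.
    """
    records = 0
    field_counts: Counter = Counter()
    for line in lines:
        line = line.rstrip("\r\n")  # drop the line ending (handles both \n and \r\n)
        records += 1
        field_counts[len(line.split(sep))] += 1
    return records, field_counts


if __name__ == "__main__":
    # Hypothetical usage: python count_fields.py ALL_AML_grow.train.orig.txt ALL_AML_grow.test.orig.txt
    for path in sys.argv[1:]:
        with open(path) as f:
            n, counts = count_records_and_fields(f)
        print(f"{path}: {n} records, field counts {dict(counts)}")
```

Splitting on the tab character (rather than on whitespace, which is awk's default) matters here because the Gene Description field itself contains spaces.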
The first two fields are Gene Description (a long description like GB DEF = PDGFRalpha protein) and Gene Accession Number (a short name like X95095_at). The remaining fields are pairs of a sample number (e.g. 1, 2, ..., 38) and an Affymetrix "call" (P if the gene is present, A if absent, M if marginal).

Think of the training data as a very tall and narrow table with 7130 rows and 78 columns. Note that it is "sideways" from a machine learning point of view: the attributes (genes) are in rows, and the observations (samples) are in columns. This is the standard format for microarray data, but to use it with machine learning tools like WEKA, we need to transpose (flip) the matrix to make files with genes in columns and samples in rows. We will do that in step 3B.6 of this assignment.

Here is a small extract:

| Gene Description | Gene Accession Number | 1 | call | 2 | call | ... |
|---|---|---|---|---|---|---|
| GB DEF = GABAa receptor alpha-3 subunit | A28102_at | 151 | A | 263 | P | ... |
| ... | AB000114_at | 72 | A | 21 | A | ... |
| ... | AB000115_at | 281 | A | 250 | P | ... |
| ... | AB000220_at | 36 | A | 43 | A | ... |

3B: Clean the data

Perform the following cleaning steps on both the train and test sets. Use unix tools, scripts, or other tools for each task. Document all the steps and create intermediate files for each step. After each step, report the number of fields and records in the train and test files. (Hint: use the unix command wc to find the number of records, and awk or gawk to find the number of fields.)

Microarray Data Cleaning Steps

1. Remove the initial records whose Gene Description contains "control". (Those are Affymetrix controls, not human genes.) Call the resulting files ALL_AML_grow.train.noaffy.tmp and ALL_AML_grow.test.noaffy.tmp. Hint: you can use the unix command grep to remove the control records. How many such control records are in each file?
2. Remove the first field (the long description) and the "call" fields, i.e. keep fields numbered 2, 3, 5, 7, 9, ... Hint: use the unix cut command to do that.
3. Replace all tabs with commas.
4. Change "Gene Accession Number" to "ID" in the first record. (You can use emacs here.) (Note: this will prevent possible problems that some data mining tools have with blanks in field names.)
5. Normalize the data: set every value below 20 to 20 and every value above 16,000 to 16,000. (Note: biologists considered expression values below 20 or above 16,000 unreliable for this experiment.) Write a small Java program or Perl script to do that. Call the generated files ALL_AML_grow.train.norm.tmp and ALL_AML_grow.test.norm.tmp.
6. Write a short Java program or shell script to transpose the train and test data to get ALL_AML_gcol.train.tmp and ALL_AML_gcol.test.tmp ("gcol" stands for genes in columns). These files should each have 7071 fields, with 39 records in the "train" dataset and 35 records in the "test" dataset.
7. Extract from the file table_ALL_AML_samples.txt the tables ALL_AML_idclass.train.txt and ALL_AML_idclass.test.txt, containing sample IDs and sample labels, space separated. Here you can use a combination of unix commands and manual editing in emacs. Add a header row "ID Class" to each of the files. The file ALL_AML_idclass.train.txt should have 39 records and two columns: the first record (header) is "ID Class", the next 27 records have class "ALL", and the last 11 records have class "AML". Be sure to remove all extra spaces and tabs from this file. ALL_AML_idclass.test.txt should have 20 "ALL" samples and 14 "AML" samples, intermixed.
8. Note that the sample numbers in the ALL_AML_gcol*.csv files are in a different order than in the *idclass files. Use Unix commands to create the combined files ALL_AML_gcol_class.train.csv and ALL_AML_gcol_class.test.csv, which have ID as the first field, Class as the last field, and the gene expression fields in between.

3C: Build Models on a full dataset

1. As in assignment 2, convert ALL_AML_gcol_class.train.csv to ALL_AML_allgenes.train.arff and ALL_AML_gcol_class.test.csv to ALL_AML_allgenes.test.arff.
2. Using ALL_AML_allgenes.train.arff as the train file and ALL_AML_allgenes.test.arff as the test file, build a model using OneR. What accuracy do you get?
3. Now, excluding the field ID, build models using OneR, NaiveBayes Simple, and J4.8, using the training set only. What models and error rates do you get with each method? Warning: some of the methods may not finish, or may give you errors, due to the large number of attributes in this data.
4. If you got this far, congratulations! Based on your experience, what three things are important in the process of data mining?

Assignment 4: Feature Reduction

Start from the ALL_AML_train_processed data set (zipped file in the Data directory). This data was thresholded to >= 20 and <= 16,000, with genes in rows. You should have generated it as part of assignment 3. Name it ALL_AML_gr.thr.train.csv. (Note: almost all of this data preprocessing is much easier with data in genes-in-rows format. We will convert the data to genes-in-columns when we are ready to do the modeling.)

1. Examining gene variation

A. Write a program (or a script) to compute a fold difference for each gene. The fold difference is the maximum value across samples divided by the minimum value. This value is frequently used by biologists to assess gene variability.
B. What is the largest fold difference, and how many genes have it?
C. What is the lowest fold difference, and how many genes have it?
D. Count how many genes have a fold ratio in each of the following ranges:

| Range | Count |
|---|---|
| Val <= 2 | .. |
| 2 < Val <= 4 | .. |
| 4 < Val <= 8 | .. |
| 8 < Val <= 16 | .. |
| 16 < Val <= 32 | .. |
| 32 < Val <= 64 | .. |
| 64 < Val <= 128 | .. |
| 128 < Val <= 256 | .. |
| 256 < Val <= 512 | .. |
| 512 < Val | .. |

E. Extra credit: Graph the fold ratio distribution appropriately.

2. Finding most significant genes

For the train set, samples 1-27 belong to class ALL, and samples 28-38 to class AML. Let Avg1 and Avg2 be the average expression values of the two classes (ALL and AML, respectively).
Let Stdev1 and Stdev2 be the sample standard deviations, which can be computed as

Stdev = sqrt((N*Sum_sq - Sum_val*Sum_val) / (N*(N-1)))

Here N is the number of observations, Sum_val is the sum of the values, and Sum_sq is the sum of the squares of the values. With N1 and N2 denoting the number of samples in each class (27 for ALL and 11 for AML in the train set):

Signal to Noise (S2N) ratio = (Avg1 - Avg2) / (Stdev1 + Stdev2)

T-value = (Avg1 - Avg2) / sqrt(Stdev1*Stdev1/N1 + Stdev2*Stdev2/N2)

A. Write a script that computes, for each gene, the average and standard deviation for both classes ("ALL" and "AML"). Also compute the T-value and the S2N ratio for each gene.
B. Select, for each class, the top 50 genes with the highest S2N ratio. Which gene has the highest S2N for "ALL" (i.e. whose high value is most correlated with class "ALL")? Which gene is 50th highest? Give gene names and S2N values. Answer the same question for the "AML" class. What is the relationship between the S2N values for ALL and AML?
C. Select, for each class, the top 50 genes with the highest T-value. Which gene has the highest T-value for class "ALL"? Which gene is 50th highest? Give gene names and T-values. Same for AML. What is the relationship between the T-values for ALL and AML? Will a similar relationship hold if there are more than 2 classes?
D. How many genes are in common between the top 50 genes for ALL selected using S2N and those selected using the T-value? How many genes are in common among the top 3 genes in each list?
E. Answer the same questions for the top genes for "AML".

3. Lessons Learned

What have you learned so far about the process of feature selection and data preparation?

Predict Treatment Outcome

Note: For this assignment, we used CART from Salford Systems, which was available to us under an educational license. If CART is not available, another decision tree tool, such as J4.8 in Weka, can be used instead.

Start with the genes-leukemia.csv dataset used in assignment 2 (see the Dataset directory). As the target to predict, use the field TREATMENT_RESPONSE, which has the values Success, Failure, or "?" (missing).

Step 1. Examine the records where TREATMENT_RESPONSE is non-missing.
Q1: How many such records are there?
Q2: Can you describe these records using other sample fields (e.g. Year from XXXX to YYYY, or Gender = X, etc.)?
Q3: Why is it not correct to build predictive models for TREATMENT_RESPONSE using records where it is missing?

Step 2. Select only the records with non-missing TREATMENT_RESPONSE. Keep SNUM (sample number), but remove sample fields that are all the same or missing. Call the reduced dataset genes-reduced.csv.
Q4: Which sample fields should you keep?

Step 3. Build a CART model using leave-one-out cross-validation.
Q5: What tree do you get, and what is the expected error rate?
Q6: What are the important variables and their relative importance, according to CART?
Q7: Remove the top predictor and re-run CART. What do you get?

Step 4. Extra credit (10%): Use Google to search the web for the name of the top gene that predicts the outcome, and briefly report relevant information that you find.

Step 5. Randomization test: Randomize the TREATMENT_RESPONSE variable 10 times and re-run CART for each randomized class.
Q8: Report the trees and error rates you get.
Q9: Based on the results in Q8, do you think the tree that you found with the original data is significant?
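The randomization test in Step 5 is a permutation test: shuffling TREATMENT_RESPONSE breaks any real link between the genes and the outcome while keeping the number of Success and Failure records unchanged. The Python sketch below covers only the bookkeeping, under the assumption that tree building and the leave-one-out error are still done in CART (or J4.8); the helper names randomized_labels and empirical_p_value are hypothetical. The (1 + k) / (1 + n) p-value convention is one common choice, not something the assignment prescribes.

```python
import random
from typing import List, Sequence


def randomized_labels(labels: Sequence[str], n_runs: int = 10, seed: int = 0) -> List[List[str]]:
    """Return n_runs random permutations of the class labels.

    Permuting (rather than drawing new labels) keeps the number of
    Success/Failure records the same as in the original data.
    """
    rng = random.Random(seed)  # fixed seed so the runs can be reproduced
    runs = []
    for _ in range(n_runs):
        shuffled = list(labels)  # copy so the original labels stay intact
        rng.shuffle(shuffled)
        runs.append(shuffled)
    return runs


def empirical_p_value(observed_error: float, random_errors: Sequence[float]) -> float:
    """Share of runs whose error is at least as good (low) as the observed one.

    Counts the observed run itself, i.e. (1 + k) / (1 + n), so the
    estimate is never exactly zero.
    """
    k = sum(1 for e in random_errors if e <= observed_error)
    return (1 + k) / (1 + len(random_errors))
```

Each shuffled list replaces the TREATMENT_RESPONSE column before re-running the tree; the resulting error rates go into random_errors. With only 10 randomizations, the smallest value this estimate can take is 1/11 (about 0.09).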