UIST10full

advertisement

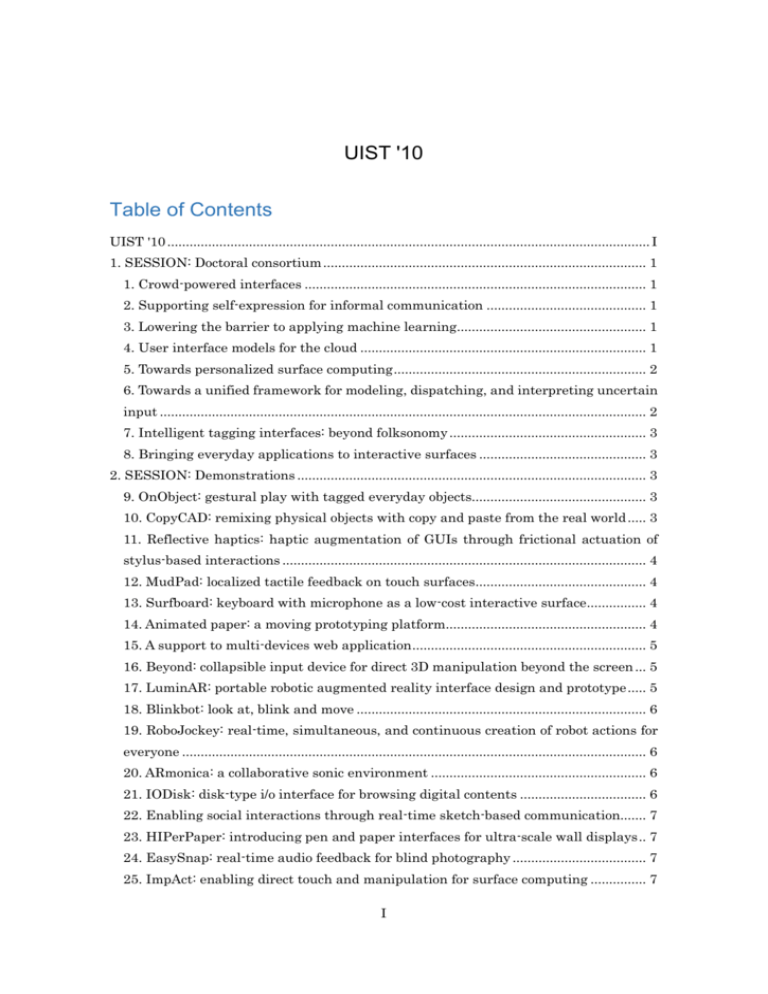

UIST '10 Table of Contents

1. SESSION: Doctoral consortium
1. Crowd-powered interfaces
2. Supporting self-expression for informal communication
3. Lowering the barrier to applying machine learning
4. User interface models for the cloud
5. Towards personalized surface computing
6. Towards a unified framework for modeling, dispatching, and interpreting uncertain input
7. Intelligent tagging interfaces: beyond folksonomy
8. Bringing everyday applications to interactive surfaces

2. SESSION: Demonstrations
9. OnObject: gestural play with tagged everyday objects
10. CopyCAD: remixing physical objects with copy and paste from the real world
11. Reflective haptics: haptic augmentation of GUIs through frictional actuation of stylus-based interactions
12. MudPad: localized tactile feedback on touch surfaces
13. Surfboard: keyboard with microphone as a low-cost interactive surface
14. Animated paper: a moving prototyping platform
15. A support to multi-devices web application
16. Beyond: collapsible input device for direct 3D manipulation beyond the screen
17. LuminAR: portable robotic augmented reality interface design and prototype
18. Blinkbot: look at, blink and move
19. RoboJockey: real-time, simultaneous, and continuous creation of robot actions for everyone
20. ARmonica: a collaborative sonic environment
21. IODisk: disk-type i/o interface for browsing digital contents
22. Enabling social interactions through real-time sketch-based communication
23. HIPerPaper: introducing pen and paper interfaces for ultra-scale wall displays
24. EasySnap: real-time audio feedback for blind photography
25. ImpAct: enabling direct touch and manipulation for surface computing
26. The multiplayer: multi-perspective social video navigation

3. SESSION: Posters
27. The enhancement of hearing using a combination of sound and skin sensation to the pinna
28. What can internet search engines "suggest" about the usage and usability of popular desktop applications?
29. Interacting with live preview frames: in-picture cues for a digital camera interface
30. HyperSource: bridging the gap between source and code-related web sites
31. Shoe-shaped i/o interface
32. Development of the motion-controllable ball
33. PETALS: a visual interface for landmine detection
34. Pinstripe: eyes-free continuous input anywhere on interactive clothing
35. Kinetic tiles: modular construction units for interactive kinetic surfaces
36. Stacksplorer: understanding dynamic program behavior
37. Memento: unifying content and context to aid webpage re-visitation
38. Interactive calibration of a multi-projector system in a video-wall multi-touch environment
39. CodeGraffiti: communication by sketching for pair programmers
40. Mouseless
41. Anywhere touchtyping: text input on arbitrary surface using depth sensing
42. Using temporal video annotation as a navigational aid for video browsing
43. Tweeting halo: clothing that tweets
44. DoubleFlip: a motion gesture delimiter for interaction
45. QWIC: performance heuristics for large scale exploratory user interfaces
46. What interfaces mean: a history and sociology of computer windows
47. Exploring pen and paper interaction with high-resolution wall displays
48. Enabling tangible interaction on capacitive touch panels
49. MobileSurface: interaction in the air for mobile computing

1. SESSION: Doctoral consortium

1. Crowd-powered interfaces
Michael S. Bernstein
We investigate crowd-powered interfaces: interfaces that embed human activity to support high-level conceptual activities such as writing, editing and question-answering. For example, a crowd-powered interface using paid crowd workers can compute a series of textual cuts and edits to a paragraph, then provide the user with an interface to condense his or her writing. We map out the design space of interfaces that depend on outsourced, friendsourced, and data-mined resources, and report on designs for each of these. We discuss technical and motivational challenges inherent in human-powered interfaces.

2. Supporting self-expression for informal communication
Lisa G. Cowan
Mobile phones are becoming the central tools for communicating and can help us keep in touch with friends and family on-the-go. However, they can also place high demands on attention and constrain interaction. My research concerns how to design communication mechanisms that mitigate these problems to support self-expression for informal communication on mobile phones. I will study how people communicate with camera-phone photos, paper-based sketches, and projected information and how this communication impacts social practices.

3. Lowering the barrier to applying machine learning
Kayur Patel
Machine learning algorithms are key components in many cutting-edge applications of computation.
However, the full potential of machine learning has not been realized because using machine learning is hard, even for otherwise tech-savvy developers. This is because developing with machine learning is different from normal programming. My thesis is that developers applying machine learning need new general-purpose tools that provide structure for common processes and common pipelines while remaining flexible to account for variability in problems. In this paper, I describe my efforts to understand the difficulties that developers face when applying machine learning. I then describe Gestalt, a general-purpose integrated development environment designed for the application of machine learning. Finally, I describe work on developing a pattern language for building machine learning systems and creating new techniques that help developers understand the interaction between their data and learning algorithms.

4. User interface models for the cloud
Hubert Pham
The current desktop metaphor is unsuitable for the coming age of cloud-based applications. The desktop was developed in an era that was focused on local resources, and consequently its gestures, semantics, and security model reflect heavy reliance on hierarchy and physical locations. This paper proposes a new user interface model that accounts for cloud applications, incorporating representations of people and new gestures for sharing and access, while minimizing the prominence of location. The model's key feature is a lightweight mechanism to group objects for resource organization, sharing, and access control, towards the goal of providing simple semantics for a wide range of tasks, while also achieving security through greater usability.

5. Towards personalized surface computing
Dominik Schmidt
With recent progress in the field of surface computing it becomes foreseeable that interactive surfaces will turn into a commodity in the future, ubiquitously integrated into our everyday environments.
At the same time, we can observe a trend towards personal data and whole applications being accessible over the Internet, anytime from anywhere. We envision a future where interactive surfaces surrounding us serve as powerful portals to access these kinds of data and services. In this paper, we contribute two novel interaction techniques supporting parts of this vision: first, HandsDown, a biometric user identification approach based on hand contours and, second, PhoneTouch, a novel technique for using mobile phones in conjunction with interactive surfaces.

6. Towards a unified framework for modeling, dispatching, and interpreting uncertain input
Julia Schwarz
Many new input technologies (such as touch and voice) hold the promise of more natural user interfaces. However, many of these technologies create inputs with some uncertainty. Unfortunately, conventional infrastructure lacks a method for easily handling uncertainty, and as a result input produced by these technologies is often converted to conventional events as quickly as possible, leading to a stunted interactive experience. Our ongoing work aims to design a unified framework for modeling uncertain input and dispatching it to interactors. This should allow developers to easily create interactors which can interpret uncertain input, give the user appropriate feedback, and accurately resolve any ambiguity. This abstract presents an overview of the design of a framework for handling input with uncertainty and describes topics we hope to pursue in future work. We also give an example of how we built highly accurate touch buttons using our framework. For examples of what interactors can be built and a more detailed description of our framework we refer the reader to [8].

7. Intelligent tagging interfaces: beyond folksonomy
Jesse Vig
This paper summarizes our work on using tags to broaden the dialog between a recommender system and its users.
We present two tagging applications that enrich this dialog: tagsplanations are tag-based explanations of recommendations provided by a system to its users, and Movie Tuner is a conversational recommender system that enables users to provide feedback on movie recommendations using tags. We discuss the design of both systems and the experimental methodology used to evaluate the design choices.

8. Bringing everyday applications to interactive surfaces
Malte Weiss
This paper presents ongoing work that intends to simplify the introduction of everyday applications to interactive tabletops. SLAP Widgets bring tangible general-purpose widgets to tabletops while providing the flexibility of on-screen controls. Madgets maintain consistency between physical controls and their digital state. BendDesk represents our vision of a multi-touch enabled office environment. Our pattern language captures knowledge for the design of interactive tabletops. For each project, we describe its technical background, present the current state of research, and discuss future work.

2. SESSION: Demonstrations

9. OnObject: gestural play with tagged everyday objects
Keywon Chung, Michael Shilman, Chris Merrill, Hiroshi Ishii
Many Tangible User Interface (TUI) systems employ sensor-equipped physical objects. However, they do not easily scale to users' actual environments; most everyday objects lack the necessary hardware, and modification requires hardware and software development by skilled individuals. This limits TUI creation by end users, resulting in inflexible interfaces in which the mapping of sensor input and output events cannot be easily modified to reflect the end user's wishes and circumstances. We introduce OnObject, a small device worn on the hand, which can program physical objects to respond to a set of gestural triggers.
Users attach RFID tags to situated objects, grab them by the tag, and program their responses to grab, release, shake, swing, and thrust gestures using a built-in button and a microphone. In this paper, we demonstrate how novice end users including preschool children can instantly create engaging gestural object interfaces with sound feedback from toys, drawings, or clay.

10. CopyCAD: remixing physical objects with copy and paste from the real world
Sean Follmer, David Carr, Emily Lovell, Hiroshi Ishii
This paper introduces a novel technique for integrating geometry from physical objects into computer aided design (CAD) software. We allow users to copy arbitrary real world object geometry into 2D CAD designs at scale through the use of a camera/projector system. This paper also introduces a system, CopyCAD, that uses this technique, and augments a computer numerical control (CNC) milling machine. CopyCAD gathers input from physical objects, sketches and interactions directly on a milling machine, allowing novice users to copy parts of real world objects, modify them and then create a new physical part.

11. Reflective haptics: haptic augmentation of GUIs through frictional actuation of stylus-based interactions
Fabian Hemmert, Alexander Müller, Ron Jagodzinski, Götz Wintergerst, Gesche Joost
In this paper, we present a novel system for stylus-based GUI interactions: simulated physics through actuated frictional properties of a touch screen stylus. We present a prototype that implements a series of principles which we propose for the design of frictionally augmented GUIs. We discuss how such actuation could add value to stylus-controlled GUIs by enabling prioritized content, allowing for inherent confirmation, and leveraging manual dexterity.

12. MudPad: localized tactile feedback on touch surfaces
Yvonne Jansen, Thorsten Karrer, Jan Borchers
We present MudPad, a system that is capable of localized active haptic feedback on multitouch surfaces. An array of electromagnets locally actuates a tablet-sized overlay containing magnetorheological (MR) fluid. The reaction time of the fluid is fast enough for real-time feedback ranging from static levels of surface softness to a broad set of dynamically changeable textures. As each area can be addressed individually, the entire visual interface can be enriched with a multi-touch haptic layer that conveys semantic information as the appropriate counterpart to multi-touch input.

13. Surfboard: keyboard with microphone as a low-cost interactive surface
Jun Kato, Daisuke Sakamoto, Takeo Igarashi
We introduce a technique to detect simple gestures of "surfing" (moving a hand horizontally) on a standard keyboard by analyzing recorded sounds in real-time with a microphone attached close to the keyboard. This technique allows the user to maintain a focus on the screen while surfing on the keyboard. Since this technique uses a standard keyboard without any modification, the user can take full advantage of the input functionality and tactile quality of his favorite keyboard supplemented with our interface.

14. Animated paper: a moving prototyping platform
Naoya Koizumi, Kentaro Yasu, Angela Liu, Maki Sugimoto, Masahiko Inami
We have developed a novel prototyping method that utilizes animated paper, a versatile platform created from paper and shape memory alloy (SMA), which is easy to control using a range of different energy sources from sunlight to lasers. We have further designed a laser point tracking system to improve the precision of the wireless control system by embedding retro-reflective material on the paper to act as light markers.
It is possible to change the movement of paper prototypes by varying where to mount the SMA or how to heat it, creating a wide range of applications.

15. A support to multi-devices web application
Xavier Le Pallec, Raphaël Marvie, José Rouillard, Jean-Claude Tarby
Programming an application which uses interactive devices located on different terminals is not easy. Programming such applications with standard Web technologies (HTTP, Javascript, Web browser) is even more difficult. However, Web applications have interesting properties like running on very different terminals, the lack of a specific installation step, and the ability to evolve the application code at runtime. Our demonstration presents a support for designing multi-devices Web applications. After introducing the context of this work, we briefly describe some problems related to the design of multi-devices web applications. Then, we present the toolkit we have implemented to help the development of applications based upon distant interactive devices.

16. Beyond: collapsible input device for direct 3D manipulation beyond the screen
Jinha Lee, Surat Teerapittayanon, Hiroshi Ishii
What would it be like to reach into a screen and manipulate or design virtual objects as in the real world? We present Beyond, a collapsible input device for direct 3D manipulation. When pressed against a screen, Beyond collapses in the physical world and extends into the digital space of the screen, such that users can perceive that they are inserting the tool into the virtual space. Beyond allows users to directly interact with 3D media, avoiding separation between the users' input and the displayed 3D graphics without requiring special glasses or wearables, thereby enabling users to select, draw, and sculpt in 3D virtual space unfettered. We describe detailed interaction techniques, implementation and application scenarios focused on 3D geometric design and prototyping.

17. LuminAR: portable robotic augmented reality interface design and prototype
Natan Linder, Pattie Maes
In this paper we introduce LuminAR: a prototype for a new portable and compact projector-camera system designed to use the traditional incandescent bulb interface as a power source, and a robotic desk lamp that carries it, giving it dynamic motion capabilities. We are exploring how the LuminAR system embodied in a familiar form factor of a classic Angle Poise lamp may evolve into a new class of robotic, digital information devices.

18. Blinkbot: look at, blink and move
Pranav Mistry, Kentaro Ishii, Masahiko Inami, Takeo Igarashi
In this paper we present BlinkBot, a hands-free input interface to control and command a robot. BlinkBot explores the natural modality of gaze and blink to direct a robot to move an object from one location to another. The paper also describes the hardware and software implementation of the prototype system.

19. RoboJockey: real-time, simultaneous, and continuous creation of robot actions for everyone
Takumi Shirokura, Daisuke Sakamoto, Yuta Sugiura, Tetsuo Ono, Masahiko Inami, Takeo Igarashi
We developed a RoboJockey (Robot Jockey) interface for coordinating robot actions, such as dancing, similar to "Disc jockey" and "Video jockey". The system enables a user to choreograph a dance for a robot to perform by using a simple visual language. Users can coordinate humanoid robot actions with a combination of arm and leg movements. Every action is automatically performed to background music and beat. The RoboJockey will give a new entertainment experience with robots to the end-users.

20. ARmonica: a collaborative sonic environment
Mengu Sukan, Ohan Oda, Xiang Shi, Manuel Entrena, Shrenik Sadalgi, Jie Qi, Steven Feiner
ARmonica is a 3D audiovisual augmented reality environment in which players can position and edit virtual bars that play sounds when struck by virtual balls launched under the influence of physics.
Players experience ARmonica through head-tracked head-worn displays and tracked hand-held ultramobile personal computers, and interact through tracked Wii remotes and touch-screen taps. The goal is for players to collaborate in the creation and editing of an evolving sonic environment. Research challenges include supporting walk-up usability without sacrificing deeper functionality.

21. IODisk: disk-type i/o interface for browsing digital contents
Koji Tsukada, Keisuke Kambara
We propose a disk-type I/O interface, IODisk, which helps users browse various digital contents intuitively in their living environment. IODisk mainly consists of a force-feedback mechanism integrated in the rotation axis of a disk. Users can control the playing speed/direction of contents (e.g., videos or picture slideshows) in proportion to the rotational speed/direction of the disk. We developed a prototype system and some applications.

22. Enabling social interactions through real-time sketch-based communication
Nadir Weibel, Lisa G. Cowan, Laura R. Pina, William G. Griswold, James D. Hollan
We present UbiSketch, a tool for ubiquitous real-time sketch-based communication. We describe the UbiSketch system, which enables people to create doodles, drawings, and notes with digital pens and paper and publish them quickly and easily via their mobile phones to social communication channels, such as Facebook, Twitter, and email. The natural paper-based social interaction enabled by UbiSketch has the potential to enrich current mobile communication practices.

23. HIPerPaper: introducing pen and paper interfaces for ultra-scale wall displays
Nadir Weibel, Anne Marie Piper, James D. Hollan
While recent advances in graphics, display, and computer hardware support ultra-scale visualizations of a tremendous number of data sets, mechanisms for interacting with this information on large high-resolution wall displays are still under investigation.
Different issues in terms of user interface, ergonomics, multi-user interaction, and system flexibility arise while facing ultra-scale wall displays and none of the introduced approaches fully address them. We introduce HIPerPaper, a novel digital pen and paper interface that enables natural interaction with the HIPerSpace wall, a 31.8 by 7.5 foot tiled wall display of 268,720,000 pixels. HIPerPaper provides a flexible, portable, and inexpensive medium for interacting with large high-resolution wall displays.

24. EasySnap: real-time audio feedback for blind photography
Samuel White, Hanjie Ji, Jeffrey P. Bigham
This demonstration presents EasySnap, an application that enables blind and low-vision users to take high-quality photos by providing real-time audio feedback as they point their existing camera phones. Users can readily follow the audio instructions to adjust their framing, zoom level and subject lighting appropriately. Real-time feedback is achieved on current hardware using computer vision in conjunction with use patterns drawn from current blind photographers.

25. ImpAct: enabling direct touch and manipulation for surface computing
Anusha Withana, Makoto Kondo, Gota Kakehi, Yasutoshi Makino, Maki Sugimoto, Masahiko Inami
This paper explores direct touch and manipulation techniques for surface computing platforms using a special force feedback stylus named ImpAct (Immersive Haptic Augmentation for Direct Touch). The proposed haptic stylus can change its length when it is pushed against a display surface. Correspondingly, a virtual stem is rendered inside the display area so that the user perceives the stylus as immersed in the digital space below the screen. We propose ImpAct as a tool to probe and manipulate digital objects in the shallow region beneath the display surface. ImpAct creates a direct touch interface by providing kinesthetic haptic sensations along with continuous visual contact to digital objects below the screen surface.

26. The multiplayer: multi-perspective social video navigation
Zihao Yu, Nicholas Diakopoulos, Mor Naaman
We present a multi-perspective video "multiplayer" designed to organize social video aggregated from online sites like YouTube. Our system automatically time-aligns videos using audio fingerprinting, thus bringing them into a unified temporal frame. The interface utilizes social metadata to visually aid navigation and cue users to more interesting portions of an event. We provide details about the visual and interaction design rationale of the multiplayer.

3. SESSION: Posters

27. The enhancement of hearing using a combination of sound and skin sensation to the pinna
Kanako Aou, Asuka Ishii, Masahiro Furukawa, Shogo Fukushima, Hiroyuki Kajimoto
Recent development in sound technologies has enabled the realistic replay of real-life sounds. Thanks to these technologies, we can experience a virtual real sound environment. However, there are other types of sound technologies that enhance reality, such as acoustic filters, sound effects, and background music. They are quite effective if carefully prepared, but they also alter the sound itself. Consequently, sound is simultaneously used to reconstruct realistic environments and to enhance emotions, which are actually incompatible functions. With this background, we focused on using tactile modality to enhance emotions and propose a method that enhances the sound experience by a combination of sound and skin sensation to the pinna (earlobe). In this paper, we evaluate the effectiveness of this method.

28. What can internet search engines "suggest" about the usage and usability of popular desktop applications?
Adam Fourney, Richard Mann, Michael Terry
In this paper, we show how Internet search query logs can yield rich, ecologically valid data sets describing the common tasks and issues that people encounter when using software on a day-to-day basis. These data sets can feed directly into standard usability practices.
We address challenges in collecting, filtering, and summarizing queries, and show how data can be collected at very low cost, even without direct access to raw query logs.

29. Interacting with live preview frames: in-picture cues for a digital camera interface
Steven R. Gomez
We present a new interaction paradigm for digital cameras aimed at making interactive imaging algorithms accessible on these devices. In our system, the user creates visual cues in front of the lens during the live preview frames that are continuously processed before the snapshot is taken. These cues are recognized by the camera's image processor to control the lens or other settings. We design and analyze vision-based camera interactions, including focus and zoom controls, and argue that the vision-based paradigm offers a new level of photographer control needed for the next generation of digital cameras.

30. HyperSource: bridging the gap between source and code-related web sites
Björn Hartmann, Mark Dhillon
Programmers frequently use the Web while writing code: they search for libraries, code examples, tutorials, documentation, and engage in discussions on Q&A forums. This link between code and visited Web pages largely remains implicit today. Connecting source code and (selective) browsing history can help programmers maintain context, reduce the cost of Web content re-retrieval, and enhance understanding when code is shared. This paper introduces HyperSource, an IDE augmentation that associates browsing histories with source code edits. HyperSource comprises a browser extension that logs visited pages; a novel source document format that maps visited pages to individual characters; and a user interface that enables interaction with these histories.

31. Shoe-shaped i/o interface
Hideaki Higuchi, Takuya Nojima
In this research, we propose a shoe-shaped I/O interface. The benefits to users of wearable devices are significantly reduced if they are aware of them.
Wearable devices should have the ability to be worn without requiring any attention from the user. However, previous wearable systems required users to be careful and be aware of wearing or carrying them. To solve this problem, we propose a shoe-shaped I/O interface. By wearing the shoes throughout the day, users soon cease to be conscious of them. Electromechanical devices are potentially easy to install in shoes. This report describes the concept of a shoe-shaped I/O interface, the development of a prototype system, and possible applications.

32. Development of the motion-controllable ball
Takashi Ichikawa, Takuya Nojima
In this report, we propose a novel ball type interactive interface device. Balls are one of the most important pieces of equipment used for entertainment and sports. Their motion guides a player's response in terms of, for example, a feint or similar movement. Many kinds of breaking ball throws have been developed for various sports (e.g., baseball). However, acquiring the skill to appropriately react to these breaking balls is often hard to achieve and requires long-term training. Many researchers focus on the ball itself and have developed interactive balls with visual and acoustic feedback. However, these balls do not have the ability for motion control. In this paper, we introduce a ball-type motion control interface device. It is composed of a ball and an air-pressure tank to change its vector using gas ejection. We conducted an experiment that measures the ball's flight path while subjected to gas ejection and the results showed that the prototype system had enough power to change the ball's vector while flying.

33. PETALS: a visual interface for landmine detection
Lahiru G. Jayatilaka, Luca F. Bertuccelli, James Staszewski, Krzysztof Z. Gajos
Post-conflict landmines have serious humanitarian repercussions: landmines cost lives, limbs and land.
The primary method used to locate these buried devices relies on the inherently dangerous and difficult task of a human listening to audio feedback from a metal detector. Researchers have previously hypothesized that expert operators respond to these challenges by building mental patterns with metal detectors through the identification of object-dependent spatially distributed metallic fields. This paper presents the preliminary stages of a novel interface, the Pattern Enhancement Tool for Assisting Landmine Sensing (PETALS), that aims to assist with building and visualizing these patterns, rather than relying on memory alone. Simulated demining experiments show that the experimental interface decreases classification error from 23% to 5% and reduces localization error by 54%, demonstrating the potential for PETALS to improve novice deminer safety and efficiency.

34. Pinstripe: eyes-free continuous input anywhere on interactive clothing
Thorsten Karrer, Moritz Wittenhagen, Florian Heller, Jan Borchers
We present Pinstripe, a textile user interface element for eyes-free, continuous value input on smart garments that uses pinching and rolling a piece of cloth between your fingers. Input granularity can be controlled by the amount of cloth pinched. Pinstripe input elements are invisible, and can be included across large areas of a garment. Pinstripe thus addresses several problems previously identified in the placement and operation of textile UI elements on smart clothing.

35. Kinetic tiles: modular construction units for interactive kinetic surfaces
Hyunjung Kim, Woohun Lee
We propose and demonstrate Kinetic Tiles, modular construction units for Interactive Kinetic Surfaces (IKSs). We aimed to design Kinetic Tiles to be accessible and available so that users can construct IKSs easily and rapidly. The components of Kinetic Tiles are inexpensive and easily available.
In addition, the use of magnetic force enables the separation of the surface material and actuators so that users only interact with the tile modules as if constructing a tile mosaic. Kinetic Tiles can be utilized as a new design and architectural material that allows the surfaces of everyday objects and spaces to convey ambient and pleasurable kinetic expressions.

36. Stacksplorer: understanding dynamic program behavior
Jan-Peter Krämer, Thorsten Karrer, Jonathan Diehl, Jan Borchers
To thoroughly comprehend application behavior, programmers need to understand the interactions of objects at runtime. Today, these interactions are often poorly visualized in common IDEs except during debugging. Stacksplorer allows visualizing and traversing potential call stacks in an application even when it is not running by showing callers and called methods in two columns next to the code editor. The relevant information is gathered from the source code automatically.

37. Memento: unifying content and context to aid webpage re-visitation
Chinmay E. Kulkarni, Santosh Raju, Raghavendra Udupa
While users often revisit pages on the Web, tool support for such re-visitation is still lacking. Current tools (such as browser histories) only provide users with basic information such as the date of the last visit and title of the page visited. In this paper, we describe a system that provides users with descriptive topic-phrases that aid refinding. Unlike prior work, our system considers both the content of a webpage and the context in which the page was visited. Preliminary evaluation of this system suggests users find this approach of combining content with context useful.

38. Interactive calibration of a multi-projector system in a video-wall multi-touch environment
Alessandro Lai, Alessandro Soro, Riccardo Scateni
Wall-sized interactive displays are gaining increasing attention as a valuable tool for multiuser applications, but typically require the adoption of tiled projectors.
Projectors tend to display deformed images, due to lens distortion and/or imperfection, and because they are almost never perfectly aligned to the projection surface. Multi-projector videowalls are typically bound to the video architecture and to the specific application to be displayed. This makes it harder to develop interactive applications, in which fine-grained control of the coordinate transformations (to and from user space and model space) is required. This paper presents a solution to such issues: implementing the blending functionalities at an application level allows seamless development of multidisplay interactive applications with multi-touch capabilities. The description of the multi-touch interaction, guaranteed by an array of cameras on the baseline of the wall, is beyond the scope of this work, which focuses on calibration.

39. CodeGraffiti: communication by sketching for pair programmers
Leonhard Lichtschlag, Jan Borchers
In pair programming, two software developers work on their code together in front of a single workstation, one typing, the other commenting. This frequently involves pointing to code on the screen, annotating it verbally, or sketching on paper or a nearby whiteboard, little of which is captured in the source code for later reference. CodeGraffiti lets pair programmers simultaneously write their code, and annotate it with ephemeral and persistent sketches on screen using touch or pen input. We integrated CodeGraffiti into the Xcode software development environment, to study how these techniques may improve the pair programming workflow.

40. Mouseless
Pranav Mistry, Patricia Maes
In this short paper we present Mouseless - a novel input device that provides the familiarity of interaction of a physical computer mouse without actually requiring a real hardware mouse. The paper also briefly describes the hardware and software implementation of the prototype system and discusses the interactions supported.

41. Anywhere touchtyping: text input on arbitrary surface using depth sensing
Adiyan Mujibiya, Takashi Miyaki, Jun Rekimoto
In this paper, we propose a virtual keyboard system that enables touch typing on an arbitrary surface using depth sensing. Keystroke events are detected by matching against a 3-dimensional hand appearance database, combined with sensing of the fingertip's touch on the surface. Our prototype system acquires a hand posture depth map by implementing a phase shift algorithm for Digital Light Processor (DLP) fringe projection on an arbitrary flat surface. The system robustly detects hand postures on the sensed surface without requiring the hands to be aligned to a virtual keyboard frame. The keystroke feedback is the physical touch on the surface, so no special hardware needs to be worn. The system runs in real time at an average of 20 frames per second.

42. Using temporal video annotation as a navigational aid for video browsing
Stefanie Müller, Gregor Miller, Sidney Fels
Video is a complex information space that requires advanced navigational aids for effective browsing. The increasing number of temporal video annotations offers new opportunities to provide video navigation according to a user's needs. We present a novel video browsing interface called TAV (Temporal Annotation Viewing) that provides the user with a visual overview of temporal video annotations. TAV enables the user to quickly determine the general content of a video, the location of scenes of interest and the type of annotations that are displayed while watching the video. An ongoing user study will evaluate our novel approach.

43. Tweeting halo: clothing that tweets
Wai Shan (Florence) Ng, Ehud Sharlin
People often like to express their unique personalities, interests, and opinions. This poster explores new ways that allow a user to express her feelings in both physical and virtual settings.
With our Tweeting Halo, we demonstrate how a wearable lightweight projector can be used for self-expression very much like a hairstyle, makeup or a T-shirt imprint. Our current prototype allows a user to post a message physically above their head and virtually on Twitter at the same time. We also explore simple ways that will allow physical followers of the Tweeting Halo user to easily become virtual followers by simply taking a snapshot of her projected tweet with a mobile device such as a camera phone. In this extended abstract we present our current prototype, and the results of a design critique we performed using it.

44. DoubleFlip: a motion gesture delimiter for interaction
Jaime Ruiz, Yang Li
In order to use motion gestures with mobile devices it is imperative that the device be able to distinguish between input motion and everyday motion. In this abstract we present DoubleFlip, a unique motion gesture designed to act as an input delimiter for mobile motion gestures. We demonstrate that the DoubleFlip gesture is extremely resistant to false positive conditions, while still achieving high recognition accuracy. Since DoubleFlip is easy to perform and less likely to be accidentally invoked, it provides an always-active input event for mobile interaction.

45. QWIC: performance heuristics for large scale exploratory user interfaces
Daniel A. Smith, Joe Lambert, mc schraefel, David Bretherton
Faceted browsers offer an effective way to explore relationships and build new knowledge across data sets. So far, web-based faceted browsers have been hampered by limited feature performance and scale. QWIC, Quick Web Interface Control, describes a set of design heuristics to address performance speed both at the interface and the backend to operate on large-scale sources.

46. What interfaces mean: a history and sociology of computer windows
Louis-Jean Teitelbaum
This poster presents a cursory look at the history of windows in Graphical User Interfaces.
It examines the controversy between tiling and overlapping window managers and explains that controversy's sociological importance: windows are control devices, enabling their users to manage their activity and attention. It then explores a few possible reasons for the relative disappearance of windowing in recent computing devices. It concludes with a recapitulative typology.

47. Exploring pen and paper interaction with high-resolution wall displays
Nadir Weibel, Anne Marie Piper, James D. Hollan
We introduce HIPerPaper, a novel digital pen and paper interface that enables natural interaction with a 31.8 by 7.5 foot tiled wall display of 268,720,000 pixels. HIPerPaper provides a flexible, portable, and inexpensive medium for interacting with large high-resolution wall displays. While the size and resolution of such displays allow visualization of data sets of a scale not previously possible, mechanisms for interacting with wall displays remain challenging. HIPerPaper enables multiple concurrent users to select, move, scale, and rotate objects on a high-dimension wall display.

48. Enabling tangible interaction on capacitive touch panels
Neng-Hao Yu, Li-Wei Chan, Lung-Pan Cheng, Mike Y. Chen, Yi-Ping Hung
We propose two approaches to sense tangible objects on capacitive touch screens, which are used in off-the-shelf multi-touch devices such as Apple iPad, iPhone, and 3M's multi-touch displays. We seek approaches that do not require modifications to the panels: spatial tag and frequency tag. A spatial tag is similar to the fiducial tags used in tangible tabletop surface interaction, and uses multi-point, geometric patterns to encode object IDs. A frequency tag simulates high-frequency touches in the time domain to encode object IDs, using modulation circuits embedded inside tangible objects to simulate high-speed touches at varying frequencies. We will show several demo applications. The first is a combined tangible + touch input system.
This explores how tangible inputs (e.g., pen, eraser, etc.) and some simple gestures work together on capacitive touch panels.

49. MobileSurface: interaction in the air for mobile computing
Ji Zhao, Hujia Liu, Chunhui Zhang, Zhengyou Zhang
We describe a virtual interactive surface technology based on a projector-camera system connected to a mobile device. This system, named MobileSurface, can project images on any free surface and enable interaction in the air within the projection area. The projector used in the system scans a laser beam very quickly across the projection area to produce a stable image at 60 fps. Camera-projector synchronization is applied to obtain the image of a designated scanning line, so our system can project what is perceived as a stable image onto the display surface while simultaneously working as a structured light 3D scanning system.
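
As a rough illustration of the camera-projector synchronization mentioned in the MobileSurface abstract, the sketch below estimates how long after the start of a frame a given scan line would be drawn. Only the 60 fps figure comes from the abstract. The function name `scanline_delay`, the 480-line frame in the usage example, and the idealized raster model (lines drawn top to bottom at a uniform rate, with no blanking interval) are assumptions made for illustration and are not taken from the authors' system.

```python
def scanline_delay(line: int, total_lines: int, fps: float = 60.0) -> float:
    """Return seconds from frame start until `line` (0-based) begins.

    Assumes an idealized raster: lines are drawn in order at a uniform
    rate and there is no blanking interval (a simplifying assumption).
    """
    if total_lines <= 0:
        raise ValueError("total_lines must be positive")
    if not 0 <= line < total_lines:
        raise ValueError("line must be in [0, total_lines)")
    frame_period = 1.0 / fps  # duration of one full frame, in seconds
    return frame_period * line / total_lines


if __name__ == "__main__":
    # Hypothetical 480-line frame; delay before the middle line is drawn.
    delay = scanline_delay(line=240, total_lines=480)
    print(f"trigger camera {delay * 1e3:.3f} ms after frame start")
```

Under these assumptions, a camera exposure triggered at the returned delay would coincide with the chosen scan line. A real laser-scanning projector would also need its blanking and retrace timing taken into account.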