Chapter 3 Regular languages and grammars


Section 3.3 Regular grammars

As we will show shortly, regular grammars are a third method of describing regular languages.

Recall that a grammar (V, T, P, S) is used to generate strings in a language by a process called a derivation. Basically, beginning with S, we repeatedly replace a variable by the right-hand side of one of its rules until only terminal symbols remain.

Definition 3.3 A grammar G = (V, T, S, P) is said to be right-linear if all productions are of the form A → xB or A → x, where A, B ∈ V and x ∈ T*. A grammar is said to be left-linear if all productions are of the form A → Bx or A → x. A regular grammar is one that is either right-linear or left-linear. Note that A → λ is a special case of the A → x rule, since T* contains λ.

Also note that a regular grammar must be strictly right-linear or strictly left-linear and may not have productions of both types.
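To make the definition concrete, here is a minimal Python sketch that classifies a grammar. It assumes our own representation, not the text's: each production is a (head, body) pair, the body is a tuple of symbols with λ written as the empty tuple, and the set of variables is passed in explicitly. The function names are hypothetical.

```python
# Assumed representation: a production is (head, body), where body is a
# tuple of symbols and λ is the empty tuple ().

def var_positions(body, variables):
    """Indices at which variables occur in a right-hand side."""
    return [i for i, s in enumerate(body) if s in variables]

def is_right_linear(productions, variables):
    """Every rule is A -> x or A -> xB: at most one variable, at the far right."""
    return all(var_positions(body, variables) in ([], [len(body) - 1])
               for _, body in productions)

def is_left_linear(productions, variables):
    """Every rule is A -> x or A -> Bx: at most one variable, at the far left."""
    return all(var_positions(body, variables) in ([], [0])
               for _, body in productions)

def is_regular(productions, variables):
    """Regular means strictly right-linear or strictly left-linear (no mixing)."""
    return (is_right_linear(productions, variables)
            or is_left_linear(productions, variables))

# Odd-length strings over {a, b} ending in b: S -> b | bA | aA, A -> aS | bS
ODD_B = [("S", ("b",)), ("S", ("b", "A")), ("S", ("a", "A")),
         ("A", ("a", "S")), ("A", ("b", "S"))]
# Mixing A -> aB with A -> Cb is not allowed in a regular grammar
MIXED = [("A", ("a", "B")), ("A", ("C", "b"))]

print(is_regular(ODD_B, {"S", "A"}))        # True
print(is_regular(MIXED, {"A", "B", "C"}))   # False
```

Note that a rule such as A → B (x = λ) passes both tests, which matches the definition: it is of the form A → xB and A → Bx at the same time.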

Expressed another way, each production has at most one variable on the right side, and that variable must be either the leftmost or the rightmost symbol. We may also, without loss of generality, assume that there is at most one terminal symbol on the right-hand side of a production. To see that this is not really a restriction, suppose we have a production like S → abbA. We could introduce two new variables B1 and B2 and replace the rule by the following three rules:

S → aB1

B1 → bB2

B2 → bA
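The splitting step can be sketched in Python as follows. This is a hedged illustration: the (head, body) tuple representation and the name split_right_linear are our own, and fresh variables are simply named prefix1, prefix2, and so on, mirroring B1 and B2 above. The sketch assumes those names are not already in use in the grammar.

```python
def split_right_linear(head, body, variables, prefix):
    """Split head -> t1 t2 ... tk [B] into rules with at most one terminal each.

    body is a tuple of symbols; an optional variable may appear only at the end.
    New variables are named prefix1, prefix2, ... (assumed to be unused names).
    """
    if body and body[-1] in variables:
        terminals, last = body[:-1], body[-1]
    else:
        terminals, last = body, None
    if len(terminals) <= 1:
        return [(head, body)]                # already at most one terminal
    rules, current = [], head
    for i, t in enumerate(terminals[:-1], start=1):
        fresh = f"{prefix}{i}"
        rules.append((current, (t, fresh)))  # current -> t fresh
        current = fresh
    tail = (terminals[-1],) if last is None else (terminals[-1], last)
    rules.append((current, tail))            # last terminal, then B if present
    return rules

for lhs, rhs in split_right_linear("S", ("a", "b", "b", "A"), {"S", "A"}, "B"):
    print(lhs, "->", "".join(rhs))
# S -> aB1
# B1 -> bB2
# B2 -> bA
```

The same function also handles a rule with no variable, such as A → ab, which becomes A → aB1 and B1 → b.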

Convention: For the regular grammars you construct, the right-hand side will be a single terminal followed by a variable, a single terminal, or λ. We will use only right-linear grammars.

However, we may not mix the two forms of productions, i.e., we cannot have productions of both types (e.g., A → aB and A → Cb) in the same grammar. A grammar that is allowed to have both kinds of productions (more precisely, one in which every right-hand side contains at most one variable, in any position) is called a linear grammar, and the corresponding language is a linear language. There are linear languages that are not regular. (As we’ll see later, the set of all strings of the form aⁿbⁿ is a linear language, but not regular.) This gives us a containment property: every regular grammar is also a linear grammar, and the languages generated by regular grammars are a proper subset of those generated by linear grammars.
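A small example shows how mixing the two forms escapes the regular languages. The grammar S → aA | λ, A → Sb (our own illustration, not from the text) combines the right-linear rule S → aA with the left-linear rule A → Sb, and it generates exactly the strings aⁿbⁿ. The Python sketch below, with the hypothetical helper derive_anbn, lists the sentential forms of the derivation of aⁿbⁿ, rewriting one variable per step.

```python
def derive_anbn(n):
    """Return the sentential forms of the derivation of a^n b^n in the
    linear grammar S -> aA | λ, A -> Sb (uppercase letters are variables)."""
    form = "S"
    steps = [form]
    for _ in range(n):
        form = form.replace("S", "aA", 1)   # right-linear rule S -> aA
        steps.append(form)
        form = form.replace("A", "Sb", 1)   # left-linear rule A -> Sb
        steps.append(form)
    form = form.replace("S", "", 1)         # S -> λ ends the derivation
    steps.append(form)
    return steps

print(" => ".join(derive_anbn(2)))
```

For n = 2 this prints S => aA => aSb => aaAb => aaSbb => aabb. Each a is matched by a b added on the other side of the variable, which is exactly the bookkeeping a strictly right-linear grammar cannot do.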

We now show that right-linear grammars generate regular languages. We will follow the convention above that each production has at most one terminal symbol on the right-hand side and that the variable is at the extreme right. Let’s go back to a grammar we saw earlier: the grammar over {a, b} that generates all strings of odd length ending in b.

S → b

S → bA | aA

A → aS | bS
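Since every sentential form of a right-linear grammar is a terminal string followed by at most one variable, we can enumerate what the grammar derives by tracking (prefix, variable) pairs. Here is a minimal Python sketch applied to the grammar above; the dictionary encoding (each variable mapped to (terminals, next variable) pairs, with None meaning no variable) and the name generate_right_linear are our own assumptions.

```python
def generate_right_linear(rules, start, max_len):
    """All terminal strings of length <= max_len derivable from start.

    rules maps a variable to a list of (terminals, next_var) pairs, where
    next_var is None for a rule A -> x.  A sentential form w B is stored
    as the pair (w, B).
    """
    results = set()
    frontier = [("", start)]
    seen = set(frontier)
    while frontier:
        prefix, var = frontier.pop()
        for terms, nxt in rules.get(var, []):
            w = prefix + terms
            if len(w) > max_len:
                continue                     # prune: too long already
            if nxt is None:
                results.add(w)               # derivation has ended
            elif (w, nxt) not in seen:
                seen.add((w, nxt))
                frontier.append((w, nxt))
    return results

# S -> b | bA | aA,  A -> aS | bS
ODD_B = {"S": [("b", None), ("b", "A"), ("a", "A")],
         "A": [("a", "S"), ("b", "S")]}

words = sorted(generate_right_linear(ODD_B, "S", 5), key=lambda w: (len(w), w))
print(len(words), words[:5])   # 21 ['b', 'aab', 'abb', 'bab', 'bbb']
```

Up to length 5 the grammar yields b, the 4 strings of length 3 ending in b, and the 16 strings of length 5 ending in b, for 21 strings in all.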

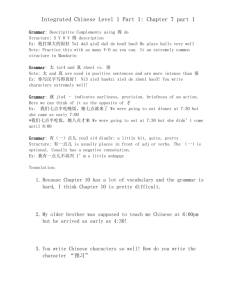

The automaton that can be produced from this grammar is shown in the figure. We’ll explain the final state labeled Z in a moment.

Here’s the algorithm to construct an NFA from a right-linear grammar (V, T, S, P):

Note: this discussion differs from that in the book.

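1. Create a state for each element of V in the grammar, plus an additional final state Z.

2. Let Σ = T.

3. The start state of the NFA is S.

4. The set of final states is F = {A | A → λ is in P} ∪ {Z}.

5. Defining the transition function requires two types of transitions:

   B ∈ δ(A, a) if A → aB is in P

   Z ∈ δ(A, a) if A → a is in P

Because M is nondeterministic, δ(A, a) is a set of states. For example, the rules S → bA and S → b above together give δ(S, b) = {A, Z}.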
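The five steps translate almost line for line into code. Below is a minimal Python sketch, assuming one-character symbols (lowercase terminals, uppercase variables), that Z is not already a variable, and that Σ can be taken to be the terminals occurring in P. The names grammar_to_nfa and nfa_accepts are hypothetical.

```python
def grammar_to_nfa(variables, start, productions):
    """NFA (Q, Σ, δ, q0, F) from a right-linear grammar in the convention above.

    productions: list of (A, body) with body "" (A -> λ), "a" (A -> a)
    or "aB" (A -> aB).  Assumes one-character symbols and that "Z" is unused.
    """
    Z = "Z"
    states = set(variables) | {Z}                                   # step 1
    sigma = {body[0] for _, body in productions if body}            # step 2 (terminals used in P)
    finals = {A for A, body in productions if body == ""} | {Z}     # step 4
    delta = {}                                                      # step 5
    for A, body in productions:
        if len(body) == 2:                   # A -> aB gives B ∈ δ(A, a)
            delta.setdefault((A, body[0]), set()).add(body[1])
        elif len(body) == 1:                 # A -> a gives Z ∈ δ(A, a)
            delta.setdefault((A, body), set()).add(Z)
    return states, sigma, delta, start, finals                      # step 3: start state S

def nfa_accepts(nfa, w):
    """Simulate the NFA, tracking the set of states reachable so far."""
    states, sigma, delta, start, finals = nfa
    current = {start}
    for c in w:
        current = set().union(*(delta.get((q, c), set()) for q in current))
    return bool(current & finals)

# The grammar for odd-length strings ending in b
P = [("S", "b"), ("S", "bA"), ("S", "aA"), ("A", "aS"), ("A", "bS")]
M = grammar_to_nfa({"S", "A"}, "S", P)
print([w for w in ["b", "ab", "aab", "abab", "babab"] if nfa_accepts(M, w)])
# ['b', 'aab', 'babab']
```

No λ-transitions arise in this construction, so the simulation needs no λ-closure: it just follows every possible move on each input symbol.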

Theorem 3.3: Let G = (V, T, S, P) be a right-linear grammar. Then L(G) is a regular language.

Proof sketch: by induction on the length of a derivation, using the grammar above and the NFA M constructed from it.

Basis: if S → λ, then S ∈ F.

If S → a, then Z ∈ δ(S, a), and since Z ∈ F we’re again O.K.

Hypothesis: If w ∈ L(G) and w can be derived in n or fewer steps, then w ∈ L(M); and if w ∈ L(M) and |w| ≤ n, then S derives w in n or fewer steps.

Induction: Suppose S derives w in n + 1 steps. Then w has the form aax, abx, bax or bbx, where a, b ∈ Σ and x ∈ Σ*. Without loss of generality we consider only the derivation of aax. It must proceed as follows, with the last stretch from aaS to aax taking n − 1 steps:

S ⇒ aA ⇒ aaS ⇒ⁿ⁻¹ aax

But by hypothesis, since S derives x in n − 1 steps, x ∈ L(M), i.e., there is a path through GM (the transition graph of M) labeled x from start state S to a final state. But δ*(S, aa) = δ(A, a) = {S}, so there is a path labeled w from S to a final state, and thus aax ∈ L(M).

Going the other way, suppose w ∈ L(M) and |w| = n + 1. Then, in GM, there is a path labeled w from start state S to a final state, and w must have one of four forms: aay, aby, bay or bby. Looking at aay, after two moves the machine must be in state S. This means there is a path from S to a final state labeled y, and by hypothesis S derives y. Then

S ⇒ aA ⇒ aaS ⇒* aay = w

is a derivation of w, so w ∈ L(G).

Right-linear grammars for regular languages

Let’s begin by looking at an example of obtaining a grammar that corresponds to an automaton we have seen before: the DFA to recognize strings of odd length ending in b.

A finite automaton has five parts (with their values for this DFA):

S – a finite set of states: {q0, q1, q2}
Σ – an alphabet or finite set of symbols: {a, b}
q0 – the start state: q0
F ⊆ S – the set of final or accepting states: {q2}
δ – the state transition function: the moves specified above

Recall that the grammar has four parts (with their values for the grammar we are building):

N – the set of variables: {A, B, C}
T – the set of terminals: {a, b}
P – the set of productions or rules: derived below
S – the start symbol: A

Letting A correspond to q0, B to q1, and C to q2 (as shown in red above) we have:

automaton move        grammar production
δ(q0, a) = q1         A → aB
δ(q0, b) = q2         A → bC
δ(q1, a) = q0         B → aA
δ(q1, b) = q0         B → bA
δ(q2, a) = q0         C → aA
δ(q2, b) = q0         C → bA

We can use a shorthand to write out the productions, namely:

A → aB | bC

B → aA | bA

C → aA | bA

Observe that we don’t yet have any way to terminate the process. Thus, we want to add productions that correspond to reading the last symbol and ending up in an accept state. In this particular case we want one more production:

A → b

since the move δ(q0, b) = q2 takes us to the final state q2 in the automaton. Alternatively, we could add a λ-production for each final state; in this example it would be C → λ.
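Reading productions off the move table is mechanical, so it is easy to sketch in code. The Python below is a minimal illustration under our own encoding (a dict from (state, symbol) to state, plus a dict giving each state's variable); dfa_to_grammar and shorthand are hypothetical names. It uses the first termination option: for each move into a final state it also adds the terminal-only rule, which is how A → b arises here.

```python
DELTA = {("q0", "a"): "q1", ("q0", "b"): "q2",
         ("q1", "a"): "q0", ("q1", "b"): "q0",
         ("q2", "a"): "q0", ("q2", "b"): "q0"}
NAMES = {"q0": "A", "q1": "B", "q2": "C"}

def dfa_to_grammar(delta, finals, names):
    """Map each DFA move to a production, grouped by left-hand variable."""
    rules = {}
    for (p, a), q in sorted(delta.items()):
        bodies = rules.setdefault(names[p], [])
        bodies.append(a + names[q])      # δ(p, a) = q  gives  [p] -> a[q]
        if q in finals:
            bodies.append(a)             # moving into a final state: [p] -> a
    return rules

def shorthand(rules):
    """Render rules in the A -> aB | bC notation."""
    return [f"{v} -> {' | '.join(bodies)}" for v, bodies in rules.items()]

for line in shorthand(dfa_to_grammar(DELTA, {"q2"}, NAMES)):
    print(line)
# A -> aB | bC | b
# B -> aA | bA
# C -> aA | bA
```

One caveat with this option: a rule [p] → a can never produce λ, so if the start state is itself final the empty string needs separate treatment. The λ-production option does not have this problem.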

We’re now ready to look at the following theorem:

Theorem 3.4 If L is a regular language on the alphabet Σ, then there exists a right-linear grammar G = (V, Σ, S, P) such that L = L(G).

Formally, let L be a regular language. Then there is a DFA M = (Q, Σ, δ, q0, F) such that L = L(M). Define a grammar G = (V, T, P, S) as follows:

Let V = Q. We’ll use the notation [qi] to emphasize that we’re looking at a grammar variable rather than a state in the automaton.

Let T = Σ, and let S = [q0].

The set of productions P is defined as follows:

If δ(p, a) = q, put in the production [p] → a[q].

Since acceptance in M means we end up in a final state, for every p ∈ F put in a production [p] → λ.

You can read the rest of the proof in the text.
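The construction, and the way a derivation in G shadows a run of M, can be sketched as follows. This is a minimal Python illustration under our own encoding; theorem_3_4_grammar and derive are hypothetical names. Because M is deterministic, G has at most one rule [p] → a[q] for each variable and symbol, so derive can follow the only possible derivation of w one symbol at a time.

```python
DELTA = {("q0", "a"): "q1", ("q0", "b"): "q2",
         ("q1", "a"): "q0", ("q1", "b"): "q0",
         ("q2", "a"): "q0", ("q2", "b"): "q0"}

def theorem_3_4_grammar(delta, q0, finals):
    """[p] -> a[q] for every δ(p, a) = q, plus [p] -> λ for every p in F."""
    productions = [(f"[{p}]", a + f"[{q}]") for (p, a), q in delta.items()]
    productions += [(f"[{p}]", "") for p in finals]
    return f"[{q0}]", productions

def derive(start, productions, w):
    """Sentential forms of a derivation of w, or None if w is not derivable.

    Valid only for grammars built from a DFA: each variable has at most one
    rule per terminal, so there is never a choice to make.
    """
    prefix, var = "", start
    forms = [start]
    for c in w:
        nxt = next((body[1:] for head, body in productions
                    if head == var and body[:1] == c), None)
        if nxt is None:
            return None
        prefix, var = prefix + c, nxt
        forms.append(prefix + var)
    if (var, "") not in productions:
        return None                    # M would stop in a non-final state
    forms.append(prefix)               # apply [p] -> λ
    return forms

S, P = theorem_3_4_grammar(DELTA, "q0", {"q2"})
print(" => ".join(derive(S, P, "aab")))   # [q0] => a[q1] => aa[q0] => aab[q2] => aab
print(derive(S, P, "ab"))                 # None
```

Each sentential form x[q] in the output corresponds to M being in state q after reading x, which is the key correspondence in the proof.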

Finally, we have Theorem 3.6: A language L is regular iff there exists a regular grammar G such that L = L(G).

Let’s look at another example of a machine-to-grammar construction. Let’s go back to the automaton for strings with an even number of a’s and an odd number of b’s:

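Let’s let S be EE, A be OE, B be OO and C be EO, where the first letter records whether the number of a’s read so far is even (E) or odd (O), and the second letter does the same for b’s. Then the grammar rules are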

S → aA | bC

A → aS | bB

B → aC | bA

C → aB | bS

We need one more production to terminate a derivation, C → λ, since C (EO) is the only final state.
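As a sanity check on this grammar, here is a minimal Python sketch (our own, with hypothetical names) that enumerates every string of length at most 6 derivable from S and compares the result with a brute-force list of the strings over {a, b} that have an even number of a’s and an odd number of b’s.

```python
from itertools import product

# S = EE, A = OE, B = OO, C = EO (parity of a's, then parity of b's)
RULES = {
    "S": ["aA", "bC"],
    "A": ["aS", "bB"],
    "B": ["aC", "bA"],
    "C": ["aB", "bS", ""],   # "" is C -> λ, which ends a derivation
}

def generated(max_len):
    """Terminal strings of length <= max_len derivable from S."""
    out, frontier = set(), [("", "S")]
    while frontier:
        prefix, var = frontier.pop()
        for body in RULES[var]:
            if body == "":
                out.add(prefix)
            elif len(prefix) < max_len:
                frontier.append((prefix + body[0], body[1]))
    return out

def expected(max_len):
    """Brute force: even number of a's and odd number of b's."""
    return {"".join(t)
            for n in range(max_len + 1)
            for t in product("ab", repeat=n)
            if t.count("a") % 2 == 0 and t.count("b") % 2 == 1}

print(generated(6) == expected(6), len(expected(6)))   # True 21
```

All 21 strings have odd length, as they must: an even count plus an odd count is odd.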

The theorems we have proven here allow us to describe a regular language in one of three ways, all of which are equivalent:

regular expression

automaton (DFA or NFA)

regular grammar