Does Objectification Affect Women's Willingness to Compete?

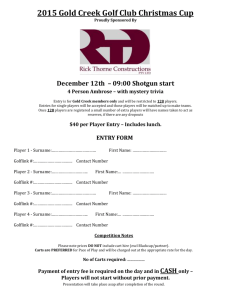